Daniel W. Surry

Adrian G. Grubb

David C. Ensminger

Jenelle Ouimette

Authors

Daniel W. Surry is an Associate Professor in the Instructional Design and Development program at the University of South Alabama. Correspondence regarding this article can be addressed to: dsurry@usouthal.edu

Adrian G. Grubb is a doctoral student in the Instructional Design and Development program at the University of South Alabama.

David C. Ensminger is a Clinical Assistant Professor in the School of Education at Loyola University Chicago.

Jenelle Ouimette is a doctoral student in the Instructional Design and Development program at the University of South Alabama.

Abstract

This paper describes the results of a survey to determine the factors that serve as barriers or enablers to the implementation of web-based learning in colleges of education. A total of 229 faculty members responded to the survey. Of these, 104 had never taught a web-based course while 125 had taught at least one online course. Results of the survey showed that Education faculty in this sample had an overall neutral position about the readiness of colleges of education to implement web-based learning. The survey found that financial resources, infrastructure, and support were seen as barriers to implementation while organizational culture, policies, a commitment to learning, and evaluation were seen as enablers to implementation. Open-ended responses showed that there were interesting differences based on the perceived lack of time and perceived lack of social interaction between faculty who have taught online and those who have not.

Résumé

Cet article décrit les résultats d’un sondage visant à déterminer les facteurs qui font obstacle ou qui contribuent à la mise en œuvre de l’apprentissage en ligne dans les établissements d’enseignement. Un total de 229 membres du corps professoral ont répondu au sondage. De ce nombre, 104 n’avaient jamais donné un cours en ligne tandis que 125 avaient enseigné au moins un cours en ligne. Les résultats du sondage ont montré que la faculté d’éducation de cet échantillon était globalement neutre au sujet de la volonté des établissements d’enseignement à mettre en œuvre l’apprentissage en ligne. Le sondage a révélé que les ressources financières, l’infrastructure et le soutien étaient considérés comme des facteurs obstacles à la mise en œuvre alors que la culture organisationnelle, les politiques, l’engagement envers l’apprentissage et l'évaluation étaient considérés comme des facteurs facilitants de la mise en œuvre. Les questions à réponses libres ont mis en lumière des différences intéressantes entre les professeurs qui avaient enseigné en ligne et ceux qui ne l’avaient pas fait relativement au manque de temps et d’interaction sociale perçu.

The use of web-based learning (WBL) has had a major impact on higher education. Many universities have begun to offer a large number of courses, or even entire degree programs, online. New institutions of higher education, some existing completely online or with only limited numbers of on-campus courses, have been established in the last decade. Colleges of education, like other colleges within the university, have begun to use web-based learning to deliver instruction to their students. The advantages of web-based learning for colleges of education are well documented. Web-based learning allows colleges to increase their enrolment by attracting students from outside their local geographic area, reduces the demand on facilities such as classrooms, parking, and computer labs, allows colleges to stay competitive in the changing educational marketplace (Inglis, 1999), and provides faculty and students with more flexible scheduling options.

While there can be no debate that web-based learning is making a major impact on colleges of education, or that WBL offers many potential advantages, the implementation of WBL within a college of education can be a challenging process. In order to facilitate the implementation of WBL in colleges of education, administrators and change agents must understand and address a wide array of issues. The issues to be addressed include technical and fiscal issues, and also issues related to faculty workloads, retention, tenure and promotion, shared governance, student expectations, academic quality, intellectual property, and organizational policies, among many others.

The purpose of this study was to explore the factors that may affect the implementation of web-based learning by faculty members at colleges of education in the United States. While several studies have focused on identifying the barriers to implementation (e.g., Al-Senaidi, Lin, & Poirot, 2009; Berge, Muilenburg, & Haneghan, 2002; Pajo & Wallace, 2001; Rogers, 2000), this study explored factors that served to enable implementation as well as those that acted as barriers. The identification of both barriers and enablers has become more prevalent in recent implementation research (e.g., Jasinski, 2006; Samarawickrema & Stacey, 2007) and provides for a more holistic understanding of the implementation process.

Studying the implementation of web-based learning in colleges of education is important for two main reasons. First, from a theoretical perspective, such research adds to the overall body of literature related to the implementation of innovations. The field of implementation research is still in a relatively early stage of development. While important research related to the adoption and diffusion of innovations began as early as the 1940s (Rogers, 2003), researchers did not begin to shift their focus from adoption to implementation until the late 1970s (Surry & Ely, 2007). Additional implementation research in all areas is needed to contribute to the evolving understanding of this important topic. Second, from a practical perspective, developing a better understanding of how Education faculty view the implementation of technological innovations such as web-based learning can inform the planning process for subsequent innovations. Research on implementation by Education faculty may help to facilitate the implementation of future innovations and could potentially result in significant savings of time and money.

Implementation research is one part of the broader research field related to the diffusion of innovations. The overall diffusion process can be characterized as having five stages: knowledge, persuasion, decision, implementation, and confirmation (Rogers, 2003). In the knowledge stage, potential adopters of an innovation seek out, or are provided with, information about the innovation. In the persuasion stage, potential adopters form opinions about an innovation based on the information they received and their interactions with peers, change agents, and others. In the decision stage, potential adopters decide to adopt or reject an innovation. If the innovation is adopted, it is introduced into an organization or social system and utilized in the implementation stage. The decision to adopt an innovation, such as web-based learning, is not the end of the process but merely the precipitating act of the implementation stage. It is during the implementation stage that the difficult tasks related to the actual introduction and utilization of an innovation take place. The final stage of the process, confirmation, is the point at which individuals, organizations, and social systems continue to use the innovation or discontinue its use. The confirmation stage is analogous to the concept of institutionalization in which an innovation is integrated into the daily practice of an organization until its use becomes routine (Surry & Ely, 2007).

The decision to adopt an innovation can be made rather easily. However, fostering the actual use, and eventual institutionalization, of an innovation can be an extremely difficult and frustrating process. A wide variety of variables interact to facilitate or impede the implementation of an innovation. These variables include organizational issues, social and cultural factors, technical and economic factors, and the characteristics of the individuals using the innovation. Ely (1990, 1999) developed a list of eight conditions that affect the implementation of an innovation. These conditions are dissatisfaction with the status quo, knowledge and skills, resources, time, rewards or incentives, participation, commitment, and leadership. These conditions have been shown to affect the implementation of both technological and non-technological innovations and to be applicable to implementation research within different types of institutions (Ensminger & Surry, 2002). Ely’s work has served as the theoretical basis for many implementation studies. Much of the research related to Ely’s conditions has been related to education, specifically innovations in K-12 school settings (e.g., Bauder, 1993; Ravitz, 1999; Read, 1994). Ensminger and Surry (2008) found that the eight implementation conditions were useful for the study of implementation in higher education and business and industry, as well as in K-12 settings.

There is a large and growing body of literature specific to the implementation of innovations in higher education. Much of the literature in this area has focused on the implementation of web-based innovations. Rogers (2000) found that higher education technology coordinators thought that funding, release time, training, technical support, and lack of knowledge were barriers to the adoption of technology at community colleges and state universities in her sample. She concluded that the barriers to technology adoption in education are interrelated and should be addressed in a holistic manner by technology planners. Pajo and Wallace (2001) found that the time to learn about, develop, and use new technologies was the primary barrier to the use of web-based learning by higher education faculty. Berge, Muilenburg, and Haneghan (2002) found faculty compensation and time, issues related to organizational change, and technical support to be the most important barriers to the use of distance learning technologies. In addition, they found statistically significant differences in the relative ranking of the barriers by respondents based on demographic variables including work setting, subject matter, and expertise with technology. Samarawickrema and Stacey (2007) identified a number of variables that influenced higher education faculty to adopt web-based learning. They found that institutional variables such as top down directives, political factors, pressure to increase enrollments, and funding were the most important reasons why faculty in their sample adopted web-based learning. Al-Senaidi et al. (2009) determined that faculty members in their sample viewed lack of time and lack of institutional support as the major barriers to the use of information and communications technology for teaching. They also found statistically significant differences in barrier ratings based on demographic variables including gender and academic rank.

Surry, Ensminger, and Haab (2005) drew on the theories of Rogers (2003) and Ely (1990, 1999), among others, to create a model for implementing innovations into higher education settings. Their model has seven components: resources, infrastructure, people, policies, learning, evaluation, and support. The model, known as RIPPLES, has been used to study implementation in higher education by several researchers. Romero and Sorden (2008) used the model as a framework to study the implementation of an online learning management system (LMS) at a university in Mexico. They concluded that infrastructure and support were the two most critical factors that facilitated the implementation process. They also found that policies were a barrier to implementation. Benson and Palaskas (2006) also used the model to study the implementation of a learning management system at a university. They found that issues related to the components of people, policies, learning, and evaluation were the highest institutional priorities needed to foster the effective implementation of the LMS at their university. They also concluded that the RIPPLES model was an effective tool for the post-adoption study of an innovation and could be used to guide organizational decision making in regard to implementation. Buchan and Swann (2007) also found the RIPPLES model to be useful in studying the implementation of an innovation in a higher education setting. The model has also been effectively applied to the study of implementation of innovations in preschool (Tan & Tan, 2004) and library (Moen & Murray, 2003) settings.

The two studies based on the RIPPLES model that are most relevant to the current study are Nyirongo’s (2009) investigation of barriers and enablers to the use of technology among higher education faculty and Jasinski’s (2006) study of the implementation of innovative practices in eLearning by vocational educators. Nyirongo used the components to study the factors that served as barriers or enablers to the implementation of electronic technologies at a university in Malawi. She found that resources, infrastructure, people (especially shared decision making), and support (especially administrative support) were the major barriers to implementation among faculty in her sample. She also found that while the adoption of information and communication technologies has been widespread at the university in her study, the application of those technologies to teaching has been weak.

Jasinski (2006) used the seven components of the RIPPLES model to study the implementation of web-based learning among vocational educators in Australia. Her study used an online survey and had a sample size of 260 respondents. She found that the organization’s focus on learning outcomes was a key enabler of implementation in her study. The organization’s technology infrastructure was identified as the key barrier to implementation. Support was also identified as a barrier. Interestingly, Jasinski identified specific aspects of each component of the model that acted as a barrier or as an enabler. For example, while infrastructure was found to be an overall barrier, the amount of available server space was identified as an enabler to implementation. This result points out the need to understand each of the model’s components in detail in order to have a comprehensive understanding of any implementation setting. Jasinski concluded that all seven components of the model were important to consider during the implementation process. She also wrote that the RIPPLES model was both “a model for implementation readiness” (p. 71) and a “viable and practical implementation model” (p. 4).

This review of the literature informed the present study in five important ways. First, the review showed that the study of implementation in higher education is an important and growing area of research with numerous unanswered questions and rich opportunities for continued investigation. Second, the review showed that the perspectives and opinions of faculty play an essential role in the implementation of any innovation, especially instructional innovations, in higher education. Third, the review highlighted differences between faculty members’ views of implementation based on demographic variables such as age, gender, and subject matter. This research appears to confirm that higher education faculty should not be seen as a homogeneous group when planning for the implementation of an innovation. Fourth, the review revealed that the focus of implementation research is gradually moving from the determination of barriers to implementation to a broader focus that includes both barriers and enablers to innovation. Finally, the review confirmed that the RIPPLES model (Surry et al., 2005) offers a valid theoretical framework to use in investigating the research questions for this study.

Data were collected in this study using a web-based survey. An email message containing information about the study and a link to the survey was sent to the dean or other contact person at a random sample of colleges of education in the United States. Quantitative data were analyzed using both descriptive and inferential statistics. Qualitative data, in the form of responses to open-ended survey questions, were analyzed using a constant comparative analysis approach. Prior to data collection, the study was reviewed and approved by the Institutional Review Board at our university to ensure human subjects research compliance. More detailed information about each phase of the data collection and analysis process is included in the remainder of this section.

The survey used in this study contained a total of 52 items including demographic questions, multiple-choice questions, and open-ended questions. The survey was the same survey used in the Jasinski (2006) study with three differences. The first difference was that the wording of the survey was modified to reflect differences in the sample. Because Jasinski’s study sampled vocational educators in Australia, the instructions and item stems in the survey had to be altered to make them usable for Education faculty in the United States. A second change was that Jasinski’s study only sampled adopters while this study sampled both adopters and non-adopters. Non-adopters in this study (i.e., faculty who had never taught a web-based course) were branched to a different set of questions than adopters. This was done because of the belief that non-adopters of web-based learning would not have sufficient knowledge or experience to answer questions related to the implementation of web-based courses. The third difference was in the way the survey data were analyzed. Jasinski reported the results of individual survey items and conducted analyses of variance using individual item scores as the dependent variable. For this study, a summated scale using the seven items on the survey that most closely related to the overall construct of an organization’s implementation readiness was developed and used.

Using Jasinski’s (2006) data, a factor analysis was conducted and it was determined that seven items on the survey loaded onto one factor. Each of those seven items represented one of the seven components of the RIPPLES model (i.e., resources, infrastructure, people, policies, learning, evaluation, support). The seven items were then used to develop a summated score measuring each respondent’s perception of their organization’s overall implementation readiness. Because a seven-point response scale was used for the survey items, the summated scale for the seven survey items ranged from 7 to 49. It is this summated scale that is referred to as the Implementation Readiness Scale (IRS). A higher score on the IRS indicates that a respondent believes their organization is more prepared to implement an innovation. The mean IRS for participants in the Jasinski study was 26.1 with a standard deviation of 8.95. Because the IRS score was slightly below 28, the midpoint of the scale, it can be hypothesized that the sample in Jasinski’s study had a moderately negative view of their organization’s readiness for implementation. However, more research is needed to confirm the relationship between IRS scores and overall views of implementation readiness. After an IRS score was developed for each participant in the Jasinski study, Cronbach's alpha was used to measure the internal consistency of the scale. The IRS showed an alpha of 0.896, which we considered to be sufficiently strong to warrant the use of the IRS in the present study.
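The construction of the summated scale described above can be illustrated with a minimal sketch. The item names, the `irs_score` function, and the example ratings are hypothetical and are not taken from the survey instrument or the study data; the sketch only shows how seven ratings on a seven-point scale yield a score between 7 and 49 with a midpoint of 28.

```python
# Hypothetical sketch: item names and ratings are illustrative, not study data.
RIPPLES_ITEMS = ("resources", "infrastructure", "people", "policies",
                 "learning", "evaluation", "support")
SCALE_MIN, SCALE_MAX = 1, 7  # seven-point response scale


def irs_score(responses):
    """Sum the seven component ratings into one summated readiness score."""
    missing = [item for item in RIPPLES_ITEMS if item not in responses]
    if missing:
        raise ValueError(f"missing items: {missing}")
    for item in RIPPLES_ITEMS:
        if not SCALE_MIN <= responses[item] <= SCALE_MAX:
            raise ValueError(f"{item} is outside the 1-7 response scale")
    return sum(responses[item] for item in RIPPLES_ITEMS)


# Range and midpoint of the summated scale follow from the item scale.
IRS_MIN = SCALE_MIN * len(RIPPLES_ITEMS)   # 7
IRS_MAX = SCALE_MAX * len(RIPPLES_ITEMS)   # 49
IRS_MIDPOINT = (IRS_MIN + IRS_MAX) / 2     # 28.0

# One made-up respondent whose ratings happen to sum to the midpoint.
example = {"resources": 3, "infrastructure": 4, "people": 4, "policies": 5,
           "learning": 4, "evaluation": 5, "support": 3}
print(irs_score(example), IRS_MIDPOINT)
```

Under these assumptions, a respondent scoring above 28 would be read as leaning toward a favourable view of readiness and one scoring below 28 as leaning unfavourable, subject to the caveat stated above that this interpretation needs further confirmation.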

The population for this study comprised faculty members at colleges of education in the United States. The population included both faculty who had taught a web-based course and those who had not. To obtain a sample, a list of all colleges of education that were accredited by the National Council for Accreditation of Teacher Education (NCATE) was identified. This resulted in a list of approximately 660 institutions. A random number generator was used to select 15% of the colleges (n=100). The dean or other appropriate contact person was identified at each of the selected colleges. Email messages were sent to each contact person. The emails contained information about the study and a link to the web-based survey. In the email, the contact person was asked to forward information about the study to faculty members in their college. One week after sending out the initial set of email messages, the researchers had received approximately 75 responses. Because this was not considered a large sample, another 100 colleges were randomly selected from the NCATE list and the process of sending out email messages to the appropriate contact person was repeated. The second email campaign generated another 70 responses. Another 120 colleges were randomly selected and the sampling procedure was repeated. Email messages were sent to contact persons at a total of 320 of the 660 NCATE-accredited colleges of education (48.5%) over a five-week period, which resulted in a total of 236 faculty responses to the survey.
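The three-wave selection procedure can be sketched as follows. This is an illustration only: the college identifiers and the seed are invented, and the sketch assumes that each later wave was drawn from colleges not already contacted, which the reported total of 320 distinct colleges suggests but the text does not state explicitly.

```python
import random

# Hypothetical stand-in for the NCATE list of approximately 660 institutions.
colleges = [f"college_{i:03d}" for i in range(1, 661)]

rng = random.Random(2010)  # arbitrary seed, used only to make the sketch repeatable
remaining = list(colleges)
waves = []
for wave_size in (100, 100, 120):  # sizes of the three mailing waves
    wave = rng.sample(remaining, wave_size)  # simple random draw without replacement
    waves.append(wave)
    chosen = set(wave)
    # Assumption: colleges already contacted are excluded from later waves.
    remaining = [c for c in remaining if c not in chosen]

contacted = sum(len(w) for w in waves)
share = 100 * contacted / len(colleges)
print(contacted, round(share, 1))
```

With a list of exactly 660 colleges, the three waves cover 320 institutions, or about 48.5% of the list, matching the proportion reported above.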

A total of 236 faculty members responded to the survey. Because respondents were not asked to identify which college of education they were associated with, it was impossible to determine how many different colleges were represented in the sample. Respondents stated they taught a wide variety of subject matter areas with elementary education (n=53) being the most commonly stated area. Of the 236 total respondents, 132 reported that they had taught at least one web-based course and 104 reported that they had never taught a web-based course. Seven respondents from the group that had taught a web-based course did not answer a minimum number of questions on the survey and were excluded from the sample. This left 125 respondents in the group that had taught a web-based course.

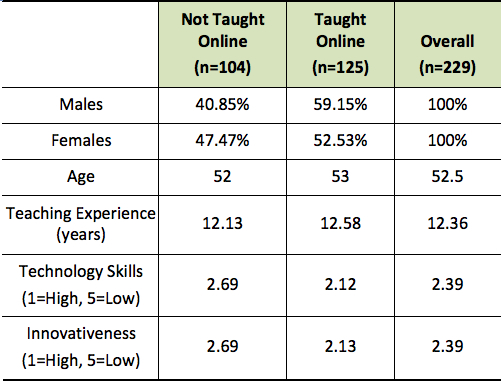

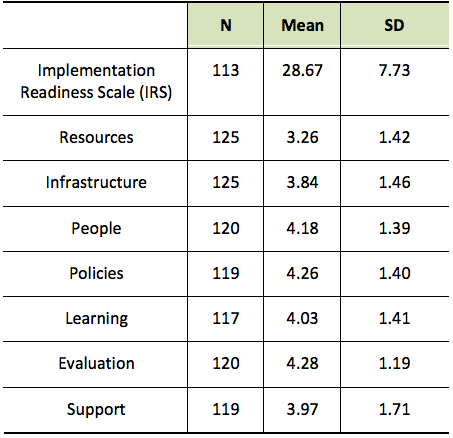

As shown in Table 1, 59% of the male respondents and 52.5% of the female respondents had taught at least one web-based course. The average age for the respondents who had taught a web-based course was 53 years while the average age for those who had not was 52 years. The two groups had approximately the same number of years teaching experience in higher education. Respondents who had taught at least one web-based course identified themselves as having higher technology skills and as being more innovative than respondents who had never taught a web-based course.

Table 1: Demographic information for the overall sample and two sub-groups

The results of the study are presented in this section. A description of the participants of the study is followed by a presentation of results related to each of the three research questions. A brief description of the results of exploratory analyses is provided to examine data not directly related to the research questions. In the final part of the section, the qualitative results of the study are presented.

Research Question One: How do faculty at colleges of education in the United States rate their college’s overall readiness, and the components of readiness, to implement web-based learning?

To investigate this research question, data from respondents who had taught at least one web-based course was used. Respondents who had not taught at least one web-based course were branched to a different section of the survey and did not respond to questions related to their college’s readiness to implement WBL. This choice was made because respondents who had not taught at least one web-based course were considered to be non-adopters of WBL and, as such, it was assumed that these faculty members were in the knowledge, persuasion, or decision stage (Rogers, 2003). Respondents who had taught at least one web-based course were assumed to be in the implementation or confirmation stage of the innovation process and, as such, may offer valid insights into the implementation process at their college.

To determine the ratings for overall readiness, an Implementation Readiness Scale (IRS) score for each respondent was developed using a process identical to the process used to calculate an IRS score for the Jasinski (2006) sample. As with the Jasinski study, a factor analysis determined that the seven items related to implementation readiness all loaded on the same factor for this sample. After an IRS score was developed for each participant in the sample, and it was determined that each item loaded onto the same factor, Cronbach's alpha was used to measure the internal consistency of the scale. The IRS showed an alpha of 0.848. This was slightly lower than the alpha for Jasinski’s sample (0.896), but still represented a strong level of internal consistency.
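For readers unfamiliar with the internal consistency measure reported here, the following is a minimal sketch of the standard Cronbach's alpha formula, alpha = k/(k-1) × (1 − sum of item variances / variance of the summed score). The function name and the ratings are hypothetical; they are not the study data and will not reproduce the reported values of 0.848 or 0.896.

```python
from statistics import variance


def cronbach_alpha(rows):
    """rows: one list of item scores per respondent, all of the same length."""
    k = len(rows[0])
    if k < 2 or any(len(r) != k for r in rows):
        raise ValueError("need at least two items and equal-length rows")
    # Sample variance of each item across respondents.
    item_vars = [variance([r[j] for r in rows]) for j in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Hypothetical ratings for seven items on a 1-7 scale (not study data).
ratings = [
    [3, 4, 4, 5, 4, 5, 3],
    [2, 3, 3, 4, 3, 4, 2],
    [5, 5, 6, 6, 5, 6, 5],
    [4, 4, 5, 4, 4, 5, 4],
    [1, 2, 2, 3, 2, 3, 2],
]
alpha = cronbach_alpha(ratings)
print(round(alpha, 3))
```

Values closer to 1 indicate that the items vary together across respondents, which is the sense in which the alphas reported for the two samples are described as strong.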

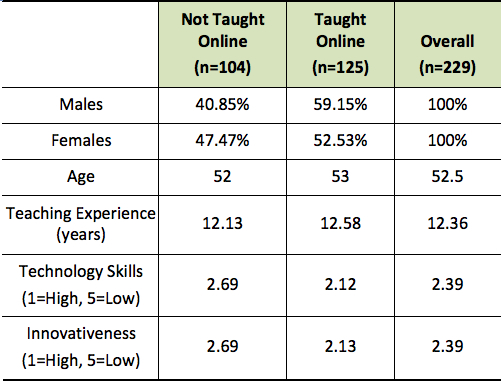

As shown in Table 2, the mean IRS score for this sample was 28.67 with a standard deviation of 7.73. Because 28 is the midpoint of the scale, it can be hypothesized that the higher education faculty members in this sample had an overall neutral view of their organization’s readiness to implement web-based learning. Table 2 also shows the mean score for each of the seven components of the RIPPLES model. The components were measured on a seven-point scale with 1 representing a major barrier to implementation and 7 representing a major enabler of implementation. The component with the lowest mean score was resources (3.26). This suggests that faculty members in this sample viewed the financial resources of their college of education, and the way those resources are allocated, to be the main barrier to the implementation of web-based learning. The components with the highest mean scores were policies (4.26) and evaluation (4.28). This suggests that faculty members in this sample viewed the policies of their college of education and their college’s ongoing process of evaluation to be the main enablers for the implementation of web-based learning.

Table 2: Mean and standard deviation for each component of the IRS for respondents who have taught at least one web-based course

Research Question Two: Are there differences in faculty ratings of their college’s overall readiness to implement web-based learning based on demographic variables such as age, gender, and technological skill level?

To answer this question, a series of analyses of variance were conducted using each of the demographic variables identified on the survey as the independent variable and the overall IRS score as the dependent variable. This resulted in two statistically significant findings. First, an analysis of variance revealed a statistically significant difference, F(1,110) = 4.07, p < .05, in IRS scores based on gender. The mean IRS score for females (n=71) was 29.65 while the mean IRS score for males (n=41) was 26.66. This shows that female faculty in this sample had an overall more favourable view of their college’s readiness to implement web-based learning than did the male faculty in this sample.

Second, an analysis of variance revealed a statistically significant difference, F(3,109) = 5.31, p < .01, in IRS score based on the type of university where the respondents worked. Respondents who worked at traditional universities with limited online courses (n=44) had a mean IRS score of 31.79. Respondents who worked at traditional universities with many online courses (n=45) had a mean IRS score of 26.87. Respondents who worked at hybrid universities with a mixture of traditional and online courses (n=22) had a mean IRS score of 25.59. This finding seems to suggest that faculty at colleges that teach fewer online courses have an overall more favourable view of their college’s readiness to implement web-based learning than do faculty at colleges that teach more online courses.
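The F statistics reported for this research question come from one-way analyses of variance. As a rough illustration of how such a statistic and its degrees of freedom arise, the sketch below computes F from raw group scores. The function name and the two small groups of scores are hypothetical; the study's raw data are not available here, so the reported F values are not reproduced.

```python
from statistics import mean


def one_way_anova(groups):
    """Return (F, df_between, df_within) for a list of groups of scores."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean([x for g in groups for x in g])
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual scores around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical IRS scores for two groups (not study data).
group_a = [30, 28, 33, 27, 32]
group_b = [25, 27, 24, 29, 25]
f_stat, df_b, df_w = one_way_anova([group_a, group_b])
print(round(f_stat, 3), df_b, df_w)

# Degrees of freedom for the gender comparison: 2 groups, 71 + 41 respondents.
gender_df = (2 - 1, 71 + 41 - 2)
print(gender_df)
```

The last two lines show that two groups with 71 and 41 respondents give degrees of freedom of 1 and 110, consistent with the F(1,110) reported for the gender comparison.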

Research Question Three: Are there differences in faculty ratings of barriers and enablers based on demographic variables such as age, gender, and technological skill level?

To answer this question, a series of analyses of variance were conducted using each of the demographic variables identified on the survey as the independent variable and each of the seven components of the RIPPLES model as the dependent variable. This resulted in five statistically significant findings. First, an analysis of variance revealed a statistically significant difference, F(5,113) = 3.73, p < .01, in ratings of evaluation based on teaching experience in higher education. While there was a statistically significant difference, the results are somewhat mixed. Faculty members who had taught in higher education for 11-15 years (n=16) had the highest mean score for evaluation (5.13), meaning that group viewed evaluation as a strong enabler to the implementation of WBL. However, both faculty members who had taught 6-10 years (n=33) and those who had taught 16-20 years (n=11) viewed evaluation as less of an enabler. More research is needed into the relationship between years of teaching experience and the component of evaluation.

A second statistically significant difference was found based on a respondent’s age group and the component of people. Because the mean year of birth for respondents in this sample was 1957, all respondents were grouped into those born in or before 1957 or those born after 1957. An analysis of variance revealed a statistically significant difference, F(1,98) = 4.28, p < .05, between those born after 1957 (n=39) and those born in or before 1957 (n=61) based on the component of people. Those in the younger group had a mean score for the people component of 4.49 while those in the older group had a mean score of 3.90. This shows that respondents born after 1957 appeared to regard the organizational culture, communication channels, and amount of shared decision making in their college as being an enabler to the implementation of web-based learning while those born in or before 1957 saw those as being a barrier to implementation. An analysis of variance also revealed a statistically significant difference, F(1,98) = 5.46, p < .05, between the older and younger groups based on the policies of their college of education. The younger group viewed policies as an enabler to implementation of WBL while the older group viewed the policies of their college as having a neutral effect on implementation. These two findings suggest that the age of the faculty members within a college of education may be an important factor to consider when implementing web-based learning. More research is needed to explore further the implications of these two findings.

Two other statistically significant differences were found based on a faculty member’s perception of the quality of the web-based courses they taught and on the type of university where the faculty member worked. Faculty members who perceived their web-based courses to be of very high quality viewed their college’s technology infrastructure as more of a barrier to implementation than did faculty members who perceived their web-based courses to be of high quality. This could suggest that faculty members who desire to teach high quality web-based courses feel held back by the technology infrastructure of a college, while the infrastructure is seen as less of an issue for faculty who are less concerned with teaching high quality web-based courses. The researchers did not attempt to define or quantify the term “quality” in the instrument used in this study. As a result, respondents likely used widely differing and highly subjective criteria for rating a course as a “very high quality” course or as a “high quality” course. In the second statistically significant difference, respondents to the survey who taught at colleges with limited online courses viewed the component of support as being an enabler to implementation while those at colleges that taught more online courses viewed support as a barrier to implementation. This finding could suggest that support comes to be seen as more critical to the implementation of web-based learning as the number of web-based courses increases. More research is needed to explore these two findings further.

After analyzing data related to the three primary research questions, a series of statistical analyses were conducted to identify other important findings. During this exploratory analysis phase, numerous statistically significant results were found to be related to demographic variables included on the survey. A full discussion of each of the statistically significant results is beyond the scope of the present paper. Several findings that are especially interesting will be discussed briefly in the following section.

The first interesting finding of the exploratory analysis is that there was a significant correlation, r = 0.743, n = 231, p < .01, between a respondent’s self-reported level of technology skills and their perceived innovativeness. Respondents with higher levels of technology skill saw themselves as being more innovative than those with lower levels of technology skill. A second interesting finding was that there was a statistically significant difference in respondents’ self-reported level of technology skills based on gender, F(1,229) = 5.85, p < .05. Males in this sample perceived themselves as having higher technology skills than females. Somewhat surprisingly, considering the strong correlation that was found between technology skills and perceived innovativeness, no significant difference was found between the levels of perceived innovativeness based on gender. However, a statistically significant difference in perceived innovativeness was found between people who have taught at least one web-based course and those who have not taught online, F(1,227) = 22.78, p < .01. Respondents who have taught at least one web-based course perceived themselves to be significantly more innovative than did respondents who have never taught a web-based course. A statistically significant difference in perceived innovativeness based on age group, F(1,187) = 5.24, p < .05, was also found. Respondents in the older group, those born in or before 1957, perceived themselves to be significantly less innovative than those in the younger group, those born after 1957.

Two other findings from the exploratory analysis, both related to self-reported levels of technology skills, were especially interesting. First, an analysis of variance revealed a statistically significant difference, F(1,228) = 28.97, p < .01, between the self-reported technology skill level of respondents who had taught at least one web-based course and those who had not. Those who had taught a web-based course reported significantly higher technology skills than respondents who had not taught a web-based course. Second, an analysis of variance revealed a statistically significant difference, F(1,188) = 7.41, p < .01, in self-reported technology skill level based on age group. Respondents in the younger group, those born after 1957, reported significantly higher technology skills than respondents who were born in or before 1957. This finding was not surprising considering the strong correlation found between self-reported technology skill level and perceived innovativeness and the fact that the younger group also reported higher levels of perceived innovativeness.

After the data related to the study’s three research questions was analyzed and a series of exploratory analyses were conducted, the research team analyzed the qualitative data collected during the study. Three open-ended questions were answered both by respondents who had taught at least one web-based course and by those who had not.

To analyze the responses to the open-ended questions, a constant comparative analysis approach was employed (Johnson & Christensen, 2004). The team began with an open coding process. For each of the three open-ended questions, two of the authors independently analyzed the responses and assigned open codes to each response. Following this, each of the two authors independently developed response categories for each question. Next, the two authors discussed the codes and categories they had each developed. This discussion resulted in the creation of a final set of categories. The two authors then independently went through all the responses to each question and assigned categories to each response using a selective coding process with the final set of categories. After this, the two authors met a final time to discuss each response and to reach a final agreement about how each response would be categorized.
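Once every response carries an agreed final category, comparing the two respondent groups comes down to tallying categories within each group and ordering them by frequency. The sketch below illustrates one way such a tally might be done. The group labels, category labels, and the function name `rank_categories` are hypothetical, the coded responses are invented for illustration, and the paper does not describe the tooling the authors actually used.

```python
from collections import Counter

# Hypothetical (respondent group, final category) pairs, NOT study data
coded_responses = [
    ("never_taught", "time"),
    ("never_taught", "time"),
    ("never_taught", "training"),
    ("never_taught", "time"),
    ("never_taught", "training"),
    ("never_taught", "social interaction"),
    ("taught", "training"),
    ("taught", "training"),
    ("taught", "training"),
    ("taught", "time"),
    ("taught", "time"),
    ("taught", "support"),
]


def rank_categories(pairs):
    """Return, for each group, its categories ordered from most to least common."""
    counts = {}
    for group, category in pairs:
        counts.setdefault(group, Counter())[category] += 1
    return {
        group: [category for category, _ in counter.most_common()]
        for group, counter in counts.items()
    }


rankings = rank_categories(coded_responses)
for group, ordered in rankings.items():
    print(group, ordered)
```

Ranking within each group separately, rather than across the pooled responses, is what allows a statement such as "third most common for one group but seventh for the other" to be made.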

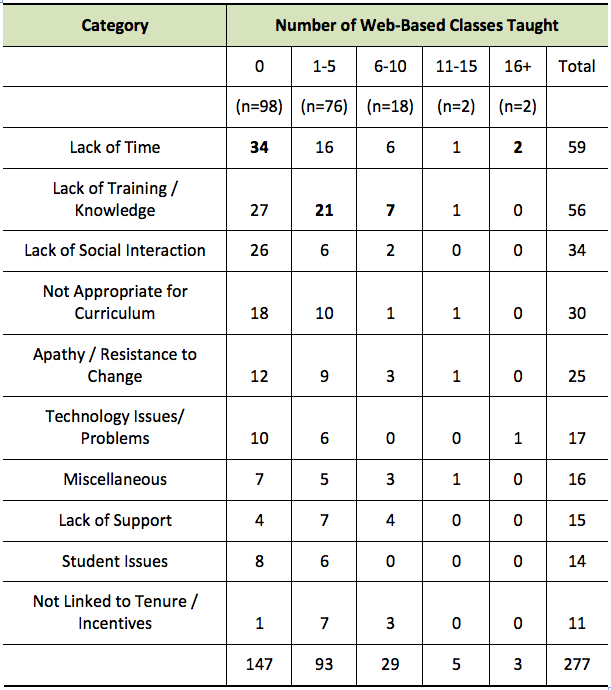

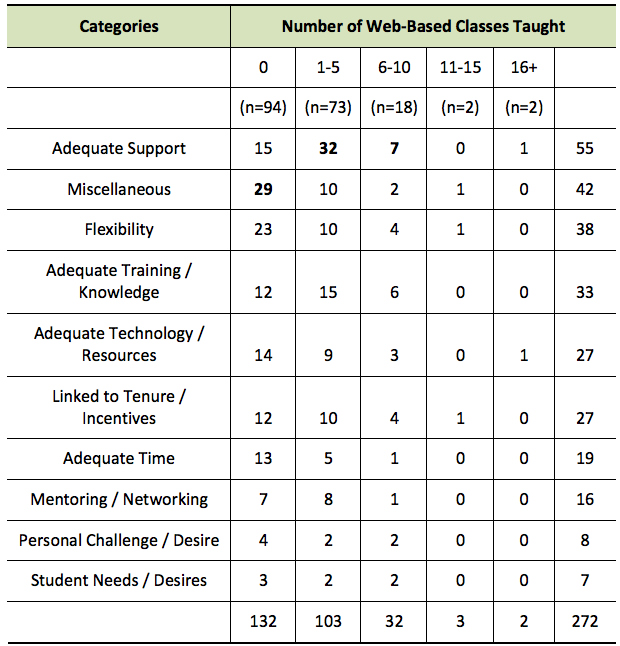

The first open-ended question asked respondents to list the two biggest factors that prevented college faculty from teaching web-based courses. As shown in Table 3, lack of time and lack of training or knowledge were the two most commonly listed factors. Interestingly, lack of time was the most commonly listed factor for respondents who had never taught a web-based course but lack of time was not the most commonly listed factor for respondents who had taught between one and 10 web-based courses. Also, a lack of social interaction was the third most commonly listed factor for respondents who had never taught a web-based course, but only the seventh most commonly listed factor for those who have taught between one and five web-based courses. These findings suggest that there are important differences in the perceptions of barriers to web-based learning between those who have taught a web-based course and those who have not.

Table 3: In your opinion, what are the two biggest factors that prevent (or discourage) college faculty from teaching totally web-based courses?

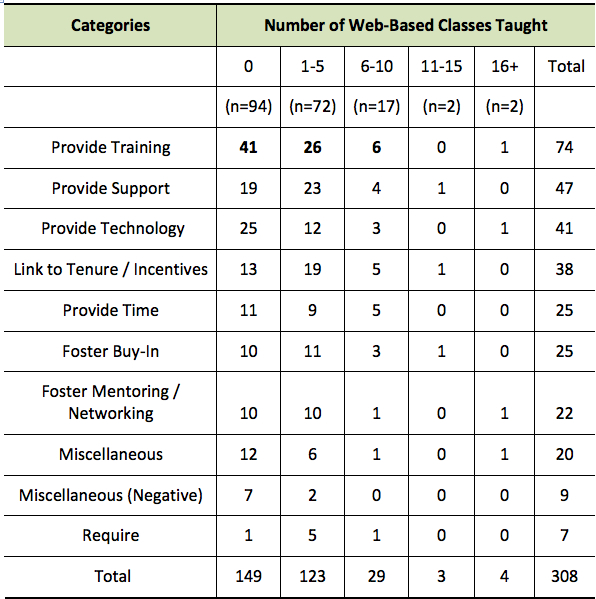

The second open-ended question asked respondents to list the two biggest factors that made it easier for college faculty to teach web-based courses. As shown in Table 4, adequate support was the most commonly listed factor for respondents who had taught at least one web-based course. However, support was only the third most commonly listed factor for respondents who had never taught a web-based course. The most commonly listed factor for the respondents who had never taught a web-based course was flexibility. Interestingly, flexibility was the third most commonly listed factor for respondents who had taught at least one web-based course. These findings suggest that support is a crucial factor in encouraging faculty to teach web-based courses. They also suggest that there are important differences in perceptions of the flexibility of web-based learning between those who have taught a web-based course and those who have not.

Table 4: In your opinion, what are the two biggest factors that make it easier (or more likely) for college faculty to teach totally web-based courses?

The third open-ended question invited respondents to list the two things they would do if they were responsible for fostering the use of web-based learning in their college of education. As shown in Table 5, both respondents who have taught a web-based course and those who have not included training as the most commonly listed response. Support, technology, and issues related to tenure and promotion were among the top four most commonly listed responses for both groups. Unlike responses to the other two open-ended questions, there appears to be general agreement between respondents who have taught online and those who have not in regard to how best to foster the implementation of web-based learning.

Table 5: If you were in charge of fostering the use of web-based learning by faculty in your college or school, and you had unlimited resources, what would be the two main things you would do?

The analysis of the quantitative data collected for this study resulted in a number of important findings, the most important of which is that faculty participants from colleges of education in the United States have a generally neutral view of their college’s readiness to implement web-based learning. The mean score on the Implementation Readiness Scale for this sample was 28.67. This result is near the midpoint of the scale and seems to suggest that, in general, colleges of education are fairly well positioned to implement WBL but that they could improve their readiness. Results of this study also suggest that financial resources are seen by faculty as the primary barrier to the implementation of WBL. “Resources” refers to the amount of financial resources within a college, the financial resources specifically devoted to WBL, and to the way those resources are allocated. The technological infrastructure of a college was identified by faculty participants as the second most important barrier to the implementation of WBL in colleges of education. These results suggest that in spite of the importance of other factors, money and technology are seen as the biggest barriers to the use of web-based learning by Education faculty. The central role that resources and infrastructure play in the implementation process for these faculty members echoes previous research on this topic (e.g., Jasinski, 2006; Nyirongo, 2009; Romero & Sorden, 2008). More research is needed to determine the specific ways that financial resources affect implementation and the specific elements of a technology infrastructure that are seen as most crucial to implementing web-based learning. It should be noted that the mean IRS score in this study was higher than that found in Jasinski’s study. This tends to support the idea that the readiness scale does identify differences between groups and could be useful in studying different populations who are implementing a similar innovation.

The analysis of survey data also showed important differences in the readiness scores between male and female faculty members. Female respondents in this study had overall higher perceptions of their college’s readiness to implement WBL than did male respondents. The study also found that males in this sample had higher self-reported levels of technology skills than females. Significant differences based on gender were also found among faculty in the Al-Senaidi et al. (2009) study of the implementation of technology in higher education. These findings suggest that gender may be an important variable to consider when planning for implementation in higher education. More research is needed to explore reasons why female faculty members expressed overall higher perceptions of organizational readiness to implement innovations, why male faculty members self-report higher technology skills, and if there are actual differences in technology skills between male and female faculty members. Understanding what role, if any, gender-based differences in technology skills and perceptions of organizational implementation readiness play in the implementation process could allow administrators to develop more informed implementation, support, and professional development plans and significantly facilitate the utilization of innovations in higher education.

The analysis of the qualitative data collected for this study also resulted in several important findings, of which three are considered to be most important. First, there were differences in the perception of time as a barrier to implementation by faculty who had taught a web-based course and those who had not. Faculty who have never taught a web-based course listed time as a key barrier to implementation more often than faculty who have taught a web-based course. The view that time is a barrier to implementation of WBL is consistent with previous studies on the topic (e.g., Al-Senaidi et al., 2009; Berge et al., 2002; Pajo & Wallace, 2001). The second important qualitative finding is that faculty who have never taught a web-based course more commonly listed flexibility as an enabler of implementation than did faculty who have taught a web-based course. This may suggest that flexibility is less of an advantage of WBL in actual practice than previously thought. Additional research to determine whether flexibility is an actual advantage for faculty teaching web-based courses is needed. The third important finding from the qualitative data is that training was the most commonly listed suggestion for fostering the implementation of WBL for both faculty who have taught a web-based course and those who have not.

There were two main limitations to this study, both of which are related to the study’s sample. The first main limitation is sample size. The 229 faculty responses obtained from the 330 colleges and universities included in the sampling process constitute a very small sample given the large number of faculty members at colleges of education in the United States. The second main limitation was sample composition. Because the sampling process relied on a contact person at each college to forward information about the study to their faculty, it is possible that colleges with positive experiences with WBL or those with collegial relationships between administration and faculty are over-represented in the sample. It is also possible that only those faculty with strong viewpoints about web-based learning, or those with stronger than average technology skills, responded to the web-based survey. Finally, because the sample was drawn from NCATE accredited colleges, faculty at other colleges are not represented. Both of the main limitations related to the sample point out some of the difficulty inherent in conducting online, survey-based research. Subsequent research in this area will either have to employ methods other than survey research or will have to identify effective strategies for encouraging higher response rates to online surveys.

Based upon the results of this study, four recommendations have emerged for administrators and others who are planning to implement web-based learning in colleges of education. First, it is critical for administrators to understand the implementation of WBL from the unique perspective of their faculty. Implementation is a highly contextualized and localized process. This study and those cited in the literature review all pointed out that there are significant differences in perspectives about implementation among faculty based on a wide variety of demographic variables. It is likely that every college of education will represent a unique implementation challenge. Administrators and change agents should resist using generic strategies to foster implementation and try to tailor their implementation plans to their unique situation. Second, it is important for administrators to not underestimate or minimize the importance of financial resources and technology infrastructure to the implementation process. There may be a tendency to overemphasize the importance of “soft” factors such as flexibility of scheduling or pedagogical issues during the implementation of WBL. While those factors are no doubt important, the findings of this study strongly suggest that having sufficient financial resources, allocating those resources wisely, and establishing a strong technology infrastructure are the most important enablers for the implementation of web-based learning. A third recommendation is to provide effective support and training to faculty throughout the implementation process.

The findings of this study suggest that support should increase as the number of web-based courses taught increases. Therefore, administrators should plan to increase the amount of support, not decrease it, over time. Faculty who have taught web-based courses, and those who have not, both listed training as an important step in fostering the implementation of WBL. It may be necessary to offer different types of training for faculty in different stages of the innovation process. For example, faculty in the persuasion stage may require training that emphasizes the practical benefits of WBL while those in the confirmation stage may require training that focuses on ways to make WBL more routine and efficient. A fourth recommendation is to pair experienced users of web-based learning with inexperienced users. This study found important differences in the perceptions about WBL between faculty who have taught online and those who have not. Pairing inexperienced and experienced users of WBL could help to facilitate implementation by dispelling unfounded negative perceptions of WBL, preparing new users for unanticipated problems and opportunities, and enhancing training and support through a peer tutoring model.

Online technologies are changing and challenging higher education. Colleges of education are facing the challenge of using technology effectively while maintaining a commitment to academic quality. Web-based learning offers colleges an important tool for reaching new students, interacting with current students in new ways, reducing costs, and making better use of limited resources. In order for web-based learning to be used most effectively in higher education, faculty must assume leadership roles in its design, use, and evolution. Plans for implementation of web-based learning in higher education that ignore the central role faculty play in the implementation process are doomed to failure. Administrators must be proactive in their efforts to understand the implementation of WBL from the faculty member’s perspective and ensure faculty concerns are addressed in a meaningful way. If administrators understand and account for the factors that faculty believe serve as barriers or enablers to implementation, web-based learning can benefit colleges of education, faculty, and students. Based on the findings of this study, administrators should focus on understanding and accounting for faculty concerns related to financial resources and technological infrastructure and issues related to support and training in order to facilitate the implementation of web-based learning. The results of this study also suggest that linking web-based learning to retention, tenure, and promotion would help to facilitate implementation.

Al-Senaidi, S., Lin, L., & Poirot, J. (2009). Barriers to adopting technology for teaching and learning in Oman. Computers & Education, 53, 575-590.

Bauder, D. Y. (1993). Computer integration in K-12 schools: Conditions related to adoption and implementation. Dissertation Abstracts International, 54(8), 2991A. (UMI No. 9401653)

Berge, Z. L., Muilenburg, L. Y., & Haneghan, J. V. (2002). Barriers to distance education and training: Survey results. The Quarterly Review of Distance Education, 3(4), 409-418.

Benson, R., & Palaskas, T. (2006). Introducing a new management learning system: An institutional case study. Australasian Journal of Educational Technology, 22(4), 548-567.

Buchan, J. F., & Swann, M. (2007). A bridge too far or a bridge to the future? A case study in online assessment at Charles Sturt University. Australasian Journal of Educational Technology, 23(3), 408-434.

Ely, D. P. (1990). Conditions that facilitate the implementation of educational technology innovations. Journal of Research on Computing in Education, 23(2), 298-305.

Ely, D. P. (1999). Conditions that facilitate the implementation of educational technology innovations. Educational Technology, 39, 23-27.

Ensminger, D., & Surry, D. W. (2002, April). Faculty Perceptions of Factors that Facilitate the Implementation of Online Programs. Paper presented at the Mid-South Instructional Technology Conference, Murfreesboro, TN.

Ensminger, D. C., & Surry, D. W. (2008). Relative ranking of conditions that facilitate innovation implementation in the USA. Australasian Journal of Educational Technology, 24(5), 611-626.

Inglis, A. (1999). Is online delivery less costly than print and is it meaningful to ask? Distance Education, 20(2), 220-239.

Jasinski, M. (2006). Innovate and integrate: Embedding innovative practices. Brisbane, QLD: Australian Flexible Learning Network.

Johnson, R. B., & Christensen, L. B. (2004). Educational research: Quantitative, qualitative, and mixed approaches. Boston: Allyn and Bacon.

Moen, W. E., & Murray, K. D. (2003). ZLOT Project Phase 2 System Implementation of the LOT Resource Discovery Service: Technology adoption of Texas academic and public libraries. Denton, TX: University of North Texas.

Nyirongo, N. K. (2009). Technology adoption and integration: A descriptive study of a higher education institution in a developing nation. Unpublished doctoral dissertation, Virginia Polytechnic Institute and State University.

Pajo, K., & Wallace, C. (2001). Barriers to the uptake of web based technology by university teachers. Journal of Distance Education, 16(1), 70-84.

Ravitz, J. L. (1999). Conditions that facilitate teacher internet use in schools with high internet connectivity: A national survey. Dissertation Abstracts International, 60(4) 1094A. (UMI No. 9925992).

Read, C. H. (1994). Conditions that facilitate the use of shared decision making in schools. Dissertation Abstracts International, 55(8) 2239A. (UMI No. 9434003).

Rogers, E. M. (2003). Diffusion of innovations (5th ed.). New York: Free Press.

Rogers, P. L. (2000). Barriers to adopting emerging technologies in education. Journal of Educational Computing Research, 22, 455-472.

Romero, J. L. R., & Sorden, S. D. (2008). Implementing an online learning management system: An experience of international collaboration. In J. Luca, & E. R. Weippl (Eds.), Proceedings of the ED-MEDIA 2008-World Conference on Educational Multimedia, Hypermedia & Telecommunications (pp. 840-847). Chesapeake, VA: Association for Advancement of Computing in Education.

Samarawickrema, G., & Stacey, E. (2007). Adopting web-based learning and teaching: A case study in higher education. Distance Education, 28(3), 313-333.

Surry, D. W., Ensminger, D. C., & Haab, M. (2005). A model for integrating instructional technology into higher education. British Journal of Educational Technology, 36(2), 327-329.

Surry, D. W., & Ely, D. P. (2007). Adoption, diffusion, implementation, and institutionalization of educational innovations. In R. Reiser, & J. V. Dempsey (Eds.), Trends & issues in instructional design and technology (2nd ed.) (pp. 104-111). Upper Saddle River, NJ: Prentice-Hall.

Tan, H. P., & Tan, M. T. K. (2004). Adoption and diffusion of IT in early childhood pedagogy: Crossing the Invisible Chasm. Twenty-Fifth International Conference on Information Systems (Proceedings ICIS 2004) (pp. 12-22). Washington, DC: Association for Information Systems.