
Doug Orr

Rick Mrazek

Authors

Doug Orr is the Blended-Learning Coordinator, Curriculum Re-Development Centre at the University of Lethbridge. Correspondence regarding this article can be sent to: doug.orr@uleth.ca

Rick Mrazek is a professor, Faculty of Education at the University of Lethbridge.

Learning organizations rely on collaborative information and understanding to support and sustain professional growth and development. A collaborative self-assessment instrument can clearly articulate and characterize the level of adoption of innovations, such as the use of instructional technologies. Adapted from the “Level of Use” (LoU) and “Stages of Concern” indices, the Level of Adoption (LoA) survey was developed to assess changes in understanding of and competence with emerging and innovative educational technologies. The LoA survey, while reflecting the criteria and framework of the original LoU from which it was derived, uses a specifically structured online, self-reporting scale of “level of adoption” to promote collaborative self-reflection and discussion. The results of this pilot study clearly indicate growth in knowledge of, and confidence with, specific emergent technologies, supporting the use of collaborative reflection and assessment to foster personal and systemic professional development.


David Garvin (1993) proposed that a learning organization is “skilled at ... modifying its behaviour to reflect new knowledge and insights” (p. 80). One component of developing this skill, Tom Guskey (2005) suggests, is the ability to use data and information to inform change initiatives. It can further be argued that educators within learning organizations routinely engage in purposeful conversations about learners and learning (Lipton & Wellman, 2007). How might these information-based conversations be promoted and supported within a variety of educational environments? Of particular interest to us is the adoption of innovative instructional technologies to enhance learning, and the use of technology to encourage collaborative conversations about this adoption. As society moves into the 21st century, educators at all levels are proactively adopting new teaching methodologies and technologies (Davies, Lavin & Korte, 2008) to help students understand the material taught. Useful collaborative information about the adoption of instructional innovations must be actively cultivated (Steele & Boudett, 2008), and is arguably most powerful when the educators concerned own the inquiry process themselves (Reason & Reason, 2007).

The “Level of Use of an Innovation” (LoU) and “Stages of Concern” (SoC) assessments, identified by Hall, Loucks, Rutherford and Newlove (1975) as key components of the Concerns-Based Adoption Model (CBAM), can articulate and characterize the stages of adoption of an educational innovation. The LoU has been identified as “a valuable diagnostic tool for planning and facilitating the change process” (Hall & Hord, 1987, p. 81) and is intended to describe the actual behaviours of adopters rather than their affective attributes (Hall, et al., 1975). We have used the concepts and components of the LoU and SoC to develop a collaborative data-gathering instrument, which we call the “Level of Adoption” (LoA) survey, to inform professional understanding and discussion of the adoption of technological innovations for learning.

The thoughtful use of the LoU and SoC by a “professional learning community” (Dufour & Eaker, 1998) or a “community of professional practice” (Wenger, 1998) may allow members of such a community to self-assess their process and progress toward adoption of an innovation and to identify critical decision points along the way. Increasingly, these communities focus on the continuous evolution of professional inquiry (Garrison & Vaughn, 2008) to enhance teaching and learning in blended and online environments. One of the authors previously used an adaptation of the LoU while working with teachers in a school jurisdiction, allowing members of that professional community to self-assess personal and systemic professional change during a staff development program focused on the adoption of specific innovative educational practices. Various researchers have adapted components of the LoU and SoC indices to assess and facilitate personal, collective, and systemic professional growth during planned implementation and adoption of educational technology innovations (Adey, 1995; Bailey & Palsha, 1992; Gershner, Snider & Sharla, 2001; Griswold, 1993; Newhouse, 2001). In this study, we were interested in piloting a “Level of Adoption” (LoA) survey to collaboratively inform professional educators about their adoption of innovations in educational technology.

During the 2007 “summer session” (May-August) we taught a blended-delivery graduate-level education course at the University of Lethbridge (Alberta, Canada) titled “Using Emergent Technologies to Support School Improvement.” During May and June, students accessed readings, assignments, and instruction online via the university’s learning management system (LMS). For two weeks in July the class convened on campus in an intensive daily three-hour format. Following this, course activities continued online via the LMS. The students in this course were seasoned classroom teachers and school administrators who brought a range of experience and expertise with educational technologies to the course. The class wished to ascertain (a) what levels of experience, expertise, and confidence with various technologies they were bringing to the course, and (b) whether this experience, expertise, and confidence changed as a result of course participation. To that end, an LoA survey was developed and administered to the class via the LMS survey function.

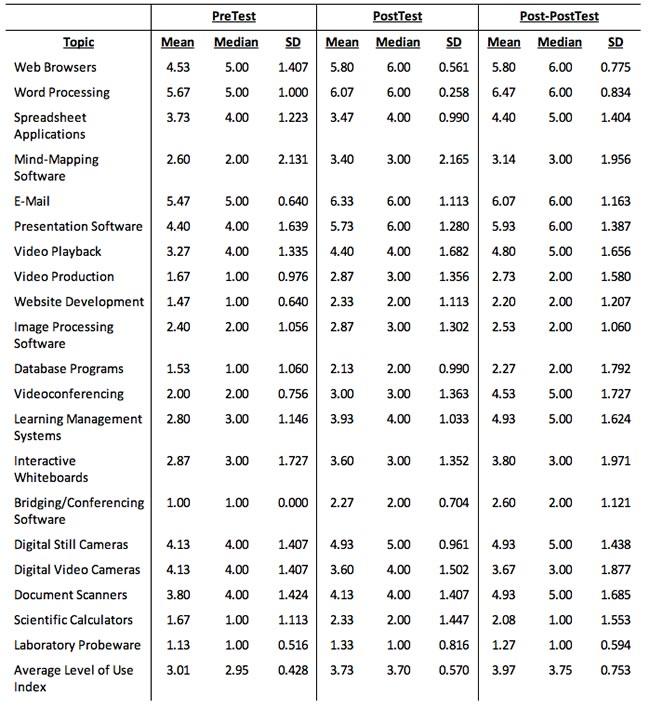

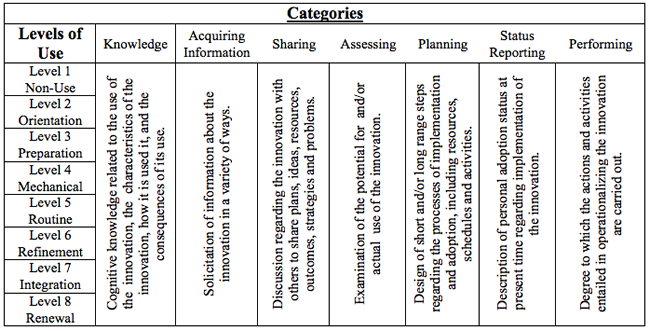

While a focused interview format is traditionally used to collect LoU data (Gershner, Snider & Sharla, 2001; Hord, Rutherford, Huling-Austin & Hall, 1987), our adaptation used a specifically structured self-reporting scale of “level of adoption” so that participants could self-reflect through the reporting process. The original “Level of Use” matrix (Hall, et al., 1975) identifies eight levels or stages of adoption of an innovation: “non-use”, “orientation”, “preparation”, “mechanical use”, “routine”, “refinement”, “integration”, and “renewal” (p. 84). Each of these levels is further defined in terms of the attributes or actions of participants regarding “knowledge”, “acquiring information”, “sharing”, “assessing”, “planning”, “status reporting”, and “performing”, as shown in Table 1. This complex of descriptors from the original CBAM/LoU (Hall, et al., 1975) was not used directly in our assessment of level of adoption of educational technologies, but was used to frame precise stem structures and level descriptors related to the specific educational technologies of interest.

Table 1. Level of Use Matrix (Hall, et al., 1975, pp. 89-91)

Because the level of adoption is self-reported, we paid particular attention to designing the LoA for this purpose and format so that issues of content validity could be addressed (Neuman, 1997). The validity of the LoA survey, we believe, depends primarily on the skill and care applied to framing accurate and focused descriptors. In this instance, it was critical to ensure that the self-reporting scale was as specific as possible and accurately described the kinds of behaviours and changes in professional knowledge and praxis that the participants wished to assess. The response choices were worded identically for each stem related to the specific technologies being investigated.

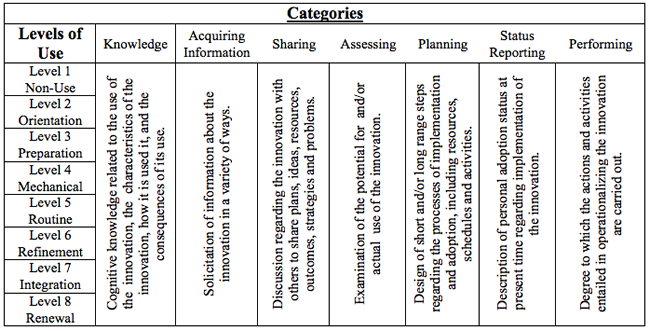

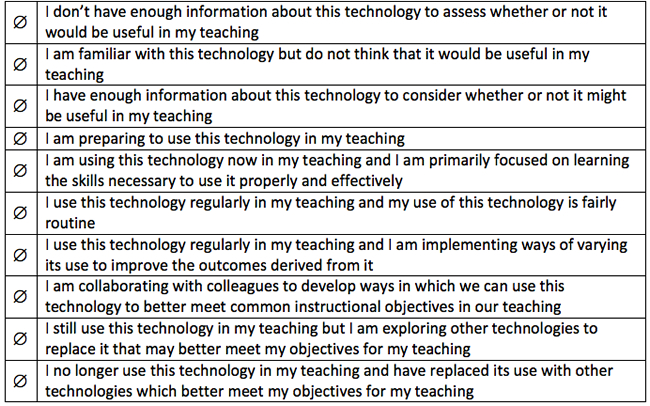

Further, it was deemed important to use identical “radio buttons” or “check boxes” to identify individual choices rather than numbers (0, 1, 2, 3, etc.) on the forms used to assess level of adoption, so that no implied value was associated with a specific response (see Figure 1). The “levels” of the LoA in this application should not imply a hierarchical progression, but rather a nominal description of the state of the community’s adoption of an innovation.

Figure 1. Level of adoption descriptors: adapted by D. Orr, from Hord, et al. (1987)

Though nominal in nature as described above, the results were treated (for purposes of analysis) as ordinal, indicating degrees of adoption. It is our contention that, because this use of the LoA is intended to collaboratively inform professional praxis and development, the instrument may be re-administered in identical form to the same participants throughout a professional development program (in this instance a graduate course in educational technology), both to assess the efficacy of the program and to provide a self-reflective “mirror” for participants engaged in their own professional development.
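The sketch below illustrates the nominal-to-ordinal step described above. It assumes, as the level numbering reported later in the paper implies (level one is “non-use”, level two “orientation”), that the eight descriptors are coded 1 through 8 only at the analysis stage, never on the form itself. The names `LEVELS`, `LEVEL_CODE`, and `encode` are hypothetical and are not part of the LoA survey or any survey platform.

```python
# The eight LoA descriptors, in the order of the original Level of Use index.
LEVELS = (
    "non-use",
    "orientation",
    "preparation",
    "mechanical use",
    "routine",
    "refinement",
    "integration",
    "renewal",
)

# Ordinal codes used only at the analysis stage (1 = non-use ... 8 = renewal).
# Respondents see identical radio buttons, never these numbers.
LEVEL_CODE = {label: code for code, label in enumerate(LEVELS, start=1)}


def encode(selections: list[str]) -> list[int]:
    """Convert the descriptors a respondent selected into ordinal codes."""
    try:
        return [LEVEL_CODE[s.strip().lower()] for s in selections]
    except KeyError as err:
        raise ValueError(f"unknown level descriptor: {err.args[0]!r}") from None


if __name__ == "__main__":
    print(encode(["Non-use", "routine", "renewal"]))
```

Keeping the codes out of the form and applying them only during analysis preserves the design intent that no response carries an implied value for the respondent.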

The LoA, we posit, can be used to collect information over time, sampling a population at various points throughout the implementation of an innovative practice – one of the strengths of this type of tool. If the descriptor stems and responses are framed carefully and appropriately, the same survey can be repeated at various times during a project and the results can reasonably be expected to provide useful longitudinal information regarding change in professional understanding and practice.

In our construct, where the intention is to facilitate collaborative decision-making, professional growth, and personal reflection, the LoA survey asks participants to self-identify their own degrees of adoption of various educational technologies. Respondents selected the “level of adoption” that described their perceived degree of knowledge, utilization, confidence, or competence, ranging from “non-use” through “orientation”, “preparation”, “mechanical use”, “routine”, “refinement”, and “integration”, to “renewal”, consistent with the eight levels of adoption of an innovation defined by the “Level of Use” index (Hall & Hord, 1987; Hall, et al., 1975; Hord, et al., 1987). Respondents in this pilot study self-identified their level of adoption of twenty common educational/instructional technologies: web browsers, word processing software, spreadsheet software, mind-mapping software, e-mail/web-mail, presentation software, video-playback software, video production software, web site development software, image processing software, database software, videoconferencing, learning/content management systems, interactive whiteboards, interactive conferencing/bridging software, digital still cameras, digital video cameras, document scanners, scientific/graphing calculators, and laboratory probeware/interface systems.

For this pilot study, a class cohort of 26 graduate students was surveyed about their level of adoption of various educational technologies twice during the course and again four months after its conclusion. Students responded to three identical 20-item LoA surveys via the class online learning management system: the “pretest” survey in June, before the students’ arrival on campus; the “posttest” survey in August, at the conclusion of the on-campus course component; and the “post-posttest” survey in December of the same year. Twenty-five students (96%) responded to the pretest survey, twenty-two (85%) to the posttest survey, and seventeen (65%) to the post-posttest survey. Twenty-one students (81%) responded to both the pretest and posttest surveys, while fifteen (58%) responded to all three (pre-, post-, and post-post-) surveys. Comparison of these three data sets reflects changes in self-reported knowledge and utilization of, and confidence and competence with, emergent educational technologies.
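The response rates above are simple proportions of the 26-student cohort. A minimal check, using only the counts reported in this paragraph and assuming the percentages are rounded to the nearest whole percent:

```python
CLASS_SIZE = 26

# Respondent counts reported for each administration (or combination of them).
RESPONDENT_COUNTS = {
    "pretest (June)": 25,
    "posttest (August)": 22,
    "post-posttest (December)": 17,
    "pretest and posttest": 21,
    "all three administrations": 15,
}


def response_rate(respondents: int, class_size: int = CLASS_SIZE) -> int:
    """Percentage of the class that responded, rounded to a whole percent."""
    return round(100 * respondents / class_size)


if __name__ == "__main__":
    for label, n in RESPONDENT_COUNTS.items():
        print(f"{label}: {n}/{CLASS_SIZE} = {response_rate(n)}%")
```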

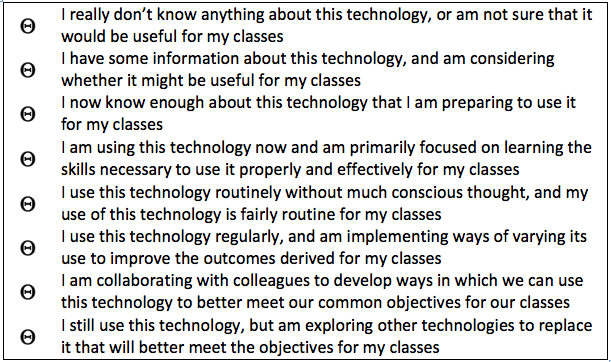

To reflect the potential of this instrument as an indicator of change in praxis during and following a professional development program, we restricted our analysis to the responses of the fifteen participants who completed all three administrations of the instrument. Because this data sample is relatively small, we avoided rigorous statistical investigation (restricting measures of significance to chi-square (χ²) tests) and focused on inferences we believe can reasonably be drawn from descriptive analyses in the context of professional development and change in professional praxis. Results (see Appendix, Table 2) indicate a self-reported increase in use for all 20 technology categories, and an increased “average level of use” (the average of the category means).
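A minimal sketch of the restriction to complete cases and the “average level of use” summary, assuming responses are stored as a hypothetical mapping from anonymous respondent ID to {technology: ordinal level}. The function names and the small data set are illustrative only; they do not reproduce the study's data or any LMS export format.

```python
from statistics import mean, median

# Hypothetical storage format: respondent ID -> {technology: ordinal level 1-8}
Responses = dict[str, dict[str, int]]


def complete_cases(*administrations: Responses) -> set[str]:
    """IDs of respondents who answered every administration."""
    ids = set(administrations[0])
    for admin in administrations[1:]:
        ids &= set(admin)
    return ids


def category_summary(admin: Responses, ids: set[str]) -> dict[str, tuple[float, float]]:
    """Mean and median level for each technology, using only the given respondents."""
    technologies = sorted({tech for rid in ids for tech in admin[rid]})
    summary = {}
    for tech in technologies:
        levels = [admin[rid][tech] for rid in sorted(ids) if tech in admin[rid]]
        summary[tech] = (mean(levels), median(levels))
    return summary


def average_level_of_use(summary: dict[str, tuple[float, float]]) -> float:
    """'Average level of use': the mean of the category means."""
    return mean(category_mean for category_mean, _ in summary.values())


# Hypothetical illustrative data (not the study's responses).
PRETEST = {
    "r01": {"videoconferencing": 1, "presentation software": 4},
    "r02": {"videoconferencing": 2, "presentation software": 5},
    "r03": {"videoconferencing": 2, "presentation software": 3},
}
POST_POSTTEST = {
    "r01": {"videoconferencing": 5, "presentation software": 6},
    "r02": {"videoconferencing": 4, "presentation software": 6},
    # r03 did not respond in December, so r03 is excluded from the analysis
}

if __name__ == "__main__":
    ids = complete_cases(PRETEST, POST_POSTTEST)
    for label, admin in (("pretest", PRETEST), ("post-posttest", POST_POSTTEST)):
        summary = category_summary(admin, ids)
        print(label, summary, "average level of use:", average_level_of_use(summary))
```

Averaging category means (rather than pooling every individual response) weights each technology equally, which matches the paper's description of the summary measure.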

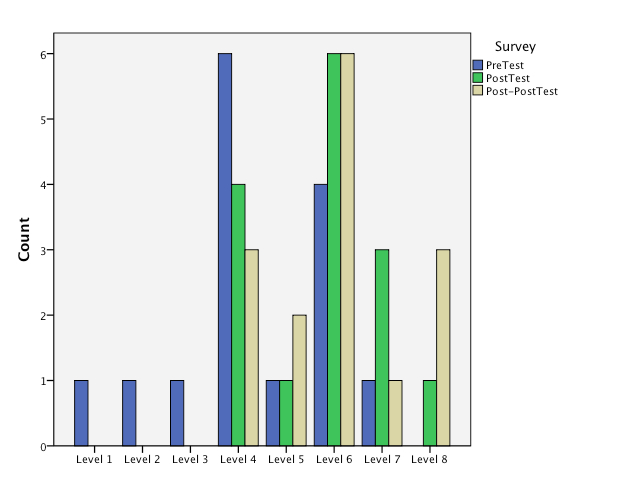

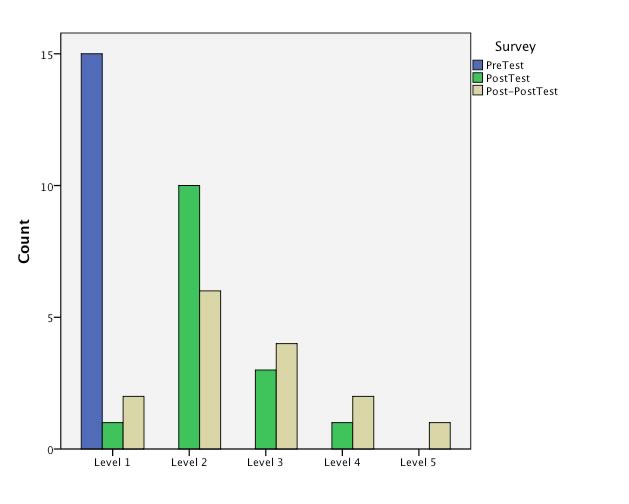

Peripheral technologies that were commonly used by students and instructors during the course but not directly addressed by the instructional activities (such as web browsers, word processing, spreadsheet applications, and e-mail) nevertheless showed increased reported levels of use over the three administrations of the survey. The results for “presentation software” (such as PowerPoint and Keynote) are worth noting. This technology was not directly taught to students, but was consistently modeled by the instructors throughout the on-campus course component. Results (Figure 2) indicate a noticeable change from relatively low self-reported levels of adoption to considerably higher levels: between the pretest and post-posttest administrations, the mean increased from 4.40 to 5.93 and the median from 4.00 to 6.00. Interestingly, a number of students also selected this technology as a topic and/or medium for their major course project.

Figure 2. Reported levels of adoption of presentation software (n=15)

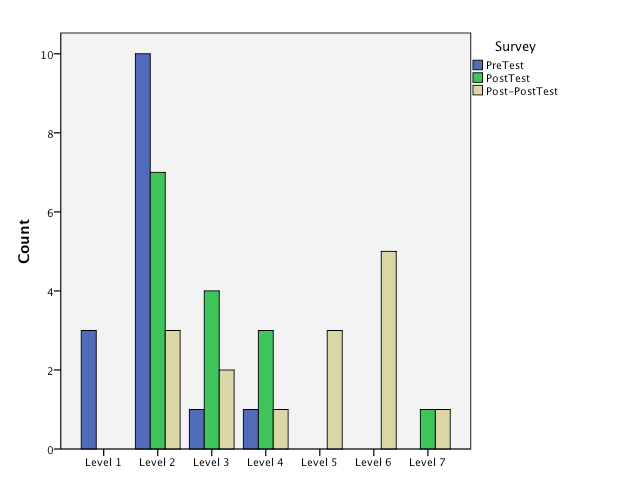

Of greatest interest to us were the results for videoconferencing, learning management system, interactive whiteboard, and conferencing/bridging technologies, as these topics were the foci of specific teaching-learning activities in the on-campus course component. The pretest results for videoconferencing (Figure 3), for example, indicated that thirteen of fifteen respondents either had little or no knowledge of educational videoconferencing or were merely “considering” its usefulness, while the other two respondents reported themselves to be “preparing” and “focusing on learning skills necessary” to use videoconferencing technologies, respectively (mean=2.00, median=2.00).

Figure 3. Reported levels of adoption of videoconferencing (n=15)

By the conclusion of the course in August there was an obvious, and not unexpected, increase in reported level of use (mean=3.00, median=3.00). It is important to note the significant (p<0.005) increase in reported level of use as these students (practicing educational professionals) returned to the workplace and had the opportunity to access and apply these technologies within their schools (mean=4.53, median=5.00). Nine respondents reported their level of use as “routine” or higher on the post-posttest.

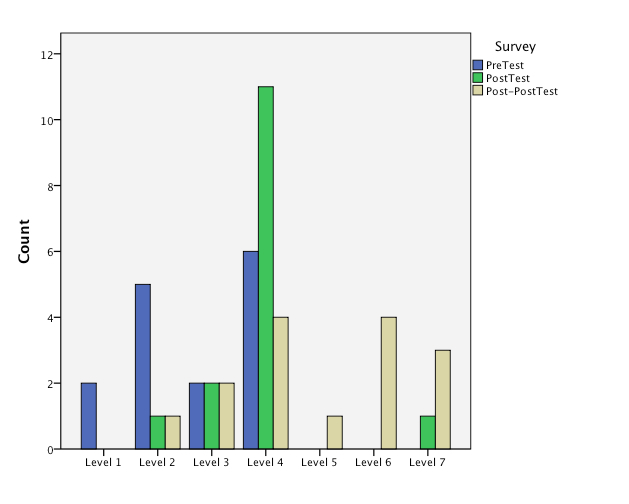

Similar findings regarding continuing professional growth and positive change in praxis were reported for learning management system, interactive whiteboard, and bridging/conferencing technologies. A comprehensive learning management system (LMS) was used to deliver, complement, and supplement instruction for these graduate students throughout both the off-campus and on-campus components of the course. The students were expected to use this LMS to engage in collaborative discussions, to access assignments and readings, and to post written assignments. One topic specifically covered during the on-campus course component was the application of learning management systems in K-12 classrooms. As with videoconferencing, results indicated a noteworthy change in reported use of this technology over the course of this study (Figure 4). Initially 13 of 15 respondents reported themselves to be at level one (“non-use”) or two (“orientation”), with the highest reported level of use (one respondent) merely “mechanical use” (mean=2.80, median=3.00). By December (following the conclusion of the course and the return to the workplace), eight respondents indicated LMS levels ranging from “routine” to “refinement” to “integration” (mean=4.93, median=5.00).

Figure 4. Reported levels of adoption of learning management systems (n=15)

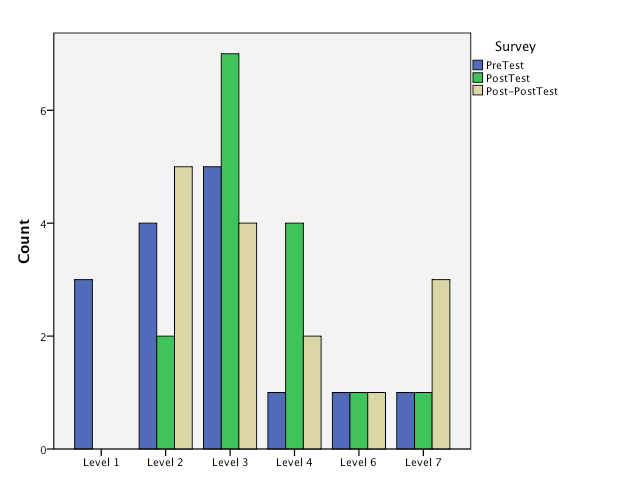

The changes in level of adoption reported for interactive whiteboard technologies (Figure 5) were of considerable interest, as this technology is being introduced into many schools in our region. During the on-campus class we specifically instructed students in the classroom use of this technology and its application in supporting instruction delivered via videoconference. It is worth noting the shifts in responses at the “orientation” and “preparation” levels between the August survey (administered at the end of the class) and the December survey (administered after these practitioners had returned to their school districts). This result raises further questions about participants’ perceptions of the “potential” use of a technology (perhaps surfaced during the class?) and their “actual” use of the technology once back in the schools. Of note, nevertheless, is the increase in the number of respondents reporting themselves as engaging in collaborative adoption at the “integration” level for both interactive whiteboard and LMS technologies.

Figure 5. Reported levels of adoption of interactive whiteboard technologies (n=15)

The significant (p<0.001) result for the reported use of bridging/conferencing software (Figure 6) possibly reflects the introduction of a technology with which these educators had little or no previous experience. Of note on the December post-posttest survey were the four respondents reporting “preparation” for use and the three reporting either “mechanical” or “routine” use of this technology, together with the concomitant increase in the mean reported level of use from 1.00 to 2.60. The National Staff Development Council (2003) identifies collaborative practice within learning communities as a vital component of authentic and efficacious professional growth and change. Of particular interest, in terms of the development of communities of professional practice, is the move from “skill development” and “mechanical” levels of use to “refinement” and “collaborative integration” reflected in these results.

Figure 6. Reported levels of adoption of bridging/conferencing software (n=15)

The accuracy of self-reported data is always a concern. Clearly, the small number of participants in this administration of the self-reported level of adoption survey limits our ability to establish effect sizes for these changes or to explore questions of reliability. Nevertheless, it is worth considering, within the context of a community of professional practice, strategies for promoting the validity and reliability of responses in order to corroborate the potential of this type of information-gathering to support collaborative professional development initiatives.

We posit that it is critical, first, to create a supportive, collaborative, and intellectually and emotionally secure professional community of learners before asking participants to use a self-reporting, self-reflective tool such as the LoA to inform progress of and decisions about their professional growth and development. It is crucial that respondents know (a) that responses are anonymous (online survey tools facilitate this, but other “blind” techniques work as well), and (b) that it is “OK” to be at whatever level one is at. It is also critical to stress with respondents that this tool is used to inform programs and processes, not to evaluate people. Thus, “non-users” of particular technologies should feel empowered to voice disinterest in, or lack of knowledge about, a program by indicating a low level of use.

Similarly, no “status” should be perceived as attached to users who report themselves to be at the refinement, integration, or renewal levels of use. This reinforces the importance of writing clear, well-articulated, appropriate, non-judgmental, and non-evaluative stems and responses. No less importantly, one could and should collect related “innovation configurations” (Hall & Hord, 1987; Newhouse, 2001), such as teacher artifacts, login summaries, participation counts, attitude surveys, participant surveys, and classroom observations, with which to corroborate and elucidate the LoA results for the community. It is critical throughout the process to maintain complete transparency in the collection and dissemination of results. Where a professional development program or innovation adoption is cooperatively and collaboratively initiated, planned, and implemented, the participants will ideally wish to respond to the LoA as honestly as possible in order to accurately assess a program or innovation adoption process that they own as members of a community of professional practice with a shared vision of professional growth and change (Dufour & Eaker, 1998). Sharing results of the LoA survey with participants encourages ownership of both the information and the process (Reason & Reason, 2007), providing an impetus for faculty engagement with the adoption of innovation (Waddell & Lee, 2008). In further applications of this instrument, we are implementing online survey software that reports aggregate results to all participants in real time as responses to the LoA survey accrue.

We believe the results from this pilot project indicate positive professional growth in respondents’ knowledge and utilization of, as well as confidence and competence with, emergent educational technologies. Where addressed by the course content, growth in knowledge of and confidence with emergent technologies, as defined by the survey criteria, is clearly indicated by the results of this level of adoption survey. We are primarily interested in the process of the development of the “Level of Adoption of an Innovation” survey as a self-reporting, self-reflective professional tool; and how the information derived from the results can be used to facilitate planning for and implementation of innovative changes within a professional community of learners. We are currently implementing similar adaptations of the LoA survey within other communities of professional practice, and investigating ways in which adaptations for specific purposes can be derived from the original work of Hall, et al. (1975) and Hord, et al. (1987) and generalized through the LoA to various communities of professional practice.

The LoA survey used in this study, focusing on the adoption of instructional technologies, is being further adapted and applied to inform and support the collaborative professional development of university faculty members, with a revised catalogue of emergent and 21st century technologies relevant to post-secondary instruction. The updated catalogue includes social networking, simulations and video-gaming, video-streaming, podcasting and vodcasting, and assistive technologies. Additionally, an online version of this updated LoA survey, including real-time aggregate reporting to participants, is being used to inform environmental, wildlife, and outdoor educators across Canada and internationally about their adoption of innovative technologies to support instructional practices. Guskey (2005) identifies the importance of providing data to “improve the quality of professional learning ... activities” (p. 16). A critical challenge as we implement these new applications has been articulating concise descriptive statements that accurately reflect the matrix of adoption of innovation (Hall, et al., 1975) while addressing the unique requirements of specific collaborative professional development initiatives and of particular communities of practice and inquiry.

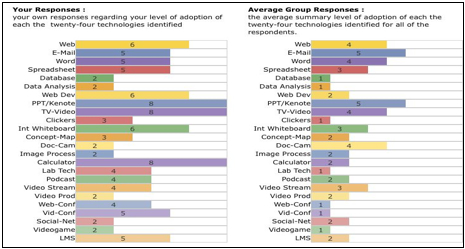

We intend that a professional community will use this instrument on an ongoing basis at critical points in a systemic decision-making process to collaboratively assess changes in praxis regarding the adoption of technologies for instruction. The online reporting program we recently adopted provides real-time, aggregate comparative information (Figure 7), which can both document and inform a collaborative professional development initiative.
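The internals of the reporting program are not described here, so the following is only a hypothetical sketch of how a real-time aggregate tally might work: each submission updates per-technology counts, a resubmission replaces the respondent's earlier answer, and only level counts (never individual IDs) are reported back to participants. The class and method names are assumptions, not the API of any survey product.

```python
from collections import Counter, defaultdict


class AggregateReport:
    """Running, anonymous tally of LoA responses per technology (hypothetical)."""

    def __init__(self) -> None:
        # technology -> respondent ID -> most recent level chosen
        self._latest: dict[str, dict[str, int]] = defaultdict(dict)

    def record(self, respondent_id: str, technology: str, level: int) -> None:
        """Store a response; a respondent who resubmits replaces the earlier answer."""
        self._latest[technology][respondent_id] = level

    def distribution(self, technology: str) -> dict[int, int]:
        """Number of respondents at each level, with no individual IDs exposed."""
        counts = Counter(self._latest.get(technology, {}).values())
        return dict(sorted(counts.items()))


if __name__ == "__main__":
    report = AggregateReport()
    report.record("a", "interactive whiteboards", 2)
    report.record("b", "interactive whiteboards", 5)
    report.record("a", "interactive whiteboards", 3)  # respondent "a" revises
    print(report.distribution("interactive whiteboards"))
```

Reporting only the distribution supports the anonymity the authors stress, while still letting the community see its aggregate position as responses accrue.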

Figure 7. Online, real-time, aggregate response reporting

The feedback from members of other communities which are using this instrument regarding their perceptions of its efficacy, our own analysis of the responses, and our continuing discussions regarding emergent technologies that potentially address the teaching and learning needs of the 21st century are informing on-going iterations of this self-reflective collaborative assessment tool.

Comments from participating respondents have provided significant information as we continue to revise and refine the instrument. The most common comment expressed the desire to be able to indicate that one may be very familiar with a specific technology and yet consciously choose not to use it for instructional purposes. As one respondent put it: “There's no option for ‘I know quite a bit about this tool and choose not to use it’, which is the case for several of the technological tools.”

Another respondent identified the need to indicate the adoption of new technologies which have completely replaced “older” ones which are still identified on our list. These comments have validated and enhanced our on-going discussions about the necessity of both expanding our adoption model and reconceptualizing it in a more cyclic fashion to account for the ever- and rapidly-evolving nature of “emergent” technologies. One significant comment, worthy of note, responds to the instrument’s inherent presumption of the value of adoption of specific technologies.

Technologies have both positive and negative implications for curriculum, pedagogy and 'learning' that cannot be accounted for, here, in the way this instrument already 'determines' according to different levels of adoption. Where, for instance, can I state that often I do not adopt technologies because I fundamentally believe there are numerous negatives and problems associated with them?

We would contend that this comment speaks well to the intended use of this instrument: the importance of ensuring that its application is linked to, and its focus derived from, an adoption initiative collaboratively designed and shared by a community of professional practice, and the importance of adequately communicating the genesis, purpose, focus, dissemination, and application of the survey results. While our investigations have focused on the adoption of technologies for instruction, the CBAM and LoU (Hall & Hord, 1987; Hall, et al., 1975; Hord, et al., 1987), from which our self-assessment level-of-adoption paradigm has been derived, are intended to assess and inform the adoption of any collaboratively identified and validated educational innovation. To be successful, however, the community itself needs ownership not only of the data collected, but also of the data collection design and process. The collaborative development and application of the LoA should capitalize on “data as an impetus to examine practice and dialogue as a means of engaging ... faculty” (Waddell & Lee, 2008, p. 19). Our intent is that members of a community of practice and inquiry initiating an adoption initiative would use the LoA framework to develop an instrument that addresses their own needs and informs their particular innovation initiative.

Other comments from respondents, addressing everything from the specific technologies identified to the nature and wording of the level descriptors, provide a richly informative critique of the LoA conceptualization and paradigm. As we proceed with the ongoing development and refinement of this instrument to serve the collaborative needs of various communities of professional practice, this information provides a baseline from which to continually re-examine and re-create the LoA to better meet the needs of these individual communities. A critical challenge in these new iterations remains articulating concise descriptive statements that accurately reflect the matrix of adoption of innovation (Hall, et al., 1975) while addressing the unique requirements of each community of professional practice and its specific collaborative professional development initiatives, and authentically representing appropriate emergent 21st century educational technologies.

To that end, for our most current project involving school district and school site instructional technology leaders from across the province of Alberta, we have significantly revised our original eight-level matrix to include two additional levels of adoption (Figure 8).

Figure 8. Revised LoA Descriptors: adapted by D. Orr, from Hord, et al. (1987)

Based on the original LoU level descriptors (Hall, et al., 1975), we posit that the first two levels both represent the original “non-use” level since, regardless of the reason for non-use, the respondent would still be indicating “non-adoption” of a collaboratively chosen innovation.

Given the evolutionary nature of educational technologies and their adoption, we are beginning to consider a more cyclical view of the innovation adoption process. We suggest that a respondent who reports our new level 10 (“I no longer use ...”) for a particular technology would, by corollary, also report level 4, 5, or 6 for the “other technologies” adopted in its place. This assumption requires further investigation. Nevertheless, based on the original LoU matrix (Hall, et al., 1975, pp. 54-55), we propose that responses at Level 5 are clearly cuspidal, representing adoption of an innovation and established changes in praxis to support learning outcomes.
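A hypothetical sketch of how responses on the revised ten-level scale might be grouped for reporting, following the reasoning above: levels 1 and 2 both count as non-use, Level 5 marks the threshold of adoption, and level 10 (“I no longer use ...”) is kept separate because its meaning depends on replacement technologies. The group labels, in particular “approaching adoption” for levels 3 and 4, and the treatment of levels 5 to 9 as adopted, are assumptions, since the full descriptors appear only in Figure 8.

```python
from collections import Counter


def classify(level: int) -> str:
    """Group a revised-scale level (1-10) into a hypothetical reporting category."""
    if not 1 <= level <= 10:
        raise ValueError(f"level must be between 1 and 10, got {level}")
    if level <= 2:
        return "non-use"  # both of the first two levels represent original non-use
    if level == 10:
        return "discontinued"  # "I no longer use ..."; may signal a replacement
    if level >= 5:
        return "adopted"  # Level 5 treated as the cusp of adoption
    return "approaching adoption"  # levels 3 and 4 (assumed label)


def summarize(levels: list[int]) -> dict[str, int]:
    """Count one technology's responses in each category."""
    return dict(Counter(classify(level) for level in levels))


if __name__ == "__main__":
    print(summarize([1, 2, 5, 7, 10, 3]))
```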

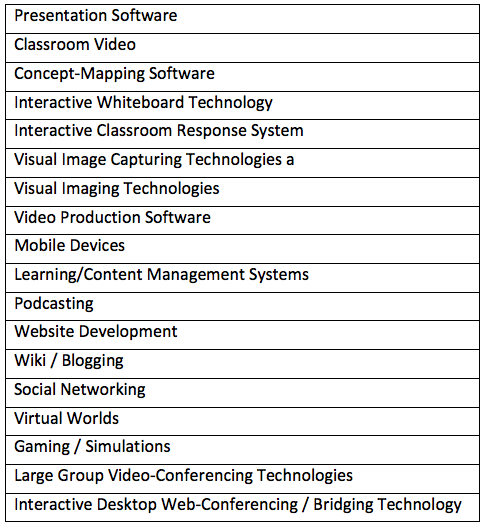

Additionally, we are continually culling, refining, redefining, and clustering our list of technologies in an attempt to better represent current examples of emergent yet applicable technologies for instruction (Figure 9), recognizing that any such “list” of technologies is constantly evolving in the face of technological innovation and convergence. We have thus created the single category of “classroom video” to include any form of video used in the classroom regardless of mode of delivery or format (e.g., DVD, streaming, embedded video). We have also had to add “mobile devices” to our list. It seemed important to identify “wikis and blogging” and “social networking” as separate categories because they are increasingly being used to meet different educational needs. We have identified “virtual worlds” as a distinct category because this application can take on many different roles in education, though it may include and overlap with other categories (such as “gaming and simulations”).

Figure 9. Revised list of technologies for teaching

Dealing with the evolving overlap of functionalities through convergence will continue to present difficulties as information/communication technologies and Web 2.0/3.0/4.0 applications continually and organically evolve, and as concomitant 21st century skills (Crookson, 2009) continue to be posited and debated. Additionally, we have removed production and laboratory software (such as word processing, data management, and probeware), as these seem increasingly to be standard communication, information, or research tools rather than educational technologies, though this interpretation is certainly open to argument. Our current LoA application (involving school district and school site instructional technology leaders from across the province of Alberta) uses this list of technologies, the revised ten-point scale, and the online real-time reporting mechanism. We have collected the pretest and posttest data from this group, and are preparing to administer the post-posttest survey to inform their sustainable adoption of emergent technologies for instruction.

As an online, self-reporting, self-reflective, collaborative tool that is itself ongoing, organic, and evolving, the LoA is intended to support any of a variety of very different professional communities in assessing their adoption of educational initiatives to support 21st century learners and enhance teaching and learning. Online, real-time data collection, aggregation, and reporting is, we argue, the critical component for providing new and different forms of collaboration: enabling the development of collaborative links among educators (NSDC, 2003), usefully disseminating information regarding the adoption of instructional innovations (Steele & Boudett, 2008), and giving the educators concerned ownership of the inquiry process itself (Reason & Reason, 2007). We suggest that while the content of the LoA should be context-specific, the overarching construct and process of its application is critical to its value as an instrument to facilitate data-informed decision-making. Rather than serving as a one-time administrative tool that merely aggregates systemic metrics, it is designed to be deployed repeatedly over time by, within, and for a collaborative community of professional practice to inform and facilitate its collective decisions and strategies regarding the adoption of innovative practices to enhance teaching and learning.
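The aggregation step described above can be illustrated with a minimal sketch. It assumes, hypothetically, that each response is stored as a (respondent, technology, level) record on the revised ten-level scale, and it computes per-technology mean, median, and standard deviation of the kind reported in Table 2, plus the share of responses at Levels 1 or 2, which we treat as non-adoption. The record layout, the function name `summarize_loa`, and the use of the sample standard deviation are assumptions for illustration, not a description of the LoA application itself.

```python
from collections import defaultdict
from statistics import mean, median, stdev

# Hypothetical record layout: (respondent_id, technology, level), with level
# on the revised ten-level LoA scale (1-10).
NON_ADOPTION_LEVELS = {1, 2}  # both map to the original LoU "non-use" level


def summarize_loa(responses):
    """Aggregate self-reported LoA levels into per-technology statistics."""
    levels_by_tech = defaultdict(list)
    for _respondent, technology, level in responses:
        if not 1 <= level <= 10:
            raise ValueError(f"LoA level out of range: {level}")
        levels_by_tech[technology].append(level)

    summary = {}
    for technology, levels in sorted(levels_by_tech.items()):
        summary[technology] = {
            "n": len(levels),
            "mean": mean(levels),
            "median": median(levels),
            # Sample standard deviation; reported as 0.0 when only one response exists.
            "sd": stdev(levels) if len(levels) > 1 else 0.0,
            "non_adoption_share": sum(lvl in NON_ADOPTION_LEVELS for lvl in levels) / len(levels),
        }
    return summary


if __name__ == "__main__":
    # Illustrative, made-up responses (not data from this study).
    example = [
        ("r1", "mobile devices", 2),
        ("r2", "mobile devices", 5),
        ("r3", "mobile devices", 8),
        ("r1", "classroom video", 6),
        ("r2", "classroom video", 6),
    ]
    for technology, stats in summarize_loa(example).items():
        print(technology, stats)
```

Because the summary is recomputed from whatever responses exist, rerunning it after each administration (pretest, posttest, post-posttest) would give the community an updated view rather than a one-time snapshot.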

Effective and sustainable implementation of instructional change requires that communities of inquiry and professional practice be empowered to continually “reexamine and reflect on their course curriculum, teaching practice, and use of information and communication technologies” (Garrison & Vaughn, 2008, p. 53). We posit that critical changes in teaching practice are most likely to succeed when the professional educators responsible for implementing educational change themselves have ownership of both the professional development activities that support the adoption and sustainability of change and the information that informs and directs these activities.

Adey, P. (1995). The effects of a staff development program: The relationship between the level of use of innovative science curriculum activities and student achievement. ERIC Research Report. ED 383567.

Bailey, D., & Palsha, S. (1992). Qualities of the stages of concern questionnaire and implications for educational innovations. Journal of Educational Computing Research, 85(4), 226-232.

Crookson, P. (2009). What would Socrates say? Educational Leadership, 67(1), 8-14.

Davies, T., Lavin, A., & Korte, L. (2008). Student perceptions of how technology impacts quality of instruction and learning. Journal of Instructional Pedagogies, 1, 2-16.

DuFour, R., & Eaker, R. (1998). Professional learning communities at work: Best practices for enhancing student achievement. Bloomington, IN: National Educational Service.

Garvin, D. (1993, July-August). Building a learning organization. Harvard Business Review, 78-91.

Garrison, D.R., & Vaughn, N.D. (2008). Blended learning in higher education: Framework, principles, and guidelines. San Francisco, CA: Jossey-Bass.

Gershner, V., Snider, S., & Sharla, L. (2001). Integrating the use of internet as an instructional tool: Examining the process of change. Journal of Educational Computing Research, 25(3), 283-300.

Griswold, P. (1993). Total quality schools implementation evaluation: A concerns-based approach. ERIC Research Report. ED 385007.

Guskey, T. (2005). Taking a second look at accountability. Journal of Staff Development, 26(1), 10-18.

Hall, G., & Hord, S. (1987). Change in schools: Facilitating the process. Albany, NY: State University of New York.

Hall, G., Loucks, S., Rutherford, W., & Newlove, B. (1975). Levels of use of the innovation: A framework for analyzing innovation adoption. Journal of Teacher Education, 26(1), 52-56.

Hord, S., Rutherford, W., Huling-Austin, L., & Hall, G. (1987). Taking charge of change. Alexandria, VA: Association for Supervision and Curriculum Development.

Lipton, L., & Wellman, B. (2007). How to talk so teachers listen. Educational Leadership, 65(1), 30-34.

National Staff Development Council. (2003). Moving NSDC’s staff development standards into practice: Innovation configurations. Oxford, OH: Author.

Neuman, W. (1997). Social research methods: Qualitative and quantitative approaches (3rd ed.). Needham Heights, MA: Allyn and Bacon.

Newhouse, C. (2001). Applying the concerns-based adoption model to research on computers in classrooms. Journal of Research on Computing in Education, 33(5), 1-21.

Reason, C., & Reason, L. (2007). Asking the right questions. Educational Leadership, 65(1), 36-40.

Steele, J., & Boudett, K. (2008). The collaborative advantage. Educational Leadership, 66(4), 55-59.

Waddell, G., & Lee, G. (2008). Crunching numbers, changing practices. Journal of Staff Development, 29(3), 18-21.

Wenger, E. (1998). Communities of practice: Learning, meaning, and identity. New York, NY: Cambridge University Press.

Table 2. LoA Results: Mean, Median, and Standard Deviation by Technology (n = 15)