Elizabeth Murphy

Author

Elizabeth Murphy is an Associate Professor in the Faculty of Education, Memorial University, Newfoundland where she teaches courses in the Masters of IT in Education program as well as courses in second-language learning.

She was recently funded by the Social Sciences and Humanities Research Council of Canada (SSHRC) to conduct a three-year study of the practice of the e-teacher in the high-school virtual classroom. In addition, she is a co-investigator on a SSHRC funded Community University Research Alliance (CURA) on e-learning in which she is exploring learner-centered e-teaching. She was also recently funded by a strategic joint initiative of SSHRC and the Department of Canadian Heritage to investigate strengthening students’ second-language speaking skills using online synchronous communication.

Correspondence regarding this article can be sent to: emurphy@mun.ca

Abstract: The effectiveness of computer-based learning environments depends on learners’ deployment of metacognitive and self-regulatory processes. Analysis of transmitted messages in a context of Computer Mediated Communication can provide a source of information on metacognitive activity. However, existing models or frameworks (e.g., Henri, 1992) that support the identification and assessment of metacognition have been described as subjective, lacking in clear criteria, and unreliable in contexts of scoring. This paper develops a framework that might be used by researchers analysing transcripts of discussions for evidence of engagement in metacognition, by instructors assessing learners’ participation in online discussions or by designers setting up metacognitive experiences for learners.

Résumé : L’efficacité des environnements d’apprentissage assistés par ordinateur repose sur l’utilisation de processus de métacognition et d’autorégulation par les apprenants. L’analyse de messages transmis dans un contexte de communication assistée par ordinateur peut constituer une source d’information sur l’activité métacognitive. Cependant, les modèles et cadres existants (p. ex. Henri, 1992) qui permettent la reconnaissance et l’évaluation de la métacognition ont été décrits comme subjectifs, dépourvus de critères clairs et peu fiables dans des contextes de notation. Cet article décrit un cadre qui pourrait être utilisé par les chercheurs qui analysent les transcriptions de discussions à la recherche de preuves d’engagement métacognitif, par les instructeurs qui procèdent à l’évaluation de la participation des apprenants à des discussions en ligne ou par les concepteurs qui élaborent des expériences métacognitives pour les apprenants.

There has been widespread recognition in the literature of the value of learner-centered approaches in the classroom (see Duffy & Kirkley, 2004; Lambert & McCombs, 1998; McCombs & Miller, 2007; Reilly, 2005; Weimer, 2002). According to the learner-centered approach, the role of the teacher is optimally as a facilitator, advisor, tutor or coach. The role of learners is as self-regulating, independent, active and engaged constructors of knowledge. Self-regulation requires that learners proactively engage in metacognitive (Mc) processes (Zimmerman & Schunk, 1989) that involve reflecting on and analyzing one’s own thinking (Flavell, 1976).

Metacognition (MC) has received considerable attention in the educational psychology literature. Nelson (1992) cited a 1990 survey in the American Psychologist listing MC among the top 100 topics in cognitive and developmental psychology. The attention is not surprising given that the development of Mc expertise has been described as crucial for fostering and improving individual as well as group and team learning (White & Frederiksen, 2005) and that the use of Mc strategies distinguishes competent from less competent students (Pellegrino, Chudowsky & Glaser, 2001).

Azevedo (2005) argued that the effectiveness of computer-based learning environments, in relation to online learning, actually depends on learners’ regulation of their learning and on the deployment of Mc and self-regulatory processes. In the online classroom, self-regulation and MC take on a particular importance because learners are physically separated from each other and from their teacher. As Topcu and Ubuz (2008) argued, “for students to produce efficient participation and deeper levels of thought in [online] forum discussions, metacognitive strategies that regulate self-awareness, self-control, and self-monitoring are necessary” (p.1).

Henri (1992) observed that the analysis of transmitted messages in a context of Computer-Mediated Communication (CMC), unlike in the traditional teaching or learning situation, can provide a source of information on Mc activity. Henri’s model for analysing CMC includes five dimensions, one of which is the Mc dimension. Her model has figured prominently and frequently in studies involving transcript analysis of CMC. However, these studies have not always paid separate or exclusive attention to the Mc dimension. The few studies that have considered the Mc dimension reported difficulties. For example, Hara, Bonk and Angeli (2000) described the category of Mc knowledge as “extremely difficult and subjective” (p. 126). They concluded that MC is, in general, “extremely difficult to reliably score” and that aspects of the instrument “are fairly ambiguous and inadequate for capturing the richness of electronic discussion in a clear manner” (p. 121). Hara (2000) used Henri’s model to analyse the Mc and social dimensions of CMC data of one class but opted not to use Henri’s category of Mc knowledge because it lacked clear and objective criteria for analyzing data.

Luetkehans and Prammanee’s (2002) study of students’ participation in online courses also relied on Henri’s model to analyze online transcripts. The authors described the category of Mc knowledge as difficult to capture. Likewise, Fahy (2002) concluded that Henri’s approach “left unanswered questions about the metacognitive aspects of online interaction” (p. 7). Following their use of Henri’s model, McKenzie and Murphy (2000) concluded that few messages showed evidence of MC and that the scoring of the Mc aspects resulted in poor reliability.

One other instrument that has a focus on MC is Gunawardena, Lowe and Anderson’s (1997) interaction analysis model. This model includes MC in the final phase, Phase V, Item C, as follows: “Metacognitive statements by the participants illustrating their understanding that their knowledge or ways of thinking (cognitive schema) have changed as a result of the conference interaction” (p. 414). They applied the model to the analysis of an online debate but reported difficulty distinguishing discussants’ Mc statements. The difficulty may explain why, of 206 postings, the researchers coded only four in the Mc phase. Kanuka and Anderson (1998) reported similar results when they applied the same model to the analysis of transcripts from an online discussion. They concluded that the majority of the interactions were coded in the lower phases of the model, meaning that they noted few, if any, instances of engagement in MC.

In general, there is an abundance of literature related to analysis of online discussions (see Anderson, Rourke, Garrison, & Archer, 2001; Aviv, Erlich, Ravid & Geva, 2003; Beuchot & Bullen, 2005; Campos, 2004; Cheung & Hew, 2004; Clulow & Brace-Govan, 2001; Fahy & Ally, 2005; Garrison, Anderson & Archer, 2000; Kanuka, 2005; Murphy, 2004a, 2004b; Perkins & Murphy, 2006). However, MC has received meagre attention in this literature, especially compared to other skills such as critical thinking.

Topcu and Ubuz (2008) studied MC in online discussions but they did not use an instrument for identifying or measuring MC in the discussion. The authors administered pre- and post-tests to discussion participants to identify their levels of MC, then compared those results to their participation in the discussion forum. Hurme, Palonen and Järvelä (2006) analysed patterns of interaction and the social aspects of MC in discussions among secondary school students engaged in mathematics problem solving. The authors did not, however, present any systematic scheme or instrument for analysis of MC. They simply categorized messages as Mc knowledge according to Flavell’s (1979) and Brown’s (1987) person, task and strategy variables, or as Mc skills (Flavell, 1979; Brown, 1987; Schraw & Dennison, 1994) or as not Mc.

Choi, Land and Turgeon (2005) presented “a peer-questioning scaffolding framework intended to facilitate metacognition and learning through scaffolding effective peer-questioning in online discussion” (p. 483). The authors did not identify or use any Mc framework to analyse transcripts of the discussions. Other studies have been conducted on MC in online discussions (e.g., Iding, Vick, Crosby, & Auernheimer, 2004; Kramarski & Mizrachi, 2004; Topçu & Ubuz, 2005) or in contexts of CMC (e.g., Gama, 2004; McLoughlin, Lee & Chan, 2006; Worrall & Bell, 2007) but none of these provides any frameworks, indicators or mechanisms for identifying and promoting MC in online discussions.

These studies point to a lack of reliable instruments, frameworks or models for use in the identification or promotion of MC in the context of CMC, in general, and online discussions, in particular. The purpose of this paper is to develop such a framework. The framework may be of use to researchers analysing transcripts of discussions for evidence of engagement in MC. It might be of value to instructors assessing learners’ participation in online discussions or to designers setting up Mc experiences for learners. As Henri (1992) noted regarding her own framework on MC, “it can be used diagnostically, to orient the educator’s approach in support of the learning process” (p. 132).

The paper relies on foundational work in the area of MC, including that of Flavell (1987), Jacobs and Paris (1987) and Brown (1987). It also draws on Schraw and Dennison (1994), who themselves built on Flavell’s work to create their Metacognitive Awareness Inventory. This paper also builds on Anderson et al.’s (2001) taxonomy of Mc knowledge and on Henri’s (1992) work.

I begin with a synthesis of the two-component view of MC. Subsequently, for each component, and beginning with Mc knowledge, I provide an overview of how it has been conceptualized and defined. Next, for each component, I synthesize the associated indicators. The indicators describe the components in more easily identifiable terms (e.g., knowledge about how one is approaching the task) and in behavioural terms (e.g., analyzing the usefulness of strategies). Finally, for each component, I rely on the indicators to create prompts and examples for identifying and promoting MC in a context of an online discussion. This process will result in a framework that can be used to analyse transcripts of online discussions. The framework is timely given the importance of MC and the emphasis on student-centred learning, along with an increase in online learning and online discussions. This framework represents the first attempt beyond Henri’s (1992) framework to develop a systematic approach to identifying and promoting MC in online discussions.

Typical representations of MC are based on the argument that it comprises two components or dimensions. In experiments with an inventory of MC, Schraw and Dennison (1994) found that the results “strongly supported the two component view of metacognition” (p. 470). Schraw (1998) emphasized that knowledge of cognition and regulation of cognition are interrelated. Furthermore, he argued that both components span a variety of subject areas and are not domain specific. Schraw and Dennison reported that the two components were “strongly intercorrelated, suggesting that knowledge and regulation may work in unison to help students self-regulate” (p. 466). Brown’s (1987) early work on MC also emphasized the two components, which, she noted, are closely related and feed recursively off each other.

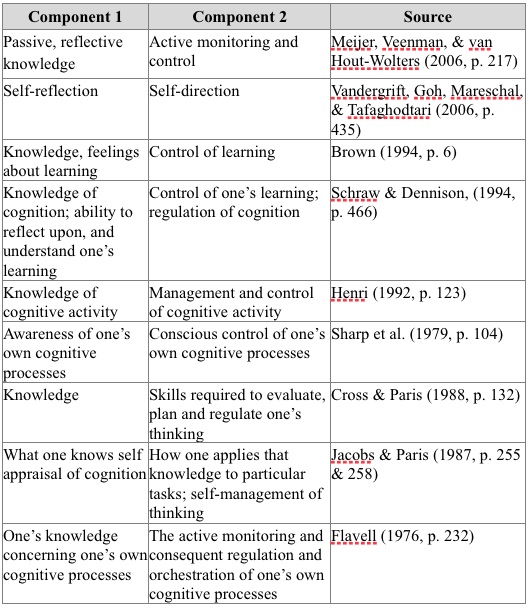

Henri (1992) referred to the components as Knowledge and Skills, Flavell (1987) as Knowledge and Experiences and Jacobs and Paris (1987) as Self-appraisal of cognition and Self-management of thinking. Brown (1994) and Schraw and Dennison (1994) also approached the construct from a two-component point of view by distinguishing between knowledge about cognition and regulation of cognition. One of the exceptions to this two-component view is in the work of Anderson et al. (2001). They provided a detailed framework as well as examples of Mc knowledge, but they subsumed Mc regulation under the broader category of cognition. Some examples of two-component views are synthesized in Table 1.

Table 1. Two component views of MC

Flavell (1987) divided Mc knowledge into the three variables of Person, Task and Strategy. He subdivided the Person variables into three types as follows: Intraindividual, which refers to “knowledge or belief about one’s interests, propensities, aptitudes”; Interindividual, which involves comparing between persons; and Universal, which involves “acquired ideas about universal aspects of human cognition or psychology” (p. 22). He described the Task variable as relating to “how the nature of the information encountered affects and constrains how one should deal with it” (p. 22). The Strategy variable included the way learners go about reaching their goals.

Henri’s (1992) analytical model of Mc knowledge drew on Flavell’s (1987) Strategy, Person and Task variables. Henri provided definitions for each of the three variables as follows:

Jacobs and Paris (1987) referred to the knowledge component of MC as Self-appraisal of cognition, and explained that it relates to “the static assessment of what an individual knows about a given domain or task” (p. 258). The Self-appraisal may be of “one’s abilities or knowledge, or it might involve an evaluation of the task or consideration of strategies to be used” (pp. 258-259). The category of Self-appraisal of cognition includes three subcategories of knowledge as follows:

Anderson et al.’s (2001) category of Mc knowledge “bridges the cognitive and affective domains” (p. 259). They defined it as “knowledge of cognition in general as well as awareness and knowledge of one’s own cognition” (p. 46). They subdivided Mc knowledge as follows: Strategic knowledge; knowledge about cognitive tasks, including appropriate Contextual and Conditional knowledge; and Self-knowledge. Their categories of Strategic knowledge, knowledge about cognitive tasks and Self-knowledge correspond respectively to Henri’s (1992) and Flavell’s (1987) categories of Strategies, Task and Person.

Strategic knowledge refers to “knowledge of the general strategies for learning, thinking and problem solving ... across many different tasks and subject matters” (p. 56). The authors cautioned that this category includes the strategies but not their actual use. Strategic knowledge encompasses knowledge of different strategies for memorizing content, deriving meaning or understanding what is heard or read in a context of learning. Knowledge about cognitive tasks would include Conditional knowledge, that is, knowledge of the different tasks for which certain strategies might be appropriate. Such knowledge would also comprise an appreciation for the social, cultural and conventional contexts surrounding use of the different strategies. Self-knowledge refers to “knowledge of one’s strengths and weaknesses in relation to cognition and learning” (p. 59), as well as one’s belief about one’s own motivation.

Schraw and Dennison (1994) drew on previous models of MC (e.g., Brown, 1987; Flavell, 1987; Jacobs & Paris, 1987) to divide the construct into two categories of knowledge (Reflection) about cognition and regulation (Control) of cognition. This first category of knowledge about cognition corresponds to Henri’s (1992), Jacobs and Paris’ (1987) as well as Anderson et al.’s (2001) category of Mc knowledge. This first category of knowledge about cognition includes: Declarative knowledge about one’s skills, self, strategies, intellectual resources and abilities as a learner; Procedural knowledge about how to use strategies; and Conditional knowledge about when and why to use strategies.

Jacobs and Paris (1987) and Schraw and Dennison (1994) adopted a similar perspective on Mc knowledge. Flavell (1987), Henri (1992) and Anderson et al. (2001) espoused a perspective that differs from that of Jacobs and Paris and of Schraw and Dennison in that the former group of researchers did not consider knowledge of strategies as part of Person. Anderson et al. included Conditional knowledge with Procedural, and Henri and Flavell did not incorporate Conditional knowledge into their models. Also, whereas Anderson et al., Jacobs and Paris, and Schraw and Dennison placed Self-awareness under the variable of Declarative knowledge or the Person variable, Henri put Self-awareness under the component of Mc skills. She defined Self-awareness as the “ability to identify, decipher and interpret correctly the feelings and thoughts connected with a given aspect of the task” (p. 132).

For the purposes of this paper, I will use the term Declarative knowledge to refer to personal knowledge about oneself as a learner, such as one’s strengths and weaknesses, as well as one’s knowledge of strategies. Declarative knowledge will include Flavell’s and Henri’s Person and Strategy variables as well as Anderson et al.’s Self-awareness and Strategies. I will use the term Procedural knowledge to include Flavell’s and Henri’s variable of Task and Anderson et al.’s knowledge of Tasks. Finally, the variable of Strategies (Conditional knowledge) refers to knowing why and when to use certain strategies. Here, I will use the term Conditional knowledge. The three terms will refer respectively to

Henri (1992) provided two indicators for each of the three types of Mc knowledge for a total of six indicators. The indicators for Person are as follows: “Comparing oneself to another as a cognitive agent. Being aware of one’s emotional state” (p. 132). The indicators for the variable of Task are: “Being aware of one’s way of approaching the task. Knowing whether the task is new or known” (p. 132). For Strategies, she also offered two indicators; however, she articulated these more like definitions than indicators: e.g., “Strategies making it possible to reach a cognitive objective of knowledge acquisition. Metacognitive strategies aimed at self-regulation of progress” (p. 132). For Self-awareness, she supplied two indicators but she presented these more as actual examples than indicators: “I am pleased to have learned so much … I am discouraged at the difficulties involved…” (p. 132; Henri’s ellipsis).

Jacobs and Paris (1987) did not provide actual indicators of Mc knowledge. Instead, they produced an “Index of Reading Awareness Items” (p. 269) but only for the Mc knowledge category of Conditional knowledge and only for use in a context of classroom research with children. One example of an item is as follows:

Anderson et al. provided 20 examples for the three categories of knowledge. An example for Self-knowledge was “knowledge that critiquing essays is a personal strength, whereas writing essays is a personal weakness; awareness of one’s own knowledge level” (p. 46). Other examples in this category refer to knowledge of one’s capabilities, goals and interests related to a particular task. In the category of knowledge about cognitive tasks, such as Contextual and Conditional knowledge, the authors incorporated examples as follows: recall tasks make more demands on memory than recognition tasks; a primary source book may be more difficult to understand than a general textbook; and rehearsal of information is one way to retain information. They also included knowledge of the “local and general social, conventional, and cultural norms for how, when, and why to use different strategies” (p. 59). For Knowledge of strategies, they provided strategies such as mnemonic, elaboration, organizational, planning and comprehension monitoring.

Schraw and Dennison (1994) put forth a comprehensive, complete and tested list of operationalized statements related to the three types of Mc knowledge in the form of a Metacognitive Awareness Inventory (see pp. 473-475). In the category of Declarative knowledge, they presented items such as the following: “I know what kind of information is most important to learn; I am good at organizing information; I know what the teacher expects me to learn; I learn more when I am interested in the topic” (p. 473). Under the heading of Procedural knowledge, indicators include: “I try to use strategies that have worked in the past; I have a specific purpose for each strategy I use; I am aware of what strategies I use when I study” (pp. 473-474). For Conditional knowledge, some of the indicators are as follows:

I learn best when I know something about the topic; I use different learning strategies depending on the situation; I can motivate myself to learn when I need to. I know when each strategy I use will be most effective. (pp. 473-474)

If we conceptualize Mc knowledge as Declarative, Procedural and Conditional, what might be some actual examples of these types of knowledge in a context of an online discussion? That is, if a researcher or instructor wanted to identify instances of Mc thinking, what types of statements might constitute signs or evidence? Furthermore, for instructional designers or instructors wanting to intentionally engage discussants in Mc thinking, what types of prompts, scaffolds or questioning might elicit this type of thinking? In this section, I draw on the definitions for Declarative, Procedural and Conditional Mc knowledge to suggest corresponding prompts and examples that are specific to text-based online discussions. These might be subsequently revised, added to or adapted in actual contexts of use. They might also be tested for their validity.

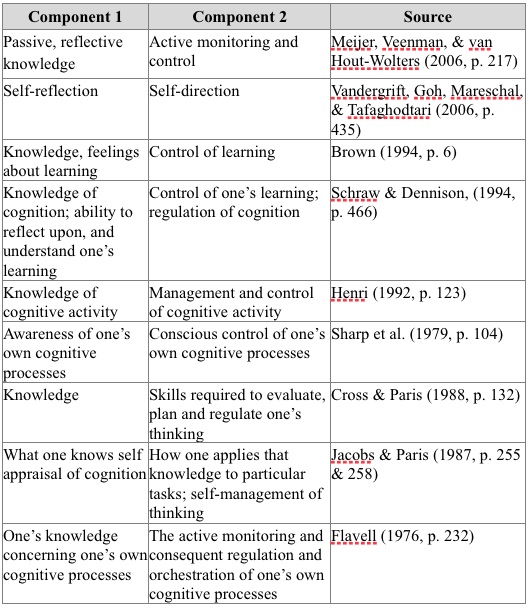

Declarative knowledge would consist of a discussant’s knowledge of himself or herself in an online discussion as well as knowledge of strategies relevant to that context. Knowledge of oneself in a discussion would include cognitive as well as affective elements. Such knowledge would relate to a discussant’s particular strengths, weaknesses, interests and feelings associated with participating in an online discussion. Additionally, such knowledge would comprise knowledge of strategies, such as brainstorming in order to come up with initial ideas or asking questions to elicit responses and reactions from other discussants.

Table 2 presents prompts and examples of Declarative knowledge relevant to an online discussion. The examples reflect some types of statements that discussants might make with regard to their own knowledge and behaviours. The list is not exhaustive. The examples are meant as guides to the types of statements that might correspond to Declarative knowledge. The sample prompts might serve to design and scaffold the discussion while the examples represent types of evidence that instructors could use in assessment (i.e., to determine if the discussant actually demonstrates Declarative knowledge).

Table 2. Prompts and examples for Declarative knowledge

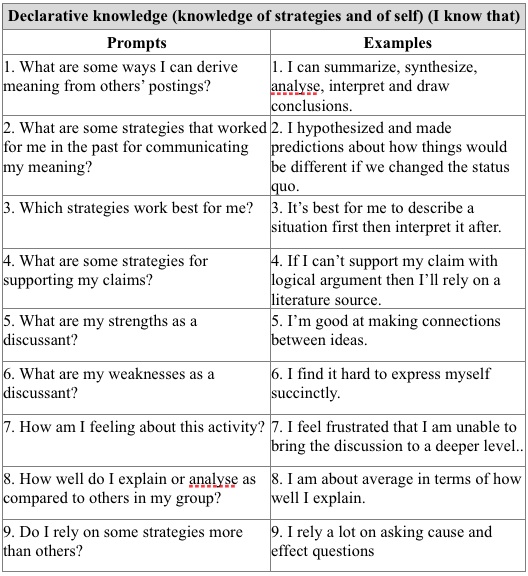

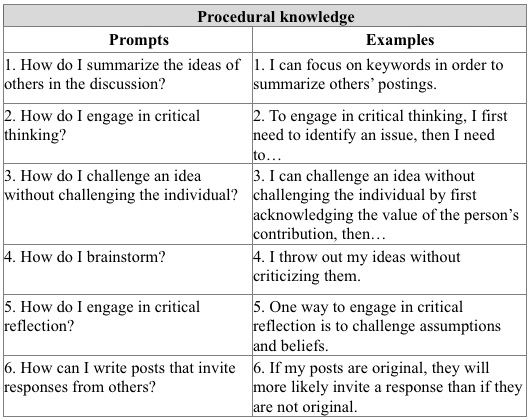

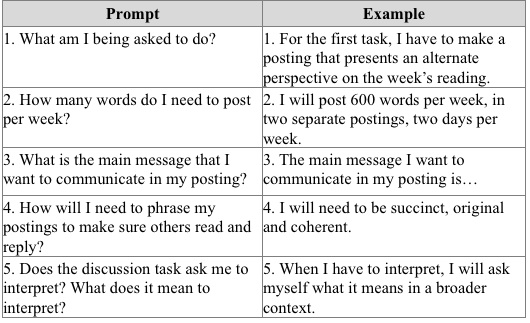

Procedural knowledge would refer to knowledge of how to perform tasks or procedures. This knowledge would range from the simple, such as how to compose a message or how to make a reply, to the more complex (e.g., knowing how to stimulate discussion or how to present an alternate perspective). As with Declarative and Conditional knowledge, Procedural knowledge, or what the discussant needs to know how to do, may vary depending on the context of a discussion, on the instructor or on the course. For example, in one context, the instructor may require a discussant to know how to make postings that reflect critical thinking whereas that requirement may not exist in another discussion. Table 3 presents sample prompts and examples of Procedural knowledge relevant to an online discussion.

Table 3. Prompts and examples for procedural knowledge

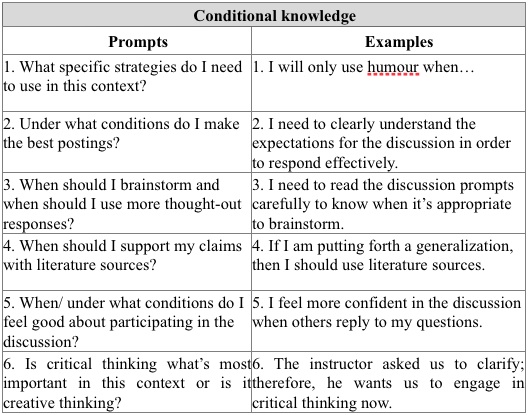

Conditional knowledge involves knowing the contexts and conditions under which Procedural and Declarative knowledge should or might be used. Conditional knowledge denotes knowledge of the conditions under which certain strategies might be most effective, such as when a posting is most likely to elicit a response from another, or when one should brainstorm and when one should instead provide a carefully thought-out response. Conditional knowledge might also include knowledge of when one learns best or under what conditions one makes the most effective posts. Examples and prompts for Conditional knowledge are presented in Table 4.

Table 4. Prompts and examples for conditional knowledge

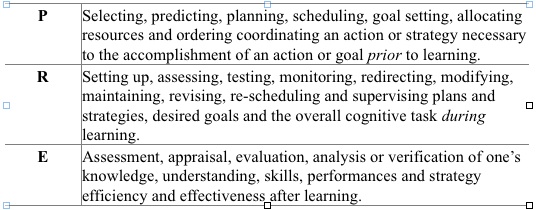

Schraw (1998) noted that, although other skills have been associated with Mc control, “three essential skills are included in all accounts” (p. 115). These are Planning, Monitoring and Evaluation. Brown’s (1978) early work reflected this inclusion of the three skills as did the research of Cross and Paris (1988). Jacobs and Paris referred to the second component of MC as Self-management of thinking which involves “the dynamic aspects of translating knowledge into action” (p. 259). The Self-management of thinking “allows the learner to adjust to changing task demands as well as to successes and failures” (p. 259). Jacobs and Paris subdivided Self-management of thinking into three processes of Planning, Evaluation and Regulation. Henri’s (1992) model for the second component of MC also includes the three skills as well as the additional skill of Self-awareness. However, she did not provide any justification for the inclusion of Self-awareness in this component when others typically incorporated it into Mc knowledge.

Schraw and Dennison (1994) referred to the second component of MC as Regulation of cognition. They drew on earlier models (e.g., Artzt & Armour-Thomas, 1992; Baker, 1989) to divide the category into five skills as follows: Planning, Information management strategies, Comprehension monitoring, Debugging strategies and Evaluation. Comprehension monitoring would be similar to regulation. The additional skills of Debugging strategies and Information management strategies are unlike those in the other models. The authors presented Information management strategies as “skills and strategy sequences used online to process information more efficiently. These include ‘organizing, elaborating, summarizing, selective focusing.’ Debugging strategies are used to correct comprehension and performance errors” (pp. 474-475).

Table 5. Synthesis of definitions of the second component of metacognition: planning (P), regulation (R) and evaluation (E) (Brown, 1987; Henri, 1992; Jacobs & Paris, 1987; Schraw & Dennison, 1994)

Henri (1992) provided a limited number of indicators for each of the types of Mc control. Her labelling of indicators resembled her use of definitions, so that it is difficult to distinguish how they differ; for example, for Planning, she offered the following definition: “Selecting, predicting and ordering an action or strategy necessary to the accomplishment of an action” (p. 132). What she described as an indicator was articulated in the same way as a definition, i.e., “Predicting the consequences of an action” (p. 132).

Schraw and Dennison (1994) provided a detailed list of 52 items related to each of the three processes in their Metacognitive Awareness Inventory. These included, for example: Planning: I ask myself questions about the material before I begin; Monitoring: I ask myself periodically if I am meeting my goals; and Evaluating: I ask myself if there was an easier way to do things after I finish a task. The authors also offered indicators for Information management strategies, such as: I slow down when I encounter important information; I focus on the meaning and significance of new information. For Debugging strategies, they included items such as: I stop and go back over information that is not clear; I change strategies when I fail to understand (pp. 473-474).

Planning involves reading the prompts and any instructions or guidelines before the discussion begins. It involves asking oneself questions about the tasks and kinds of thinking skills in which, as a discussant, one is being asked to engage (e.g., what am I being asked to do: describe, explain, evaluate…?). Once the discussant has understood, he/she then has to decide on goals for the activity and even attempt to predict in advance the types of behaviours needed to achieve those aims (e.g., I’ll need to articulate what the readings mean in my personal context if I want to be reflective about the course material). Table 6 lists some prompts and examples of Mc planning in a context of an online discussion, although many more are possible. They may vary in their articulation and emphasis depending on the context.

Table 6. Prompts and examples for planning

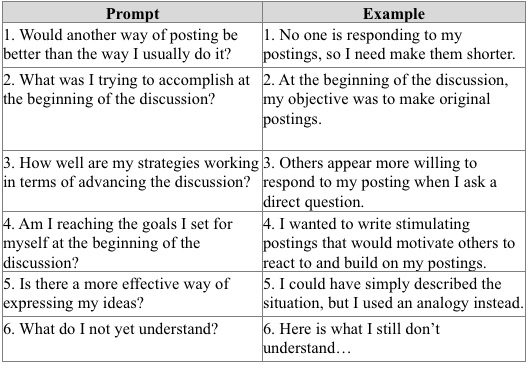

While the discussion is taking place, Regulating involves behaviours such as monitoring how one is communicating. Monitoring might mean rereading one’s posts to gauge how they might be clearer or more succinct. Monitoring might require deeper analysis of one’s postings to identify the ratio of lower- to higher-level thinking skills. Monitoring entails asking oneself questions about how well one is doing and whether one’s goals are being met. The discussant then adjusts or regulates his/her communication and thinking accordingly. Regulating would also include Information management strategies and Debugging strategies. Table 7 provides sample prompts and examples for Regulating.

Table 7. Prompts and examples for regulating

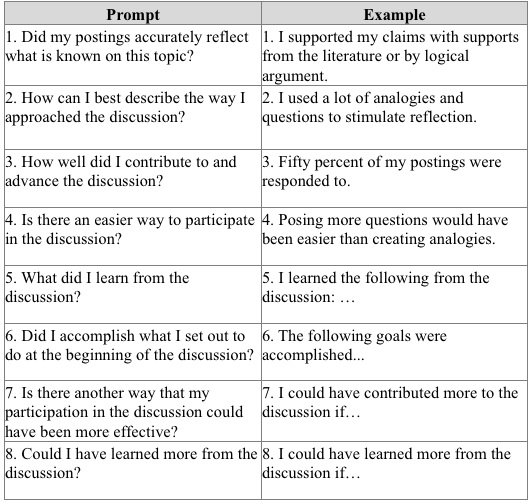
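The ratio of lower- to higher-level thinking skills mentioned above as one form of monitoring can be illustrated with a small calculation. The following is only a minimal sketch: the labels `"lower"` and `"higher"`, the function name and the sample data are hypothetical, and the sketch assumes that the discussant (or a coder) has already judged each posting by hand. It does not automate that judgement.

```python
from fractions import Fraction


def lower_to_higher_ratio(levels):
    """Return (lower_count, higher_count, ratio) for hand-coded postings.

    `levels` holds one label per posting, either "lower" or "higher".
    `ratio` is None when no posting has been coded "higher".
    """
    lower = sum(1 for level in levels if level == "lower")
    higher = sum(1 for level in levels if level == "higher")
    ratio = Fraction(lower, higher) if higher else None
    return lower, higher, ratio


# Hypothetical self-coding of five postings, invented for illustration.
my_postings = ["lower", "lower", "higher", "lower", "higher"]
lower, higher, ratio = lower_to_higher_ratio(my_postings)
print(f"{lower} lower-level : {higher} higher-level postings")
```

A discussant who sees the ratio drifting toward lower-level postings might then adjust subsequent contributions, which is the regulating step described above.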

Evaluating occurs once the discussion has ended. The text-based ‘trail’ or archive of everyone’s participation facilitates Evaluating. To aid analysis, most online discussions provide tools for searching and compiling. The discussant can assemble his or her postings and compare them with, for example, a rubric of the requirements for the various discussion tasks. Evaluating also involves a retrospective questioning of one’s behaviours in relation to one’s goals for the discussion. Retrospective questioning may be made easier if discussants have to express their goals as part of the discussion so that they too can be archived. The discussant may also ask whether he/she might have learned more and what knowledge was gained that might be applied in other contexts, such as future discussions. Some sample prompts and examples of evaluating oneself after the discussion are provided in Table 8.

Table 8. Prompts and examples for evaluating

As Henri (1992) noted, CMC represents “a gold mine of information concerning the psycho-social dynamics at work among students” (p. 118). She further remarked that the “attentive educator, reading between the lines in texts transmitted by CMC will find information unavailable in any other learning situation” (p. 118). However, identifying and promoting MC in online discussions will require more than educators reading between the lines. Above all, an emphasis on MC calls upon the educator to actively and explicitly provide the learner with the tools to read between the lines. It shifts the focus of responsibility for learning to the learner. A focus on MC emphasizes the design of the discussion, as well as the prior choice and inclusion of prompts that will engage discussants in Mc knowledge and control.

Strategies for discussants will need to be identified in order to design for MC, because strategies play a pivotal role in MC. Presently, there is little or nothing in the literature that analyzes discussion strategies from the perspective of the learner or discussant. Typically, strategies focus on the experience of the individual moderating the discussion, such as the instructor (see, for example, Beaudin, 1999). Research into strategies for discussants would identify ways that they could take more explicit charge of their learning.

In this paper, I have developed a framework that might be used by researchers analysing transcripts of discussions for evidence of engagement in metacognition, by instructors assessing learners’ participation in online discussions or by designers setting up metacognitive experiences for learners. Specifically, the prompts and examples provided in this paper are meant as a general guide or as a starting point for identifying and promoting MC in online discussions. The prompts and examples can be modified to suit the specific type of discussion. Not all prompts and examples will be used in any one context. For the individual interested in transcript analysis, evidence of engagement in MC might involve looking for examples similar to those provided in each of the two components and five subcomponents. The analysis might aim to identify whether discussants showed more evidence of MC in one component than in another.
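The comparison across components described above could be sketched as follows. This is a minimal illustration only, assuming each message has already been hand-coded by a researcher as evidence of either MC knowledge or MC control. The discussant names, the coded data, and the function names are hypothetical and are not part of the framework itself.

```python
from collections import Counter, defaultdict

# Hypothetical hand-coded transcript: (discussant, component) pairs.
# The two labels follow the knowledge/control distinction; the
# discussants and the codes assigned to them are invented.
coded_messages = [
    ("discussant_a", "knowledge"),
    ("discussant_a", "control"),
    ("discussant_a", "control"),
    ("discussant_b", "knowledge"),
    ("discussant_b", "knowledge"),
    ("discussant_b", "control"),
]


def tally_by_component(coded):
    """Count coded units per component for each discussant."""
    tallies = defaultdict(Counter)
    for discussant, component in coded:
        tallies[discussant][component] += 1
    return tallies


def dominant_component(counts):
    """Return the component with the most coded units, or None on a tie or no data."""
    ranked = counts.most_common(2)
    if len(ranked) == 2 and ranked[0][1] == ranked[1][1]:
        return None
    return ranked[0][0] if ranked else None


tallies = tally_by_component(coded_messages)
for discussant, counts in sorted(tallies.items()):
    print(discussant, dict(counts), dominant_component(counts))
```

A tally of this kind only summarizes codes that a human reader has already assigned; it does not identify MC in the messages themselves.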

Thank you to research assistants Pamela Osmond and Janine Murphy who helped compile the literature on metacognition and to research assistant Kate Scarth for proofreading. The writing of this paper was funded in part by the Social Sciences and Humanities Research Council of Canada through a grant for a Community University Research Alliance (CURA) on e-learning and a sub-grant of research on learner-centered e-teaching.

Anderson, L., Krathwohl, D., Airasian, P., Cruikshank, K., Mayer, R., Pintrich, P., Raths, J., & Wittrock, M. (2001). A taxonomy for learning, teaching and assessment: A revision of Bloom's taxonomy of educational objectives. New York: Longman.

Anderson, T., Rourke, L., Garrison, R., & Archer, W. (2001). Assessing teaching presence in a computer conferencing context. Journal of Asynchronous Learning Networks, 5(2), 1-17.

Artzt, A.F., & Armour-Thomas, E. (1992). Development of a cognitive-metacognitive framework for protocol analysis of mathematical problem solving in small groups. Cognition and Instruction, 9(2), 137-175.

Aviv, R., Erlich, Z., Ravid, G., & Geva, A. (2003). Network analysis of knowledge construction in asynchronous learning networks. Journal of Asynchronous Learning Networks, 7(3), 1-23.

Azevedo, R. (2005). Computer environments as metacognitive tools for enhancing learning. Educational Psychologist, 40(4), 193-197.

Baker, L. (1989). Metacognition, comprehension monitoring, and the adult reader. Educational Psychology Review, 1, 3-38.

Beaudin, B. (1999). Keeping online asynchronous discussions on topic. Journal of Asynchronous Learning Networks, 3(2), 41-53.

Beuchot, A., & Bullen, M. (2005). Interaction and interpersonality in online discussion forums. Distance Education, 26(1), 67-87.

Brown, A. L. (1978). Knowing when, where, and how to remember: A problem of metacognition. In R. Glaser (Ed.), Advances in instructional psychology (Vol. 1, pp. 77-165). Hillsdale, NJ: Erlbaum.

Brown, A. L. (1987). Metacognition and other mechanisms. In F. E. Weinert & R. H. Kluwe (Eds.), Metacognition, motivation and understanding (pp. 65-116). Hillsdale, NJ: Erlbaum.

Brown, A. L. (1994). The advancement of learning. Educational Researcher, 23(8), 4-12.

Campos, M. (2004). A constructivist method for the analysis of networked cognitive communication and the assessment of collaborative learning and knowledge-building. Journal of Asynchronous Learning Networks, 8(2), 1-29.

Cheung, W. S., & Hew, K. F. (2004). Evaluating the extent of ill-structured problem solving process among pre-service teachers in an asynchronous online discussion and reflection log learning environment. Journal of Educational Computing Research, 30(3), 197-227.

Choi, I., Land, S., & Turgeon, J. (2005). Scaffolding peer-questioning strategies to facilitate metacognition during online small group discussion. Instructional Science, 33, 483-511.

Clulow, V., & Brace-Govan, J. (2001). Learning through bulletin board discussion: A preliminary case analysis of the cognitive dimension. Moving Online, conference proceedings, Southern Cross University, Australia, September.

Cross, D. R., & Paris, S. G. (1988). Developmental and instructional analyses of children’s metacognitive and reading comprehension. Journal of Educational Psychology, 80(2), 131-142.

Duffy, T. M., & Kirkley, J. R. (Eds.). (2004). Learner-centered theory and practice in distance education: Cases from higher education. Hillsdale, NJ: Erlbaum.

Fahy, P. (2002). Use of linguistic qualifiers and intensifiers in a computer conference. The American Journal of Distance Education, 16(1), 5-22.

Fahy, P., & Ally, M. (2005). Student learning style and asynchronous Computer-Mediated Conferencing (CMC) interaction. American Journal of Distance Education, 19(1), 5-22.

Flavell, J. H. (1976). Metacognitive aspects of problem solving. In L. B. Resnick (Ed.), The nature of intelligence, (pp. 231-235). Hillsdale, NJ: Erlbaum.

Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive-developmental inquiry. American Psychologist, 34, 906-911.

Flavell, J. H. (1987). Speculations about the nature and development of metacognition. In F. E. Weinert & R. H. Kluwe (Eds.), Metacognition, motivation and understanding (pp. 21-29). Hillsdale, NJ: Erlbaum.

Gama, C. (2004). Metacognition in interactive learning environments: The Reflection Assistant Model. In Intelligent Tutoring Systems: Proceedings 7th International Conference, ITS 2004, Maceió, Alagoas, Brazil. Berlin / Heidelberg: Springer, 668-677.

Garrison, D., Anderson, T., & Archer, W. (2000). Critical inquiry in a text-based environment: Computer conferencing in higher education. Internet and Higher Education, 11(2), 1-14.

Gunawardena, C., Lowe, C., & Anderson, T. (1997). Interactional analysis of a global online debate and the development of constructivist interaction analysis model for computer conferencing. Journal of Educational Computing Research, 17(4), 395-429.

Hara, N. (2000). Visualizing tools to analyze online conferences. Paper presented at the National Convention of the Association for Educational Communications and Technology, Albuquerque, NM. Paper retrieved October 30, 2007, from http://ils.unc.edu/~haran/paper/fca/fca_aera.html

Hara, N., Bonk, C. J., & Angeli, C. (2000). Content analysis of online discussion in an applied educational psychology course. Instructional Science, 28(2), 115-152.

Henri, F. (1992). Computer conferencing and content analysis. In A. R. Kaye (Ed.), Collaborative learning through computer conferencing: The Najaden papers (pp. 117-136). Berlin: Springer-Verlag.

Hurme, T., Palonen, T., & Järvelä, S. (2006). Metacognition in joint discussions: An analysis of the patterns of interaction and the metacognitive content of the networked discussions in mathematics. Metacognition and Learning, 1(2).

Iding, M., Vick, R., Crosby, M., & Auernheimer, B. (2004). College students’ metacognition in on-line discussions. In L. Cantoni & C. McLoughlin (Eds.), Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2004 (pp. 2896-2898). Chesapeake, VA: AACE.

Jacobs, J. E., & Paris, S. G. (1987). Children’s metacognition about reading: Issues in definition, measurement, and instruction. Educational Psychologist, 22(3 & 4), 235-278.

Kanuka, H. (2005). An exploration into facilitating higher levels of learning in a text-based internet learning environment using diverse instructional strategies. Journal of Computer-Mediated Communication, 10(3).

Kanuka, H., & Anderson, T. (1998). On-line social interchange, discord, and knowledge construction. Journal of Distance Education, 13(1), 57-74. Retrieved October 30, 2007, from http://cade.athabascau.ca/vol13.1/kanuka.html

Kramarski, B., & Mizrachi, N. (2004). Enhancing mathematical literacy with the use of metacognitive guidance in forum discussion. Proceedings of the 28th Conference of the International Group for the Psychology of Mathematics Education, Vol. 3, pp. 169-176.

Lambert, N. M., & McCombs, B. L. (1998). How students learn: Reforming schools through learner-centered education. Washington, D.C.: American Psychological Association.

Luetkehans, L., & Prammanee, N. (2002). Understanding participation in online courses: A triangulated study of perceptions of interaction. In P. Barker & S. Rebelsky (Eds.), Proceedings of World Conference on Educational Multimedia, Hypermedia and Telecommunications 2002 (pp. 1596-1597). Chesapeake, VA: AACE.

McCombs, B., & Miller, L. (2007). Learner-centered classroom practices and assessments: Maximizing student motivation, learning, and achievement. Thousand Oaks, CA: Corwin.

McKenzie, W., & Murphy, D. (2000). "I hope this goes somewhere": Evaluation of an online discussion group. Australian Journal of Educational Technology, 16(3), 239-257.

McLoughlin, C., Lee, M., & Chan, A. (2006). Fostering reflection and metacognition through student-generated podcasts. Paper presented at the Australian Computers in Education Conference.

Meijer, J., Veenman, M., & van Hout-Wolters, B. (2006). Metacognitive activities in text-studying and problem-solving: development of a taxonomy. Educational Research and Evaluation, 12(3), 209-237.

Murphy, E. (2004a). An instrument to support thinking critically about critical thinking in online asynchronous discussions. Australasian Journal of Educational Technology, 20(3), 295-316.

Murphy, E. (2004b). Identifying and measuring ill-structured problem formulation and resolution in online asynchronous discussions. Canadian Journal of Learning and Technology, 30(1).

Nelson, T. (Ed.). (1992). Metacognition: Core readings. Boston: Allyn & Bacon.

Pellegrino, J., Chudowsky, N., & Glaser, R. (Eds.) (2001). Knowing what students know: the science and design of educational assessment. Washington, DC: National Academy Press.

Perkins, C., & Murphy, E. (2006). Identifying and measuring individual engagement in critical thinking in online discussions: An exploratory case study. Educational Technology & Society, 9(1), 298-307.

Reilly, D.H. (2005). Learner-centered education: successful student learning in a nonlinear environment. Frederick, MD: Publish America.

Schraw, G. (1998). Promoting general metacognitive awareness. Instructional Science, 26(1-2), 113-125.

Schraw, G., & Dennison, R. (1994). Assessing metacognitive awareness. Contemporary Educational Psychology, 19, 460-475.

Sharp, D., Cole, M., Lave, C., Ginsburg, H. P., Brown, A. L., & French, L. A. (1979). Education and cognitive development: The evidence from experimental research. Monographs of the Society for Research in Child Development, 44(1 & 2), 1-112.

Topçu, A., & Ubuz, B. (2005). The effect of the metacognitive abilities on the discussion performance in a direct instructional asynchronous online course. In C. Crawford et al. (Eds.), Proceedings of Society for Information Technology and Teacher Education International Conference 2005 (pp. 2351-2356). Chesapeake, VA: AACE.

Topcu, A., & Ubuz, B. (2008). The effects of metacognitive knowledge on the pre-service teachers’ participation in the asynchronous online forum. Educational Technology & Society, 11(3), 1-12.

Vandergrift, L., Goh, C., Mareschal, C., & Tafaghodtari, M. (2006). The metacognitive awareness listening questionnaire: development and validation. Language Learning, 56(3), 431-462.

Weimer, M. (2002). Learner-centered teaching: Five key changes to practice. San Francisco: Jossey-Bass.

White, B., & Frederiksen, J. (2005). A theoretical framework and approach for fostering metacognitive development. Educational Psychologist, 40(4), 211-223.

Worrall, L., & Bell, F. (2007). Metacognition and lifelong e-learning: A contextual and cyclical process. E-Learning, 4(2).

Zimmerman, B. J., & Schunk, D. H. (Eds.). (1989). Self-regulated learning and academic achievement: theory, research and practice. New York: Springer-Verlag.