Lan Li

Allen L. Steckelberg

Sribhagyam Srinivasan

Authors

Lan Li is an assistant professor for the College of Education and Human Development at Bowling Green State University, U.S.A. Correspondence regarding this article can be sent to lli@bgsu.edu

Allen L. Steckelberg is an associate professor for the College of Education and Human Sciences at the University of Nebraska-Lincoln, U.S.A. He can be reached at als@unl.edu

Sribhagyam Srinivasan is the Instructional Designer and Distance Learning Administrator at Lamar State College-Orange, U.S.A. and can be reached at Sribhagyam@yahoo.com

Abstract: Peer assessment is an instructional strategy in which students evaluate each other’s performance for the purpose of improving learning. Despite its accepted use in higher education, researchers and educators have reported concerns such as students’ time on task, the impact of peer pressure on the accuracy of marking, and students’ lack of ability to make critical judgments about peers’ work. This study explored student perceptions of a web-based peer assessment system. Findings suggest that web-based peer assessment can be effective in minimizing peer pressure, reducing management workload, stimulating student interactions, and enhancing student understanding of marking criteria and critical assessment skills.

Résumé : L’évaluation par les pairs est une stratégie pédagogique au cours de laquelle l’étudiant évalue la performance de l’autre dans un but d’amélioration de l’apprentissage. Malgré son usage répandu aux études supérieures, les chercheurs et les enseignants ont mentionné certaines préoccupations, notamment en ce qui a trait au temps que les étudiants consacrent à cette tâche, à l’impact de la pression des pairs sur la justesse de l’évaluation, ainsi qu’à l’inaptitude des étudiants à poser un jugement critique sur le travail de leurs pairs. La présente étude explore les perceptions des étudiants à l’égard d’un système d’évaluation en ligne par les pairs. Nos résultats nous permettent de conclure que l’évaluation en ligne par les pairs peut constituer un moyen efficace de réduire la pression des pairs, de diminuer le travail de gestion, de stimuler les interactions entre étudiants et d’améliorer la compréhension des critères d’évaluation par les étudiants ainsi que leurs compétences d’évaluation critique.

Learning and teaching are highly social activities. Interactions between students and teachers and between students and peers play a fundamental role in the learning process. Vygotsky (1978) looked at learning as an activity rooted in social interactions with others and the outside world. He asserted that when children interact with adults or more capable peers, higher mental functions are initiated. Cognitive development is “best fostered in a social environment where students are active participants and where they are helped to reflect on their learning” (Bruning, Schraw, Norby & Ronning, 2004, p. 203).

Efforts to promote student autonomy and encourage student interactions have been extended to the arena of assessment. Students’ behaviour and attitude toward learning are often shaped by the assessment system (Freeman, 1995). Instructor-only assessment provides limited feedback and interaction, and student participation in assessment is usually inadequate. Research has suggested that some power should be transferred to students in order to achieve higher student engagement and to better promote learning (Orsmond & Merry, 1996; Orsmond, Merry, & Reiling, 2002). To address this issue, alternative assessment approaches such as peer assessment, self-assessment, and portfolio assessment have been promoted in recent years. This paper explores the use of peer assessment in higher education and discusses a specific peer assessment approach.

Peer assessment is a process in which students evaluate the performance or achievement of peers (Topping, Smith, Swanson & Elliot, 2000). While peer assessment can be summative (peer marking is used to provide accountability and to check the level of learning by assigning a quantitative mark), it often focuses on formative goals. Formative peer assessment usually involves students in two roles: assessors and assessees. As assessors, students provide detailed and constructive feedback regarding the strengths and weaknesses of their peers’ work. As assessees, students view peer feedback and improve their own work. Cheng and Warren (1999) defined this assessment method as a process of reflection on “what learning has taken place and how” (p. 301).

The potential benefits of peer assessment for cognitive development and the learning process have been highlighted in numerous studies. Pope (2001) suggested peer assessment stimulates student motivation and encourages deeper learning. Li and Steckelberg’s study (2005) on peer assessment indicated that the technology-mediated peer assessment provided a scaffolding guide that helped students gradually shift their roles from assessees to assessors. Some of the benefits reported in the study included diagnosing misconceptions and deepening learning. Topping (1998), after reviewing 109 articles focusing on peer assessment, confirmed that peer assessment can yield cognitive benefits for both assessors and assessees in multiple ways: constructive reflection, increased time on task, attention on crucial elements of quality work, and a greater sense of accountability and responsibility.

In spite of the benefits of peer assessment, research has also identified some weaknesses. They include peer pressure, time on task, and student ability to interpret marking criteria and to conduct critical assessment. A number of researchers have noted their concerns regarding peer pressure in peer assessment (e.g., Davies, 2002; Hanrahan & Isaacs, 2001; Topping et al., 2000). Peer pressure can cause feelings of uncertainty and insecurity for students as “marking could be easily affected by friendship, cheating, ego or low self-esteem” (Robinson, 1999, p. 96). When students are aware of the source of the work and/or assessment, potential biases like friendship, gender or race may have a greater influence on their marking and feedback.

Issues of time on task in peer assessment are twofold. One is from the instructor’s perspective. Management of peer feedback documentation requires substantial time (Davies, 2002), especially when confidentiality of assessors and assessees is required in the process. For example, in one of their peer assessment studies, Hanrahan and Isaacs (2001) reported utilizing more than 40 person hours for documentation work in an anonymous distribution system with 244 students. The other aspect of time engagement is drawn from the student perspective. A well-implemented peer assessment is not an easy process. It requires long-term student commitment involving work from defining marking criteria to practicing assessment skills, from constructing projects to submitting them, and from assessing peers to viewing peer comments. In addition, in traditional paper-based peer assessments, submission of and access to feedback are difficult to complete in a timely manner; delays create a challenge to student focus and their ability to follow through on the process.

In addition to peer pressure and time issues, two other common barriers in peer assessment are students’ difficulties in understanding marking criteria and their lack of critical assessment skills. Peer assessment works best when marking criteria are clearly understood by students: “Common to most successful self and peer assessment schemes is the act of making explicit the assessment criteria” (Falchikov, 1995, p. 175). Understanding the criteria of a particular project and analyzing a peer’s work can lead to an improved awareness of one’s own performance (Freeman, 1995). However, terms and phrases in marking criteria may not convey the same meaning for students and instructors. Students especially lack skill in interpreting criteria that require higher order thinking (Orsmond, Merry, & Reiling, 2000). Dochy, Segers and Sluijsmans (1999) stressed the importance of training in “obtaining an optimal impact” (p. 346) on the student learning process. Such training can be especially important for students new to peer assessment.

Challenges in peer assessment may explain contradictory results in previous peer assessment research. In the current study, an attempt was made to address the weaknesses in peer assessment by utilizing a web-based assessment system. In this system, students rated and commented upon peers’ projects. Anonymity was assured to reduce peer pressure. Since data were managed by computers, no manual work was needed to circulate materials, such as handing out student projects, collecting peer feedback, and distributing feedback to authors of projects. The administrative workload was minimal and student interactions were encouraged. In addition, face-to-face training was provided to deepen student understanding of marking criteria and strengthen their critical assessment skills. This paper further explores student perceptions of this peer assessment model and how it contributes to a student’s learning process. In this study, peer rating and feedback were utilized only as formative feedback for the purpose of promoting learning, not as a substitute for instructor grading. Students were aware that scores provided by student assessors were used only as an initial guide for project improvement. What was evaluated instead was the quality of their peer assessment (whether students were able to identify critical problems in assessed projects and provide constructive feedback), which contributed to their final grades.

Subjects in this study were undergraduate teacher education students who participated in a web-based peer assessment process as part of an introductory educational technology course. The peer assessment website consisted of two interfaces: a student interface and an instructor interface. Peer assessment was conducted through the student interface. After logging in to an account, students were able to submit their projects through the website. Server-based scripts randomly assigned projects to students for review. Each student played two roles: assessor and assessee. As an assessor, each student viewed two projects and provided feedback to their peers. As an assessee, each student accessed the feedback on his/her own work and was then able to make improvements. The website presented the student work so that peer reviewers were not aware of the identity of the creator. Likewise, ratings and review comments were presented to the creator without identifying the reviewers. Instructors were able to monitor the entire process and to track the identities of both creators and reviewers.

The instructor interface served two purposes. First, this interface enabled the instructor to perform administrative tasks to maintain the student database. The instructor had privileges to create, delete, and modify student account information. Second, the instructor was able to track the peer assessment process. The instructor had access to student projects, feedback provided by each student, as well as the peer feedback for each student’s project. The quality of student feedback was monitored as a part of the process. First, the instructor evaluated the assessed projects based on the marking criteria. Second, feedback from student assessors was evaluated to judge whether student assessors could identify critical problems of the assessed projects and provide constructive feedback. Quality of student feedback made up 10% of students’ final project grades. Students understood that the quality of their projects and the quality of their comments, not the peer ratings, mattered in obtaining a final project score.

Thirty-eight undergraduate teacher education students participated in this study. All the participants were recruited from a required entry-level educational technology application course at a Midwestern U.S. university. Students ranged from freshman to senior standing with various backgrounds and content area emphasis. The project that formed the basis for the peer assessment was a class assignment undertaken by all students as a regular part of the course.

In this study, students were asked to develop a WebQuest proposal. A WebQuest is “an inquiry-oriented lesson format in which most or all the information that learners work with comes from the web” (WebQuest Overview, n.d.). This instructional strategy, developed by Bernie Dodge and Tom March in early 1995, is designed to involve users in a higher-order learning process, such as analysis, synthesis and evaluation. The critical features of a WebQuest project are often overlooked because students have a superficial understanding of the activity. Students may tend to focus on simply retelling information found on the web. For example, March (2003) suggests, instead of asking students to make PowerPoint slides on facts such as natural resources, social policy, business, climate and history of U.S. states, a WebQuest might ask students to study U.S. states and predict which state that they have studied is most likely to be successful in the twenty-first century. With this revised approach, students need to first acquire factual information of U.S. states from the Internet, and then define what “being successful” means to them. They would then decide which state is going to be the most successful through reasoned analysis followed by a justification of their decision. Higher order skills of analysis, synthesis and evaluation are required in the WebQuest process.

In order to help students build a quality WebQuest that promotes learners’ higher-order thinking skills instead of just simple comprehension of factual information from the Internet, this peer assessment project asked students to develop a proposal, in which basic elements of a quality WebQuest were addressed.

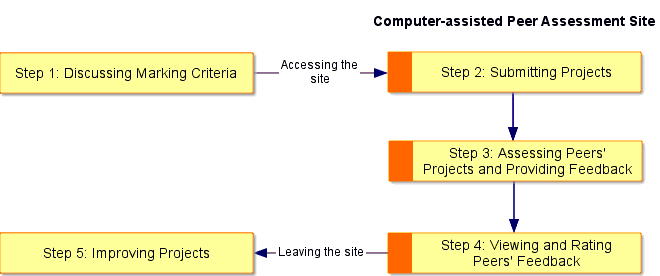

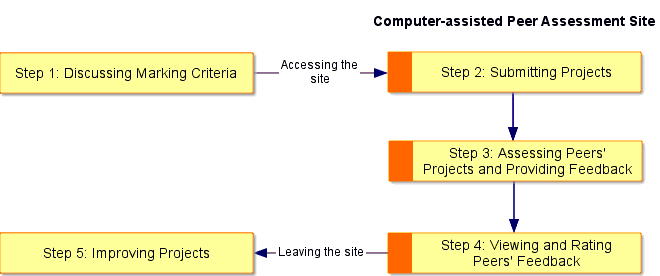

Students followed a five-step procedure in this peer assessment process (Figure 1).

Step 1: Discussing Marking Criteria

After thoroughly studying the structure and basic elements of WebQuest, students were presented with the marking criteria provided by the instructor. The marking criteria, replicated from a rubric published in the San Diego State University WebQuest site (A rubric for evaluating WebQuest, n.d.), included eight statements. Students were asked to rate the performance of their peers on a five-point scale for each of the eight dimensions. Detailed discussions of each category were provided in class. Afterwards, students assessed a sample project and provided their feedback. Students compared their assessments and feedback with an exemplar provided by the instructor. Comparison allowed students to identify discrepancies and refine their understanding of the criteria.

Figure 1. Peer assessment procedure

Step 2: Submitting Projects

After students completed their projects, they logged onto the website and uploaded their projects. Projects were stored in the peer assessment site and randomly assigned to students for review. The random allocation was automatically made by the peer assessment site as scripts were built in when the site was designed.

Step 3: Assessing Peers’ Projects and Providing Feedback

Each student was assigned to two projects. Students were asked to rate and provide constructive comments/suggestions on their peers’ work through a web form. Once the feedback was submitted, students could access the feedback for their project. Scores from student assessors were only provided to give assessees information on how their peers rated their projects.
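The random allocation described in Steps 2 and 3 can be sketched as follows. This is a minimal illustration, not the site's actual server-side script, which the study does not reproduce: the function name `assign_reviews`, the student identifiers, and the rotation scheme are all assumptions. Shuffling students into a random circle and giving each one the next two projects guarantees that nobody reviews their own work. It also means every project receives exactly two reviews, a property the study does not state.

```python
import random


def assign_reviews(student_ids, per_reviewer=2, seed=None):
    """Map each reviewer to the authors whose projects they will assess.

    Hypothetical scheme: shuffle the students into a random circle, then
    have each student review the projects of the next `per_reviewer`
    students around that circle.
    """
    if len(student_ids) <= per_reviewer:
        raise ValueError("need more students than reviews per student")

    rng = random.Random(seed)  # seed only makes the sketch reproducible
    order = list(student_ids)
    rng.shuffle(order)

    n = len(order)
    return {
        order[i]: [order[(i + k) % n] for k in range(1, per_reviewer + 1)]
        for i in range(n)
    }


# Example with 38 placeholder identifiers (the study had 38 participants).
students = [f"student{i:02d}" for i in range(1, 39)]
assignments = assign_reviews(students, seed=2008)
```

In an anonymous system, only the instructor interface would expose this mapping; students would see the assigned projects without author names.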

Step 4: Viewing and Rating Peer Feedback

After reviewing the peer feedback, each student was asked to rate and justify the helpfulness of that feedback as it related to the improvement of their projects.

Step 5: Improving Project

Each student was asked to use the summary feedback to improve his or her project. Students were advised that the quality of peer feedback they received might vary and they did not have to make all revisions suggested by their peers. If students had different thoughts or if they disagreed with their peers’ comments at certain points, they were directed back to study the structure and content of WebQuests, and the rubric before they made any revisions. This step engaged students in critically looking at the elements of a WebQuest and facilitated students in diagnosing misconceived concepts.

Post-assessment Questionnaire

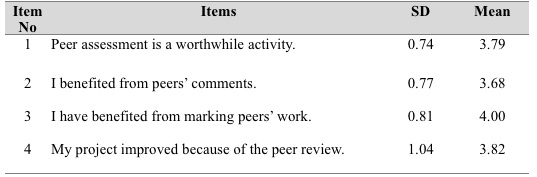

After students submitted their final projects, they responded to a post-assessment questionnaire, which solicited students’ general conceptions of peer assessment. The first part of the questionnaire (see Table 1) included four 5-point Likert Scale items (ranging from 1/strongly disagree to 5/strongly agree) adapted from a previous study (Lin, Liu, & Yuan, 2002). The second part of the questionnaire invited students to respond to three open-ended questions concerning their best and least liked features in the computer-assisted approach, and how this particular assessment approach facilitated their learning process.

Descriptive statistics (means and standard deviations) were calculated for students’ ratings on the four 5-point Likert Scale items (Table 1).

Table 1. Statistical description of student perceptions toward peer assessment (1 representing “strongly disagree”, 3 representing “neutral” and 5 representing “strongly agree”)

Students’ responses to these four 5-point Likert Scale items suggest that students generally held a fairly positive attitude toward this peer assessment approach, as mean scores of these items ranged from 3.5 to 4 (3 representing “neutral” and 4 representing “agree”). There was considerable variability in student responses to the items, particularly on item four, which asked whether their project was improved because of the peer review.
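For readers who wish to reproduce this kind of summary, the sketch below computes the mean and standard deviation for one Likert item. The ratings in the example are invented for illustration and are not the study's data. The function name `describe_item` is hypothetical, and the use of the sample standard deviation (n - 1 denominator) is an assumption, since the study does not say which formula was used.

```python
from statistics import mean, stdev


def describe_item(ratings):
    """Return (mean, sample standard deviation) for one 5-point Likert item."""
    if any(r not in (1, 2, 3, 4, 5) for r in ratings):
        raise ValueError("ratings must be integers from 1 to 5")
    # stdev() uses the n - 1 denominator; statistics.pstdev() would give
    # the population form instead.
    return round(mean(ratings), 2), round(stdev(ratings), 2)


# Invented responses: 1 = strongly disagree ... 5 = strongly agree.
example_ratings = [4, 5, 3, 4, 4]
item_mean, item_sd = describe_item(example_ratings)
```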

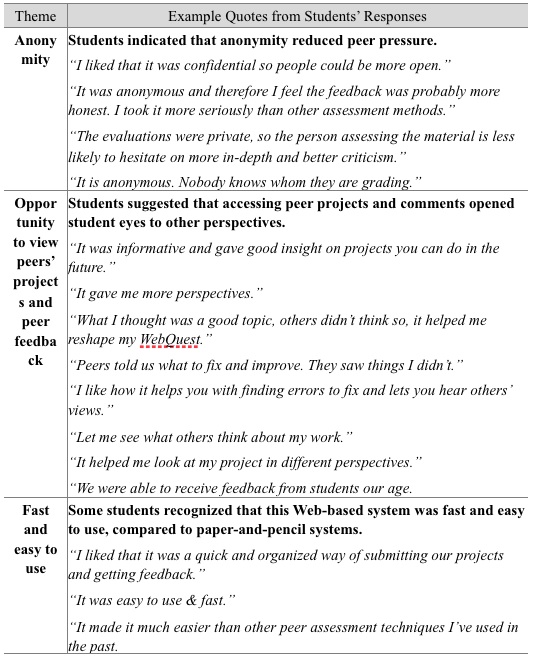

Students’ responses to the open-ended questions (What are your best-liked features in this computer-assisted peer assessment process? What are your least liked features in this computer-assisted peer assessment process? And how do you think this computer-assisted peer assessment model facilitated your learning process?) were coded and analyzed for themes (Table 2). Two independent coders analyzed the qualitative data. Two stages of coding were employed. In the first stage, labels were added to sort and assign meaning to text. In the second stage, labels were reorganized. Similar labels were combined together to form bigger categories, and larger groups of related labels were reviewed to see whether they should be divided into smaller groups. Repeating ideas and themes were identified during this process.

Table 2. Themes and supporting quotes from the post assessment survey for students’ best-liked features in the Web-based peer assessment model

The formula for coding reliability suggested by Miles and Huberman (1994) was used to check that the coding was clear, valid and reliable: Reliability = number of agreements / (number of agreements + number of disagreements). The two coders reached 91 percent agreement across all data. All disagreements were subsequently resolved.
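The agreement formula above amounts to simple percent agreement between two coders. A minimal sketch follows, assuming each coder's labels are stored as a list aligned segment by segment. The function name and the example labels are hypothetical and are not taken from the study's coding data.

```python
def intercoder_reliability(codes_a, codes_b):
    """Percent agreement: agreements / (agreements + disagreements)."""
    if len(codes_a) != len(codes_b):
        raise ValueError("both coders must label the same segments")
    if not codes_a:
        raise ValueError("no coded segments to compare")

    agreements = sum(a == b for a, b in zip(codes_a, codes_b))
    disagreements = len(codes_a) - agreements
    return agreements / (agreements + disagreements)


# Invented labels for four text segments, one list per coder.
coder_a = ["anonymity", "ease of use", "anonymity", "viewing peers' work"]
coder_b = ["anonymity", "ease of use", "viewing peers' work", "viewing peers' work"]
reliability = intercoder_reliability(coder_a, coder_b)  # 3 of 4 segments agree
```

Percent agreement does not correct for agreement expected by chance, so it tends to read higher than chance-corrected statistics computed on the same codes.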

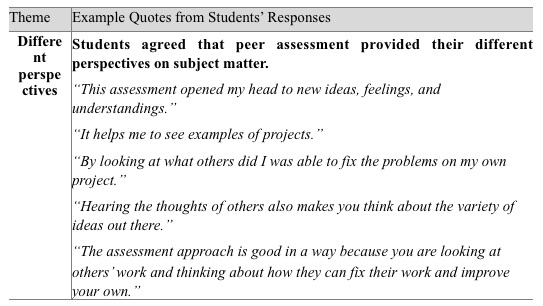

Three themes were identified for the best-liked features in this web-based peer assessment system: anonymity, opportunity to view peers’ project and peer feedback, and fast and easy to use.

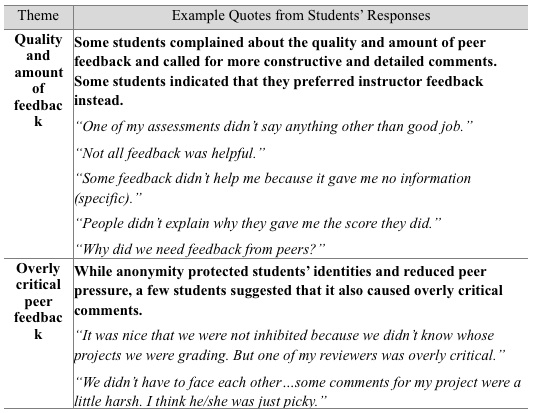

Two themes emerged from students’ comments for the least liked features of this peer assessment system: quality and amount of feedback and overly critical peer feedback (See Table 3).

Table 3. Themes and supporting quotes from the post assessment survey for student least-liked features in the Web-based peer assessment model

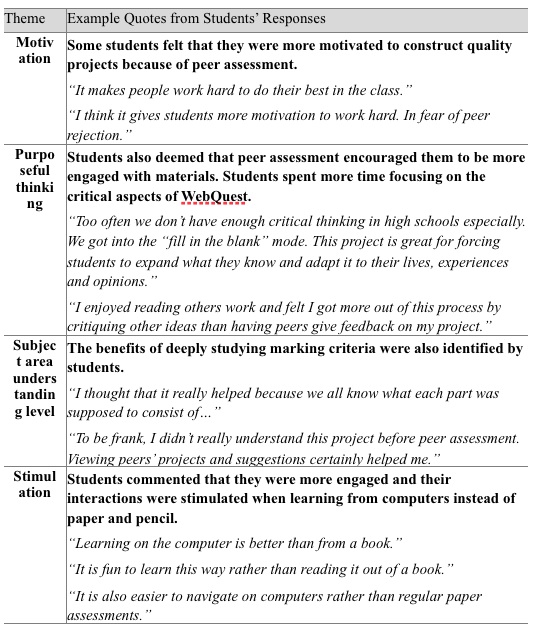

In the open-ended question on how students perceive that this peer assessment model facilitated their learning process, the acknowledged themes included: motivation, subject area understanding level, stimulation, and different perspectives (See Table 4).

Table 4. Themes and supporting quotes from the post assessment survey on how Web-based peer assessment model facilitated learning process

This study explored student perceptions of how a web-based peer assessment system facilitated their learning process, and identified students’ best- and least-liked features. The web-based peer assessment system addressed common concerns in peer assessment, such as peer pressure, time issues (management workload and student focus), and training students in interpreting marking criteria and gaining critical assessment skills. Findings of this study suggested that students were generally positive about peer assessment when a) peer pressure was controlled, b) feedback was accessed in a timely manner, and c) training was provided to help them understand what was required for a quality WebQuest.

Students in general recognized the value of peer assessment. Most students felt that they benefited from reviewing their peers’ work (M = 4.00; SD = .81). However, 21% of students (eight of thirty-eight) expressed dissatisfaction with the quality and amount of peer feedback in response to the least-liked feature question. These students asked for more detailed and constructive feedback. To gain a better understanding, we evaluated both the projects and the peer feedback for those students. Surprisingly, we discovered that five of these eight students did excellent work on their projects. These students’ initial high performance may explain why they didn’t receive much feedback beyond the statement “good job.” This result suggests that the feedback portion of peer assessment may contribute less to students who initially perform well on the task. It may also explain the variability in responses to items 1-4 that addressed the perceived benefit of the peer assessment activity (Table 1). This possible explanation was echoed in one student’s comment: “Sometimes peer assessment isn’t helpful if you already did a good job.” Future studies should investigate the differential impact of peer assessment on students at different initial performance levels.

Positive comments regarding anonymity in the post-assessment questionnaire suggest that peer pressure was better controlled with this web-based approach. In fact, peer pressure was reduced to such a level that students even felt the feedback was “overly critical.” To better understand this finding, we reviewed those comments and regarded most as insightful and constructive, although we agreed that some could have been phrased more tactfully. For example, one assessor commented on his peer’s WebQuest: “I don’t think you really understand what WebQuest is … I wouldn’t call your project a ‘WEB’ Quest as there were no web links provided.” While we agreed with the assessor’s opinion, we could understand how the assessee might be disturbed by these blunt comments from his/her peer. Preparation on how to provide constructive feedback in a more supportive way may be needed in future peer assessment studies to avoid student frustration.

In this peer assessment activity, students’ projects and feedback were managed by the scripting underlying the website. Unlike in paper-based peer assessment, students in this study could access feedback as soon as it was submitted. We speculate that immediate feedback makes it easier for students to focus on the assignment and follow through on using the feedback. Students appreciated the “quick and organized way of submitting our projects and getting feedback.” One of the drawbacks of peer assessment noted in the literature was the excessive amount of time required to manage the process. The use of a web-based approach allowed this portion of the task to be automated and streamlined.

Training students in how to evaluate their peers appeared to be effective. Most students agreed that peers were able to identify weaknesses in their projects and that peer comments helped them to find and fix the problems. However, several students still expressed doubts about the ability of peers to provide critical assessment and stated they preferred teacher feedback. This preference for teacher assessment over peer assessment was also reported by other studies. For example, in Orsmond and Merry’s study (1996), students were sceptical about the meaningfulness of peer comments. Some students believe that teachers should be the only judges of their performance and the only ones who award grades; what matters is what teachers (not peers) think about their projects. However, we believe students need to understand that the benefits of peer assessment are not limited to receiving peer comments. As suggested by Orsmond et al. (2000), peer assessment is a process “to have meaningful dialogue with the student” and “to think about the process of carrying out the assignment rather than just the product” (p. 24).

Various aspects of this approach may contribute to student learning. For example, students in this study were trained to understand what was required and how they should assess their peers’ work, which may lead to an improved awareness of one’s own performance (Freeman, 1995; Mehrens, Popham & Ryan, 1998). Moreover, viewing peer work tends to help students identify the strengths and weaknesses of their own work. Peer comments and suggestions may assist students in identifying misconceived knowledge and re-evaluating their projects; these activities involve a series of cognitive restructuring processes such as “simplification, clarification, summarizing, and reorganization” (Topping & Ehly, 1998, p. 258). Informing students of the educational purpose of peer assessment may help them look beyond comparing the quality of peer and teacher comments and open their minds to other aspects of this learning process. Furthermore, for this group of student teachers, peer assessment is a formative evaluation method they can use with their own students.

In this study, participant responses to survey items and open-ended questions tended to suggest that students generally recognized the merits of peer assessment in learning and agreed that they were motivated for better personal performance by examining peers’ work more closely. Students commented that peer assessment facilitated their critical thinking skills. We interpret this to mean that students were more purposeful in how they completed the WebQuest project. The peer assessment process promoted a more careful and systematic review of the project and supported greater understanding of more complex aspects of the assignment. Students reported that the peer assessment process not only deepened their understanding of the subject matter but also provided opportunities for viewing different perspectives, thoughts and ideas. In addition, students acknowledged that this web-based system stimulated their interactions with other students and facilitated learning.

One of the limitations of this study was the relatively small number of participants (n = 38). Future studies involving larger numbers of students are warranted. In addition, participants were undergraduate students enrolled in a face-to-face technology application course. It would be interesting to see whether findings would be similar with different groups of students and instructional settings, for example, graduate students enrolled in online classes. Moreover, participants in this study experienced only this specific technology-assisted peer assessment system. As suggested by the literature (Topping, 1998), peer assessment models may vary dramatically. Peer assessment can be formative or summative, web-based or paper-based. It can also be one way (students only play the role of assessors or assessees) or two ways (students act in both roles, as assessors and as assessees). Peer-assessed products may also differ in type, from posters to written essays, from medical practices to oral presentations. Since this study does not account for all peer assessment practices, further studies with other peer assessment models are needed. It is not currently known whether this web-based peer assessment process will promote the learning of assessment skills or improve the quality of students’ projects. Therefore, while this study mainly focused on exploring students’ perceptions, future studies providing empirical data are needed to examine the influence of this technology-facilitated peer assessment module on students’ performance.

Conclusion

In conclusion, this exploratory study of students’ perceptions revealed that students were generally positive toward this technology-facilitated peer assessment module. Some students agreed that this anonymous system protected their identities, which made it easier for them to provide honest and sincere comments. Students also acknowledged that this web-based model was fast and easy to use, as compared to paper-based systems. In addition, students recognized the positive influence of training on assessment as they commented that they benefited from learning how to conduct critical assessment, reading peer feedback, and viewing peer projects. Findings of this study suggested a technology-facilitated peer-assessment model may be effective in addressing three common issues in peer assessment: peer pressure, time of engagement, and students’ capacity for critical assessment.

References

A rubric for evaluating WebQuest. (n.d.). Retrieved February 14, 2008, from http://webquest.sdsu.edu/webquestrubric.html

Bruning, R. H., Schraw, G. J., Norby, M. N., & Ronning, R. R. (2004). Cognitive psychology and instruction (4th ed.). Upper Saddle River, NJ: Pearson.

Cheng, W., & Warren, M. (1997). Having second thoughts: Student perceptions before and after a peer assessment exercise. Studies in Higher Education, 22, 233-239.

Cheng, W., & Warren, M. (1999). Peer and teacher assessment of the oral and written tasks of a group project. Assessment & Evaluation in Higher Education, 24(3), 301-314.

Davies, P. (2002). Using student reflective self-assessment for awarding degree classifications. Innovations in Education and Teaching International, 39(4), 307-319.

Dochy, F., Segers, M., & Sluijsmans, D. (1999). The use of self-, peer and co-assessment in higher education: a review. Studies in Higher Education, 24(3), 331-350.

Falchikov, N. (1995). Peer feedback marking: developing peer assessment. Innovations in Education and Training International, 32(2), 175-187.

Freeman, M. (1995). Peer assessment by groups of group work. Assessment & Evaluation in Higher Education, 20(3), 289-300.

Hanrahan, S. J., & Isaacs, G. (2001). Assessing self- and peer-assessment: The students' views. Higher Education Research & Development, 20(1), 53-70.

Li, L., & Steckelberg, A. L. (2004, October). Using peer feedback to enhance student meaningful learning. Paper presented at the Association for Educational Communications and Technology, Chicago, IL.

Li, L., & Steckelberg, A. L. (2005, October). Impact of technology-mediated peer assessment on student project quality. Paper presented at the Association for Educational Communications and Technology, Orlando, FL.

Lin, S. S. J., Liu, E. Z. F., & Yuan, S. M. (2002). Student attitudes toward networked peer assessment: case studies of undergraduate students and senior high school students. International Journal of Instructional Media, 29(2), 241-254.

March, T. (2003). The learning power of WebQuests. Educational Leadership, 61(4), 42-47.

Mehrens, W. A., Popham, W. J., & Ryan, J. M. (1998). How to prepare students for performance assessments. Educational Measurement: Issues and Practice, 17(1), 18-22.

Miles, M. B., & Huberman, A. M. (1994). Qualitative data analysis (2nd ed.). Thousand Oaks, CA: SAGE.

Orsmond, P., & Merry, S. (1996). The importance of marking criteria in the use of peer assessment. Assessment & Evaluation in Higher Education, 21(3), 239-250.

Orsmond, P., Merry, S., & Reiling, K. (2000). The use of student derived marking criteria in peer and self assessment. Assessment & Evaluation in Higher Education, 25(1), 23-28.

Orsmond, P., Merry, S., & Reiling, K. (2002). The use of exemplars and formative feedback when using student derived marking criteria in peer and self-assessment. Assessment & Evaluation in Higher Education, 27(4), 309-323.

Pope, N. (2001). An examination of the use of peer rating for formative assessment in the context of the theory of consumption values. Assessment & Evaluation in Higher Education, 26(3), 235-246.

Robinson, J. (1999). Computer-assisted peer review. In S. Brown, J. Bull, & P. Race (Eds.), Computer-assisted assessment in higher education (pp. 95-102). London: Kogan Page.

Topping, K. (1998). Peer assessment between students in colleges and universities. Review of Educational Research, 68(3), 249-276.

Topping, K., & Ehly, S. (1998). Peer assisted learning. Mahwah, NJ: Lawrence Erlbaum Associates.

Topping, K. J., Smith, E. F., Swanson, I., & Elliot, A. (2000). Formative peer assessment of academic writing between postgraduate students. Assessment & Evaluation in Higher Education, 25(2), 149-169.

Vygotsky, L. S. (1978). Mind in society. Cambridge, MA: Harvard University Press.

WebQuest Overview. (n.d.). Retrieved May 25, 2007, from http://webquest.org/index.php