Authors

Alan Bain is a Senior Lecturer in Inclusive Education at Charles Sturt University, Australia. Correspondence regarding this article can be sent to: abain@csu.edu.au

Robert John Parkes is a Lecturer in Learning and Teaching at Charles Sturt University, Australia.

Abstract: In this position paper, reservations are presented regarding the potential of knowledge management (KM) as it is currently applied to the learning and teaching activity of schools. We contend that effective KM is contingent upon the explication of a deep and shared understanding of the learning and teaching process. We argue that the most important transactions in schools, those related to learning and teaching, are frequently the least explicated. Further, where such explication does occur, it is rarely specific enough to generate the kind of meaningful data required to make timely improvements in the learning experience of individual students. Our intent is to inject a cautionary note regarding current conceptualizations of KM in education and to focus the KM discussion on potentially more valid applications in school settings. We offer strategy and examples that can be employed to address the reservations described herein as well as build the kind of professional culture of practice in schools that is more conducive to effective KM.

Résumé: Dans cet article d’opinion, nous faisons part des réserves relatives au potentiel de la gestion du savoir comme elle est présentement exercée dans les activités d’apprentissage et d’enseignement des écoles. Nous croyons qu’une gestion efficace du savoir relève de l’explication d’une compréhension profonde et conjointe des processus d’apprentissage et d’enseignement. Nous prétendons que les transactions les plus importantes dans les écoles, celles liées à l’apprentissage et à l’enseignement, sont souvent celles qui sont le moins expliquées. De plus, lorsqu’une explication est donnée, elle est rarement suffisamment précise pour générer le type de donnée importante nécessaire pour apporter des améliorations appropriées dans l’expérience d’apprentissage de chaque étudiant. Notre objectif consiste à faire une mise en garde à propos des conceptualisations actuelles de la gestion du savoir dans l’éducation et de faire en sorte que la discussion sur la gestion du savoir mette l’accent sur des applications qui pourraient être plus valides dans le contexte des écoles. Nous faisons part de stratégies et d’exemples qui peuvent être utilisés pour aborder les réserves décrites plus tôt et pour élaborer le type de culture professionnel qui sert mieux une gestion efficace du savoir.

Over the last five years there has been a fast-developing interest in the application of Knowledge Management (KM) principles and practices to the field of education (e.g., Petrides, Guiney & Zahra, 2002; Petrides & Nodine, 2003; Protheroe, 2001; Sallis & Jones, 2002; Thorn, 2001). Petrides and Nodine describe the widespread use and potential of KM in mission building and strategic planning, enrolment planning, academic counselling, and student learning and behaviour management. These authors provide a representative example of the latter that is particularly informative given the present discussion. Petrides and Nodine describe the way a system employed to track suspensions can be used to identify the antecedents to a student’s behavioural difficulty. In the example, data on attendance, time and class are articulated in a KM system and combined with a collaborative review process to identify a relationship between student behaviour and a beginning teacher experiencing difficulty with classroom management. In another example, Celio and Harvey (2005) describe an interaction between a school superintendent and board members where data on student achievement, and student and faculty retention, is employed to benchmark the performance of local schools against those of other districts and the state. This data is then drilled down to examine both math and reading performance at an individual school.

The proposed benefit of these KM applications is the provision of new or additional insight regarding the potential sources of a behavioural difficulty, or the benchmarking of school and district performance for comparison purposes and self-analysis. There are numerous similar examples described in the literature in relation to individual education planning, curriculum evaluation and high stakes testing where state test data, curriculum objectives or educational goals are analyzed in relation to student performance for perceived educational benefit (e.g., Mason, 2003; Thorn, 2001). These examples are offered as a way KM can improve school performance by providing a deeper, evidence-based understanding of how schools function. This understanding, it is argued, can be employed for problem-solving in curriculum, instruction, and professional development.

We contend that the conceptualization represented in the examples described, and many others, significantly oversimplifies the KM challenge in and for schools. Further, we believe that such a conceptualization is unlikely to yield the anticipated outcomes of KM and the benefits expected of the technologies that drive it.

In support of this position we pursue three basic ideas in this paper. First, we propose that useful KM is dependent on making the critical work processes and patterns of an organization explicit (Petrides & Nodine, 2003). As such, the utility and ultimate success of any KM approach for schools requires an articulated conceptualization of educational practice that can be meaningfully represented in a KM system. We contend that at present the overwhelming majority of schools do not possess the kind of articulated high efficacy practice required to define the most critical attributes and transactions in their work patterns necessary to realize the potential of KM. Further, the data that is produced (described here as distal) turns out to be too removed from the core activity of teaching and learning. This kind of distal data (e.g., high stakes testing, attendance, behavioural incidents) is not sufficiently focused on the critical work processes and patterns of schooling to deliver the benefits expected from KM. Schools instead need to provide data that emerges directly from the activity of students and teachers in classrooms and is proximal to the core activity of teaching and learning.

Second, for KM to be effective, we argue that feedback systems need to provide data that is available in, or near to, real time if student learning solutions and program modifications are to be developed in a timely fashion. While schools do generate some data in this way (e.g., attendance, behavioural incidents), information about the specifics of learning and teaching (e.g., the way lessons are taught, the way students are grouped within classes, the practices used to implement curriculum and the ongoing, just-in-time performance of teachers as well as students) is rarely available. The generation of such data requires a significant change in the way schools manage and deliver feedback.

Third and finally, we describe steps schools can take to develop the articulation of their core activity required to make KM viable. These steps include building a shared conception of learning and a culture and body of practice. Examples will be used to describe these approaches and the tools that can emerge from taking those steps.

At the core of our position is a question about whether the data that is most likely to appear in contemporary school KM systems is the data necessary to make a difference to learning and teaching in schools. According to Thorn (2001), “the most basic unit of analysis in a KM system (sometimes referred to as an atomistic unit) should drive data collection efforts and processes” (p. 35). Thorn describes student data as an example of an atomistic unit. This includes student performance and demographic information and instructional outcome data related to individual students. While this kind of data is essential for any KM system focused on student learning, useful answers to questions about student growth and school performance require much more detailed process information about how teachers are teaching and students are learning. This includes data on how any given lesson or approach is working with different groups of students at multiple points in time, or data describing whether the research-based characteristics of a particular teaching approach are being implemented effectively in a manner that represents the best choice for a given standard or learner expectation. This kind of information is the atomistic data of the core activity of schools and is ultimately required to problem solve performance on high stakes tests or other summative outcomes of classroom learning (see Smith, Lee & Newmann, 2001, for a discussion of the central importance of instructional choices on student achievement).

Under current conditions schools are much more likely to be employing data gathered at a distal rather than a proximal level in their knowledge management activity. By distal we mean data that, while related to learning and teaching, is nonetheless removed from the specific classroom transactions and work patterns that are critical to the learning process. Distal level data includes such things as student demographics, attendance, curriculum completion, behavioural incidents and suspensions, and the results of high stakes or achievement tests. We view this category of data as distinct from the proximal data that is foundational to the transactions of teaching and learning. Proximal data includes documentation of the quality of pedagogical implementation, student academic engagement, the differentiation of day-to-day instruction related to content, instructional process and assessment (Tomlinson, 2001), and the way students respond to that differentiation.

We acknowledge the importance and utility of data gathered at the distal level. Clearly, students need to be present for learning to occur. The timing and location of behavioural incidents may point to ecological factors that contribute to behaviour problems. Variability in student performance in high stakes testing may point to curricular needs, strengths and weaknesses. However, when KM is confined to analysis derived from distal level data, the resultant analysis and subsequent problem-solving is invariably highly speculative. In fact, it is from the space where this speculation is focused (the specifics of how teachers are teaching and students are learning) that the important atomistic or proximal data about teaching and learning needs to emerge.

The KM system capacity required for a fine grained proximal analysis stands in sharp contrast to the Petrides and Nodine (2003) exemplar where demographic and attendance data is lined up with the classroom schedule to identify a needy beginning teacher. This kind of analysis provides information that, in the powerful informal culture of schools, would already seem to be undertaken by insightful teachers in staff and meeting rooms as part of their typical faculty room conversation.

By way of contrast, when detailed information is available about the learning and teaching process many alternative explanations emerge as attributes to student learning needs and difficulties. An example may serve to illustrate this point. Let us assume that a teaching team is experiencing difficulty implementing cooperative learning based upon reflections from teachers and quantitative data derived from ongoing classroom observations represented in its KM system. The teaching team leader may develop one, or preferably more, hypotheses about why this is the case. Some of those hypotheses may be quite obvious. It may be that the team has a number of new or early career teachers, or a disproportionate number of more needy students who require additional support. However, by cross-referencing student assessment performance in cooperative learning lessons with the data on the actual design and implementation of those lessons the team leader may find that while the most obvious explanation for the cooperative learning problem was the experience of the teachers, in point of fact, a deeper problem exists with the design of a given set of lessons. It may be that the problem is more related to the way the curriculum (and specifically features of the design of cooperative learning lessons) is aligned with the needs of a particular group of students. This problem may have been exacerbated by the inexperience of the teachers, but in fact was a curriculum/lesson design issue. A careful analysis could result in some redesign or further development of the curriculum (Bain, 2005).
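The cross-referencing described in this example can be sketched in a few lines of Python. This is a minimal illustration only: the lesson records, the `individual_accountability` design feature, the teacher experience labels and all scores are hypothetical values invented for the sketch, not data from any school or from the system described in this paper.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical lesson-design records: the experience level of the teacher
# and whether the lesson design included individual accountability.
lessons = {
    "L1": {"teacher_experience": "early", "individual_accountability": True},
    "L2": {"teacher_experience": "early", "individual_accountability": False},
    "L3": {"teacher_experience": "experienced", "individual_accountability": True},
    "L4": {"teacher_experience": "experienced", "individual_accountability": False},
}

# Hypothetical student assessment scores (percent), keyed by lesson.
scores = {
    "L1": [78, 82, 80],
    "L2": [55, 60, 62],
    "L3": [84, 80, 82],
    "L4": [58, 61, 64],
}


def mean_score_by(attribute):
    """Pool student scores by one lesson attribute and average each pool."""
    grouped = defaultdict(list)
    for lesson_id, design in lessons.items():
        grouped[design[attribute]].extend(scores[lesson_id])
    return {value: round(mean(vals), 1) for value, vals in grouped.items()}


# Hypothesis 1: the difficulty follows teacher experience.
by_experience = mean_score_by("teacher_experience")
# Hypothesis 2: the difficulty follows a feature of lesson design.
by_design = mean_score_by("individual_accountability")

print(by_experience)
print(by_design)
```

In this invented data set the scores differ far more by the design feature than by teacher experience, which is the pattern the team leader in the example would be looking for. Neither comparison is possible unless the design of each lesson has been recorded alongside student results.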

The point we emphasize here is that KM systems need to be able to store and manage information on instructional design and implementation in order to provide data that allows a deeper analysis (Spector, 2002). It is at the proximal, classroom level, the place where instruction is implemented and responded to by students, that the critical work processes and patterns of schooling occur. It is also from this place that data for KM systems needs to be derived and made explicit.

A first central question to explore is: Where does proximal data on the design of instruction and classroom implementation come from and does such data actually exist in schools? The data mining described in the previous example is only possible when a school has a collective sensibility about such things as cooperative learning, grouping approaches or differentiated instruction explicated in a manner conducive to representation in a database structure. For example, the term cooperative learning can mean anything from breaking students into groups to discuss a given topic to a highly articulated process requiring individual student accountability, planned task structure and interdependence (e.g., Walters, 2000). The presence or absence of any of these characteristics can have a stunning differential effect on student achievement (Slavin, 1990). To provide the kind of analysis of the effects of cooperative learning described in the previous example a school would have to possess a sophisticated knowledge of cooperative learning processes and represent those processes in its KM system.

Herein lies the challenge for schools and the source of a second central question: How do we establish the abstracted notion of learning and teaching required to define what it means to teach, to learn and to be a school? A database is about fields and tables that contain information. In a school context, what we name those fields and tables and how we choose to define them needs a definition established within a school, and based on common values and beliefs about learning and teaching. The data fields need not always be filled with numbers but they do need to be filled with an articulation of what a school believes about its core activity (Bain, 2004). It is important to emphasize that this is not to suggest the absolute “one best way” to teach and learn, rather that there are many possible ways but those ways need to be defined collaboratively in accordance with a school’s shared beliefs and values.

While it may be desirable to assume that this level of articulation currently exists because of its presence in educational theory and research, multi-generational investigations of the professional culture of actual schools strongly indicate that this is not the case (Goodlad, 1984; Lortie, 1975; McLaughlin & Talbert, 2001; Sizer, 1984; Tyack & Cuban, 1995). These authors have found decade over decade that, while there are individual teachers with advanced and defined professional knowledge, schools, as a rule, lack the cultures of practice necessary to articulate the critical work processes and patterns in their organizations (Elmore, 1996).

Few schools have decided what they are about with the level of clarity required to make those processes and patterns explicit. In the majority of schools, values and beliefs about learning frequently reside in the philosophical realm (Fullan, 1997), and thus lack the definition that could make them meaningful attributes in a relational database and ultimately serve as tools for KM. Further, the lack of professional cultures of practice in schools makes it unlikely that the majority of teachers will possess the sophisticated and detailed knowledge of practice required to develop the hypotheses and interrogate the data in a KM system at a level required for complete and timely solutions to learning and teaching needs. This precludes the kind of everyday fine-grained analysis of learning necessary to realize the full potential of KM in schools.

Our second contention pertains to the kind of school feedback systems required to make KM data possible. Assuming a school did possess the kind of articulation of learning and teaching required for KM, what kind of feedback system could generate such data in real time or near to it to provide the information necessary for timely solutions? How would we get the data about classroom implementation of pedagogy for our KM system? How would teachers reflect on their practice in a timely fashion? How would curriculum be available to teachers and students for feedback in transparent ways?

Feedback would need to be deeply embedded in the day-to-day activity of the school, part of what it takes for every member of the community to do their job. In this kind of emergent feedback system, peers and supervisors would need to be engaged in an ongoing cycle of observation and reflection, and students would need to give frequent feedback to their teachers. Curriculum would need to be available for constant adaptation.

Under these circumstances, feedback is not something we would “do to a school, a teacher, or a student.” Instead, feedback is part of what it takes to be that school, teacher or student. This means that schools would need to find a way to integrate cycles of classroom observation, peer-mediated feedback among teachers and the tools to document and manage this information into their broader designs. The prevailing literature on school feedback, especially as related to teaching, would suggest that while the practices of observation and rating are common (e.g., Manatt, 1997), their detailed integration into valid, time-dependent feedback systems is rare (e.g., Davis, Ellett & Annunziata, 2002; Murnane & Cohen, 1986; Riner, 1992; Tucker, 1997). Even in the most cutting edge school reform models, these kinds of feedback models are uncommon (e.g., Berends, Bodilly & Nataraj Kirby, 2002).

There can be no more important or purposeful application of KM than in its use to customize the school learning experience of individual students. When we use a particular teaching approach, teach to a particular curriculum outcome or simply record the instance of a student turning in homework, we create the pre-conditions to turn the data of schooling into metadata that can be used to discover the effectiveness of a given pedagogy, curriculum or learning experience. Given the potential of KM, what steps can schools take to make their critical work processes explicit and transparent and capture those transactions in KM systems? The following section offers three interconnected possibilities in this regard.

A learning statement describes the school’s position on learning and how it can best serve its students. Such a statement articulates a school’s mission and vision in more practical terms and can lead to the adoption of bodies of professional practice. Such a statement can be generated from within the community based upon a needs assessment and values clarification process. Following are examples of inclusions that could appear on a learning statement (Bain, 2004).

These directional statements set the scene for assigning value to specific pedagogies, curriculum characteristics and organizational structures necessary to build cultures of professional practice. They provide a term of reference for how a school will build curriculum, and the way the school will be organized to fulfill its mission.

A learning statement can be used to drive the development of a body of professional practice within a school by using its inclusions to identify those specific approaches to learning and teaching that the school believes will best serve its students. A body of practice represents the compendium of teaching and learning approaches a school believes best represents its learning statement. By identifying and then subsequently clarifying well-researched approaches consistent with the beliefs articulated in the learning statement, every school can put its “stake in the ground” with regard to its approach to learning and teaching. With a learning statement and body of practice a school can begin to capture the potential of KM. For example, if a school believes learning is cooperative, then by specifying a commitment to a particular cooperative teaching approach it can transform its philosophical commitment to working together into a focused pursuit of specific pedagogical approaches and their transactions. This means being able to identify the salient features of the pedagogy, how groups are constituted, how instruction is designed and how growth is represented. When these features become articulated it then becomes possible to represent that articulation in a knowledge management system. For example, the process of managing group grades and scores can be addressed with a database that is built to represent the features of the cooperative learning approach. This kind of tool can be connected to gradebook and curriculum authoring tools for designing cooperative learning lessons and feedback mechanisms that generate the meaningful data and metadata that make KM possible.
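One way such an articulation might be carried into fields and tables is sketched below using Python's built-in `sqlite3` module. The table names, field names and scores are assumptions made for illustration; they are not the schema of the tools shown in the figures. The three design fields follow the characteristics of cooperative learning named earlier (individual accountability, planned task structure and interdependence), and the group score is computed as a simple mean, which is also an assumption rather than the scoring rule of any particular cooperative learning approach.

```python
import sqlite3

conn = sqlite3.connect(":memory:")

# Hypothetical schema: one table for the design of a lesson, one for the
# scores of students working in groups within that lesson.
conn.executescript("""
CREATE TABLE lesson (
    lesson_id INTEGER PRIMARY KEY,
    pedagogy TEXT NOT NULL,
    individual_accountability INTEGER NOT NULL,  -- 1 = present, 0 = absent
    planned_task_structure INTEGER NOT NULL,
    interdependence INTEGER NOT NULL
);
CREATE TABLE group_score (
    lesson_id INTEGER NOT NULL REFERENCES lesson(lesson_id),
    group_name TEXT NOT NULL,
    student TEXT NOT NULL,
    score REAL NOT NULL
);
""")

# Invented sample data for a single cooperative learning lesson.
conn.execute("INSERT INTO lesson VALUES (1, 'cooperative learning', 1, 1, 1)")
conn.executemany(
    "INSERT INTO group_score VALUES (?, ?, ?, ?)",
    [
        (1, "A", "s1", 70.0),
        (1, "A", "s2", 80.0),
        (1, "B", "s3", 90.0),
        (1, "B", "s4", 70.0),
    ],
)

# Group scores for the lesson, here taken as the mean of member scores.
rows = conn.execute(
    "SELECT group_name, AVG(score) FROM group_score "
    "WHERE lesson_id = 1 GROUP BY group_name ORDER BY group_name"
).fetchall()
group_means = dict(rows)
print(group_means)
```

The point of the sketch is that the fields in the `lesson` table can only be named once a school has decided which features of the pedagogy it values.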

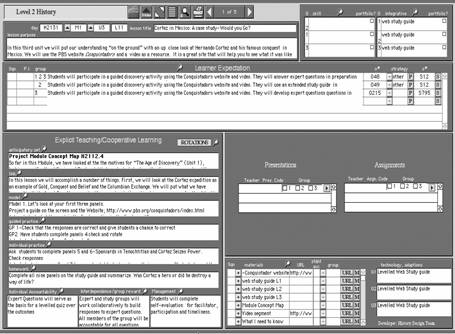

Figure 1 describes a lesson planning tool that includes fields for articulating the essential characteristics of pedagogy, for recording the way in which instruction is differentiated and how different groups will pursue different learner outcomes. These fields are a direct product of the school’s learning statement and body of practice and create the opportunity for teachers and students to design, manage and implement the key work processes of curriculum design and implementation. They also provide the opportunity for the kind of analysis described in our previous example.

For example, the left hand side of the layout entitled “Explicit Teaching/ Cooperative Learning” describes the key attributes of the lesson’s design. The data in these fields provide the kind of specific information necessary to realize in practice the experience of the teaching team described previously. Fields describe the characteristics of the pedagogy and the way in which it will be implemented in a differentiated classroom. Used in conjunction with student and teacher performance data it becomes possible to explore multiple hypotheses about classroom learning based upon a deep explication of the learning and teaching as they are described in the software. A teacher can look at the design of instruction, the extent to which it is adequately differentiated and then place that detailed understanding within the context of the feedback they are receiving from the classroom.

Figure 1. Lesson Planning Tool

When a school can articulate its learning priorities and its body of practice, it can align those values with the way it gives feedback. For example, skills with cooperative learning can be formally and specifically recognized as a focus in the way teachers reflect upon their own performance, share feedback with peers or receive feedback from students and administrators.

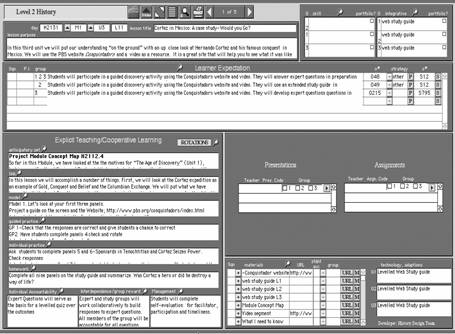

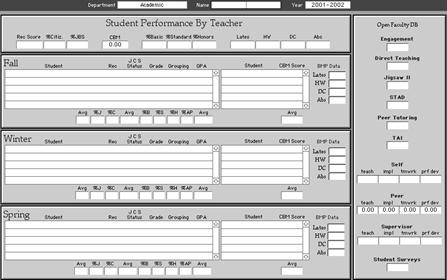

Figure 2 describes a summary layout in a feedback database that enables teachers to look at the quality of their curriculum implementation and relate it to student performance.

The fields on the right described under the heading “Open Faculty DB” include summary data describing teachers’ use of the school’s body of practice. This includes the extent to which those pedagogies have been implemented with integrity, as well as self, peer and supervisor feedback about the teacher’s classroom practice. By clicking on the fields the teacher can drill down to a specific lesson to look at both data and a reflection about its implementation. This information can then be related to the student grade and social growth data described on the left hand side of the layout. The labels Jigsaw and STAD (Slavin, 1990) pertain to cooperative learning approaches. Their representation in the database is an expression of the school’s assignment of value to those pedagogies and their inclusion as an area of focus when delivering feedback.

Figure 2. Student and Teacher Performance Summary

Data gathered at the classroom level on quality of teaching and student performance can be mined at multiple levels in the school (teaching team, lower, upper school, etc.) and across time windows that range from days to years. The result, when intermediated with data from the kind of tools described in Figure 1, is the possibility to look in detail at the way instruction is implemented as well as how students, peers and administrators respond to it. We contend that it is at this level and with this kind of KM system capacity that the core activity of the school can be subjected to the most useful application of KM.
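Mining the same classroom data at different levels and over different time windows might be sketched as follows. The observation records, team names, dates and the 1 to 5 implementation rating scale are all hypothetical and are used only to show the shape of the query.

```python
from collections import defaultdict
from datetime import date
from statistics import mean

# Hypothetical classroom observation records:
# (date observed, teaching team, implementation rating on a 1-5 scale).
observations = [
    (date(2006, 3, 1), "Team 1", 3),
    (date(2006, 3, 2), "Team 1", 4),
    (date(2006, 3, 2), "Team 2", 2),
    (date(2006, 4, 10), "Team 1", 5),
    (date(2006, 4, 11), "Team 2", 4),
]


def summarize(records, start, end, level):
    """Average ratings observed from start to end inclusive.

    level="team" groups by teaching team; any other value pools the
    whole school into a single group.
    """
    grouped = defaultdict(list)
    for day, team, rating in records:
        if start <= day <= end:
            key = team if level == "team" else "school"
            grouped[key].append(rating)
    return {key: mean(vals) for key, vals in grouped.items()}


# A one-month window at the teaching team level.
march_by_team = summarize(observations, date(2006, 3, 1), date(2006, 3, 31), "team")
# A one-year window at the whole-school level.
year_school = summarize(observations, date(2006, 1, 1), date(2006, 12, 31), "school")

print(march_by_team)
print(year_school)
```

The same records answer both questions; only the grouping key and the date range change.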

In both examples described in Figures 1 and 2 the relational database tools are part of a total KM system that enables the school in question to acquire information on its key transactions, manage that information and report it for multiple purposes ranging from student self-monitoring to faculty performance appraisal. The fields depicted in the diagrams emerged from the school’s articulation of its learning statement, body of practice and approach to feedback.

The details of the fields described in the layouts represent a sample of what actually constitutes the school’s critical work process. These transactions, because of their explication, can be represented in fields and tables in software tools. Most important is the recognition that the process of building a learning community capable of articulating its beliefs and values in practice preceded the development of the tools.

A school with such a clear sense of purpose can bring greater clarity to the roles of teachers, who in the contested world of educational practice are frequently placed in the impossible position of having to implement an apparently endless rolodex of innovation that is rarely accompanied by the support necessary to do so. The alignment between values, practice, roles and feedback can create the conditions where the use of knowledge management tools need not be seen as an additional responsibility because they are deeply embedded in the school’s overall design (Bain, 2005).

We contend that it is only with the assignment of value to teaching and learning, the alignment of those values with the way feedback happens in schools and the subsequent explication of teaching, learning and feedback in KM systems, as described in the examples, that the promise of KM can be realized in schools.

It is important to acknowledge that schools can and do use KM approaches without the clarity about learning and teaching described and advocated for in this paper. Educators can use KM approaches to organize electronic warehouses of content, to mine standardized test results or to look at trends in the business domain of schools (e.g., cafeteria receipts or purchase orders for school supplies; CETIS, 2002). These are not trivial applications, especially to the business managers, policy makers, and those engaged in a host of ex-post-facto analyses of the performance of schools and education in general. However, there is a much more compelling potential here that can assist us to achieve the outcomes expected from or with information technology in schools. To do so requires that schools:

A school capable of realizing these initiatives in practice can make KM a fully integrated part of the day-to-day life of teachers, students and schools. Instead of trying to work out what happened after the fact, doing the post mortem after the big state test, a KM paradigm based on the aforementioned initiatives could permit educators to discover the learning knowledge that emerges daily from the transactions of schooling.

Revisioning schools as more collaborative knowledge organizations requires that we bring sufficient definition to what we believe and do about teaching and learning in order to create the KM tools and approaches that can customize the learning experience in ways that extend far beyond the prevailing definition of these terms. Such definition would also permit much more specific research on approaches and tools that help schools to articulate a shared vision and then collectively use a KM system to support their teaching and learning goals. This would include deeper investigation of the ways in which KM tools, in their design and use, can capture well established research on teaching and learning to strengthen the connection between the educational use of information technology and student achievement.

In summary, the position expressed here recognizes the importance of KM and its potential to impact current practice in schools. However, the success of KM models will depend upon the co-evolution of a school’s capacity to articulate what it means by learning and teaching. We have also considered whether the current representation of KM in the literature will in fact realize the promise of this approach and what needs to be done to move KM into a position where it more adequately represents the core activity of schools.

Bain, A. (2005). Emergent Feedback Systems. International Journal of Educational Reform, 14(1), 89–111.

Bain, A. (2004). Secondary School Reform and Technology Planning: Lessons Learned from a Ten Year School Reform Initiative. Australasian Journal of Educational Technology, 20(2), 149–170.

Berends, M., Bodilly, S. J., & Nataraj Kirby, S. (2002). Facing the challenges of whole school reform: New American Schools after a decade. Santa Monica, CA: Rand.

Celio, M., & Harvey, J. (2005). Buried Treasure: developing a management guide from mountains of school data. The Center for Reinventing Public Education. Retrieved May 5, 2006 from http://www.crpe.org/pubs/pdf/BuriedTreasure_celio.pdf

CETIS (2002). Learning Technology Standards: An Overview. The Centre for Educational Interoperability Standards. Retrieved February 20, 2003 from http://www.cetis.ac.uk

Davis, D. R., Ellett, C. D., & Annunziata, J. (2002). Teacher evaluation, leadership and learning organizations. Journal of Personnel Evaluation in Education, 16(4), 287–301.

Elmore, R. (1996). Getting to scale with good educational practice. Harvard Educational Review, 66, 60–78.

Fullan, M. (1997). The Challenge of School Change: A Collection of Articles. Arlington Heights, IL: IRI/Skylight Training and Publishing. (ERIC Document Reproduction Service ED 409 640).

Goodlad, J. I. (1984). A place called school: Prospects for the future. New York: McGraw-Hill.

Lortie, D. C. (1975). Schoolteacher. Chicago, IL: University of Chicago Press.

Manatt, R. P. (1997). Feedback from 360 degrees: Client-driven evaluation of school personnel. School Administrator, 54, 8–13.

Mason, S. A. (2003). Learning from data: the role of professional learning communities. Chicago, IL: Wisconsin Center for Educational Research. (ERIC Document Reproduction Service No. ED476852).

McLaughlin, M. W., & Talbert, J. E. (2001). Professional communities and the work of high school teaching. Chicago, IL: The University of Chicago Press.

Murnane, R. J., & Cohen, D. K. (1986). Merit pay and the evaluation problem: Why most merit pay plans fail and a few survive. Harvard Educational Review, 56, 1–17.

Petrides, L. A., Guiney, S., & Zahra, S. (2002). Knowledge management for school leaders: An ecological framework for thinking schools. Teachers College Record, 104(8), 1702–1717.

Petrides, L. A., & Nodine, T. R. (2003). Knowledge management in education: Defining the landscape. The Institute for the Study of Knowledge Management. Retrieved March 27, 2005 from www.iskme.org/kmeducation.pdf

Protheroe, N. (2001, Summer). Improving teaching and learning with data-based decisions: Asking the right questions and acting on the answers. ERS Spectrum, Educational Research Service.

Riner, P. S. (1992). A comparison of the criterion validity of principals’ judgments and teacher self-ratings on a high inference rating scale. Journal of Curriculum and Supervision, 7(2), 149–169.

Sallis, E., & Jones, G. (2002). Knowledge management in education: Enhancing learning and education. London: Kogan Page.

Sizer, T. (1984). Horace’s compromise: The dilemma of the American high school. Boston: Houghton Mifflin.

Slavin, R. E. (1990). Cooperative learning: Theory, research, and practice. Englewood Cliffs, NJ: Prentice-Hall.

Smith, J. B., Lee, V. E., & Newmann, F. M. (2001). Instruction and achievement in Chicago elementary schools. Chicago, IL: Consortium on Chicago School Research.

Spector, J. M. (2002). Knowledge management tools for instructional design. Educational Technology Research and Development, 50(4), 37–46.

Thorn, C. A. (2001). Knowledge management for educational information systems: What is the state of the field? Educational Policy Analysis Archives, 9(47). Retrieved March 31, 2005 from http://www.epaa.asu.edu/epaa/v9n47/

Tomlinson, C. (2001). Differentiating instruction in the mixed ability classroom (2nd ed.). Upper Saddle River, NJ: Pearson.

Tucker, P. D. (1997). Lake Wobegon: Where all teachers are competent (or, have we come to terms with the problem of incompetent teachers?). Journal of Personnel Evaluation in Education, 11, 103–126.

Tyack, D., & Cuban, L. (1995). Tinkering toward utopia: A century of public school reform. Cambridge, MA: Harvard University Press.

Walters, L. (2000). Putting cooperative learning to the test: Harvard Education Letter Research online. The Harvard Education Letter. Retrieved October 20, 2004 from http://www.edletter.org/past/issues/2000-mj/cooperative.shtml

© Canadian Journal of Learning and Technology