Authors

Mandie Aaron, Ph.D., is an Educational Consultant in Montreal, Quebec.

Dennis Dicks, Ph.D., is an Associate Professor of Educational Technology at Concordia University in Montreal. Contact: 1455 de Maisonneuve W., Montreal, QC, H3G 1M8. Telephone: (514) 848-2424 x 2026. Email: djd@vax2.concordia.ca

Cindy Ives, Ph.D., is a Faculty Development Associate at McGill University in Montreal. Contact: cindy.ives@mcgill.ca

Brenda Montgomery, M.A., is a teacher at Selwyn House School. Contact: rilling@selwyn.ca

Teaching technologies offer pedagogical advantages that vary with specific contexts. Successfully integrating them hinges on clearly identifying pedagogical goals, then planning for the many decisions that technological change demands. In examining different ways of organizing this process, we have applied planning tools from other domains (Fault Tree Analysis and Capability Maturity Modeling) at the school and college levels. In another approach, we have examined attempts to broadly model the integration process at the university level. Our studies demonstrate that the use of a variety of tools and techniques can render the integration of teaching technologies more systematic.

Résumé: Teaching technologies offer pedagogical advantages that vary according to particular contexts. Integrating them successfully requires clearly identifying pedagogical goals and planning the many decisions that these technological changes demand. In considering different ways of carrying out this process, we have used planning tools drawn from other domains (fault tree analysis and capability maturity modeling) in both schools and colleges. From a different perspective, we have studied attempts to model the integration process broadly at the university level. Our studies show that using a variety of tools and techniques can make the integration of teaching technologies more systematic.

Supporters claim that the use of information technologies in education will increase communication among students and teachers, provide access to resources that might otherwise be unavailable, and encourage "authentic" learning as students access "real-world" data not provided by textbooks (Schrum, 1995). Given that most students can access various forms of information technology (MP3 players, cell phones, PDAs) almost anytime and anywhere, it makes little sense to exclude this part of their experience and ability (Tapscott, 1999) from their education. Together, these developments challenge the instructor's monopoly on sources of learning (Dicks, 2000) and can serve as a catalyst for an examination of pedagogy, perhaps moving practice from a didactic to a more collaborative approach (Becker & Ravitz, 1999; Dexter, Anderson, & Becker, 1999).

Attempts to integrate information technologies (IT) into teaching and learning have a history as long as the technologies themselves. They range in scale from simple classroom applications to projects covering entire school systems, sometimes focused on education at a distance, but increasingly promoting a 'blended' format: access at school, at work, at home, on the road. To a large degree, the logic driving technology integration has shifted from serving a circumscribed clientele (e.g., Continuing Education) to providing competitive advantage at the institutional level. In any case, as Ives (2001, Chapter Two) points out, the literature suggests that simply adding technology, or simply investing in hardware and connectivity, will not produce the promised benefits. Technology is by nature disruptive, and so demands new investments of time, money and space, changes in the way people do things, new skills, and so on. While the classroom innovator may make do, at the level of a school, college or university, technology integration involves broad groups of stakeholders: faculty, staff, technicians, librarians, administrators. "Making do" will not do. Bates (2000) suggests that project management, instructional design, team-based course development and other academic and administrative techniques perfected in distance education environments are crucial to the success of technology integration in a broader institutional context. He insists that success requires an appropriate match of educational technologies to strategic mission. Change on this scale requires coordinated planning.

In this paper we will examine several different approaches to planning for the integration of teaching technologies, drawing on literature from a wide range of disciplines. We adopt a very broad sense of teaching technology to include any computer application intended to facilitate teaching or learning. Our objective is to use our research findings to inform planners, particularly at the school, college or university level, about specific techniques for analyzing institutional needs, and about broader perspectives for modeling the change process.

Technology planning approaches generally fall into one of three categories: top-down, initiated by administrators; bottom-up, driven by the people delivering a product or service; or mixed, involving a bit of both. Top-down approaches may ensure adequate resources, but risk involving the grass-roots only superficially or even engendering resistance. In a bottom-up approach, the grass-roots may be better placed to understand and implement innovation, but enthusiasm may not compensate for a lack of physical and political support.

Instructors determine to a large extent what happens in the classroom (Hooper & Rieber, 1995). Fuller's (2000) research suggests that instructor acceptance plays a critical role in the successful use of computers in the classroom. However, other players (school boards, university administrators, government agencies) tend to control goal-setting, working conditions, performance evaluation, and the resource allocation that shapes these activities. Because technology compels instructors to collaborate with people outside the classroom, it can be perceived as a threat to the private practice of pedagogy. Effective planning means getting the top and bottom to communicate across their subcultures to build a shared set of ends and means.

Effective planning combines the advantages of the top-down and bottom-up approaches: leadership provides the mandate, the resources, and the coordination, yet recognizes the importance of local acceptance and empowerment. In one form of this approach, leadership provides the structure of reform and the grassroots work out the details. The State of Indiana provides an example of this type of planning: it dictates the general guidelines for the technology plan but leaves the details to each school (Indiana Department of Education, 2002). Similarly, the California K-12 Education Technology Master Plan for Educational Technology lists recommendations for schools, including acquisition of resources, equitable distribution, professional development, and evaluation of the plan (California Department of Education, 2004).

A limited body of evidence indicates that planning for teaching technology adoption does lead to better results, over and above the effects of funding, equipment acquisition and other resource allocations (Brush, 1999). Strategic planning tends to be limited to these resource issues, so it needs to be broadened to include adjustments to organizational culture (Ives, 2002, p. 148), thereby encompassing the broad range of factors that may affect the success of integration initiatives.

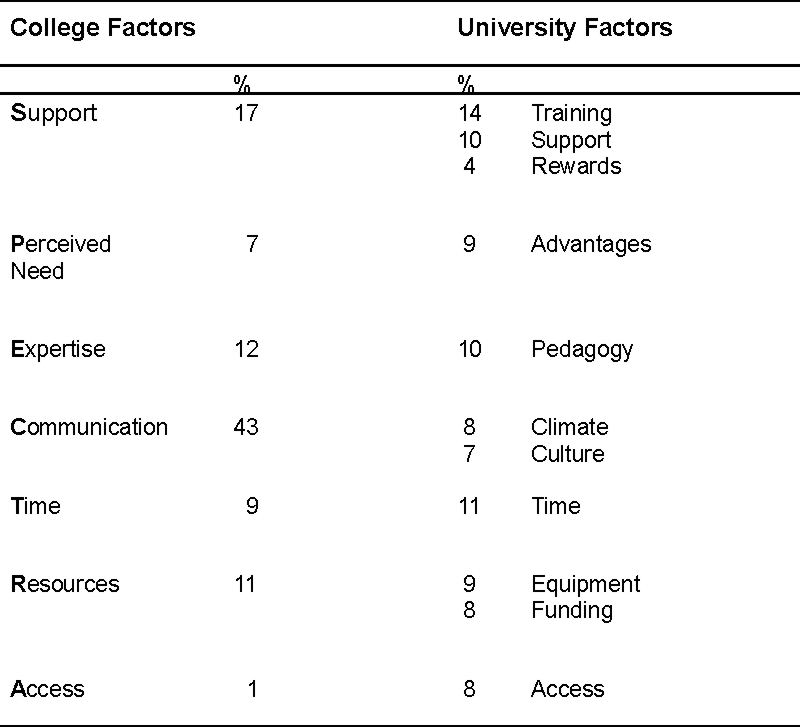

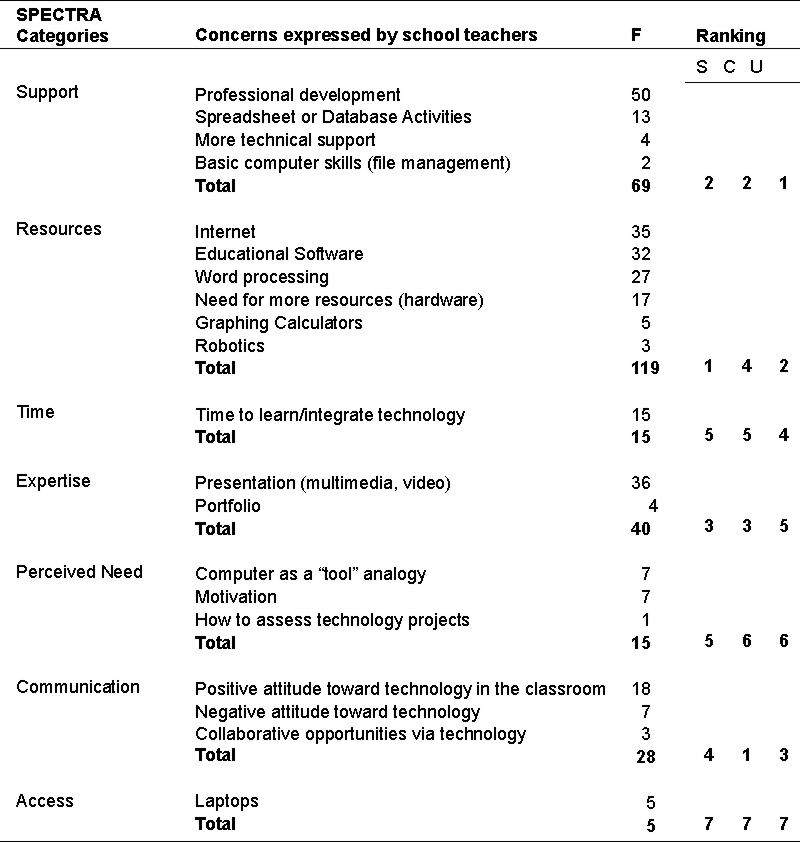

In summarizing research on attempts to introduce teaching technologies in schools, Leggett and Persichitte (1998) and others have identified a set of factors (TEARS: Time, Expertise, Access, Resources and Support) that reportedly influence the adoption of teaching technology. Aaron (2001), in research at the college level, has modified this to the "SPECTRA" list (Support, Perceived need, Expertise, Communication, Time, Resources, Access) by adding perceived need and, in particular, the key ingredient of communication. In a rigorous review of the literature on the integration of teaching technologies in universities, Ives (2002, p. 45) identified a long list of factors. Table 1 reproduces the data from Aaron and Ives.

Table 1.

Factors affecting teaching technologies integration in college and university contexts.

All of this information derives from qualitative reports on respondents' concerns, so the relative importance of these factors can only be assessed by frequency of citation rather than by a direct measure of importance. Thus, the percentages indicate the relative frequency of responses for each type of factor. The college data come from a study conducted by Aaron (2001), discussed in the Fault Tree Analysis section below. The university data come from an analysis of 136 articles published between 1995 and 2001 (Ives, 2002, p. 45). The factors are listed according to Aaron's "SPECTRA" categories on the left side of the table. Generally, the college and university samples express similar types of concerns; we return to this comparison in Table 6.
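Because the factors can only be compared by frequency of citation, each percentage in Table 1 is simply a category's share of all coded responses. The Python sketch below illustrates that calculation, assuming the coded responses are available as a list of SPECTRA category labels. The function name and the toy input are hypothetical; they do not reproduce Aaron's or Ives' data.

```python
from collections import Counter

# The seven SPECTRA categories (Aaron, 2001).
SPECTRA = ["Support", "Perceived need", "Expertise", "Communication",
           "Time", "Resources", "Access"]

def relative_frequencies(coded_responses):
    """Return each category's share of all coded responses, as a percentage.

    coded_responses: iterable of category labels, one per coded concern
    (a hypothetical input format, assumed for illustration).
    """
    counts = Counter(coded_responses)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no coded responses")
    unknown = set(counts) - set(SPECTRA)
    if unknown:
        raise ValueError(f"unrecognized categories: {sorted(unknown)}")
    # Categories that were never cited still appear, with 0%.
    return {cat: 100.0 * counts[cat] / total for cat in SPECTRA}

# Toy input for illustration only; not data from the studies.
sample = ["Communication"] * 5 + ["Support"] * 3 + ["Time"] * 2
for category, pct in relative_frequencies(sample).items():
    print(f"{category:15s} {pct:5.1f}%")
```

Note that such percentages rank categories by how often they are mentioned, not by how strongly respondents feel about them, which is why the comparisons that follow are framed in terms of relative frequency.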

Identifying these factors in a particular institution should help planners prepare for the integration of IT in that context. Ideally, a planner would like to know not only which factors concern the target group, but also their order of priority, so that resources could be optimally marshaled to effect change. How might a planner obtain this type of information?

We have experimented with two different types of analytic tools for determining the factors likely to influence adoption of IT in a specific context: Fault Tree Analysis and Capability Maturity Modeling.

Fault Tree Analysis (FTA) originated in safety engineering as an analytical tool for examining systems in terms of events and other types of impediments likely to prevent the attainment of the system's goals (Wood, Stephens, & Barker, 1979). Understanding possible sources of failure within a system provides opportunities for pre-emptive action to increase the likelihood of success.

Early on, educators adapted the FTA approach for the examination of educational systems (Stephens, 1972), and several such applications followed (Jonassen, Tessmer, & Hannum, 1999), though only on a modest scale. In this context, FTA offers two types of advantages: a) the process facilitates communication about proposed innovations in terms all stakeholders can understand, and b) FTA provides a practical outcome in the form of a graphical display of the people and events involved in the proposed innovation, and the relationships among them. Both these features should contribute to the accuracy and authenticity of the data collected on the factors influencing the adoption of significant change. On the downside, FTA demands substantial time and effort, so the validity of the analysis will be threatened if the scale of the system limits participation by the stakeholders.

FTA thus holds promise as a way of involving stakeholders in the planning of technological innovation, identifying where barriers to change may arise, and indicating which players could be engaged in seeking alternatives to overcome these barriers.

Aaron has conducted a major field trial of the FTA method in a college contemplating teaching technology initiatives (Aaron, 2001). The College, part of Quebec's province-wide network of first-level post-secondary institutions, offers a mix of pre-university and professional programs to 7,500 full-time and 3,500 part-time students in an urban setting. It describes its mission in terms of attaining excellence through both innovative educational approaches and the application of appropriate technology. At the time of the study, information technology took the form of centralized faculty resource rooms (five for 543 faculty) equipped with a few networked PCs. In addition, the College provided about 150 administrators, program directors and faculty with personal network access. Academic and IT administrators expressed interest in moving faculty towards web-based delivery of courses on campus, and in establishing a presence in distance education. They thought an FTA might serve this cause. Subsequently, Aaron and the College established an FTA Team consisting of two representatives of the academic administration, two representatives of technical services, four faculty members, and Aaron, the FTA researcher.

FTA procedures formulated by Stephens (1972) have been elaborated by others, including Jonassen et al. (1999). Aaron further modified the procedures to fit the time constraints of the FTA Team members and to permit the participation of faculty members (the focal system). The next section summarizes the steps followed in this particular Fault Tree Analysis.

As its first task, the FTA Team agreed upon a mission statement: briefly, that by the end of the academic year, the college would develop and implement a small set of web-based credit courses, and increase faculty awareness of potential uses of new teaching technologies.

Following the steps outlined below, the FTA Team then speculated about events that might prevent the achievement of this goal and, through a long series of meetings and e-mail exchanges, structured their ideas in the form of a Fault Tree. Towards the end of this work, the Team invited all 543 faculty members (the focal system) to evaluate a draft version of the Fault Tree by means of a printed survey (response rate 14%). Throughout the study, which lasted roughly eight months, the researcher also collected several other types of qualitative data regarding the activities and attitudes of the FTA Team:

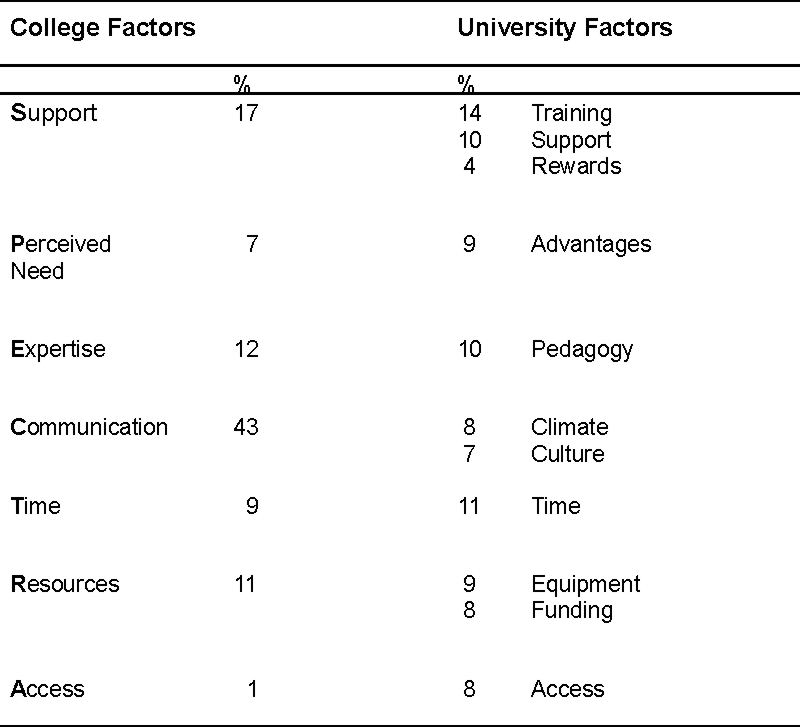

The Fault Tree constructed by the FTA Team identified a total of 228 events that might result in failure to reach the goals of the teaching technology initiative. Generally, the 75 faculty members responding from the focal group endorsed the failure events in the FTA Team's prototype tree. Figure 1 illustrates how a Fault Tree inter-relates failure events, using a small sample from this very large set. Each box in the figure represents a failure event identified by the FTA Team, and each event is seen as leading to the occurrence of events higher in the hierarchy. In more formal practice, the events would be joined by logical operators (e.g., AND/OR gates). Inserting gates was judged too complex a task for the population in this field trial.
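For readers unfamiliar with the formal notation, the sketch below shows how a fault tree with logical gates could be evaluated: under an OR gate a higher-level failure event occurs if any contributing event occurs, and under an AND gate only if all of them do. This is a minimal Python illustration; the event names and the gate structure are hypothetical and are not taken from the FTA Team's tree, which was built without gates.

```python
from dataclasses import dataclass, field
from typing import Union

@dataclass
class BasicEvent:
    """A leaf failure event whose occurrence is observed or assumed."""
    name: str
    occurs: bool

@dataclass
class Gate:
    """A higher-level failure event caused by its children via AND/OR logic."""
    name: str
    kind: str  # "AND" or "OR"
    children: list = field(default_factory=list)

Node = Union[BasicEvent, Gate]

def occurs(node: Node) -> bool:
    """Return True if the failure event represented by node occurs."""
    if isinstance(node, BasicEvent):
        return node.occurs
    results = [occurs(child) for child in node.children]
    if node.kind == "AND":
        return all(results)
    if node.kind == "OR":
        return any(results)
    raise ValueError(f"unknown gate type: {node.kind}")

# Hypothetical events, for illustration only.
no_training = BasicEvent("No release time for training", occurs=True)
no_support = BasicEvent("No technical support staff", occurs=False)
no_access = BasicEvent("Too few networked PCs for faculty", occurs=True)

# Faculty lack expertise if either contributing event occurs.
expertise_gap = Gate("Faculty lack expertise", "OR", [no_training, no_support])
# Assumed: courses fail only if faculty lack both expertise and access.
top = Gate("Web-based courses not implemented", "AND", [expertise_gap, no_access])

print(occurs(top))  # True
```

The gates make pre-emptive action easier to target: in this toy tree, removing the access problem alone is enough to prevent the top event, because the AND gate then cannot be satisfied.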

Aaron coded the 228 failure events into what she has called the SPECTRA categories (Table 1). Basically, her analysis takes the list of factors identified by Leggett and Persichitte (1998) and others, then adds Communication to reflect her finding that this element tends to be underemphasized in technology integration studies.

Because the college and university factors have been derived in very different ways, from different types of sample, any comparison between them must be tentative. However, the two samples weigh the factors similarly, except for communication, which ranks far above the other factors in the college data and figures much more prominently there than in the university data. Even if the climate and culture factors in the university data are combined into a single "communication" category (15%), that category still ranks third, well below the first two, and comparatively lower than "communication" in the college data. Apparently, people working in this college have high expectations regarding communication about impending change.

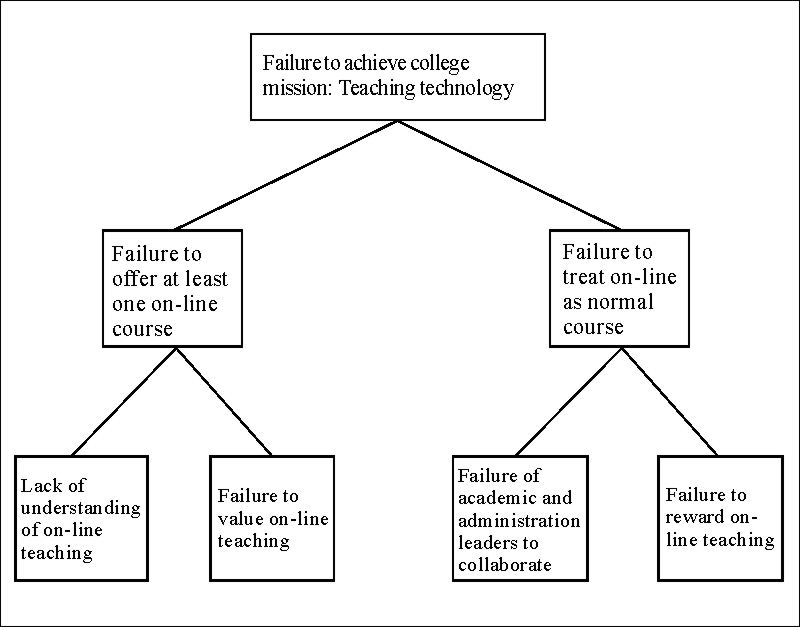

We have also experimented with Capability Maturity Modeling (CMM) as a tool for exploring how an organization might react to innovative change. Here again, we borrow from engineering practice, in this case from the software industry (Carnegie Mellon Software Engineering Institute, 2002). CM models have also been used in other domains, such as systems engineering and human resources. In this case, we have used the CMM concept to examine the readiness of a set of schools to embark on teaching technology initiatives.

A Capability Maturity Model assesses the readiness of an organization to assimilate change by evaluating its practices within a defined and standardized framework. Each of the five maturity levels of this hierarchical framework includes methods for determining the status quo and for making a transition to the next level. These methods focus on the organization's mastery of different Key Process Areas (KPAs) at the respective maturity levels.
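In a staged model of this kind, an organization is rated at the highest level for which it has mastered the KPAs of that level and of every level below it; level 1 has no KPAs. The Python sketch below illustrates that rule and lists the KPAs still needed to reach the next level. The KPA names are hypothetical placeholders, not those of the Carnegie Mellon model or of Montgomery's TI-CMM.

```python
# Hypothetical KPAs per maturity level; level 1 has none by definition.
KPAS = {
    2: {"written technology plan", "basic equipment inventory"},
    3: {"professional development program", "defined support roles"},
    4: {"measured classroom use", "evaluation against plan goals"},
    5: {"continuous improvement of pedagogy"},
}

def maturity_level(mastered: set) -> int:
    """Return the highest level whose KPAs, and those of all lower levels, are mastered."""
    level = 1
    for lvl in sorted(KPAS):
        if KPAS[lvl] <= mastered:  # every KPA at this level is mastered
            level = lvl
        else:
            break  # a gap at this level blocks all higher levels
    return level

def gaps_to_next_level(mastered: set) -> set:
    """Return the KPAs that must still be mastered to reach the next level."""
    next_level = maturity_level(mastered) + 1
    return KPAS.get(next_level, set()) - mastered

school = {"written technology plan", "basic equipment inventory",
          "measured classroom use"}
print(maturity_level(school))               # 2
print(sorted(gaps_to_next_level(school)))
# ['defined support roles', 'professional development program']
```

In this example the hypothetical school's "measured classroom use" practice does not raise its rating, because the level-3 KPAs beneath it are still missing; this mirrors the cumulative character of the levels described below.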

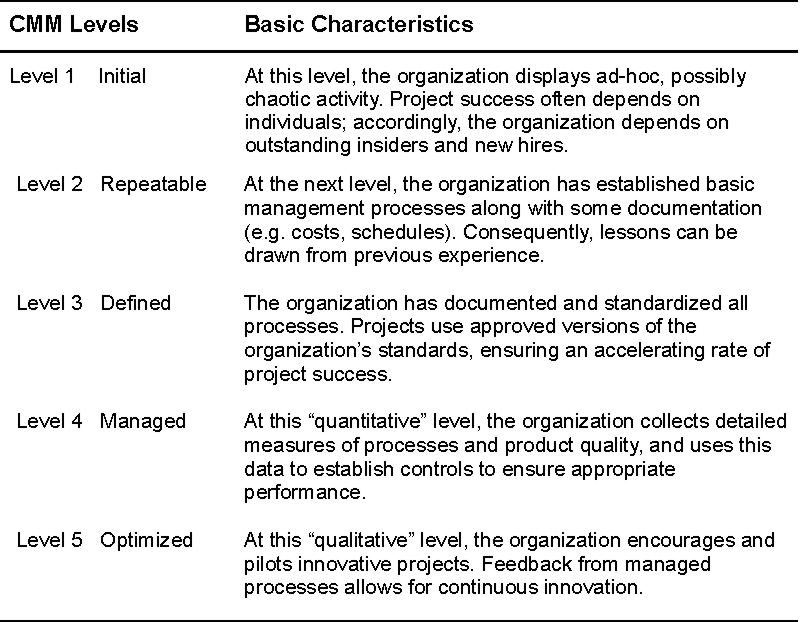

Table 2 displays the five levels of a generic CMM and their basic characteristics, adapted by Montgomery (2003) from Carnegie Mellon Software Engineering Institute (2002). As an organization advances through levels 2 to 5, the characteristics generally accumulate. Each level, except the first, has a set of KPAs defined in terms of goals and "best practices" (see Table 4) that govern the organization's ability to manage change successfully.

Table 2.

The five levels of a CMM (Capability Maturity Model) and their basic characteristics

In education, many factors complicate the process of innovation. Technological and social pressures, along with pressures from the world of work, encourage educational institutions to evolve. Not a domain that readily accepts change, education reacts gradually to these external pressures. We can see this gradual approach in the way successful instructors adopt a reflective outlook on their own practices (Becker & Ravitz, 1999). This suggests that educational cultures can pass through different phases of maturity regarding change, ready to move forward, backward, or perhaps not at all. A Capability Maturity Model attempts to document these phases.

Whereas Fault Tree Analysis examines possible areas of resistance to change in an organization in an open-ended process, CMM compares the organization's readiness for change with the set of guidelines outlined in Table 2. Like FTA, the CMM approach offers the advantage of allowing all stakeholders to participate in the assessment exercise. Accordingly, Montgomery (2003) has conducted a collective case study of six K-12 schools in various stages of planning for teaching technology integration, using CM modeling with a view to developing a TI-CMM (a "technology integration" version of the CMM tool). Three private, one private parochial, and two public schools agreed to participate.

By means of structured interviews, examination of plans and records, and teacher surveys, Montgomery gathered data in all six schools regarding the technology planning process, availability of equipment, network infrastructure, teachers' attitudes and skill levels, and examples of technology use. She examined each school's technology plan, the teachers' attitudes toward technology use, and samples of technology projects, and she interviewed the Principal or Head of school and the technology coordinator or IT Director. She analysed this information within the generic CMM framework in order to develop descriptions of the KPAs, goals, and best practices at each level of the TI-CMM. A central premise of the TI-CM Model is that the purpose of technology in schools is to support teaching and enhance the learning process.

Separate protocols for the Principal and for the technology coordinator guided the interviews. The questions dealt with issues that define each of the maturity levels, including documentation, use of technology, professional development, availability of resources and infrastructure.

The teacher surveys employed two instruments. The Technology Implementation Questionnaire (TIQ) (Wozney, Venkatesh, & Abrami, 2001) examines instructors' use of technology and their reasons for integrating or not integrating technology into their classrooms. Montgomery used the TIQ to determine the extent to which instructors actually use technology and whether or not they see it as beneficial and cost-effective. The TIQ provides a benchmark for assessing the state of technology integration in each school at a single point in time, in comparison with the plans developed and implemented by each school's administrators.

The second instrument, the Teacher Technology Activities Questionnaire designed by Montgomery, asks instructors for examples of how they use technology in the classroom. Montgomery also asked each school to provide copies of its technology plans or relevant documents; five of the six schools were able to comply with this request.

In her study, Montgomery modified an established planning process to create a Technology Integration Capability Maturity Model for K-12 schools. While no model can perfectly represent the complexities of an educational institution as it adopts new technology, the TI-CMM provides specific goals and guidelines for a staged implementation plan, grounded in research by educators, whose principles differ from those of commercial organizations.

Use of the case study method permitted a grounding of the TI-CMM in data collected from real schools on the goals, key process areas, and practices that define each level of the maturity model. Further, the collective case study sampled a range of schools in the expectation that they would differ in practice, in planning methods, and in the amount and use of available technology. This provided insights into issues at each level of the TI-CMM and allowed for the development of a generic TI-CMM intended to be useful for K-12 schools in general.

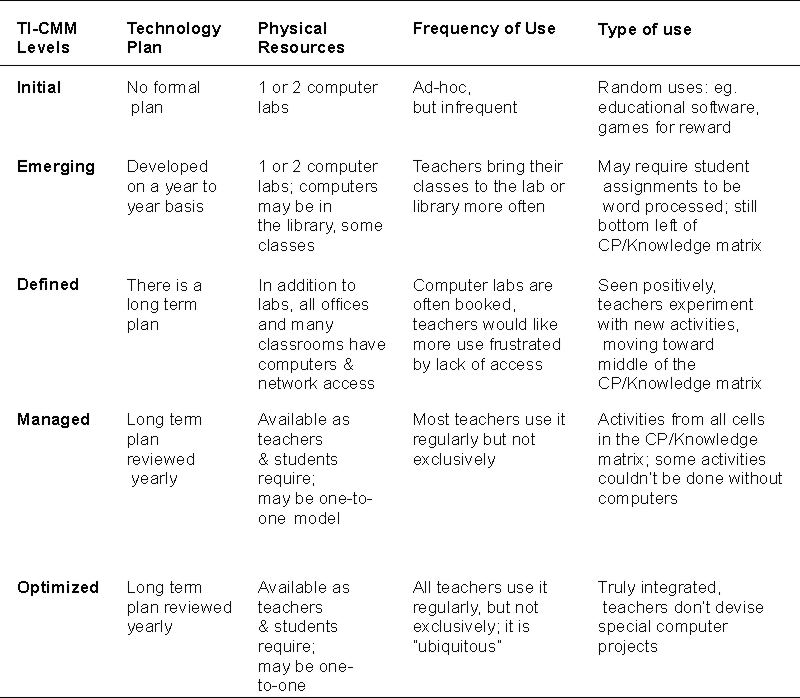

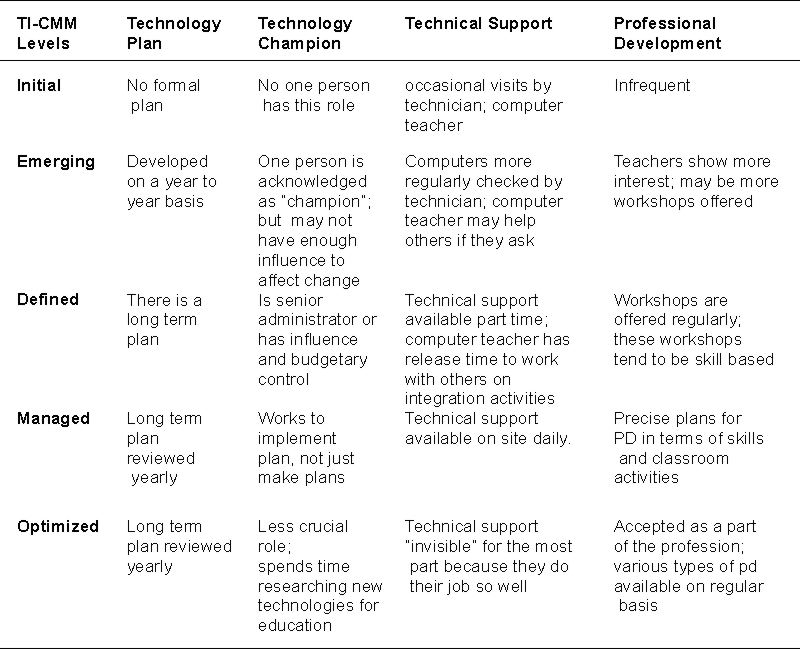

Table 3 displays examples of how the five levels of a generic TI-CMM might be manifest in the context of teaching technology integration. Table 3(a) shows changes in teacher and student access to technology, and in how they use it, as an organization progresses through the 'capability' levels. Table 3(b) shows changes in the roles of the project champion and technical support personnel, and in professional development activities for teachers, as an organization progresses through the same levels. Each level except the first has a set of Key Process Areas (KPAs) defined in terms of goals and "best practices" that govern the organization's ability to manage change successfully.

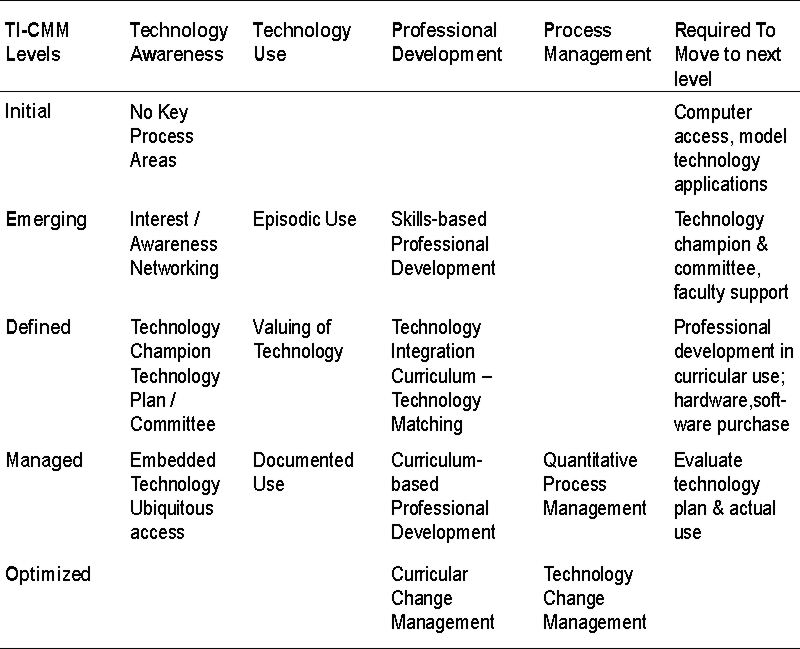

Table 4 provides examples of KPAs for each level in the TI-CMM in the context of teaching technology integration. The column headings indicate broad types of process; the last heading indicates the types of process that have to be developed to move from a given level to the next one. Generally, the higher levels incorporate the key processes already present in lower ones.

Table 3(a).

Levels of the TI-CMM with regard to physical resources

Table 3(b).

Levels of the TI-CMM with regard to human resources

Table 4.

Examples of key process areas for each level in the TI-CMM

Data from the sample schools embarking on teaching technology projects indicate that the TI-CMM can be useful in identifying the strengths and weaknesses in the schools' planning activities, and in suggesting concrete steps schools can take to move forward.

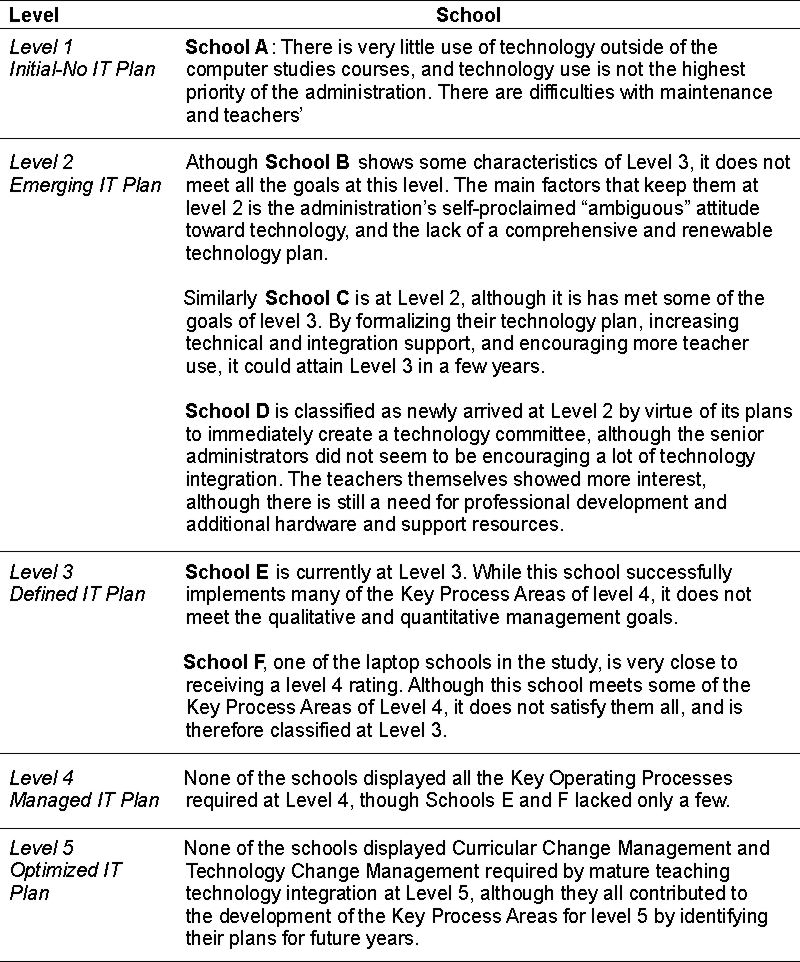

Table 5 indicates where the six schools in Montgomery's sample would fit in her TI-CM model, based on the data she collected. The table shows examples of how technology has been integrated into teaching activities. None of the schools would place at the highest levels of the maturity hierarchy, though two displayed most of the characteristics of the second highest level. The concentration of the schools in the lower TI-CMM ranks should not surprise us, since they have only recently embarked on programs that entail pervasive change.

Table 5.

Positions of the six sample schools on the TI-CMM

We can get an idea of the issues these schools face from the survey data Montgomery collected from the 117 participating teachers. Response rates varied from 18% to 58% across schools. The responses, coded by Montgomery (2003), have been grouped according to Aaron's (2001) SPECTRA categories in Table 6. The last three columns compare the relative frequency of responses for each SPECTRA category in the school data [S] with that in the college [C] and university [U] data presented earlier in Table 1.

Table 6.

Teacher comments on 117 questionnaires on teaching technology integration

Teachers in schools, colleges and universities broadly agree in ranking Support near the top in importance, Time, Perceived Need and Access near the bottom, and Expertise in the middle. The college sample ranks Resources lower, and Communication higher than the school and university samples.

The case study data provide further insight into the relative importance of various factors favouring technology integration. One is the vital role the technology 'champion' plays in schools. In the Software CMM and the Human Resources CMM, too much reliance on an individual person relegates an organization to a level 1 rating. Similarly, schools relying on individual teachers to encourage technology use will rank at level 1 in the TI-CMM. However, promoting an innovation within a culture typically resistant to change requires a person appropriately placed within the administrative structure and with personality traits that allow him or her to effect change. So reliance on an influential 'champion' does become an important factor in the middle levels of the TI-CMM, as formalized plans and processes take shape.

Presence of a comprehensive implementation plan constitutes another key factor. Although five of the six schools in this study had some form of technology plan, even if only one provided by the School Board, none of these plans included detailed provisions for professional development, discussion of needs assessments, the format of potential training, or the desired outcomes.

Second, the technology plans lacked overall pedagogical goals for technology integration. Most respondents agreed that technology could be useful, but could not list its advantages in the classroom. At all levels, the TI-CMM points out the need for specific goals to direct activities and provide benchmarks for measuring progress.

Fault Tree Analysis and Capability Maturity Modeling can serve as formal methods of conducting the 'Needs Analysis' phase of institutional planning for technology integration, to answer the questions, "What are current conditions?" and "What has to change?" The answers should help planners chart a course of action to meet their objectives. Unfortunately, in our experience, planning typically proceeds on the basis of assumptions rather than firm data on needs. Further, planners may not be in a position to choose wisely among the variety of approaches available.

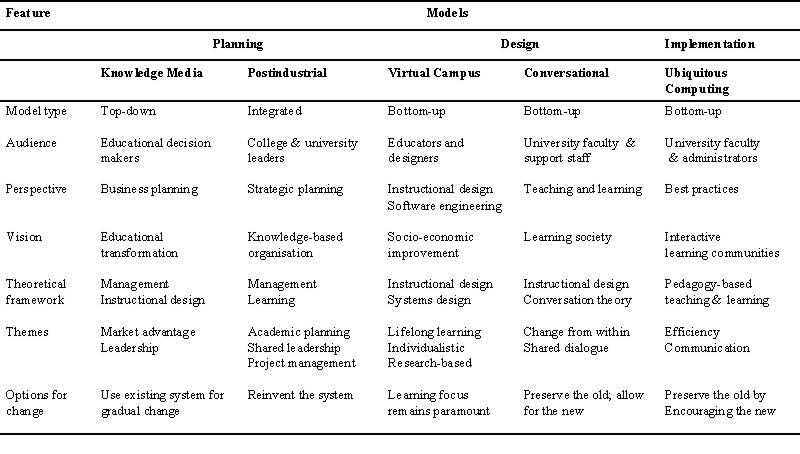

In another line of research, we have examined alternative ways of conceptualizing and dealing with the task of integrating teaching technologies. Ives' (2002) review of the research on efforts to integrate teaching technology in universities revealed several models of how instructors behave in this context, including the Barriers model (Ertmer, 1999), Facilitating Conditions (Surry & Ely, 1999), the Technology Acceptance model (Davis, 1989), the Technology to Performance Chain model (Goodhue et al., 1997), and Diffusion models (e.g., Rogers, 1995). She suggests that this work points to the need for integration initiatives to be carried out on an institution-wide scale. She identified five different approaches to institution-wide planning and categorized them into three broad types: Planning Models, Design Models and Implementation Models. Table 7 briefly characterizes the three types, with specific examples of each.

Planning models try to create efficient business plans, largely through top-down actions intended to manage resources and change work culture, e.g., Knowledge Media (Daniel, 1999) and Postindustrial (Bates, 2000). Design models work toward learner-centred instruction through a mix of top-down and bottom-up actions designed to change the operating environment and instructors' goals, e.g., Virtual Campus (Paquette, Ricciardi-Rigault, de la Teja, & Paquin, 1997) and Conversational (Laurillard, 2002). Implementation models seek competitive advantage by distributing resources to enable grass-roots instructors to change the way they teach, e.g., Ubiquitous Computing (Brown, 1999).

Table 7 shows that the different integration models focus on different types of goals and different types of strategies and tactics, and therefore attend to the SPECTRA factors with different degrees of completeness. Consequently, they tend to favour some members of the participant organizations over others (Ives, 2002, Table 20).

Table 7.

Brief characterizations of the three types of technology integration models

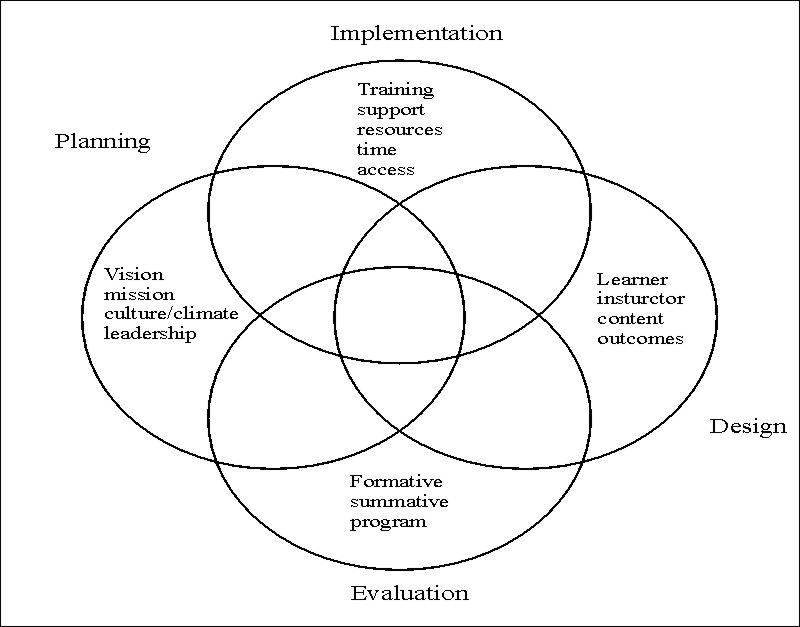

To remedy this, Ives has proposed a modification of Reigeluth's Educational Systems Development approach (ESD) (Reigeluth, 1995) as a means to effectively integrate the perspectives of three key subcultures in educational institutions: faculty, support staff and administrators. Her ESDesign model focuses on designing for learning as a process that all participants can share, offering them a methodology to address two central questions: Why do we need teaching technologies? What choices do we have for fulfilling this need?

In extending the scope and scale of ESD, Ives adds the key element of strategy formation, so that her ESDesign model consists of four spheres of planning activity: Strategy, Design, Implementation and Evaluation (Figure 2). To emphasize how they interact and overlap, the spheres can be imagined as rising off the page in three rather than two dimensions. Key processes are indicated within each of the spheres.

Figure 2. The ESDesign model displayed as four spheres.


In the context of teaching technology integration, this model of change management offers special advantages in that it deals explicitly with the design, development, and evaluation of activities intended to improve learning. Consequently, the model directly addresses the factors that, the literature shows, affect attempts to integrate information technologies into teaching and learning.

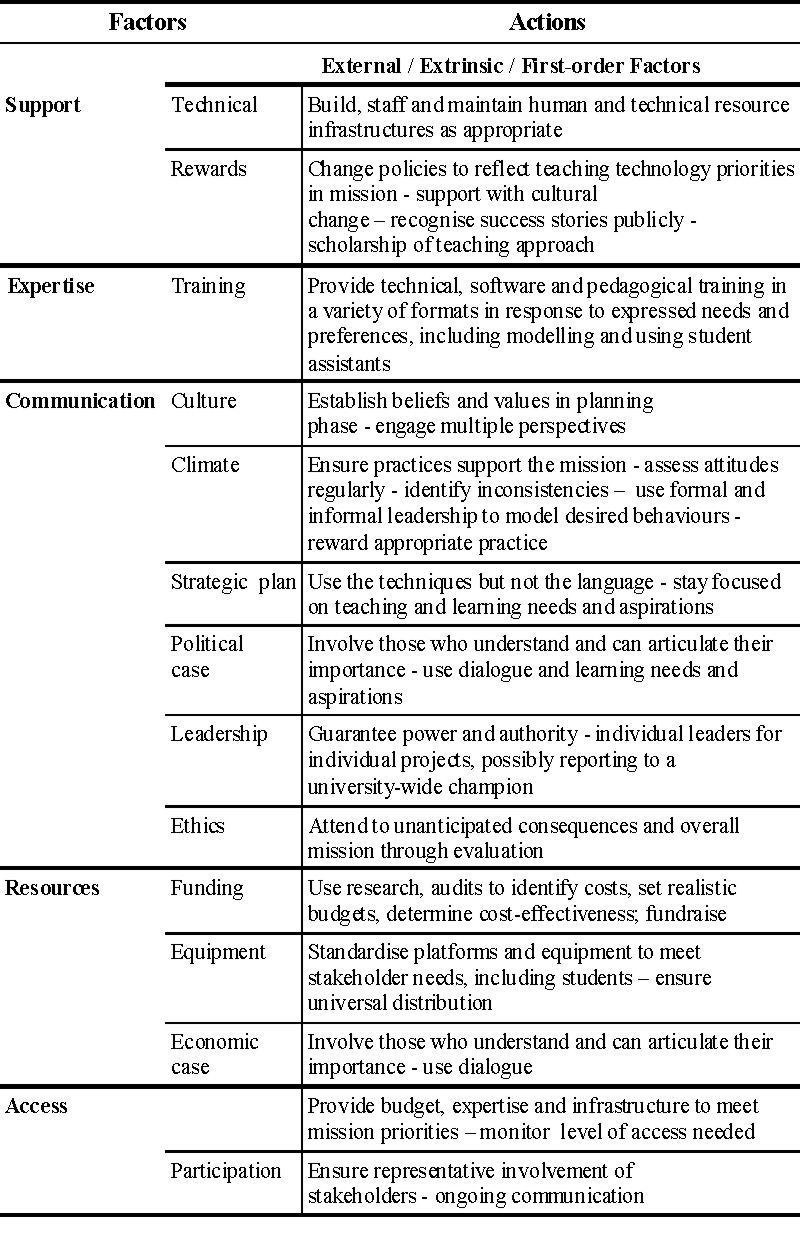

The ESDesign model deals with factors affecting technology integration in the ways outlined in Table 8. These approaches emerged as recommendations in Ives' literature search and case studies (modified from Ives, 2002, Table 22, p. 146). Ives has organized the factors according to the level of control, or "degree of freedom," that a faculty member can exert in dealing with each factor. The simple entries in the Actions column of the table disguise a very complex, integrated approach to institutional change. Table 8(a) lists actions that can be taken to address first-order factors, the "extrinsic" ones largely under the organization's control.

Table 8(a).

Actions that can be taken within the ESDesign model to address extrinsic factors

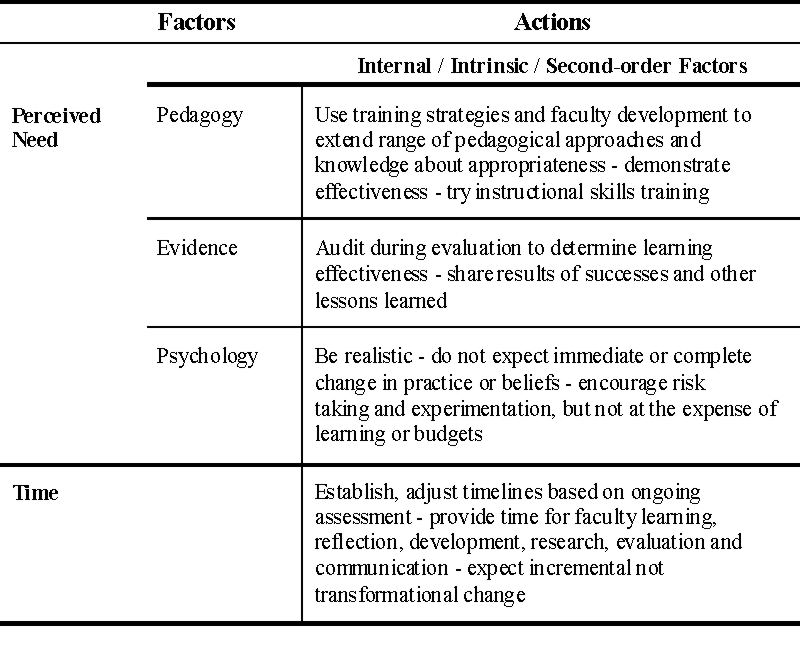

Table 8(b) lists actions that can be taken to address the respective second-order factors, the "intrinsic" ones largely under the control of an individual faculty member. Most of the key factors lie outside the direct control of instructors, yet profoundly affect the amount and quality of support they will need to change their way of teaching. Providing this support requires coordinated activity among the many parts of the institution that have grown up around the basic teaching enterprise: IT services, physical plant, libraries, academic management, and so on. Even with this support in place, changing the relationship between the instructor and the instructed requires new actions and expectations on both sides.

Table 8(b).

Actions that can be taken within the ESDesign model to address intrinsic factors

ESDesign can be used to constantly focus and re-focus the attention of stakeholders in an educational system on its core business because it is inspired by and structured around the design of environments for effective learning. Instructional objectives therefore drive the choice of technologies, whether for use in the classroom or for institution-wide integration. Decisions about design, implementation and management of technology thus keep the learners' and teachers' needs in mind, at the course, program, department or institutional level.

In this respect, the ESDesign model provides a useful framework for planning and monitoring change, because it focuses on improving learning, not technology per se, and because it outlines the roles of all the major stakeholders in the academic community, thus encouraging them to enter the dialogue that must underlie the matching of their respective means and ends.

One advantage of having actively teaching participants in the ESDesign process is that they are more likely than other stakeholders to be familiar with the design of instruction, and they have the potential to apply that experience to macro- and meta-level planning activities.

As in teaching, there is a formative component to the ESDesign process. By explicitly addressing learning needs in all spheres of activity, a process of continuous improvement of learning is possible, at least theoretically, as a potential application of double-loop learning. What instructors learn by supporting the learning of their students at the class or course level, they should be able to apply to program or curriculum design, either directly or by sharing it with their collaborators. This should help the entire organization learn to monitor and improve its performance. Because of its cost in time, money and other resources, technology requires us to move to a steeper, more persistent learning curve.

As the modeling literature shows, when the integration of technology into teaching enters the academic mission, the task becomes highly complex, far beyond the compass of individual instructors. Studies of actual integration efforts indicate that a large number of factors come into play, their role varying with the specific features of the educational institution. What can we draw from experience to try to prevent an organization that hopes to harness technology from re-inventing the wheel?

We have explored a number of different approaches that might set beginners off on the right path. They could start by applying one of the tools borrowed from engineering disciplines, Fault Tree Analysis or Capability Maturity Modeling, to conduct an analysis of the particular needs of their institutions.

In this context, Fault Tree Analysis uses a brainstorming-type methodology to try to determine ahead of time what sorts of barriers a technology implementation plan might encounter (Aaron, 2001). Our experience with an application of FTA on a large scale (over 550 potential participants) indicates that it can provide information that reflects general findings in the literature, but that also adds detail on how members of the institution expect various factors to influence technology integration in their specific context.

In our field trial, three of the four active members of the FTA Team reported that the process met their expectations and that it did not consume an excessive amount of time. However, they remained "undecided" about using FTA again. Roughly half of the 75 responding members of the focal group thought the process useful. Significantly, about two thirds of them agreed that the teaching technology goal set by the FTA Team was worth achieving, indicating that the process helped in establishing consensus. However, the great majority (83%) of the focal group judged their part in the analysis too complicated, and the remainder suggested that some method other than a long survey be used. Indeed, even in our simpler FTA application (avoiding the use of AND and OR gates), verifying faults and the relationships among them posed a challenge for the average respondent, undoubtedly contributing to a low level of response.
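For readers unfamiliar with the gates mentioned above, the following minimal Python sketch shows how a conventional fault tree combines events: an AND gate registers an intermediate fault only when all of its inputs occur, while an OR gate registers it when any one input occurs. The fault names and their true/false values are hypothetical illustrations, not data from Aaron's (2001) study, and the evaluation is the standard Boolean reading of a fault tree rather than the simplified procedure used in our field trial.

```python
from dataclasses import dataclass, field
from typing import List, Union


@dataclass
class BasicFault:
    """A leaf event: a barrier that respondents judge present (True) or absent (False)."""
    name: str
    occurs: bool


@dataclass
class Gate:
    """An intermediate event built from other events by an AND or OR gate."""
    name: str
    kind: str  # "AND" or "OR"
    inputs: List["Node"] = field(default_factory=list)


Node = Union[BasicFault, Gate]


def occurs(node: Node) -> bool:
    """Recursively decide whether an event in the tree occurs."""
    if isinstance(node, BasicFault):
        return node.occurs
    results = [occurs(child) for child in node.inputs]
    if node.kind == "AND":
        return all(results)  # every input fault must occur
    if node.kind == "OR":
        return any(results)  # a single input fault is enough
    raise ValueError(f"unknown gate type: {node.kind}")


# Hypothetical tree: the top event is the undesired outcome the FTA Team wants to avoid.
no_training = BasicFault("No training offered", True)
no_tech_help = BasicFault("No technical help available", False)
no_equipment = BasicFault("Insufficient equipment", False)

lack_of_support = Gate("Instructors lack support", "AND", [no_training, no_tech_help])
top = Gate("Teaching technology goal not achieved", "OR", [lack_of_support, no_equipment])

print(occurs(top))  # False: training is missing, but technical help is still available
```

Because the top event is reached only through these gates, respondents in a full FTA must judge not only whether each fault exists but also how faults combine, which may help explain why the simplified version omitted them.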

Given the reservations expressed by our sample, use of FTA on a large scale might not be an optimal approach. Rather, the technique might be better applied in small but representative task forces, perhaps as a prelude to evaluation of specific issues by focus groups. FTA might thus be a useful way of prioritizing areas of concern before planning begins. Further, Aaron (2001) recommends that fault analysis be supplemented by examining the state of mind of the participants before and during the technology implementation. Aaron did enhance this application of FTA by obtaining feedback from the end users. She suggests that the level of involvement could be extended by applying tools like the Stages of Concern questionnaire (Hall, George, & Rutherford, 1977), the Technology Adoption and Diffusion Model (Sherry, Billig, Tavalin, & Gibson, 2000) and the Technology Acceptance Model (Davis, 1989) to guide planning actions throughout the implementation.

Capability Maturity Modeling involves intuitively simpler methods, basically interviews and observations to determine whether an organization meets an established set of criteria. In Montgomery's field test of the technique in six schools, a supporting survey indicated participants' concerns for the same group of factors reported in the literature. However, her TI-CMM method itself examined whether the schools possessed the characteristics (the "key processes" in Table 4) required to deal with these factors. In short, the proposed TI-CMM tests the readiness of an organization to integrate teaching technologies, approaching the problem, we might say, from the opposite perspective to that of Fault Tree Analysis.
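Capability maturity models in software engineering (Carnegie Mellon Software Engineering Institute, 2002) typically use a staged rule: an organization sits at the highest level for which it satisfies every key process at that level and at all lower levels. The Python sketch below illustrates that rule under stated assumptions: the level numbers and key-process names are hypothetical placeholders, not the contents of Table 4, and we do not claim that Montgomery's TI-CMM scores schools in exactly this way.

```python
from typing import Dict, List, Set

# Hypothetical key processes per maturity level. The actual TI-CMM key
# processes appear in Table 4 and are NOT reproduced here.
# Level 1 is the starting point and requires no key processes.
KEY_PROCESSES: Dict[int, List[str]] = {
    2: ["technology plan", "technical support"],
    3: ["professional development", "pedagogical goals"],
    4: ["evaluation of integration"],
    5: ["continuous improvement"],
}


def maturity_level(observed: Set[str]) -> int:
    """Return the highest level whose key processes, and those of every
    lower level, were all observed in the school (staged rule)."""
    level = 1
    for lvl in sorted(KEY_PROCESSES):
        if all(kp in observed for kp in KEY_PROCESSES[lvl]):
            level = lvl
        else:
            break  # a gap at this level caps the rating
    return level


# Hypothetical observations from interviews and site visits.
school_a = {"technology plan", "technical support",
            "professional development", "pedagogical goals"}
school_b = {"technology plan", "professional development", "pedagogical goals"}

print(maturity_level(school_a))  # 3
print(maturity_level(school_b))  # 1: missing technical support blocks level 2
```

Under this rule a gap at a lower level caps the rating regardless of more advanced practices, as `school_b` shows; a scheme that credited partial compliance level by level would be a different design choice.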

Though developed with data from a small sample of schools, Montgomery's TI-CMM appears to be a useful and manageable tool for preparing a technology integration plan. For one thing, the method does not consume a lot of resources, neither time nor personnel. Secondly, the data it requires are neither difficult to comprehend nor difficult to access. Finally, its validity appears to be borne out in this sample by the finding that the two schools ranking highest on the TI-CMM scale (those with laptop programs) scored higher on the attitudinal scales, integrated technology into classes more often, and rated themselves higher in terms of their stage of integration and proficiency level (Montgomery, 2003, p. 45). Apart from some reticence regarding their technology plans and their pedagogical goals, the respondents expressed no difficulties with the research method. Accordingly, TI-CMM offers promise as a way of determining which organizational needs should be addressed in a technology integration plan.

Ives' examination of technology integration models reveals the range of activities that can be called "technology planning". Her analysis indicates, for example, that universities can spend an enormous amount of time on plans, but still omit some of the "key processes", like implementation protocols and evaluation activities (Ives, 2002, p. 161). The modeling approach offers the great advantage of exposing the different types of goals that planners can attempt to address (for example, the focus on strategy, on instructional design, or on implementation in Ives' sample). This in turn indicates what sorts of factors have to be considered in the plan, and which stakeholders have to be involved. Given the special nature of educational institutions, especially their typically slow rate of change, inexperience with collective action, and low level of technological applications at the workface, Ives' ESDesign model provides a more comprehensive approach to the means and ends of education.

Aaron's work extends the application of Fault Tree Analysis to planning issues in education. Montgomery develops a version of a Capability Maturity model for integrating technology in teaching. Ives' critique of different approaches to planning for technology integration at the institutional level leads her to a more comprehensive Educational Systems Design model.

Taken together, our studies demonstrate that planning for the integration of teaching technologies can become more systematic through a variety of tools and techniques. As an intensely interdisciplinary activity, this sort of planning involves many players and processes acting simultaneously and interdependently. Any attempt to model it must envision a dynamic, even cyclical process of planning, implementation, evaluation and revision. This should not surprise us, because it mirrors education itself.

The authors sincerely thank the panel of anonymous reviewers who contributed to significant improvements in the original draft of this paper.

Aaron, M. (2001). An exploration of the applicability and utility of fault tree analysis to the diffusion of technological innovation in educational systems. Unpublished doctoral dissertation, Concordia University, Montreal.

Ainley, J., Banks, D., & Fleming, M. (2002). The influence of IT: perspectives from five Australian schools. Journal of Computer Assisted Learning, 18(4), 395-404.

Bates, A. W. (2000). Managing technological change. San Francisco: Jossey-Bass Inc.

Becker, H. J., & Ravitz, J. (1999). The influence of computer and internet use on teachers' pedagogical practices and perceptions. Journal of Research on Computing in Education, 31(4), 141-157.

Brown, D. G. (1999). Always in touch: A practical guide to ubiquitous computing. Winston-Salem, N.C.: Wake Forest University Press.

Brush, T. (1999). Technology planning and implementation in public schools: A five-state comparison. Computers in Schools, 15(2), 11-23.

California Department of Education. (2004). The California K-12 education technology master plan recommendations. Retrieved April 27, 2004 from http://www.cde.ca.gov/la/et/rd/ctlmain.asp

Carnegie Mellon Software Engineering Institute. (2002). Capability maturity models. Retrieved April 27, 2004 from http://www.sei.cmu.edu/cmm/cmms/cmms.html

Daniel, J. S. (1999). The mega-universities and knowledge media: Technology strategies for higher education (2nd ed.). London: Kogan Page.

Davis, F. D. (1989). Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Quarterly, 13, 319-340.

Dexter, S., Anderson, R., & Becker, H. (1999). Teachers' views of computers as catalysts for changes in their teaching practice. Journal of Research on Technology in Education, 31(3), 1-26.

Dicks, D. J. (2000, November). Changing how we teach. Plenary presentation, Educational Technology Conference. Concordia University.

Ertmer, P. A. (1999). Addressing first- and second-order barriers to change: strategies for technology integration. Educational Technology Research and Development, 47(4), 47-61.

Fuller, H. L. (2000). First teach the teachers: Technology support and computer use in academic subjects. Journal of Research on Computing in Education, 32(4), 511-537.

Goodhue, D., Littlefield, R., & Straub, D. W. (1997). The measurement of the impacts of the IIC on the end-users: The survey. Journal of the American Society for Information Science, 48(5), 454-465.

Hall, G. E. (1976). The study of individual teacher and professor concerns about innovations. Journal of Teacher Education, 27(1), 22-23.

Hall, G. E., George, A. A., & Rutherford, W. L. (1977). Measuring stages of concern about the innovation: a manual for the use of the SoC Questionnaire. (ERIC Document Reproduction Service Number ED 147 342).

Hasselbring, T., Barron, L., & Risko, V. (2000). Literature review: Technology to support teacher development. Washington, D.C.: National Partnership for Excellence and Accountability in Teaching.

Hooper, S., & Rieber, L. P. (1995). Teaching with technology. In A. C. Ornstein (Ed.), Teaching: Theory into practice. Needham Heights, MA: Allyn and Bacon.

Indiana Department of Education, Office of Learning Resources. (2002). Projects. Retrieved April 27, 2004 from http://doe.state.in.us/olr/projects.html

Ives, C. (2002). Designing and developing an educational systems design model for technology integration in universities. Unpublished doctoral dissertation, Concordia University, Montreal.

Jonassen, D. H., Tessmer, M., & Hannum, W. H. (1999). Task analysis for instructional design. Mahwah, NJ: Lawrence Erlbaum Associates.

Laurillard, D. (2002). Rethinking university teaching: A Conversational framework for the effective use of learning technologies. London: Routledge Falmer.

Leggett, W. P., & Persichitte, K. A. (1998). Blood, sweat, and TEARS: 50 years of technology implementation obstacles. Techtrends, 43(3), 33-39.

Montgomery, B. (2003). Developing a technology integration capability maturity models for K-12 schools. Unpublished M.A. thesis, Concordia University, Montreal.

Paquette, G., Ricciardi-Rigault, C., de la Teja, I., & Paquin, C. (1997). Le Campus virtuel: un réseau d'acteurs et de ressources. Journal of Distance Education, XII(1-2), 85-101.

Reigeluth, C. M. (1995). Educational systems development and its relationship to ISD. In G. J. Anglin (Ed.), Instructional technology: Past, present, and future (2nd ed., pp. 84-93). Englewood, CO: Libraries Unlimited, Inc.

Rogers, E. M. (1995). Diffusion of innovations (4th ed.). New York: The Free Press.

Rogers, P. L. (2000). Barriers to adopting emerging technologies in education. Journal of Educational Computing Research, 22(4), 455-472.

Schrum, L. (1995). Educators and the Internet: A case study of professional development. Computers and Education, 24(3), 221-228.

Sherry, L., Billig, S., Tavalin, F., & Gibson, D. (2000). New insights on technology adoption in schools. T.H.E. Journal, 27(7), 42-46.

Stephens, K. G. (1972). A fault tree approach to analysis of educational systems as demonstrated in vocational education. Unpublished doctoral dissertation, University of Washington, Seattle.

Stephens, K. G., & Wood, R. K. (1977-78). Thou shalt not fail: An introduction to Fault Tree Analysis. International Journal of Instructional Media, 4(4), 297-307.

Surry, D. W., & Ely, D. P. (1999). Adoption, diffusion and implementation and institutionalization of educational technology. Retrieved January 29, 2001, from http://www.coe.usouthal.edu/faculty/dsurry/papers/adoption/chap.htm

Tapscott, D. (1999). Growing up digital: The rise of the net generation. Toronto: McGraw Hill College Division.

Wood, R. K., Stephens, K. G., & Barker, B. O. (1979). Fault Tree Analysis: An emerging methodology for instructional science. Instructional Science, 8, 1-22.

Wozney, L., Venkatesh, V., & Abrami, P. C. (2001). Technology implementation questionnaire (TIQ): Report on results. Centre for the Study of Learning and Performance, Concordia University.

© Canadian Journal of Learning and Technology