Author

Joi L. Moore, Ph.D., is an Assistant Professor in the School of Information Science & Learning Technologies at the University of Missouri-Columbia. Her research interests include the design and development of interactive computer environments that support learning or improve performance. Correspondence can be sent to 303 Townsend Hall, Columbia, MO, 65211 or email: moorejoi@missouri.edu

This paper synthesizes research findings from a performance analysis of teacher tasks and the implementation of performance technology. These findings are aligned with design and implementation theories to provide understanding of the complex factors and events that occur during the implementation process. The article describes the necessary elements and conditions for designing and implementing performance tools in school environments that will encourage usage, efficient performance, and positive attitudes. Two models provide a visual representation of causal relationships between the implementation factors and the technology user. Although the implementation process can become complex because of the simultaneous events and phases, it can be properly managed through good communication and strategic involvement of teachers during the design and development process. The models may be able to assist technology designers and advocates with presenting innovations to teachers who are frequently asked to try technical solutions for performance support or improvement.

Résumé: Cet article fait la synthèse des résultats de recherche d'une analyse de performance des tâches des enseignants et de l'application de la technologie des performances. Ces résultats sont mis en parallèle avec des théories conceptuelles et d'application afin de mieux comprendre les facteurs et les événements complexes qui se produisent au cours du processus de mise en place. Cet article décrit les éléments et les conditions nécessaires à la conception et à l'application, dans le milieu scolaire, d'outils de performance qui vont favoriser leur utilisation, l'efficacité des performances et une attitude positive. Deux modèles proposent une représentation visuelle des relations de cause à effet entre les facteurs de mise en place et l'utilisateur de la technologie. Bien que le processus de mise en place puisse devenir complexe à cause des événements et des phases simultanés, il peut être correctement géré avec une bonne communication et une participation stratégique de la part des enseignants au cours du processus de conception et de développement. Les modèles peuvent aider les concepteurs et les défenseurs des technologies à présenter des innovations aux enseignants à qui on demande fréquemment d'essayer des solutions techniques pour appuyer et améliorer les performances.

In today's educational environment, educators use computer applications to perform many rudimentary tasks, such as preparing instructional materials, reporting student progress, and delivering instruction. However, teachers may not have a choice in the applications they use at school because administrators and technology decision-makers select the software. Frequently, school personnel invest funds in software marketed to solve teachers' information and performance needs, without strategically determining whether these software applications will fit well into the work environment. As a result, teachers are often dissatisfied with the capabilities of software tools that are not applicable to their specific needs. Commercially developed applications can satisfy some users' needs. However, teachers may have to adjust their work processes to the capabilities of software created for the general population and not for the unique complexities and specific variables encountered in each school setting. The potential power of any software application is directly correlated to the degree to which it meets users' needs (Jones, 1994). The "one size fits all" approach rarely works when addressing performance needs with technology.

The objective of most performance technology introduced into a work environment should be to assist the teacher with completing tasks. Some technology, however, can make the teacher's work environment more difficult if it unexpectedly creates additional tasks (Whitney & Lehman, 1990). Software designers could reduce some of the problems that teachers encounter by understanding the performance of the users within their work environment. Similarly, making teachers decision-makers in the technology design should ease the adoption process. Research indicates that teachers participate more eagerly in the technology change and adoption process if they are actively involved in the development or selection of the intended change (Berry & Ginsberg, 1991).

The purpose of this article is to describe the essential elements and conditions for technology to be successfully designed, implemented, and integrated in school environments. The paper presents theories related to the implementation and diffusion process and human performance technology (HPT) principles. The context for the paper is based on research regarding performance analysis of teacher tasks (Moore, Orey, & Hardy, 2000) and the implementation process for performance technology (Moore & Orey, 2001). Based on the implementation research, two models are used to describe the design and implementation process that encouraged usage, efficient performance, and positive attitudes toward the tools. The author contends that the models may provide direction for technology designers and advocates of technology innovations when they are integrating a new technology in school environments.

Common design philosophies focus on creating the best technical solution and on requiring users to adapt to the technological system. A current trend is to design usable systems that adapt to the requirements of the user and their performance needs (Robertson, 1994). Gould (1988) describes the four basic principles of system design for producing a useful and easy to use computer application:

Gould's principles (1988) encompass many of the concepts and goals of popular design theories. Theories such as user-centered (Karat, 1997), performance-centered (Gery, 1995), and participatory (Schuler & Namioka, 1993), reflect some or all of Gould's principles. In addition, the rapid prototyping design model is similar to the iterative design and development process wherein prototypes are continuously developed and changed based on user performance and suggestions during the process (Tripp & Bichelmeyer, 1990). All of these theories emphasize some degree of designer efforts to include the user as a contributor to the desired functions and capabilities of the technology innovation. Despite these efforts, users may still exhibit little enthusiasm for potential adoption.

Although different in orientation and methodology, most design theories can be linked to the field of human performance technology (HPT). The International Society for Performance Improvement (ISPI, http://www.ispi.org) provides a cohesive description that states, "[HPT] stresses a rigorous analysis of present and desired levels of performance, identifies the causes for the performance gap, offers a wide range of interventions with which to improve performance, guides the change management process, and evaluates the results" (ISPI, 2003, What is Human Performance Technology section, ¶1). The HPT process links training, environmental design, feedback systems, and incentives to measure performance and build credibility for the applied interventions (Stolovitch & Keeps, 1992). In reality, it is a combination of three systematic processes: performance analysis, cause analysis, and intervention selection.

A human performance technologist views the organization as an adaptive system that takes in various inputs and produces valued products and services for users (Rummler & Brache, 1992). In order to uncover root causes of performance inadequacy, it is essential to identify and analyze elements within the organizational system that may affect performance. Therefore, a technologist examines performance problems at the organization, task process, and user levels. Within school environments, media specialists and technology coordinators assume many of the roles and responsibilities of a human performance technologist.

Implementation encompasses a systematic process combining theories that relate to communication and diffusion. In general, the implementation process is an open system that connects to the environment and controls its output by adjusting its input. When actual performance does not measure up to user and system expectations, a technology advocate communicates with all individuals in the process and makes decisions intended to produce positive outcomes. Based on Schramm's (1954) basic model of communication, the sender's and receiver's fields of experience can influence how the signal is encoded and decoded. In addition, the signal can also be distorted by noise, such as attitudes. The model includes loops between the sender and receiver that represent the interaction and feedback between the team members involved in the implementation process. The team comprises a change agent, innovation designers, developers, technology support personnel, and teachers.

Rogers (1995) defines diffusion as "the process by which an innovation is communicated through certain channels over time among the members of a social system" (p. 5). According to Rogers' Innovation Decision Process theory, potential adopters must become familiar with the innovation, perceive the innovation attributes as desirable and/or advantageous, decide to adopt the innovation, implement the innovation, and proclaim (reaffirm or reject) the decision to adopt the innovation. Communication between users and non-users about their involvement in the design process and how the innovation is supporting their performance can foster the social change. Essentially, the technology innovation users may become advocates and role models for non-users. Within school environments, teachers are the best role models to encourage their peers who are not using the technology innovation.

Based on the philosophical view of technology, diffusion research within the instructional technology field of study can be subdivided into developer-based or adopter-based theory (Surry & Farquhar, 1997). Developer-based theories focus on technical characteristics by maximizing the efficiency, effectiveness, and elegance of an innovation. The designer or developer is perceived as the primary force for change. The assumption is that the user will intuitively recognize the relative advantages of the innovation. In contrast, adopter-based theories focus on the human, social, and interpersonal aspects of the innovation. Adopter-based theories view the end user as the individual who will ultimately implement the innovation in a practical setting, and as the primary force for change. These theories reject the assumption that high-quality innovations will automatically attract potential adopters.

The Rate of Adoption theory indicates that the diffusion of an innovation among the members of a social system will show slow, gradual growth before experiencing a period of dramatic and rapid growth (Rogers, 1995). During the rapid growth period, there is a social and environmental change that non-users experience and observe in the work environment. This leads to the planned and sometimes unprompted adoption by non-users. Following the period of rapid growth, the rate of adoption will gradually stabilize and eventually decline. Over time, the pattern of adoption will resemble an S-shaped curve.
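The S-shaped pattern can be illustrated with a logistic function, a common mathematical idealization of cumulative adoption. The sketch below is illustrative only: the logistic form, the function and parameter names (`cumulative_adoption`, `new_adopters`, `midpoint`, `growth`), and all numeric values are assumptions and are not drawn from Rogers (1995) or from the study data.

```python
import math


def cumulative_adoption(t, population=100, midpoint=10.0, growth=0.6):
    """Logistic estimate of how many members have adopted by time t.

    All parameter values are hypothetical and chosen only for illustration.
    """
    return population / (1.0 + math.exp(-growth * (t - midpoint)))


def new_adopters(t, **kwargs):
    """Adopters gained during the one-unit period ending at time t."""
    return cumulative_adoption(t, **kwargs) - cumulative_adoption(t - 1, **kwargs)


if __name__ == "__main__":
    # Cumulative adoption rises slowly, then rapidly, then levels off,
    # while the number of new adopters per period peaks near the midpoint.
    for t in range(0, 21, 4):
        print(f"t={t:2d}  cumulative={cumulative_adoption(t):6.1f}  "
              f"new={new_adopters(t):5.1f}")
```

Under these assumptions, the cumulative count traces the S-shaped curve, while the per-period count of new adopters rises, peaks, and then declines, which corresponds to the stabilizing and declining rate of adoption described above.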

A key person who can influence the rate of adoption is the change agent. A change agent is the technology advocate who encourages and sustains usage of an innovation. This person must be able to systematically adapt their interventions during the iterative implementation process to influence desired changes or outcomes (Hall & Hord, 1987). Interventions are based on communication with teachers, the results of their performances, and the effect of current and past interventions. An effective change agent will continue to probe, adapt, intervene, monitor, and listen until the implementation goals have been met (Hall & Hord, 1987). The change agent facilitates the implementation of an innovation by establishing a positive relationship with teachers that reflects cooperation, support, and respect during the information exchange. Media specialists, technology coordinators, and technology support personnel can all be considered change agents during the implementation process when they have a key role in the integration of the technology innovation. Although teachers can encourage others to use the innovation, the responsibility to monitor usage would require extra time in addition to their current responsibilities.

The Perceived Attributes theory states that potential adopters evaluate an innovation based on innovation attributes (Rogers, 1995). Innovation attributes, such as relative advantage, compatibility, complexity, trialability, and observability (Rogers, 1995), adaptability, riskiness, and acceptance (Meyer, Johnson, & Ethington, 1997) are described as follows:

All of the attributes described above can affect the adoption rate of an innovation. Change agents can effectively manage resistance to the technological innovation by continuously interacting with the teachers, determining their needs and attitudes, and then creating strategies to decrease their fears about using the technology. In addition, the innovation attributes may have a role in improving the innovation.

Along with two researchers, the author applied an HPT approach to the design of Teacher Tools, an electronic performance support tool for teachers (Moore, Orey, & Hardy, 2000). We analyzed the work environment and task performances by recording the occurrence of tasks, such as grading, lesson planning, and reporting, and the amount of time spent performing the tasks. We were able to determine tasks that were performed incorrectly and/or inefficiently. In addition, we interviewed the teachers to gather information regarding the desired work-related functions to be supported by computer technology.

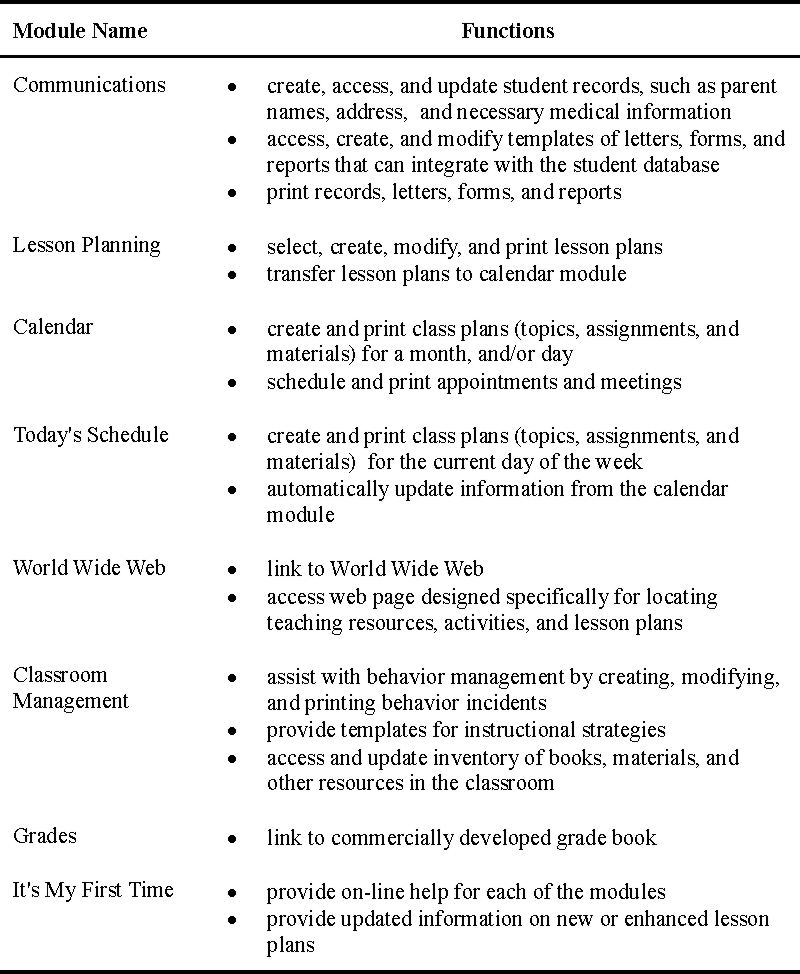

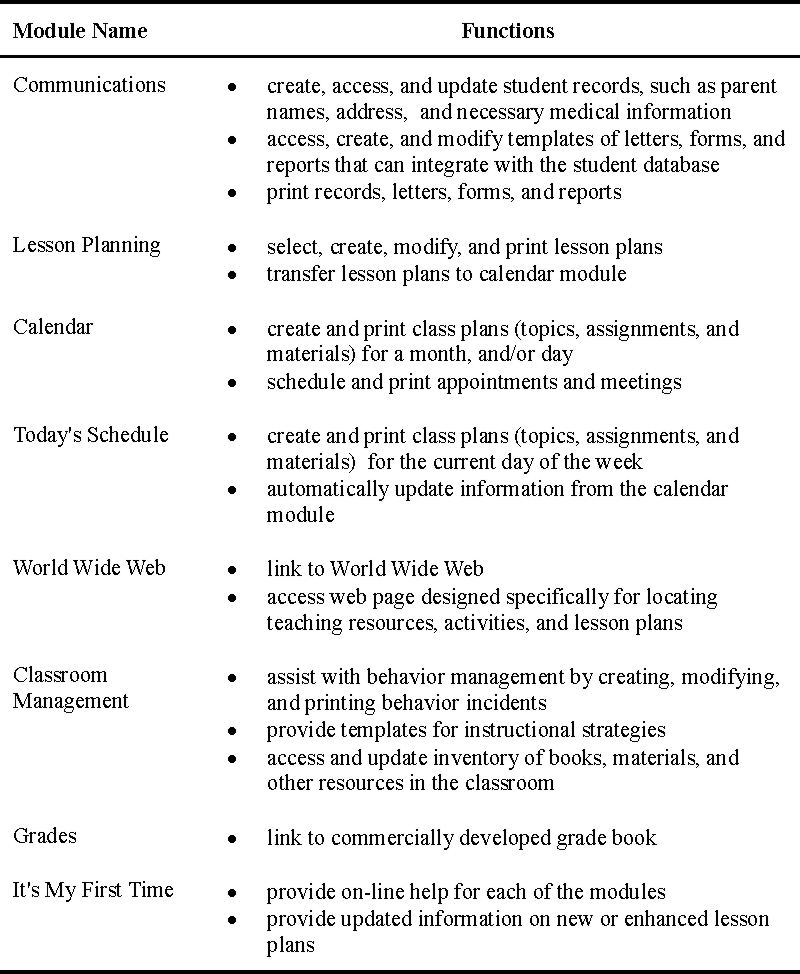

Teacher Tools is an electronic performance support tool designed and developed to help teachers perform many of their daily tasks. FileMaker Pro, a database application, was used to create this program. The basic structure of Teacher Tools is storage and retrieval of information from several integrated databases that appear as one cohesive program. Based on the performance analysis data (Moore et al., 2000), we created the following main modules: Calendar, Communications, Lesson Planning, Classroom Management, Grades, World Wide Web, and It's My First Time. The functions of the Teacher Tools modules are presented in Table 1. Each of these modules can be accessed from the main menu of the Teacher Tools program (See Figure 1).

Table 1. Teacher Tools Modules

Figure 1. Teacher Tools Main Menu

After Teacher Tools was developed, a collective case study of four teachers was conducted to explore usage, performance, and attitudes (Moore & Orey, 2001). Yin (1994) defines case study as an "empirical inquiry that investigates a contemporary phenomenon within its real-life context" (p. 13). The case study methodology was appropriate for capturing a holistic perspective of the implementation process. Reichardt and Cook (1979) have two views of process: a) the monitoring of activities, which includes formative feedback, to describe the extent to which an innovation or program has been implemented, and b) describing the causal explanations that confirm the innovation effects revealed during the study. Through exploration and inductive analysis, the case study method is effective for obtaining an in-depth understanding of the situation so that the study's end results can have some meaning for involved parties (Merriam, 1998).

The case study methodology is suitable for answering "how" and "why" research questions, especially when it is difficult to separate the phenomenon's variables from the context (Yin, 1994). The following research questions focused on answering how teachers used Teacher Tools, and how performance and technology attitudes changed:

Participants. There were four middle school teachers representing four individual cases, which is an adequate number for multi-case studies (Creswell, 1998). Maximum variation, a type of purposeful sampling, was used for determining the participants because it reveals common themes or outcomes across a variety of participant characteristics (Patton, 1990). The participant selection was based on at least one teacher from each of the four primary content areas and at least one teacher from sixth, seventh, and eighth grades. In addition, the perspective of a new teacher was considered important to the study. It can take at least five years for a beginning teacher to master the demands of a teacher's work environment (Huling-Austin, 1992). Thus, at least one teacher with less than five years of teaching experience was an additional criterion for the variation of participants.

Process. The data collection process began with the teachers completing a questionnaire that addressed current tasks performed by teachers, computer experience, technology skill level, and attitudes toward using computer technology. After teachers participated in a training session, a researcher observed the teachers in the classroom, in the computer lab, and with team members. Teachers were instructed to use an anecdotal log to document the features used and the amount of time spent using Teacher Tools. The database program provided another source of usage and performance data. The program kept a record of the login name, date of use, Teacher Tools feature accessed, action performed in the module, and the time that the user started the action. During exit interviews, a general interview guide was used for each session to ensure that all relevant topics were discussed while allowing the interviewer to spontaneously word questions and establish a "free-flowing" conversation (Patton, 1990). Information obtained from the other data sources was used to define specific questions relating to the teachers' patterns of computer tool usage and technology attitudes.
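The automatic usage log described above can be pictured as a simple record with one field per logged item. The following Python sketch is a hypothetical illustration, not the FileMaker Pro implementation used in the study: the class name `UsageRecord`, the helper `count_by_feature`, the login names, the dates, and the sample actions are all invented for the example.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date, time


@dataclass(frozen=True)
class UsageRecord:
    """One logged action, mirroring the five fields named in the text."""
    login_name: str
    date_of_use: date
    feature: str       # Teacher Tools module that was accessed
    action: str        # action performed in the module
    start_time: time   # time the user started the action


def count_by_feature(records):
    """Count how many logged actions fall under each feature."""
    return Counter(record.feature for record in records)


# Hypothetical sample entries; none of these values come from the study.
SAMPLE_LOG = [
    UsageRecord("teacher_a", date(2000, 3, 1), "Communications",
                "edit progress report", time(10, 15)),
    UsageRecord("teacher_a", date(2000, 3, 2), "Calendar",
                "add event", time(14, 5)),
    UsageRecord("teacher_b", date(2000, 3, 2), "Communications",
                "edit progress report", time(9, 40)),
]

if __name__ == "__main__":
    for feature, count in count_by_feature(SAMPLE_LOG).most_common():
        print(f"{feature}: {count}")
```

Tallying records by feature in this way is one possible route to the kind of usage summary reported in the findings, such as which modules were used most often.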

Analysis. A typical method for analyzing multiple cases is to conduct a within-case analysis followed by a cross-case analysis (Creswell, 1998). The within-case analysis facilitates the detailed description of the case and any themes that emerge. The cross-case analysis looks for similar themes and patterns across all of the cases in the study. This method helps to build general explanations that can fit each case, although the cases may have some variations.

The research findings indicated a decrease in the time to perform tasks. Work responsibilities, accessibility to computers, change agent interventions, technology support personnel, and Teacher Tools attributes were common themes that affected computer usage, performance, and attitudes. As the designers developed the performance tool, teachers were presented prototypes that reflected their ideas and suggestions. As a result, the teachers became more interested in using the tool. This communication and engagement between the designers and users during the development process contributed to a positive relationship between designers and users, and positive attitude toward using the tool.

Teacher Tools Usage. The most common tasks performed with Teacher Tools were progress reporting and managing student information. Occasionally, one teacher also searched for lesson plans. Another teacher stored information in the Calendar. The main modules used by participants were Communications, Calendar, and Lesson Planning.

Task Performance. Completing team progress reports became an easier task for teachers. Previously, teachers had used paper forms to describe student performance in each subject. Teachers were not responsible for the same subjects, so more than one teacher had to complete a form for each student. By using the Teacher Tools database, the teachers could complete the assessment for all of their students without having to wait for another teacher. Teachers used a menu to quickly select comments, such as "Excellent" or "Satisfactory," which were typical comments provided by the teachers. The computer tool also allowed teachers to include additional comments for further detail. With the additional comments feature, they provided more information than before because typing feedback was easier and quicker than writing it by hand.

Technology Attitudes. The teachers emphasized that technology support affected their attitude toward using Teacher Tools. The ability to communicate their needs to technology support was important for the teachers using the computer tool. One teacher stated that the quality of the technology support had a direct effect on her attitude toward using any technology. Another teacher with little technology experience exhibited the most change in attitude. This teacher did not use technology before the study because she was afraid to use it and was unaware of its capabilities. She became more confident and developed new skills because of her involvement with the design and from using Teacher Tools.

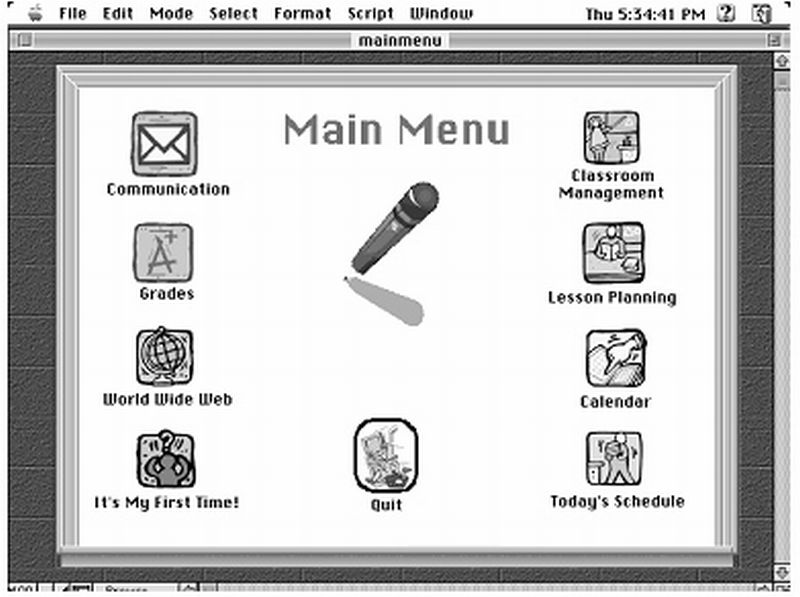

Based on the theories related to the implementation process and the Teacher Tools implementation findings (Moore & Orey, 2001), the results provide a framework for understanding usage, performances, and attitudes toward technology innovations. This framework reflects the roles and characteristics of the innovation user (i.e., teacher), change agent, technology support personnel, and technology developers. There are four major phases that represent the implementation process: collaborative design, usage, intervention, and diffusion.

The collaborative design phase emphasizes communication among primary members of the implementation process, including the innovation user (i.e., teacher), change agent, technology support personnel, and technology innovation developers. During the initial stages of an implementation, the members discuss the design and development of the innovation. The change agent and technology support personnel analyze user characteristics such as technology attitudes, technology experience, and attitudes toward change. The obvious theme of the collaborative design phase involves working together with mutual respect. Teachers must be able to communicate their concerns, needs, and ideas without feeling misunderstood or unimportant. The change agent and technology support personnel view the teacher as the authoritative information provider in regard to the types of tasks that the tool can support. In the end, the objective is that the teacher will be motivated to use the technology innovation and that any negative attitudes toward technology will become positive.

The usage phase involves the teacher performing tasks with the innovation. The actual performance provides feedback to the teacher, change agent, technology support personnel, and developers. Information relating to user and innovation efficiency, teacher suggestions, and teacher attitudes is applied to the collaborative design and intervention phases.

The intervention phase incorporates strategies to encourage and sustain use of the technology innovation. The change agent determines the intervention plans by analyzing the current technology attitude as well as teachers' personal characteristics, such as skills, knowledge, and abilities. Interventions might come in the form of individual or group training, and schedules for completing tasks with the innovation. The technology developers make the necessary changes to the technology innovation. These changes should reflect the user's needs and technology experience. Again, these interventions affect the technology attitudes, which can influence usage.

The diffusion phase occurs throughout the implementation process. Usage of the innovation influences unanticipated and anticipated users. The teachers who are using the technology will communicate their level of satisfaction to non-users, or non-users will observe the performance of current users. This interaction with current users can influence the technology attitudes of non-users. The non-users may then decide whether or not they want to use the innovation. If non-users decide to adopt the innovation, they become innovation users at the collaborative design, usage, or intervention phase. The non-user does not have to start at the collaborative design or intervention phase. The new user may directly start using the innovation without any training or collaboration with the change agent and/or technology support.

The model for the adaptive implementation process integrates three different themes: communication, change, and diffusion (see Figure 2). The arrows between the phases reflect relationships, but not necessarily a linear process. The side arrows reflect that user performance and attitudes during one phase can lead back to a previous phase. For example, if the teacher is not satisfied with some of the technology functions during the usage phase, then the collaborative design phase begins again. Furthermore, phases can occur simultaneously. Each element and phase in the process adapts to the results of other phases in order to create a systematic method for successful implementation. Hall and Hord (1987) define change as a "process, not an event" and this process requires time (p. 8). Facilitation of changes occurs best when everyone in the environment, not just the change agent, has responsibility to encourage usage. Communication among users, change agent, technical support, and innovation developers is critical for success (Barrow, 1992).

Figure 2. Adaptive Implementation Process

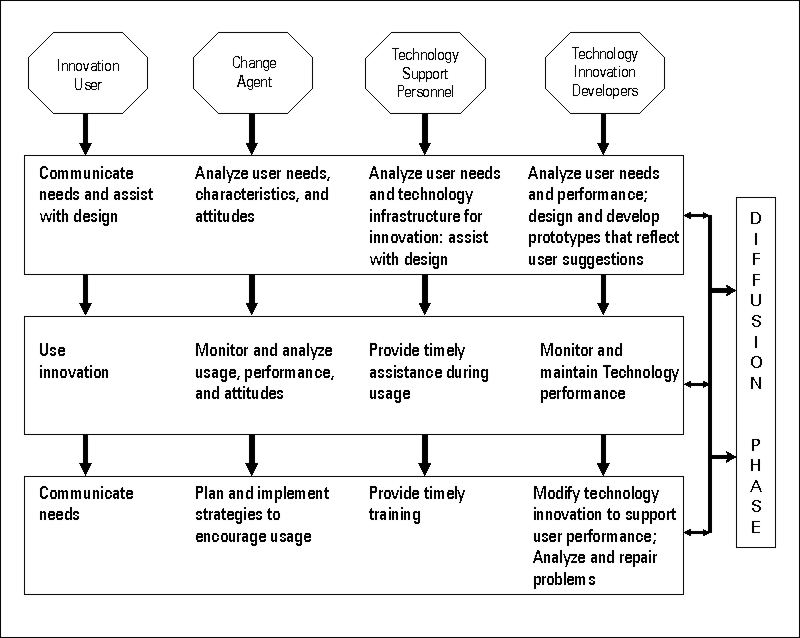

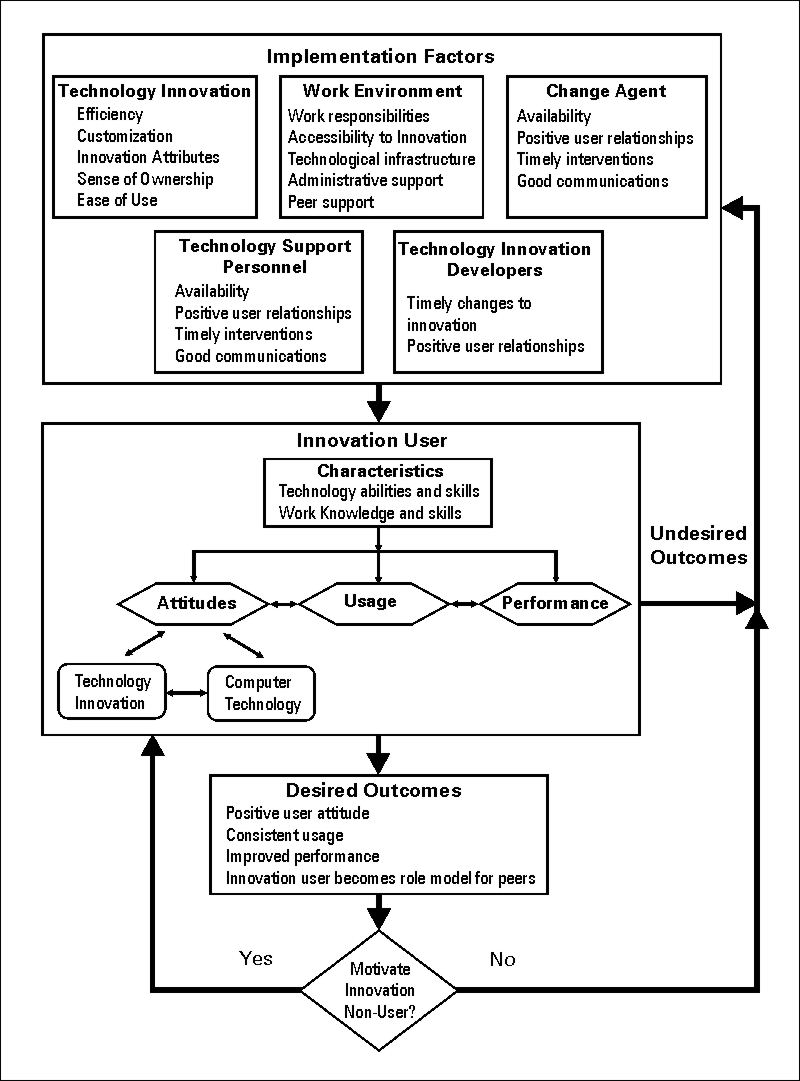

The implementation of performance tools in any work environment does not always contribute to positive changes in performance. Developers and practitioners need to be aware of the different factors that can negatively affect the implementation process. The research findings reveal the factors that can influence usage, performance, and computer technology attitudes (Moore & Orey, 2001). These findings can be important for understanding why a technology innovation is not used or is used infrequently. To encourage positive changes, implementation factors must be considered. Figure 3 provides a visual representation of causal relationships between implementation factors, innovation user, implementation outcomes, and innovation non-user.

Figure 3. Implementation Factors and Causal Relationships

This model shows a process of iteration and feedback that depends on the implementation factors and how they may affect a teacher's usage, performance, and attitude. The arrows between the boxes indicate that there are relationships that affect the other elements in the model. The "values" of the implementation factors can affect the teacher's usage, performance, and attitude. The teacher has several characteristics such as technology and work skills that directly impact how they use the technology. Depending on their performance, a teacher may develop a positive or negative attitude toward the innovation and technology in general.

Technology innovation characteristics such as efficiency, customization, ease of use, "perceived" innovation attributes, and sense of ownership are obvious elements that affect implementation outcomes. Specifically, attitudes toward technology can be affected by the characteristics and functions of the technology. Teachers will resist technology innovations that do not match the context in which they work (Cuban, 1986). For example, a new software package might provide help with grading and reporting. However, it is not user-friendly if it requires more effort to perform the work than anticipated or if it requires more effort than previous methods. These attributes can cause a negative attitude toward the technology, which can then lead to less usage. Teachers may think that it is much easier to manage their information through traditional methods, such as grade books and paper forms, instead of trying to learn to use the software. Obviously, good software applications are worthless if the target users do not have a positive attitude regarding the technology and do not choose to use it.

Work responsibilities, accessibility to the innovation, technological infrastructure, and support from administrators and peers are examples of work environment factors. Teachers expressed concern about having enough time to use the new technology because current work responsibilities demanded a great amount of time. As explained by past research (Cuban, Kirkpatrick, & Peck, 2001), insufficient time to use technology is an issue for teachers. However, the perception that more time is needed to use the technology can be eliminated when teachers realize that the technology is an easier method for performing some of their current work tasks.

The implementation results revealed that accessibility to technology and the technological infrastructure were key factors that impacted usage (Moore & Orey, 2001). During planning periods or free time, teachers would access computers from a lab. Occasionally, teachers would have to wait to use the computers, because the lab would be scheduled for class use. Clearly, having computers in each classroom for teachers to perform their work tasks would eliminate the accessibility issue. In spite of this, research has shown that accessibility may not be enough to encourage usage (Cuban et al., 2001; Zhao, Pugh, Sheldon, & Byers, 2002). For example, the technological infrastructure may make it difficult to access student information from the school databases, which could be integrated in performance tools.

The change agent, technology support personnel, and technology developers must be able to communicate with teachers in a manner that reflects genuine interest for their ideas and concern for improving their performances and/or the technology. This was substantiated by a teacher who stated that her past experience with technology support made her feel inferior (Moore & Orey, 2001). The teacher could not understand the instructions or explanations provided by technology support personnel. In addition, timely interventions, assistance and modifications to the innovation can also motivate usage and affect attitudes toward the technology innovation and technology in general.

The teacher's technology and work proficiency are important factors in the implementation process. It is important that teachers understand the essential functions of technologies (Zhao et al., 2002). Limited technology abilities and skills can have a negative influence on teachers' attitudes toward the innovation, which subsequently reduces their motivation to use it. In addition, attitudes toward any computer technology and specifically toward the technology innovation can directly impact each other. A user may have little knowledge or information about the technology innovation being introduced, but a positive or negative technology attitude can affect the attitude toward the innovation. For example, one of the teachers had a positive attitude toward technology before using Teacher Tools, and indicated that her experience and skills made it easier to try new innovations (Moore & Orey, 2001).

The desired outcomes of the implementation are that the teachers will consistently use the technology after the implementation process has finished, their task performance will improve, and they will have positive attitudes toward using the innovation and any technology. To achieve these outcomes, the process requires an internal advocate, such as a teacher using the innovation, who understands the potential of the system, can motivate users, and can provide support (Forman & Kaplan, 1994). It may become easier to influence non-users when a peer is the change agent, but this may not be feasible in a school environment because teachers have enough tasks without the extra responsibility of determining interventions for usage. However, current innovation users can show the positive results of the usage and influence others to adopt the tool (Zhao et al., 2002; Hubbard & Ottoson, 1997). When people see someone successfully using the system, they know that they have a peer who can relate to their needs and concerns. Teachers learning and working together can create the desired social environment that can promote change and adoption. The innovation will have a better chance of spreading and being adopted when peers, rather than administrators, recommend usage. When the desired outcomes do not occur, change agents must create new interventions, designers must ensure that the technology meets the needs of the user, and technology support must be accessible for additional assistance or training. The arrows from the innovation user to the implementation factors reflect this activity.

The challenge of most collaborative design methodologies is to determine how and when to apply techniques involving users in the design. Teachers must be co-constructors of the technology innovation and involved in the design decisions (Honey, McMillan, & Carrigg, 2000). User involvement is important, but communication among the design team, which includes the user, can be difficult when its members represent a myriad of disciplines, experiences, and responsibilities. Therefore, developing a positive relationship and a common language that includes all members is essential for communicating user needs and design issues.

An additional challenge is determining the amount of customization necessary for software applications. How can we better design tools that fit the needs of users without having to create a new tool for different types of users and work environments? We can begin by having tools that apply to common tasks and performance problems and allowing users to have some customization options concerning the interface and information processing.

The models provide awareness and understanding of the causal relationships and implementation factors that affect usage, performance, and attitudes. Change agents, technology designers and developers, technology support personnel, and implementation researchers can utilize the models as guides for technology innovation projects. In addition, successful technology implementation depends on the specific characteristics and circumstances of the school environment (Van Melle, Cimellaro, & Shulha, 2003). Thus, the work environment factors can be critical decision points when determining intervention strategies.

Although the implementation process can become complex because of the simultaneous events and phases, the people involved can properly manage it through good communication and mutual trust. In the end, the models may help change how technology is presented to skeptical teachers, who are often asked to try some technical solution that is supposedly designed to support and/or improve their performance.

Barrow, C. (1992). Implementing an executive information system: Seven steps for success. In H. J. Watson, R. K. Rainer, & G. Houdeshel (Eds.), Executive information systems: Emergence, development, impact (pp. 107-116). New York: Wiley.

Berry, B., & Ginsberg, R. (1991). Effective schools and teachers' professionalism: Educational policy at a crossroads. In J. Bliss, W. Firestone, & C. Richards (Eds.), Rethinking effective schools: Research and practice (pp. 138-153). Englewood Cliffs, NJ: Prentice Hall.

Creswell, J. W. (1998). Qualitative inquiry and research design. Thousand Oaks, CA: Sage.

Cuban, L. (1986). Teachers and machines: The classroom use of technology since 1920. New York: Teachers College Press.

Cuban, L., Kirkpatrick, H., & Peck, C. (2001). High access and low use of technologies in high school classrooms: Explaining an apparent paradox. American Educational Research Journal, 38(4), 813-834.

Forman, D. C., & Kaplan, S. J. (1994). Continuous learning environments: Online performance support systems. Journal of Instruction Delivery Systems, 8(2), 6-12.

Gery, G. J. (1995). Attributes and behavior of performance centered systems. Performance Improvement Quarterly, 8(1), 47-93.

Gould, J. (1988). How to design usable systems. In M. Helander (Ed.), Handbook of human-computer interaction. North Holland: Elsevier Science.

Hall, G. E., & Hord, S. M. (1987). Change in schools: Facilitating the process. Albany, New York: State University of New York Press.

Honey, M., McMillan, G., & Carrigg, F. (2000). Perspectives on technology and education research: Lessons from the past and present. Journal of Educational Computing Research, 23(1), 5-14.

Hubbard, L. A., & Ottoson, J. M. (1997). When a bottom-up innovation meets itself as a top-down policy. Science Communication, 19(1), 41-55.

Huling-Austin (1992). Research on learning to teach: Implications for teacher induction and mentoring programs. Journal of Teacher Education, 43(3), 173-180.

International Society of Performance Improvement (n.d.). What is human performance technology? Retrieved August 11, 2003, from http://www.ispi.org/index5/whatshpt.htm

Jones, P. (1994). Education as an information based organization. (ERIC Document Reproduction Service No. ED 372 728)

Karat, J. (1997). Evolving the scope of user-centered design. Communications of the ACM, 40(7), 33-38.

Merriam, S. B. (1998). Qualitative research and case study application in education. San Francisco: Jossey-Bass.

Meyer, M., Johnson, J. D., & Ethington, C. (1997). Contrasting attributes of preventive health innovations. Journal of Communication, 47(2), 112-131.

Moore, J. L., & Orey, M. (2001). The implementation of an electronic performance support system for teachers: An examination of usage, performance, and attitudes. Performance Improvement Quarterly, 14(1), 26-56.

Moore, J. L., Orey, M., & Hardy, J. V. (2000). The development of an electronic performance support tool for teachers. Journal of Technology and Teacher Education, 8(1), 29-52.

Patton, M. Q. (1990). Qualitative evaluation and research methods (2nd ed.). Newbury Park, CA: Sage.

Reichardt, C. S., & Cook, T. D. (1979). Beyond qualitative versus quantitative methods. In T. D. Cook & C. S. Reichardt (Eds.), Qualitative and quantitative methods in evaluation research (pp. 7-32). Thousand Oaks, CA: Sage.

Robertson, J. W. (1994). Usability and children's software: A user-centered design methodology. Journal of Computing in Childhood Education, 5(3/4), 257-271.

Rogers, E. M. (1995). Diffusion of innovations (4th ed.). New York: The Free Press.

Rummler, G. A., & Brache, A. P. (1992). Transforming organizations through human performance technology. In H. D. Stolovitch & E. Keeps (Eds.), Handbook of human performance technology: A comprehensive guide for analyzing and solving performance problems in organizations (pp. 32-49). San Francisco: Jossey-Bass.

Schramm, W. (1954). The process and effects of mass communication. Urbana: University of Illinois Press.

Schuler, D., & Namioka, A. (Eds.). (1993). Participatory design: Principles and practices. Hillsdale, NJ: Lawrence Erlbaum Associates.

Stolovitch, H. D., & Keeps, E. (1992). What is human performance technology? In H. D. Stolovitch & E. Keeps (Eds.), Handbook of human performance technology: A comprehensive guide for analyzing and solving performance problems in organizations (pp. 3-13). San Francisco: Jossey-Bass.

Surry, D. W., & Farquhar, J. D. (1997). Diffusion theory and instructional technology. Journal of Instructional Science and Technology, 2(1). Retrieved December 1, 2003, from http://www.usq.edu.au/electpub/e-jist/docs/old/vol2no1/article2.htm

Tripp, S. D., & Bichelmeyer, B. (1990). Rapid prototyping: An alternative instructional design strategy. Educational Technology Research and Development, 38(1), 31-44.

Van Melle, E., Cimellaro, L., & Shulha, L. (2003). A dynamic framework to guide the implementation and evaluation of educational technologies. Education and Information Technologies, 8(3), 267-285.

Whitney, D. M., & Lehman, J. D. (1990). A study of one school system's implementation of a commercial computer managed instruction program. Journal of Educational Computing Research, 6(1), 49-63.

Yin, R. K. (1994). Case study research: Design and methods (2nd ed.). Thousand Oaks, CA: Sage.

Zhao, Y., Pugh, K., Sheldon, S., & Byers, J. L. (2002). Conditions for classroom technology innovations. Teachers College Record, 104(3), 482-515.

© Canadian Journal of Learning and Technology