Author:

Elizabeth Murphy (emurphy@mun.ca) is an Assistant Professor in the Faculty of Education at Memorial University of Newfoundland, Canada. Correspondence concerning this article should be addressed to: Elizabeth Murphy, Faculty of Education, Memorial University, St. John’s, NL, Canada A1B 3X8.

Abstract: This paper reports on a study involving the development and application of an instrument to identify and measure ill-structured problem formulation and resolution (PFR) in online asynchronous discussions (OADs). The instrument was developed by first determining PFR processes relevant to ill-structured problems in professional practice. The processes were derived from a conceptual framework. Further refinement of the instrument was achieved by the addition of indicators of processes. These indicators are derived through application of the instrument to an actual discussion in which the processes are operationalized. Results of the application of the instrument indicated that participants engaged more in problem resolution than in problem formulation. The instrument can be further developed and refined through its application in other contexts by researchers or practitioners interested in the design and use of OADs.

Résumé: Cet article présente une étude consacrée à la création et à l’application d’un instrument permettant d’identifier et de mesurer la formulation et la résolution de problèmes (PFR) mal structurés lors de discussions asynchrones en ligne (OAD). L’instrument a été développé en déterminant tout d’abord quels étaient les processus PFR applicables aux problèmes mal structurés dans la pratique professionnelle. Les processus ont été tirés d’un cadre conceptuel. L’instrument a été perfectionné par l’ajout d’indicateurs de processus. Ces indicateurs découlent de l’application de l’instrument à une véritable discussion dans laquelle les processus ont été opérationnalisés. Les résultats de l’application de l’instrument ont démontré que les participants se sont davantage employés à résoudre les problèmes qu’à les formuler. Cet instrument peut être développé davantage et perfectionné à travers son application à d’autres contextes que ce soit par les chercheurs ou les praticiens intéressés par la conception et l’utilisation d’OAD.

Computer-mediated communication (CMC) in general, and online asynchronous discussions (OADs) in particular, offer many benefits for learning. The time- and place-independent nature of the OAD facilitates self-directed learning (Harasim, 1990) as well as greater flexibility of communication with fewer social constraints (Feenberg, 1987; McComb, 1993). The medium allows for a more reflective learning process, as students are free to read and respond to others’ contributions at their own pace and are able to refer back to the cumulative record of discussions (Harasim, 1993; Kaye, 1992; Morgan, 2000). As Hara, Bonk and Angeli (2000) observe, “such technology provides a permanent record of one’s thoughts for later student reflection and debate” (p. 116). This record creates what Schrage (1995) refers to as “group memory,” transforming the ephemeral nature of an oral conversation into “an act of shared creation” (p. 126).

While many potential educational benefits of OADs have been identified, there remains an imperative to determine whether or not these potential benefits are actually being realized. As Gunawardena, Lowe and Anderson (1997) observe, “the utilization of the medium in education has in many respects outstripped the development of theory on which to base such utilization” (pp. 397-398). It is not surprising, therefore, that Henri (1992) claims in relation to CMC and online discussions that educators are not making use of them to further the process of learning. She grounds her claim by arguing that we have “no means of dealing with the abundance of information...nor of interpreting the elements of meaning which have significance for the learning process” (p. 119). Henri’s concerns are echoed by Blake and Rapanotti (2001) who propose the need for “focussed studies ... on how the technology enhances and redefines academic learning environments” so as to “assess the quality of interactions and the quality of the learning experience” (p. 1). The specific dynamics of an OAD and its role in fostering cognitive and metacognitive development need to be considered (Hara, Bonk & Angeli, 2000).

The increased interest in OADs has led researchers to develop tools and instruments for analysis with the aim of determining the significance of OADs for the learning process and the degree to which they achieve the goals for which they were intended. A number of models and instruments have been developed in the past decade. Henri’s (1992) content-analysis model was pivotal and seminal. Henri highlighted five dimensions of the learning process found in online messages: the participative, interactive, social, cognitive, and metacognitive dimensions.

Other content analysis models and instruments developed include those that have followed Henri, such as Zhu’s (1996) analysis of knowledge construction and meaning negotiation, further refined by Fahy et al. (2000). A similar attempt at analysis was made by Gunawardena, Lowe and Anderson (1997) with their model of collaborative knowledge construction in an online debate. This model was further developed by Kanuka and Anderson (1998). Other studies have focused on attempting to measure specific cognitive processes, such as Bullen’s (1998) analysis of students’ levels of critical thinking in an online university course. Newman, Webb and Cochrane (1995) compared an online with a face-to-face course in an attempt to measure critical thinking skills. Other attempts at content analysis include Hara, Bonk and Angeli’s (2000) study of the social, cognitive, and metacognitive elements in an OAD. Marttunen (1997) analyzed levels of argumentation and counter argumentation, and Garrison, Anderson and Archer’s (2000) “Community of Inquiry” model measures the elements of social, cognitive, and teaching presence in CMC in higher education courses.

Critical thinking skills, social presence, argumentation, and knowledge construction have thus been the focus of attention of researchers interested in content analysis of online asynchronous discussions. However, the review of the literature completed for this paper did not uncover any studies of analysis of problem solving. Like critical thinking, argumentation, and knowledge construction, problem solving also merits attention from researchers interested in assessing the educational benefits of OADs. Problem solving is not only important to the learning process but, as Jonassen (1997) claims, “problem solving is among the most meaningful and important kinds of learning and thinking” (p. 65). Understanding how problem solving occurs in the learning process in general and in OADs in particular can assist in the design of learning opportunities and environments. This understanding can also be of use to those who moderate and evaluate participation in OADs.

The purpose of the study described in this paper was to devise a means to identify and measure problem formulation and resolution in OADs. The goal was to develop an instrument comprised of PFR processes as well as their related indicators. The instrument was developed by first determining PFR processes relevant to ill-structured problems in professional practice. Further refinement of the instrument was achieved by the addition of indicators of processes. These indicators are derived through application of the instrument to an actual discussion.

The paper begins with a conceptual framework related to ill-structured problem formulation and resolution. The framework provides the basis for the determination of processes related to PFR and for a model of these processes. This model of processes provides the basis for the main categories of an instrument to identify and measure PFR in an OAD. Following the framework is a description of the context in which the instrument was further refined through its application to an actual OAD. The application allowed for indicators to be added to each of the processes. The indicators provide insight into how the processes are operationalized and how they manifest themselves in actual contexts of interaction and discussion between learners. The description of the development and application of the instrument provides insight into both the design of the instrument and the degree to which participants in the OAD actually engaged in PFR. Implications for practice and research and for further refinement of the instrument are discussed.

Problems in professional practice are best described as ill-structured problems, which “possess multiple solutions, solution paths, fewer parameters which are less manipulable, and contain uncertainty about which concepts, rules, and principles are necessary for the solution or how they are organized and which solution is best” (Jonassen, 1997, p. 65). As Schön (1987) argues, “The problems of real-world practice do not present themselves to practitioners as well-formed structures. Indeed, they tend not to present themselves as problems at all but as messy, indeterminate situations” (p. 4). Unlike well-structured textbook problems, the ill-structured, real-world problems of professional practice are not pre-defined but emergent (Jonassen, 1997).

Moving from the ambiguous, ill-defined problems of professional practice towards solutions that can be applied and tested requires what Lester (1995) describes as the creative-interpretive model of professional work, in which the practitioner is seen as working in “a complex, dynamic system in which there are less often neat problems than ‘messes’ which defy technical solution” (Lester, 1995, 6). Such problems are not easily approached with a simple step-by-step approach to problem solving. Instead, what is needed is an approach that recognizes the complexity of the processes involved and that considers both problem understanding and resolution. Jonassen’s (1997) model for solving ill-structured problems presents an approach to problem solving that includes various processes as follows:

Jonassen’s first two steps correspond to problem formulation. Problem formulation is a prerequisite to problem solving. As Bransford (1993) argues, “The ability to identify the general problem and generate the sub problems to be solved is crucial for real-world problem solving” (p. 178). Voss and Post (1988) refer to problem formulation as the “representation” phase, and describe it as being “extremely important, in the sense that once a specific representation is developed, a particular solution will follow from that representation; that is, the representation largely determines the solution” (p. 265). From the messy, indeterminate “swamp” (to borrow Schön’s 1987 metaphor) of ill-structured problems in professional practice, the practitioner must identify and formulate a problem to be resolved.

The process of formulation involves understanding the problem within its context. “Situated, real world problems are emergent, not pre-defined. So, the solver must examine the context from which the problem emerged and determine what the nature of the problem is” (Jonassen, 1997, p. 79). Problem formulation also involves building a body of knowledge about the problem area. “How much someone knows about a domain is important to understanding the problem and generating solutions” (Jonassen, 2000, p. 69).

Both these processes within the problem formulation phase - understanding the problem in context, and building knowledge of the problem - can be supported through interaction with others. Meacham and Emont (1989) describe the process of ill-structured or “everyday” problem solving as a uniquely interpersonal process in which “other people facilitate recognition, acceptance, definition of the problem...[and] information [is] gathered with help of other people” (p. 19). Problems in everyday life, they suggest, are solved through “interpersonal, social, problem solving conversations” (p. 10).

This emphasis on interpersonal interaction continues beyond the problem formulation phase into the problem resolution phase, represented by steps three through seven in Jonassen’s model. Ill-structured problems typically do not have a single “right” solution, but multiple possible solutions. It is for this reason that the term problem resolution is favoured in this paper over problem solving. Voss and Post (1988) describe a “good” solution to an ill-structured problem as one in which “the solution must be judged pragmatically, the judgement being made by other members of the problem-solving community” (p. 281). Jonassen (1997) agrees:

Since ill-structured problems typically do not have a single, best solution, a learner’s representation of it should assume the form of an argument for a preferred solution (...) The ‘best solution’ is the one that is most viable, that is, most defensible, the one for which the learner can provide the most cogent argument. (p. 81)

This process of evaluating and testing possible solutions within the context of what Lave and Wenger (1991) have called the “community of practice” requires exposure to multiple perspectives. These multiple perspectives, argue Murphy and Laferrière (2003), can be provided through virtual communities such as those created in OADs:

Participation in the virtual community provide[s] opportunities to view problems in multiple contexts and to see the different ways they might be identified, named, and potentially solved. The collaborative virtual space presents occasions to consider alternate perspectives, contrary ideas, and new insights that might sometimes confirm existing conceptions and ideas, and other times challenge them. (p. 80)

Just as viewing the perspectives of others appears to be essential to the formulation of ill-structured problems, having one’s proposed solutions challenged by others, and defending and revising them accordingly, is crucial to the problem resolution phase. “Articulation of one’s thoughts - externalisation of ideas - enables reflection, and promotes conceptual refinement and deeper understanding. Making one’s beliefs explicit reveals points of disagreement with others and renders problematic what one previously took for granted” (Steeples, Goodyear & Mellar, 1994, p. 87). This argument is consistent with Piaget’s conclusion that “social interaction...leads to a recognition of alternative perspectives, which in turn produces a cognitive conflict and thus motivates the coordination of alternative perspectives to arrive at a solution” (O’Malley, 1995, p. 285).

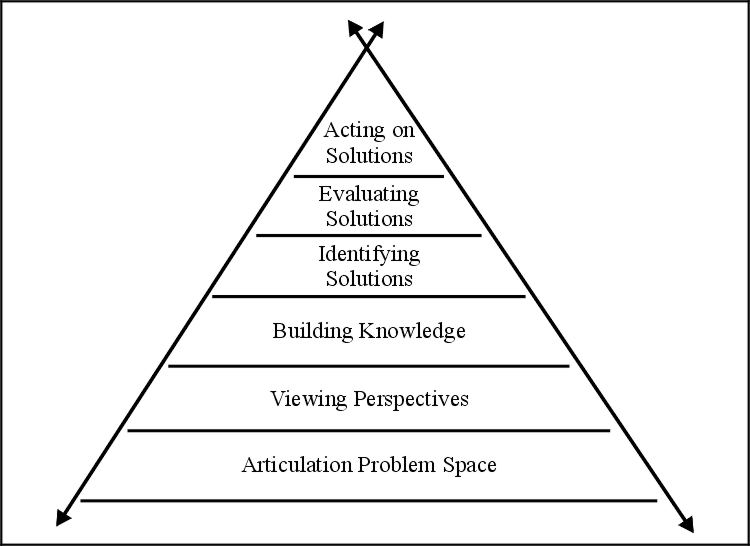

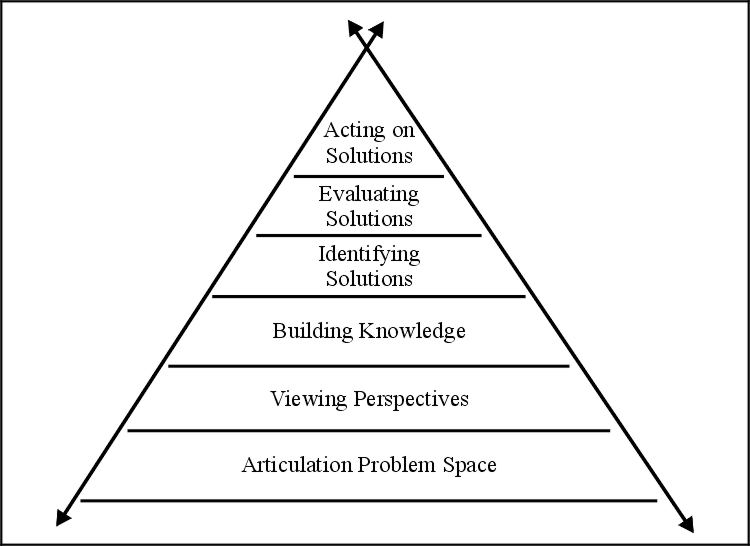

From this consideration of the literature, we can derive processes that support identification of PFR in a context of teaching and learning with OADs. The first process involves consideration of what Jonassen (1997) refers to as “Articulating the Problem Space”. This process involves specifying what the problem is that must be discussed. It involves setting up broad boundaries within which the problem can then be further represented, identified, formulated and understood. The following two processes of “Viewing Perspectives” and “Building Knowledge” involve formulation of the problem through consideration of multiple perspectives on the problem. These processes are essential in a context where problems are messy and ill-structured and include opportunities for interpersonal interaction and negotiation. Through exposure to the perspectives of others and through filling in gaps in knowledge, individuals can begin to formulate the problem in such a way that it is possible to begin considering solutions. Solutions must first be identified through a consideration of multiple perspectives and subsequently critiqued and questioned until consensus and coherence allow for the identification of a valid solution. The final process of “Acting on Solutions” represents the culmination of PFR whereby individuals can apply the results of the processes to a problem in an actual context. This conceptual framework of the processes involved in PFR can be represented in a model as follows:

Figure 1. Problem Formulation and Resolution processes

These six processes provide a starting point for the identification of problem formulation and resolution in an online asynchronous discussion. They can be used as the main categories for an instrument, which can be applied to an OAD in order to measure PFR. However, reliance on these six processes alone may not ensure a high degree of reliability and validity in the use of the instrument. For this reason, there is a need to further define and describe the processes through consideration of the ways in which the processes manifest themselves in actual contexts of discussion in OADs. By specifying indicators of the processes as well as examples of each of these indicators, the identification and subsequent measurement of PFR can be facilitated. Development of indicators of the processes was completed through transcript analysis of an actual OAD. The following section of this paper describes the OAD that was analysed.

The discussion used to develop the PFR instrument was part of a web-based learning module called Solving Problems in Collaborative Environments (SPICE), designed to help practitioners such as social workers, nurses or teachers advance their practice through a process of collaborative problem formulation and resolution (Murphy, 2003). The module was used for a period of four weeks in the context of an undergraduate methods course with a group of eleven pre-service teachers of French as a second or foreign language. The problem specified in advance in the module was that of the lack of use of the target language during instruction.

The first two steps of the SPICE approach, Consult and Gather, emphasize problem formulation, while the final step, Act, emphasizes problem resolution. Both the Consult and Gather steps support problem formulation through exposure to multiple perspectives. These perspectives are represented in video segments of interviews with practitioners as well as in an online bibliography of research articles related to the problem. The final step in the process, Act, provides an opportunity to present solutions to the problem. Participants use a shared workspace to upload a solution in the form of a document such as a short or long-term action plan, a description of an activity, or a lesson plan. Participants are able to view and download each other’s solutions.

Each of the three steps in the SPICE approach to PFR is followed by engagement in Shared Reflection using an OAD. The Shared Reflection invites participants to describe how the multiple perspectives presented in the Consult and Gather phases differ from or resemble their own. Participants are also invited to compare their own perspectives with those of other participants. Following the Act step, participants are provided with an opportunity to discuss the various solutions to the problem proposed by participants. Participation in Shared Reflection through the OAD involves numerous and varied problem formulation processes such as the opportunity to identify and explore causes, contexts, nature and extent of the problem, and to build knowledge of the problem area. It also provides an opportunity for engagement in processes of problem resolution such as proposing and evaluating solutions.

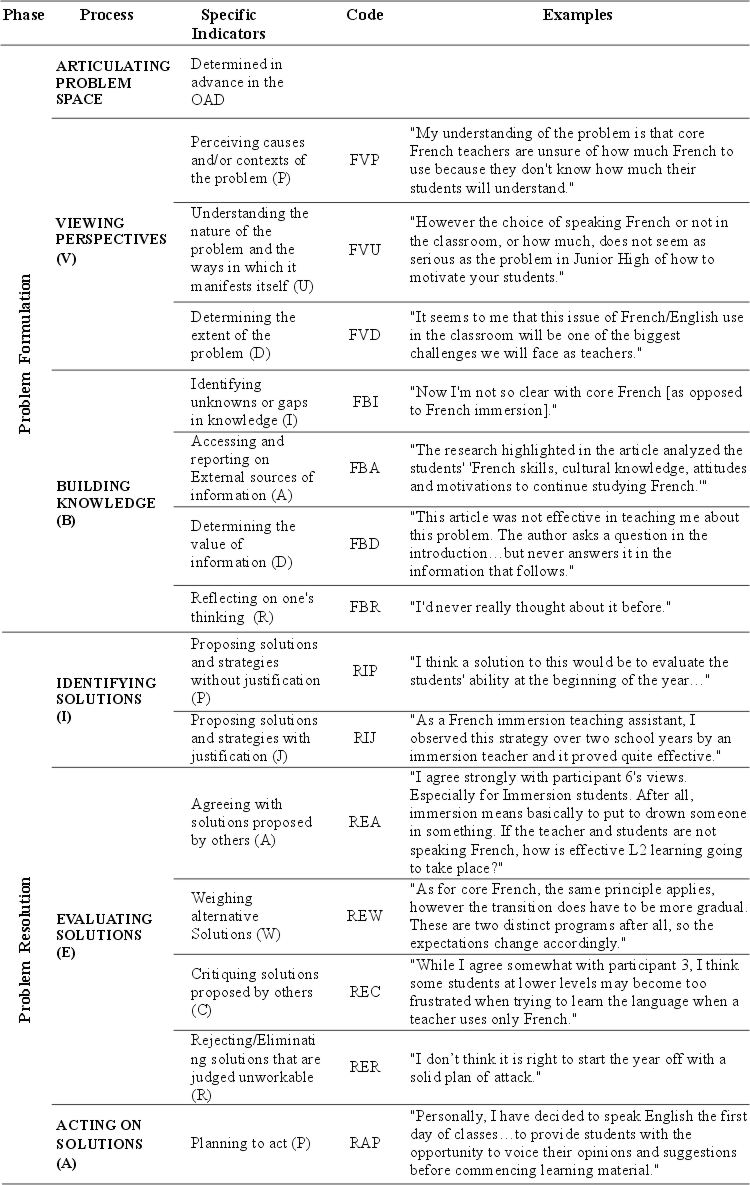

The conceptual framework presented in an earlier section of this paper provides a starting point for the development of an instrument to facilitate identification and measurement of PFR processes. The refinement of the instrument required specifying indicators and examples of the indicators for each process. These indicators and examples were determined through an analysis of the processes in the transcript of the SPICE online asynchronous discussion (OAD). The transcript was analysed simultaneously by two individuals, the principal investigator and a graduate student assistant. Of the 114 messages in the SPICE OAD, 20 contained no evidence of problem formulation or resolution: these were primarily the moderator’s instructions and the participants’ self-introductions. The remaining 94 messages were analysed using the message as the unit of analysis. During the initial analysis, all processes related to problem formulation and resolution that occurred in the transcript were noted. This initial analysis noted only whether a particular process occurred in a message: it did not measure how many times the process occurred within that message, nor did it distinguish between a message in which a given process appeared briefly (for example, in a single sentence) and one in which the same process was developed in detail (in an entire paragraph).

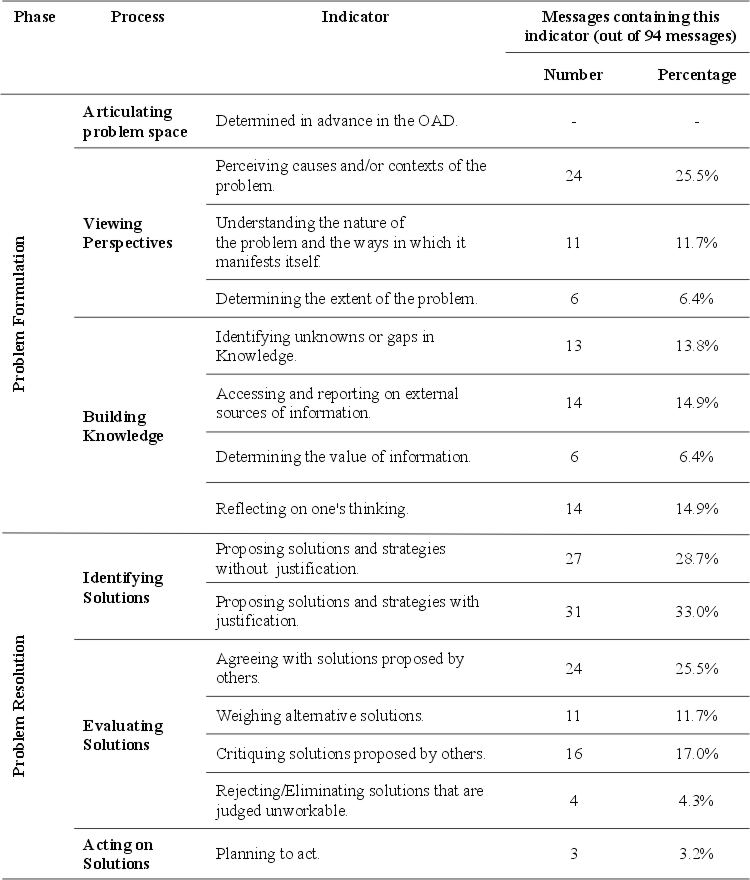

Following the initial analysis, which identified processes related to PFR, the transcript was analysed a second time in order to identify indicators associated with each of the processes. These were grouped together with the associated process and an example and code were provided for each. There were no indicators provided for the process of Articulating the Problem Space as the problem in the SPICE OAD had been articulated in advance for the participants. The result of this analysis is the instrument presented in Table 1.

Table 1

Instrument for identifying and measuring PFR in an OAD

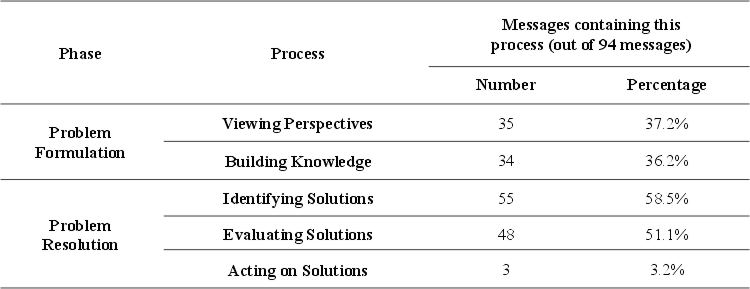

Using the message as the unit of analysis, the SPICE OAD was analyzed in order to measure PFR. The results of the analysis are presented in Tables 2 and 3.

Table 2

Measurement of PFR processes in the SPICE OAD

Table 3

Measurement of PFR indicators in the SPICE OAD

The totals for each set of indicators in Table 3 add up to more than the summary results for each process as shown in Table 2. The difference is visible with the first process of Viewing Perspectives, for which Table 2 indicates that 37.2% of messages reflected this process while Table 3 indicates a total of 43.6% for the same process. This discrepancy occurs because the message was used as the unit of analysis, and most messages contained two or more indicators. For example, in a single message, a participant might identify unknowns or gaps in knowledge (FBI) and might also reflect on his/her own thinking (FBR). In Table 2, this message would be counted as a single incidence of the process Building Knowledge within the Formulation phase (FB), but in Table 3, each indicator would be reported separately and the same message would be counted twice. Similarly, multiple occurrences of the same indicator within a single message were coded only once: if a participant proposed 10 solutions within one message, the table indicates that occurrence as one message containing the code RIP.
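The counting rule described above can be illustrated with a minimal sketch in Python. The three coded messages are hypothetical and are not taken from the SPICE transcript. The sketch also assumes that the first two letters of an indicator code name its process (so that FBI and FBR both belong to FB), which is how the codes are used in the text.

```python
from collections import Counter

# Hypothetical coded messages: each message maps to the list of indicator
# codes found in it. These are illustrative, not data from the SPICE OAD.
coded_messages = {
    "msg1": ["FBI", "FBR"],         # two Building Knowledge indicators
    "msg2": ["RIP", "RIP", "RIJ"],  # RIP appears twice in the same message
    "msg3": ["FBI", "REA"],
}


def process_of(indicator: str) -> str:
    """Assumed convention: the first two letters give the process code."""
    return indicator[:2]


def count_by_message(messages):
    """Count messages (not occurrences) per indicator and per process."""
    indicator_counts = Counter()
    process_counts = Counter()
    for codes in messages.values():
        unique_indicators = set(codes)  # a repeated code counts once
        indicator_counts.update(unique_indicators)
        process_counts.update({process_of(c) for c in unique_indicators})
    return indicator_counts, process_counts


indicator_counts, process_counts = count_by_message(coded_messages)
print(dict(process_counts))
print(dict(indicator_counts))
```

In this toy example the process FB is counted for two messages, while its indicators FBI and FBR together account for three, which is the same kind of gap as the one between Tables 2 and 3.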

The process of developing this instrument highlights some of the methodological issues related to analysis of online asynchronous discussions. One of these issues is the choice of the unit of analysis. Using the message as the unit of analysis has certain advantages: it is more easily defined than other syntactical units such as the sentence or paragraph, and unlike a thematic unit it is, as Rourke et al. (2001) argue, “objectively identifiable,” which leads to a high degree of interrater reliability (p. 12). However, in analyzing an OAD using this method, much important information is lost: as Henri (1992) explains, no distinction is made between lengthy, detailed messages and short, incomplete messages. Henri’s solution was to identify thematic meaning units, which offers many advantages but greatly decreases objectivity and interrater reliability, as coders may disagree on what constitutes a meaning unit.
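Interrater reliability of the kind discussed above can be quantified once two coders have coded the same messages independently. The following sketch in Python computes simple percent agreement and Cohen's kappa. The paper does not report a reliability statistic, so kappa is named here only as one common choice. The two lists of codes are hypothetical, and assigning a single process code per message is a simplification of the instrument, which allows several codes per message.

```python
from collections import Counter


def percent_agreement(coder_a, coder_b):
    """Share of messages to which both coders assigned the same code."""
    matches = sum(a == b for a, b in zip(coder_a, coder_b))
    return matches / len(coder_a)


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa: observed agreement corrected for chance agreement."""
    n = len(coder_a)
    observed = percent_agreement(coder_a, coder_b)
    freq_a = Counter(coder_a)
    freq_b = Counter(coder_b)
    # Chance agreement: product of the coders' marginal proportions per code.
    expected = sum((freq_a[c] / n) * (freq_b[c] / n) for c in freq_a)
    return (observed - expected) / (1 - expected)


# Hypothetical process codes for six messages, one code per message.
coder_a = ["FB", "FB", "RI", "RI", "RE", "RI"]
coder_b = ["FB", "FB", "RI", "RE", "RE", "RI"]

agreement = percent_agreement(coder_a, coder_b)
kappa = cohens_kappa(coder_a, coder_b)
print(f"percent agreement: {agreement:.2f}, kappa: {kappa:.2f}")
```

With the message as the unit, both coders are certain to be coding the same units, so any disagreement reflects the choice of code only. With thematic units, the coders would first have to agree on where each unit begins and ends.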

It would be valuable to observe the results of applying the same instrument to a different OAD transcript in order to test replicability. More importantly, application of the instrument to code other transcripts would provide insight into its validity in terms of the processes and indicators. We can hypothesize that some of the indicators might not be relevant in other contexts and that some indicators could be added to those listed in the instrument. This potential difference in the indicators is likely to occur in instances where the instrument is used to measure PFR in an OAD that was not specifically designed or structured for problem solving as was the SPICE OAD. Measurement could also be completed either at the level of the processes or at the level of the indicators.

Results of the application of the instrument to the SPICE OAD indicate that participants posted more messages coded at the Resolution level, and comparatively fewer coded at the Formulation level. Furthermore, these processes were not followed in a linear manner, with some of the very earliest messages showing evidence of attempts to resolve the problem. One possible explanation for the emphasis on solutions is that the participants in the OAD were pre-service teachers who may have been anxious to find a “quick fix” to problems they expected to face in the classroom. Repeating the study with a group of in-service teachers might confirm the hypothesis that these individuals are less likely to focus on resolution and more likely to focus on problem formulation. However, Kelsey’s (1993) experience of teaching problem formulation in a face-to-face educational administration graduate seminar for in-service administrators suggests that in-service practitioners may not, in fact, be very different from pre-service practitioners in this area. Kelsey found that in his classes, “Initial discussion of problems is usually oriented toward solving them rather than gaining a fuller understanding of them” (p. 248).

More than half the messages in the OAD (58.5%) proposed some solutions or strategies and were coded at the Resolution: Identifying Solutions (RI) level. The instrument distinguished between solutions that were proposed without any justification (RIP) and those for which the participant provided some justification (RIJ). The process of testing possible solutions as outlined by Jonassen (1997) and Voss and Post (1988) is an iterative process in which problem-solvers propose solutions, evaluate and critique one another’s solutions, justify and defend their own solutions, weigh the merits of alternative solutions and refine their solutions based on the process of evaluation. Table 2 shows that, in the SPICE OAD, 51.1% of the messages were coded Resolution: Evaluating Solutions. However, more detailed analysis in Table 3 reveals that 25.5% of the messages included statements agreeing with or accepting solutions proposed by others (REA) (whether in the videos and articles provided, or from other participants in the forum). Yet only 17% of messages included evaluation and critique of solutions, 11.7% included statements weighing alternative solutions, and 4.3% of messages contained statements rejecting or eliminating unworkable solutions. In general, participants posted more messages proposing and defending their own solutions, or accepting solutions proposed by others, than messages evaluating and testing proposed solutions. This finding is congruent with Henri (1995), who found that:

The learners presented their view of the problem, set up hypotheses and justified their point of view without reference to the solutions offered by their colleagues. The teleconferences read like a series of distinct presentations on the same subject. The learners do not mention overlaps, similarities or differences between their presentations and those of their co-learners. (pp. 157-158)

The final process of problem resolution, Acting on Solutions, was least evident in the discussion. Only three messages contained statements in which the participants planned to act on their proposed solutions (RAP). In part, the low number of messages coded Acting on Solutions may also be due to the fact that this study was conducted with pre-service practitioners. Unlike in-service practitioners, they would not have the opportunity to immediately put their solutions into action in practice. The three statements that were coded as Acting on Solutions were the participants’ predictions of what they planned to do when they did face a real classroom of students.
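Because every percentage in this discussion is a share of the 94 analysed messages, the figures can be converted back into approximate message counts. The short Python sketch below does this for some of the percentages quoted above. The counts it prints are inferred by rounding and are not reported in the paper. The helper name `implied_count` is hypothetical.

```python
TOTAL_MESSAGES = 94  # messages analysed, as reported in the paper

# Percentages of messages as quoted in the text.
reported_percentages = {
    "Viewing Perspectives (Table 2)": 37.2,
    "Identifying Solutions (RI)": 58.5,
    "Evaluating Solutions (Table 2)": 51.1,
    "Agreeing with or accepting solutions (REA)": 25.5,
}


def implied_count(percentage, total=TOTAL_MESSAGES):
    """Nearest whole number of messages consistent with a percentage."""
    return round(percentage / 100 * total)


for label, pct in reported_percentages.items():
    n = implied_count(pct)
    print(f"{label}: {pct}% -> about {n} of {TOTAL_MESSAGES} messages")

# The three Acting on Solutions (RAP) messages expressed as a percentage.
rap_share = round(3 / TOTAL_MESSAGES * 100, 1)
print(f"Acting on Solutions (RAP): 3 messages -> about {rap_share}%")
```

Read this way, the three messages coded RAP amount to roughly 3% of the analysed messages, compared with more than half for Identifying Solutions.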

The study reported in this paper involved the development of an instrument for the identification and measurement of problem formulation and resolution in an online asynchronous discussion. The instrument consisted of six processes derived from the literature. Indicators for each of the processes were derived from application of the instrument in an actual OAD designed for problem solving. Further testing of the instrument in other contexts with different transcripts of OADs would allow for refinement and testing of the processes and indicators. Coding might be completed at the level of the processes only, using the indicators to guide the interpretation of the processes.

Although the instrument identifies instances when participants provide justification for their proposed solutions, it does not distinguish among the different types of justification that might be provided, such as justification based on personal experience, justification based on reasoning and explanation, or justification based on research in external sources. Researchers may wish to develop instruments that distinguish among the types of justification provided for solutions.

Results of the application of the instrument to the transcript of the SPICE discussion suggest that problem formulation did occur, but that participants engaged more in problem resolution than in problem formulation. Further studies might investigate how online asynchronous discussions can be structured, organized or moderated to place greater emphasis on problem formulation. The results of such studies would be of direct relevance to instructors interested in promoting problem solving in course-related online asynchronous discussions.

The instrument represents an example of the types of tools we can develop to gain insight and understanding into the processes in which learners engage while participating in online asynchronous discussion in a context of learning. The use of a conceptual framework outlining major processes represents a starting point for the design of such instruments. The application of the instrument to an online discussion represents a means to analyse and understand how these processes manifest themselves in real contexts of discussion and interaction between learners. It also represents a means to further develop and refine the instrument itself. Such instruments can represent a means to promote more effective discussions specifically and more effective learning in general.

This project was partially funded through a grant from Inukshuk Canada and by a grant from the Social Sciences and Humanities Research Council of Canada (SSHRC). Thanks to Graduate Research Assistant Trudy Morgan-Cole for her invaluable assistance with this research and the writing of this paper.

Blake, C.T. & Rapanotti, L. (2001). Mapping interactions in a computer conferencing environment. In P. Dillenbourg, A. Eurelings, & K. Hakkarainen (Eds.), Proceedings of the European Perspectives on Computer Supported Collaborative Learning Conference, EuroCSCL 2001, University of Maastricht. Retrieved April 29, 2003 from http://www.mmi.unimaas.nl/euro-cscl/Papers/163.pdf

Bransford, J.D. (1993). Who Ya Gonna Call? Thoughts About Teaching Problem-Solving. In P. Hallinger, K. Leithwood, & J. Murphy (Eds.), Cognitive Perspectives on Educational Leadership (pp. 171-191). New York: Teachers College Press.

Bullen, M. (1998). Participation and Critical Thinking in Online University Distance Education. Journal of Distance Education, 13(2), 1-32.

Fahy, P.J., Crawford, G., Ally, M., Cookson, P., Keller, V. & Prosser, F. (2000). The Development and Testing of a Tool for Analysis of Computer Mediated Conferencing Transcripts. The Alberta Journal of Educational Research, XLVI(1), 85-88.

Feenberg, A. (1987). Computer conferencing and the Humanities. Instructional Science, 16, 169-186.

Garrison, D. R., Anderson, T. & Archer, W. (2000). Critical Inquiry in a Text-Based Environment: Computer Conferencing in Higher Education. Internet and Higher Education, 11(2), 1-14. Retrieved Feb. 21, 2003 from http://www.atl.ualberta.ca/cmc/CTinTextEnvFinal.pdf

Gunawardena, C., Lowe, C.A. & Anderson, T. (1997). Analysis of a global online debate and the development of an interaction analysis model for examining social construction of knowledge in computer conferencing. Journal of Educational Computing Research, 17(4), 397-431.

Hara, N., Bonk, C. J. & Angeli, C. (2000). Content analysis of online discussion in an applied educational psychology course. Instructional Science, 28(2), 115-152.

Harasim, L. (1990). Online Education: An Environment for Collaboration and Intellectual Amplification. In L. Harasim (Ed.), Online Education: Perspectives on a New Environment (pp. 39-64). New York: Praeger.

Harasim, L. (1993). Global networks: Computers and International Communication. Cambridge, MA: MIT Press.

Henri, F. (1992). Computer conferencing and content analysis. In A. R. Kaye (Ed.), Collaborative Learning Through Computer Conferencing (pp. 117-136). Berlin: Springer-Verlag.

Henri, F. (1995). Distance Learning and Computer-Mediated Communication: Interactive, Quasi-Interactive or Monologue? In C. O’Malley (Ed.), Computer Supported Collaborative Learning (pp. 145-164). Berlin: Springer-Verlag.

Jonassen, D.H. (1997). Instructional Design Models for Well-Structured and Ill-Structured Problem-Solving Learning Outcomes. Educational Technology: Research and Development, 45(1), 65-95.

Jonassen, D.H. (2000). Toward a Meta-Theory of Problem Solving. Educational Technology: Research & Development, 48(4), 63-85.

Kanuka, H. & Anderson, T. (1998). Online social interchange, discord, and knowledge construction. Journal of Distance Education, 13(1), 57-74.

Kaye, A.R. (1992). Learning Together Apart. In A. R. Kaye (Ed.), Collaborative Learning Through Computer Conferencing (pp. 1-24). Berlin: Springer-Verlag.

Kelsey, J.G.T. (1993). Learning from Teaching: Problems, Problem-Formulation, and the Enhancement of Problem-Solving Capability. In P. Hallinger, K. Leithwood, & J. Murphy (Eds.), Cognitive Perspectives on Educational Leadership (pp. 231-252). New York: Teachers College Press.

Lave, J. & Wenger, E. (1991). Situated Learning: Legitimate Peripheral Participation. Cambridge: Cambridge University Press.

Lester, S. (1995). Beyond Knowledge and Competence: Towards a framework for professional education. Capability, 1(3), 44-52. Retrieved March 27, 2003 from http://www.devmts.demon.co.uk/beyond.htm

Marttunen, M. (1997). Electronic mail as a pedagogical delivery system: an analysis of the learning of argumentation. Research in Higher Education, 38(3), 345-363.

McComb, M. (1993). Augmenting a group discussion course with computer-mediated communication in a small college setting. Interpersonal Computing and Technology Journal, 1(3). Retrieved October 3, 2002 from http://jan.ucc.nau.edu/~ipctj/1993/n3/mccomb.txt

Meacham, J.A. & Emont, N.C. (1989). The Interpersonal Basis of Everyday Problem Solving. In J.D. Sinnott (Ed.), Everyday Problem Solving: Theory and Application (pp. 7-23). New York: Praeger.

Morgan, M. C. (2000). Getting beyond the chat: Encouraging and managing online discussions. Retrieved March 25, 2002 from http://cal.bemidji.msus.edu/english/morgan/onlinediscussion/

Murphy, E. (2003). Moving from theory to practice in the design of web-based learning from the perspective of Constructivism. The Journal of Interactive Online Learning, 1(4). Retrieved January 14, 2004 from http://www.ncolr.org/jiol/archives/2003/spring/4/MS02028.pdf

Murphy, E. & Laferrière, T. (2003). Virtual communities for professional development: Helping teachers map the territory in landscapes without bearings. The Alberta Journal of Educational Research, XLIX(1), 71-83.

Newman, D.R., Webb, B., & Cochrane, C. (1995). A content analysis method to measure critical thinking in face-to-face and computer supported group learning. IPCT. Interpersonal Computing and Technology Journal, 3(5), 56-77.

O’Malley, C. (1995). Designing Computer Support for Collaborative Learning. In C. O’Malley (Ed.), Computer Supported Collaborative Learning (pp. 282-297). Berlin: Springer-Verlag.

Rourke, L., Anderson, T., Garrison, D.R., & Archer, W. (2001). Methodological issues in the content analysis of computer conference transcripts. International Journal of Artificial Intelligence in Education, 12(1), 8-22. Retrieved February 17, 2003 from http://www.atl.ualberta.ca/cmc/2Rourke_et_al_Content_Analysis.pdf

Schön, D.A. (1987). Educating the Reflective Practitioner: Toward a New Design for Teaching and Learning in the Professions. San Francisco: Jossey-Bass Limited.

Schrage, M. (1995). No More Teams! Mastering the Dynamics of Creative Collaboration. New York: Doubleday.

Steeples, C., Goodyear, P., & Mellar, H. (1994). Flexible Learning in Higher Education: The use of Computer-Mediated Communications. Computers in Education, 22(1/2), 83-90.

Voss, J.F. & Post, T.A. (1988). On the Solving of Ill-Structured Problems. In M.T.H. Chi, R. Glaser, & M.J. Farr (Eds.), The Nature of Expertise (pp. 261-285). Hillsdale, NJ: Lawrence Erlbaum Associates.

Zhu, E. (1996). Meaning negotiation, knowledge construction, and mentoring in a distance learning course. In Proceedings of Selected Research and Development Presentations at the 18th National Conference of the Association for Educational Communications and Technology, Indianapolis, IN. (ERIC Document Reproduction Service, ED 397 849).

© Canadian Journal of Learning and Technology