About the Authors

Simone Conceição is an Assistant Professor in the Department of Administrative Leadership at the University of Wisconsin-Milwaukee and has research interests in learning objects and the impact of technology in teaching and learning. University of Wisconsin-Milwaukee, School of Education, P.O. Box 413, Milwaukee, WI 53201, email: simonec@uwm.edu.

Rosemary Lehman is Senior Outreach/Distance Education Specialist at the Instructional Communications Systems, University of Wisconsin-Extension and has research interests in learning objects and the educational applications of media and technology. The Pyle Center, 702 Langdon Street, Madison, WI, 53706, email: lehman@ics.uwex.edu.

Little attention has been given to involving the deaf community in distance teaching and learning or to designing courses that relate to their language and culture. This article reports on the design and development of video-based learning objects created to enhance the educational experiences of hearing participants learning American Sign Language (ASL) in a distance learning course and, following the course, on the creation of several new applications for the learning objects. The learning objects were initially created for the web, as a course component for review and rehearsal. The value of the web application, as reported by course participants, led us to consider ways in which the learning objects could be used in a variety of delivery formats: CD-ROM, web-based knowledge repository, and handheld device. The process used to create the learning objects, the new applications, and the lessons learned are described.

Little attention has been paid to involving the deaf community in distance teaching and learning or in the design of courses that concern their language and culture. This article reports on the design and development of video-based learning objects created to enhance the educational experiences of hearing students of ASL in a distance learning course and, following the course, on the creation of several new applications for using the learning objects. The learning objects were originally created for the web, as a course component for review and study. The value of this web application, as reported by course participants, led us to consider ways of using the learning objects in a variety of delivery formats: CD-ROM, web-based knowledge repository, and handheld devices. We also report on the process of creating the learning objects, the new applications, and the lessons learned.

Today, 54 million Americans, 20 percent of the population, have some form of disability that affects their ability to hear, see, or walk (Freedom Initiative, 2001). Nearly 20 million of these Americans, along with 500 million people worldwide, are deaf or hard of hearing (National Deaf Education Network and Clearinghouse, 1989). Historically, society has tended to isolate and segregate people with disabilities, and despite improvements, such discrimination continues to be a serious and pervasive problem in many areas.

To help rectify this type of discrimination, President George H. W. Bush signed the Americans with Disabilities Act into law in July 1990. The Act sets out a clear and comprehensive national mandate, with consistent and enforceable standards, to address discrimination of any type against individuals with disabilities. More recent mandates, such as the Telecommunications Act, Section 508 of the Rehabilitation Act Amendments of 1998, and the Workforce Investment Act, address technology accessibility and instructional design: they require systems to be designed with accessibility built in where possible and to be appropriately designed from an instructional standpoint (Freedom Initiative, 2001). These mandates have raised awareness of the importance of technology accessibility and program design. In spite of this new awareness, however, little consideration has been given to involving the deaf community, their language, and their culture in distance teaching and learning.

One Midwestern university in the United States has been particularly attentive to this need: it hired a deaf instructor specifically to teach technology-based American Sign Language (ASL) courses and to develop them according to instructional design principles for distance learning. The instructor frames these courses within important elements of the deaf culture.

In the summer of 2001, the instructor designed the first technology-based ASL course for distance education. The introductory ASL course, part of a pilot project, was offered to undergraduate students at two campuses as a way to reach learners in areas of the state without access to ASL instruction. The pilot course was delivered via videoconferencing and web-based technologies and involved a deaf instructor, a deaf site coordinator, and 12 hearing students. The students met by videoconference once a week for three hours over seven weeks and also took part in instructional activities on the web through the course management tool Blackboard®. In Blackboard®, learners accessed course content that included 351 video-based "learning objects" covering the ASL Level One signs. The learning objects were linked to a streaming video server so that learners could review the signs after each videoconferencing session.

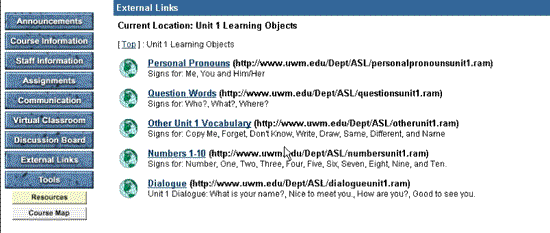

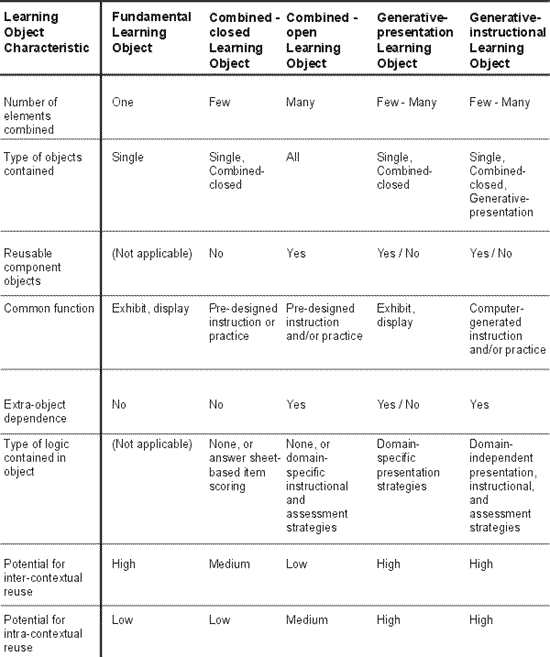

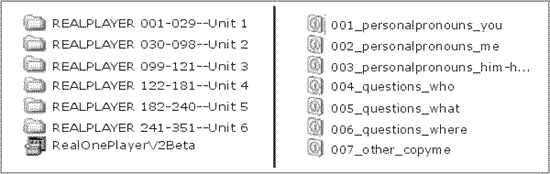

The video-based learning objects were initially created for use on the web as a course component for reviewing and rehearsing the signs after class sessions. Learners logged on to the course in Blackboard® to download the software for displaying the learning objects. After installing the software, learners accessed the video-based learning objects by selecting a link on the Blackboard® web site. The learning objects were produced specifically for the course as short video clips with text, showing the deaf ASL instructor demonstrating words and phrases integral to the course. In Blackboard®, the learning objects were organized into units that paralleled the course content, and within each unit they were divided into categories. (See Figure 1 for the organization of the learning objects, shown in two Blackboard® screens.)

Figure 1. Organization of Learning Objects in Blackboard®.

Upon completion of the ASL pilot course, learners rated the learning objects highly in the course evaluation. For this reason, we decided to extend the learning objects beyond the web-based course for which they had been produced: we placed them on a CD-ROM for easy access, formatted them for global sharing in a knowledge repository, and loaded them onto a handheld device for portable use. This article describes the process used to create the learning objects, the new applications, and the lessons learned.

"Learning objects" is a term that originated from the object-oriented paradigm of computer science. The idea behind object-orientation is that components ("objects") can be reused in multiple contexts (Wiley, 2000). According to the Learning Technology Standards Committee, learning objects are defined as:

Any entity, digital or non-digital, that can be used, re-used or referenced during technology-supported learning. Examples of technology-supported learning applications include computer-based training systems, interactive learning environments, intelligent computer-aided instruction systems, distance learning systems, web-based learning systems and collaborative learning environments (Learning Technology Standards Committee, 2000).

For the purpose of this article, learning objects are digital entities that are deliverable over the web (multiple individuals may access and use them simultaneously), accessible through a CD-ROM (for individual use on a computer at the person's own pace), retrievable from a knowledge repository (for global sharing), and viewable on a handheld device (for individual mobile use).

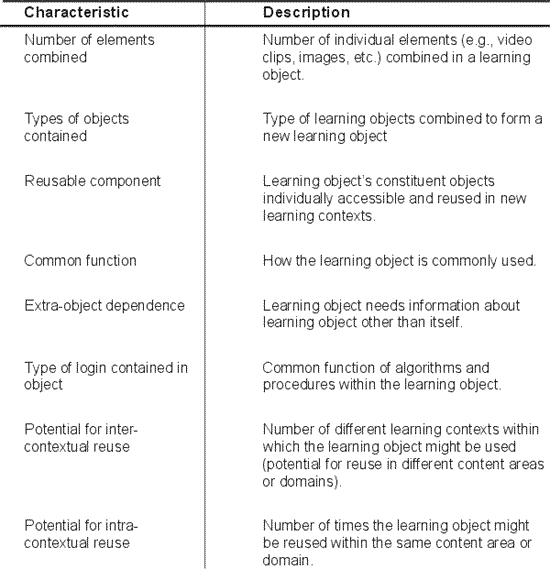

Wiley (2000) distinguishes several types of learning objects and proposes a taxonomy, for use in instructional design, that differentiates them. What separates each type is "the manner in which the object to be classified exhibits certain characteristics" (p. 22). These characteristics remain the same across environments, regardless of where the learning objects reside.

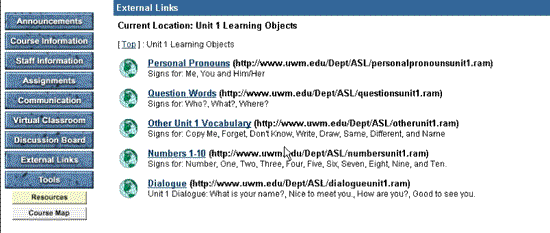

Wiley (2000) suggests the following characteristics of learning objects:

Table 1. Learning Objects’ Characteristics.

Table 2 presents a preliminary taxonomy of learning object types based on Wiley's (2000) description. Using this taxonomy, we contend that the ASL video-based learning objects belong to the Combined-Closed type, because they are single-purpose and provide users with review and practice. Each ASL video-based learning object is an entity unto itself: its parts (text and video images) cannot be separated without losing their meaning. Without the text, the user would not know the meaning of the sign, and without the video image, there would be no sign.

Table 2. Preliminary Taxonomy of Learning Object Types from Wiley (2000). (Used with permission)

The value of the learning objects as small units of educational material, and their acceptance among learners in the ASL pilot course, led us to consider alternate delivery formats, each intended for a different educational application. The first delivery format is the web site used for the pilot ASL course, organized into the curricular units defined by the course. The second delivery format places the learning objects on a CD-ROM so that learners can use them without web access; like the original web site, the CD-ROM is organized by course unit. The third delivery format is also web-based, but its organization is no longer tied to the ASL course. Instead, it offers several ways to organize the learning objects, using database technology to support both searching and browsing, and it is intended for global sharing through a knowledge repository. The fourth delivery format places the learning objects on a handheld device to support mobile use.
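To illustrate the third format's two access paths, the sketch below indexes learning objects for browsing by unit and category and for keyword search on the sign description. It is a minimal in-memory stand-in under stated assumptions, not the repository's database: the field names and sample signs are hypothetical, and the repository's actual meta-data categories are those listed in Table 3.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class LearningObject:
    # Hypothetical meta-data fields, not the repository's actual schema.
    sign_number: int
    unit: int
    category: str
    description: str


class LearningObjectIndex:
    """In-memory stand-in for a repository database: browse by unit/category, search by keyword."""

    def __init__(self, objects):
        self._objects = list(objects)
        # Group objects by (unit, category) once, so browsing is a dictionary lookup.
        self._by_group = defaultdict(list)
        for obj in self._objects:
            self._by_group[(obj.unit, obj.category)].append(obj)

    def browse(self, unit, category):
        """Return the objects in one unit and category, ordered by sign number."""
        return sorted(self._by_group.get((unit, category), []), key=lambda o: o.sign_number)

    def search(self, keyword):
        """Case-insensitive substring match against each sign description."""
        needle = keyword.lower()
        return [o for o in self._objects if needle in o.description.lower()]


# Hypothetical sample data for illustration only.
index = LearningObjectIndex([
    LearningObject(2, 1, "personal pronouns", "you"),
    LearningObject(1, 1, "personal pronouns", "I/me"),
    LearningObject(3, 1, "question words", "what"),
])
print([o.description for o in index.browse(1, "personal pronouns")])  # ['I/me', 'you']
print([o.sign_number for o in index.search("WHAT")])  # [3]
```

Keeping each sign as its own record is what makes both paths possible: browsing reproduces the course's unit and category grouping, while search reaches an individual sign directly.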

Developing the video-based learning objects began with the instructor organizing the signs into units and then into categories (e.g., personal pronouns, question words, vocabulary, and dialogue) according to the content addressed in the course. The instructor was then videotaped performing the signs, and the signs were edited into a video-based format using the following sequence:

The video sequences were grouped into categories, saved in Real Media™ format, and stored on a Real Media™ server. A link to the Real Media™ server was created in the Blackboard® component of the ASL course for retrieval by learners. In this delivery format, the ASL learning objects served as a major review and rehearsal component of the course.

The video-based learning objects and their use received high ratings in the overall course evaluation. Participants in the ASL course called the learning objects "amazing" because they let them review the signs shortly after the signs had been presented in class. One participant stated, "I thought [the learning objects] worked very well because it was a very helpful medium to use." Another said, "the learning objects were a great resource, but cumbersome to use - couldn't go directly to a particular sign, for instance." This comment led us to reevaluate how we might reorganize the signs for future use. Initially, the signs were grouped into categories and each category played as a single Real Media™ clip, which did not allow learners to select individual signs or phrases. Formatting the signs and phrases individually, as well as in categories, would make them more easily searchable and accessible.

We decided to place the edited video-based learning objects on a CD-ROM for two reasons: archiving, and retrieval by learners who could not access the learning objects through the web. The CD-ROM is organized in folders named after each unit. Within each folder, each file name includes the sign number, category, and sign description. The CD-ROM also includes the software for displaying the video clips. (See Figure 2 for the organization of signs in the CD-ROM format.)
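The CD-ROM naming convention described above (one folder per unit; file names carrying sign number, category, and description) can be expressed as a small function. The text does not give the exact pattern, so the folder name `Unit_01`, the zero-padding, the separators, and the `.rm` (Real Media™) extension below are assumptions for illustration only.

```python
import re
from pathlib import PurePosixPath


def slug(text: str) -> str:
    """Lower-case the text and collapse runs of non-alphanumerics into single hyphens."""
    return re.sub(r"[^a-z0-9]+", "-", text.lower()).strip("-")


def cdrom_path(unit: int, sign_number: int, category: str, description: str,
               extension: str = ".rm") -> PurePosixPath:
    """Build a hypothetical path: <unit folder>/<sign number>_<category>_<description><ext>."""
    folder = f"Unit_{unit:02d}"
    filename = f"{sign_number:03d}_{slug(category)}_{slug(description)}{extension}"
    return PurePosixPath(folder) / filename


print(cdrom_path(1, 7, "Question Words", "Where?"))  # Unit_01/007_question-words_where.rm
```

Zero-padding the numbers is one way to keep an alphabetical folder listing in sign order: `002` sorts before `010`, whereas an unpadded `2` would sort after `10`.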

Figure 2. Organization of Signs in the CD-ROM Format

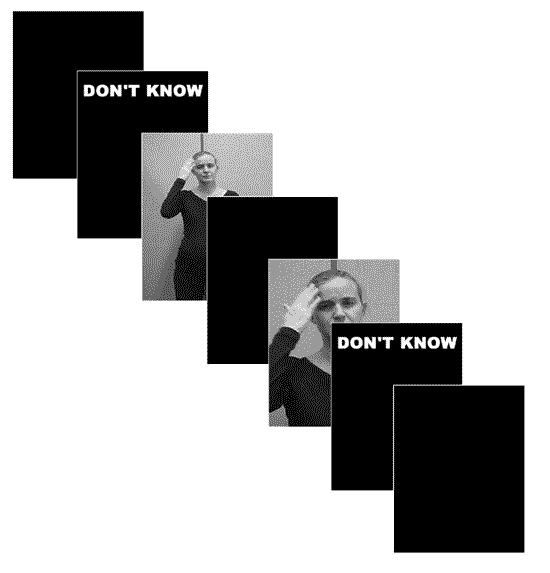

The third delivery format involved reediting and meta-tagging the learning objects for global sharing and use through a knowledge repository. In this format, the categories of learning objects created for the ASL pilot course were further divided into individual signs, reedited, and retagged to support database searching. Each learning object sequence was formatted in the following order using Adobe® Premiere (see Figure 3 for a graphic display of the sequence):

Figure 3. Graphic Display of Learning Object Sequence

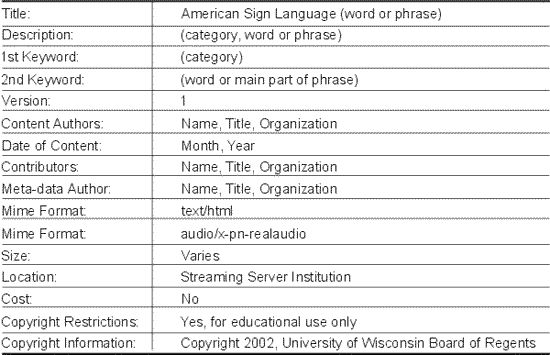

The reediting added three new sections of black to the sequence to provide visual contrast and clarity. To ensure that the learning objects were readily accessible over a variety of modem and network connections and across platforms, we developed and evaluated prototype objects in four video formats (Windows Media™ Player, QuickTime®, RealOne™ Player, and MPEG). Based on this evaluation, the RealOne™ Player was selected as best meeting the usability criteria. All of the learning objects were then formatted for the RealOne™ Player and tagged with the meta-data categories listed in Table 3.

Table 3. Meta-data Categories.

The revised video-based learning objects were then transferred to the Advanced Distributed Learning Co-Laboratory (ADL Co-Lab) repository server. We worked closely with ADL Co-Lab personnel to ensure that the ASL learning objects were SCORM (Sharable Content Object Reference Model) compliant for the repository. According to the ADL Co-Lab (2002), "the SCORM is a collection of specifications adapted from multiple sources to provide a comprehensive suite of e-learning capabilities that enable interoperability, accessibility and reusability of web-based learning content."

The ASL learning objects were then validated with the testing features of the HDF Software TEAMSS repository software to ensure that the learning object repository was correctly configured. TEAMSS required that the meta-data be converted to a particular format based on XML (Extensible Markup Language, the universal format for structured documents and data on the web) (World Wide Web Consortium, 2002), and that each learning object and its meta-data be combined into a single package (a .zip file). These packages were then imported into the repository, and user accounts were created. A web site has been created to explain how to log into the repository to retrieve learning objects (http://www.learningobjects.soe.uwm.edu). Those who wish to access the objects will be asked to describe their likely uses of the learning objects and the purpose(s) for using them. We will keep a log of usage and purposes for future research.
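The packaging requirement described above (XML meta-data bundled with each learning object in a single .zip file) can be sketched with Python's standard library. This is not the TEAMSS import format or a validated SCORM package: the `learningObject` root element, the `metadata.xml` file name, and the fields are hypothetical. SCORM content packages conventionally also carry an `imsmanifest.xml` at the package root, which this sketch does not generate.

```python
import io
import zipfile
import xml.etree.ElementTree as ET


def build_metadata_xml(fields: dict) -> bytes:
    """Serialize flat meta-data fields as a simple XML document (hypothetical schema).

    Each key becomes a child element, so keys must be valid XML tag names.
    """
    root = ET.Element("learningObject")
    for name, value in fields.items():
        ET.SubElement(root, name).text = str(value)
    return ET.tostring(root, encoding="utf-8", xml_declaration=True)


def package_learning_object(video_name: str, video_bytes: bytes, fields: dict) -> bytes:
    """Combine one video clip and its XML meta-data into a single in-memory .zip archive."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(video_name, video_bytes)
        archive.writestr("metadata.xml", build_metadata_xml(fields))
    return buffer.getvalue()


package = package_learning_object(
    "007_where.rm",
    b"placeholder for the encoded video",  # hypothetical stand-in for a real clip
    {"title": "WHERE", "category": "question words", "format": "RealOne"},
)
with zipfile.ZipFile(io.BytesIO(package)) as archive:
    print(sorted(archive.namelist()))  # ['007_where.rm', 'metadata.xml']
```

Building the archive in memory keeps the example self-contained; writing `package` to a file would produce a .zip ready for import into a repository that accepts this layout.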

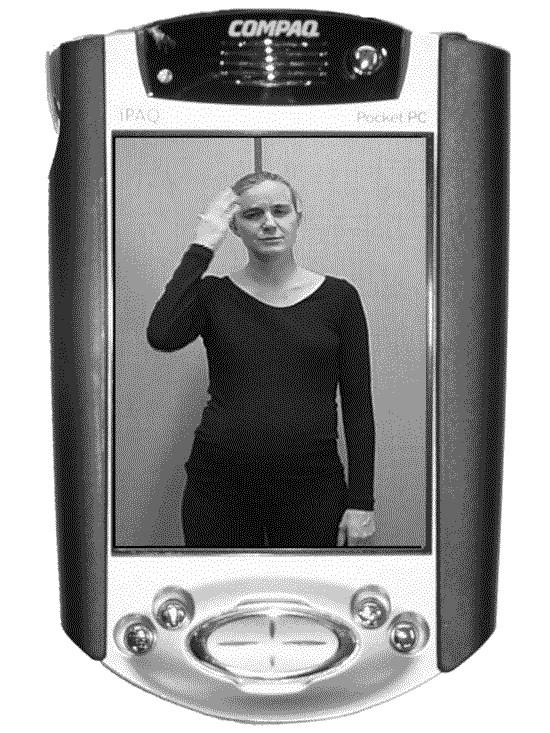

To reach a wide variety of users who prefer self-paced, mobile learning, the video-based ASL learning objects were loaded onto a handheld device (see Figure 4) using Microsoft® ActiveSync®, the synchronization software used with the iPAQ™. The synchronization took only a couple of minutes. The Compaq™ iPAQ™ 3870 was selected as the handheld device because of its picture and motion quality and its memory capacity. The iPAQ™ 3870 can hold all 351 ASL learning objects when they are saved at 15 frames per second in Real Media™ format.

Figure 4. iPAQ 3870

As we worked on the design project, we realized how flexible and accessible these learning objects could become if delivered in a variety of technology formats. When we first made the learning objects available, only learners in the ASL pilot course could use them, through the course web site. Because those learners rated the video-based learning objects highly, we decided to pursue new ways to use them.

In developing the first delivery format, we learned that it was important to write a script that accommodated both the text of each ASL sign and video shots of the instructor. We also became aware that ASL involves not only the hands but also the body and facial expressions, all of which are necessary to present the signs accurately. For this reason, the script included both a mid-shot and a close-up of the instructor performing each sign.

The second delivery format arose from a decision to place the signs on a CD-ROM for archiving and for easy access by users without web access. At this point, we do not know whether the small units of content in the CD-ROM format will affect student learning. An investigation of the effectiveness of the learning objects in this format for teaching introductory ASL to undergraduate students is underway.

The development of the third delivery format, retrieval of the ASL learning objects from a knowledge repository, presented the greatest challenge and taught us valuable lessons that continue to evolve as we work with the ADL Co-Lab. Our learning objects were the first to be imported into the ADL Co-Lab repository, so it was essential to work carefully through the entire process and to make SCORM-compliant decisions about meta-tagging.

During the development of the fourth delivery format, the learning objects were loaded onto the handheld device. Our technical personnel researched handheld devices and selected the Compaq™ iPAQ™ 3870. As we experimented with this device, we learned that we would have to save the original learning objects at a lower frame rate (15 frames per second rather than the original 30) in order to import all 351 sign sequences. Another lesson was the need to insert several frames of black at the beginning and end of each learning object and between the two shots of the instructor signing. These insertions improved clarity: they set off the text and more clearly differentiated the two shots of the instructor from each other and from the text that explained the sign.

Using the learning objects on the iPAQ™ has revealed new considerations. For example, while using the learning objects at 15 frames per second, we discovered an accessory that provides additional memory, which would allow us to use 30 frames per second for the video sequences and so give learners better video quality. We also found that another iPAQ™ accessory can project the learning objects from the iPAQ™ onto a large screen, for demonstrating their instructional uses to a large audience.
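The trade-off behind saving at 15 rather than 30 frames per second, and behind adding memory, can be made concrete with a rough estimate. As a simplifying assumption, the sketch treats each clip's storage as proportional to its frame count (duration × frame rate × average bytes per frame). The clip length, bytes per frame, and memory budgets are hypothetical placeholders, not measurements from the project, and real Real Media™ encoding is bitrate-driven, so it does not scale exactly this way.

```python
def clip_bytes(duration_s: float, fps: int, bytes_per_frame: int) -> int:
    """Rough clip size, assuming storage grows linearly with the number of frames."""
    return round(duration_s * fps) * bytes_per_frame


def fits(num_clips: int, duration_s: float, fps: int, bytes_per_frame: int, budget_bytes: int) -> bool:
    """True if num_clips equal-length clips fit within the memory budget."""
    return num_clips * clip_bytes(duration_s, fps, bytes_per_frame) <= budget_bytes


# Hypothetical numbers: 351 clips of 8 s, 1,000 bytes per frame, 50 MB budget.
print(fits(351, 8, 30, 1_000, 50_000_000))   # False (84,240,000 bytes needed)
print(fits(351, 8, 15, 1_000, 50_000_000))   # True  (42,120,000 bytes needed)
print(fits(351, 8, 30, 1_000, 100_000_000))  # True once the budget is doubled
```

Under these assumed numbers, halving the frame rate halves the total storage, and doubling the available memory restores room for 30 frames per second.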

Over the past decade, a number of mandates have raised awareness of the importance of technology accessibility and program design. This awareness motivated the development of the ASL pilot project and the design of the learning objects to meet the learning needs of students in the context of the deaf culture.

There are several other reasons for designing and developing learning objects for educational purposes. Longmire (2000) suggests that flexibility (use in multiple contexts); ease of updates, searches, and content management; customization; interoperability; facilitation of competency-based learning; and increased value of content are good arguments for creating reusable learning objects. Kaiser (2002) recommends that learning objects be activity-sized (large enough to be used as an activity within a lesson or module, or large enough to be a lesson by itself); accessible (easy to locate and use); durable (retain utility over a long period of time); interoperable (usable on a variety of platforms or course management systems); and reusable (usable to create other learning activities within a given content area or in other content areas).

The development of the ASL video-based learning objects has heightened our awareness of the need to design these objects to fit flexible modes of instruction in order to enhance their accessibility and use for learners. In the process we have come to realize the importance of the requirements of standards, tests, and evaluation.

Because participants in the ASL pilot course valued the ASL learning objects, we are now considering developing ASL video-based signs for all levels of American Sign Language. Feedback from those who have heard about our project and seen the ASL learning objects suggests additional uses, for example, creating video-based learning objects to simulate a medical procedure or to present case studies in psychology. Further, we hope that by sharing our experience, our project can serve as a stimulus and a model for other designers and educators to create learning objects for other content areas.

Advanced Distributed Learning Co-Laboratory (ADL Co-Lab). (2000). Retrieved September 1, 2002, from http://adlnet.org.

Freedom Initiative: Fulfilling America’s Promise to Americans with Disabilities. (2001, February). White House. Retrieved September 1, 2002, from http://www.whitehouse.gov/news/freedominitiative/freedominitiative.html

HDF Software (TEAMSS). (2002). Retrieved September 4, 2002, from http://www.hdf-software.com/o-nas.html

Kaiser, G. E. (2002). Constructing Learning Objects. Retrieved September 1, 2002, from www.cat.cc.md.us/~gkaiser/microrlo/LO.html

Learning Technology Standards Committee. (2000). LOM working draft v4.1. Retrieved September 1, 2002, from http://ltsc.ieee.org/doc/wg12/LOMv4.1.htm

Longmire, W. (2000). A primer on learning objects. ASTD learning circuits. Retrieved September 1, 2002, from www.learningcircuits.org/mar2000/primer.html

Microsoft® ActiveSync®. Available at http://www.microsoft.com/mobile/pocketpc/downloads/activesync35.asp

National Deaf Education Network and Clearinghouse (NCIC). (1989). Gallaudet University.

QuickTime®. Available at http://www.quicktime.com

Wiley, D. A. (2000). Connecting learning objects to instructional design theory: A definition, a metaphor, and a taxonomy. In D. A. Wiley (Ed.), The Instructional Use of Learning Objects: Online Version. Retrieved September 1, 2002, from http://reusability.org/read/chapters/wiley.doc

Windows Media™. Available at http://www.windowsmedia.com/mg/home.asp?

World Wide Web Consortium. (2002). Retrieved September 4, 2002, from http://www.w3.org/XML

© Canadian Journal of Learning and Technology