Bani Arora, University of Bahrain

Abdulghani Al-Hattami, University of Bahrain

This descriptive study examines the effectiveness of ZipGrade, a digital assessment tool, in the context of formative evaluations within classroom settings, focusing on its deployment for multiple-choice question quizzes. This research contributes to the dialogue on the integration of information and communication technology to promote quality education and address the literature gap in providing immediate feedback to enhance the learning outcomes. Drawing on a sample of 63 fourth year B.Ed. students in Bahrain, the study combines quantitative and qualitative methodologies to assess student perceptions about the utility and effectiveness of ZipGrade. Data were collected through a semi-structured questionnaire following the administration of a series of formative tests across selected course segments. The findings reveal a predominantly positive reception of ZipGrade among students, highlighting its ease of use, immediate feedback provision, and potential to more effectively engage learners in the assessment process. Challenges such as the necessity to physically print answer sheets, a predisposition towards multiple-choice questions, and infrastructural and policy-related barriers were identified, suggesting areas for further development and support.

Keywords: digital assessment, education quality, student feedback, teaching effectiveness, ZipGrade

Cette étude descriptive examine l’efficacité de ZipGrade, un outil d’évaluation numérique, dans le contexte des évaluations formatives en classe, en se concentrant sur son déploiement pour les questionnaires à choix multiples. Cette recherche contribue au dialogue sur l’intégration des technologies de l’information et de la communication pour promouvoir une éducation de qualité et combler les lacunes de la littérature concernant la transmission d’une rétroaction immédiate afin d’améliorer les résultats de l’apprentissage. Utilisant un échantillon de 63 personnes étudiantes de quatrième année du B.Éd. à Bahreïn, l’étude combine des méthodologies quantitatives et qualitatives pour évaluer les perceptions des personnes étudiantes sur l’utilité et l’efficacité de ZipGrade. Les données ont été collectées à l’aide d’un questionnaire semi-structuré après l’administration d’une série de tests formatifs sur des segments du cours sélectionnés. Les résultats révèlent un accueil majoritairement positif de ZipGrade parmi les personnes étudiantes, soulignant sa facilité d’utilisation, sa capacité à fournir une rétroaction immédiate et son potentiel à impliquer les personnes étudiantes de manière plus efficace dans le processus d’évaluation. Des défis tels que la nécessité d’imprimer physiquement les feuilles de réponses, une prédisposition envers les questionnaires à choix multiple, et des barrières liées à l'infrastructure et à la politique ont été identifiés, suggérant des éléments pouvant être développés et soutenus davantage dans le futur.

Mots-clés : évaluation numérique, qualité de l’éducation, rétroaction des personnes étudiantes, efficacité de l’enseignement, ZipGrade

Using digital technology for teaching and learning has become necessary, especially since the COVID-19 pandemic and subsequent lockdowns. Several studies (Henderson et al., 2015; Muslu, 2017; Perrotta, 2013; Prensky, 2001) have established the efficacy of digital tools and applications for knowledge retention, independent learning, collaboration, and the learning of life skills. Technology use makes teaching and learning more convenient, efficient, engaging, and enjoyable.

Assessment is as crucial as teaching and learning. It ensures that students and teachers have understood various aspects of a concept and helps identify gaps in learning when the teaching matter has not been fully absorbed (Bhuvaneswari & Elatharasan, 2019). Using technology in assessment has created new possibilities and approaches. Depending on the purpose of assessment, including diagnostic, formative, or summative, different assessment tools have been designed and implemented for subjects like math, science, history, and languages (Bhuvaneswari & Elatharasan, 2019; Şimşek et al., 2017). For larger classrooms and more timely feedback, mobile camera-based educational tools have been tested (Blattler et al., 2023; Wagstaff et al., 2019) and a few digital applications evaluated (Cherner et al., 2016; Muslu, 2017; Şimşek et al., 2017). Further, Lookadoo et al. (2017) also experimented with instructional video games.

Research on the use of digital technology for assessment has focused on students in K-12 schools and in higher education community colleges. It is imperative to consider the attitudes and perceptions of teachers and students toward different types of assessments, including digital assessments (Goodenough, 2019; Guo & Yan, 2019; Hendrith, 2017; Ningsih & Mulyono, 2019; Wagstaff et al., 2019). Over the last decade, the digital software tools examined within their theoretical and practical frameworks have included: Kahoot, Quizlet Live, Socrative, Nearpod, ZipGrade, Snaptron (an education tool for exam grading using machine learning and OMR), instructional video games, Plickers (an educational tool for assessment by scanning student cards), Electronic Based Assessment, GeoGebra, the QR Code Reader, Schoology, and The Physics Classroom.

Many teachers, particularly those from older generations, resist using digital technology, primarily because it is challenging to implement in a traditional classroom (Atkins & Vasu, 2000). Prensky (2001) introduced the term Digital Immigrants for people who were not born into the digital world and adopted aspects of new technologies later in their lives. Prensky calls younger generations Digital Natives, describing them as native speakers of the digital language of computers and the Internet who process information differently. These digital natives want instant gratification for their work, which for educators requires multitasking and parallel thinking. Prensky (2009) suggests that we should focus on the development of what he calls digital wisdom and emphasizes, “Digital technology, I believe, can be used to make us not just smarter but truly wiser” (para. 2).

E-learning initiatives in the Kingdom of Bahrain, where this study was conducted, have been under way since the 1990s through several projects at different levels. Integrating information and communication technology (ICT) in the field of education and training is also part of Bahrain’s Economic Vision 2030 (Abdul Razzak, 2018). The King Hamad Schools of the Future Project (KHSFP), launched in 2003, and the UNESCO King Hamad Prize, launched in 2005, among many other government initiatives, deserve special mention for nurturing a knowledge-based economy and better job prospects for sustainable communities. In higher education in Bahrain, hybrid learning was adopted by only one organization, with 25% face-to-face teaching and learning. E-learning practice in traditional universities relies heavily on learning management systems like Blackboard or Moodle (Abdul Razzak, 2018).

Despite some noteworthy success stories, e-learning in Bahrain schools as well as in higher education faces serious challenges in terms of updated ICT literacy, ICT-based pedagogical training, and the availability of culturally relevant teaching software in the native Arabic language. Moreover, inadequate documented research on e-learning and a lack of alignment between the overall organizational ICT infrastructure and strategies of the education system make the task more daunting (Abdul Razzak, 2018; Ghanem, 2020). To fill this research gap, the present study examined the effectiveness of integrating educational technology using ZipGrade to conduct formative assessments in face-to-face classrooms and provide immediate feedback. It attempted to achieve learning outcomes by reinforcing instruction and promoting the quality of teaching and learning in higher education.

During the transition from face-to-face learning to e-teaching and e-learning due to COVID-19, the need to offer immediate feedback to support better achievement, positive motivation, and self-esteem was emphasized in a study conducted in the same locale by Al-Hattami (2020). The study examined the effectiveness of e-teaching and e-learning in general, with a focus on the implementation of e-assessments in particular. A sample of 118 teachers and 539 students responded to two independent questionnaires, which were analyzed quantitatively. The instructors who participated in the study reported using Kahoot (31%), Google Forms (17%), Socrative (7%), Quizlet (5%), Nearpod (1%), and Mentimeter (1%). The findings support the use of e-learning and technological applications for formative assessment. However, face-to-face communication was preferred for summative assessment (Al-Hattami, 2020).

ZipGrade, one of the apps approved by the Ministry of Education in Bahrain, is known to be an effective and efficient tool and is popular among teachers. Several research studies have reported incorporating it for instant data collection and analysis purposes (Celik & Kara, 2022; Louis, 2016; Serrano, 2023; Suhendar et al., 2020). For teachers, it makes grading effortless without requiring a Scantron machine or sheetfed scanner. Useful data on multiple-choice question assessments can be captured, stored, and quickly reported, offering students immediate feedback on written tests (Cortez et al., 2023).

Once shown to be accurate and efficient, this tool can be recommended to faculty in higher education for its efficacy and time-saving reliability in grading objective type/multiple-choice questions, while also ensuring students receive timely feedback on their attempt.

Although educational technology has a history of about a hundred years, academic research centred on the impact of technology on teaching and learning has been limited. This literature review focuses mainly on the use of recent digital technology in two forms of assessment: formative and summative. The review covers the application of technological tools in class, related technological innovations, action research, and some perception studies. In addition, it describes digital devices in formative assessments and evaluation studies, specifically the information collected on the benefits and challenges of using ZipGrade.

Bhuvaneswari and Elatharasan (2019) reviewed and analyzed recent developments in assessment practice in math education, and their implications, in a paper presented at the National Conference on Technology Enabled Teaching and Learning in Higher Education, held in India. The article lists the strengths and limitations of five assessment tools, namely Kahoot, Quizlet Live, Socrative, Nearpod, and ZipGrade. It further concludes that qualities like persistence, self-regulating behaviour, participation, and a special enthusiasm for learning are developed through assessment tests.

Şimşek et al. (2017) use document analysis of written and visual materials to discuss the assessment and evaluation process. They discuss the potential use of Kahoot, Plickers, Electronic Based Assessment, ZipGrade, and GeoGebra in the preparation and evaluation of exams. The study lists the potential benefits of using software in math assessment and evaluation: developing educators’ technological pedagogical content knowledge; more active participation; creating an interesting and conceptual learning environment; and saving time in assessment. Further, it recommends the integration of ICT in teacher training institutes and in-service training programs.

A study by Wagstaff et al. (2019), presented at the 24th International Conference on Intelligent User Interfaces (IUI ’19 Companion), addressed exam grading using a mobile camera. Conducted at the University of California, Los Angeles (UCLA), it offered a digital solution called Snaptron, an education tool for exam grading using machine learning and optical mark recognition (OMR). Teachers can take a picture of students’ responses on an assessment, send it to a server for grading, and instantly receive the results. The study implemented grading scripts for four different exam formats: bubble style, ZipGrade style, one-word free response, and mixed response. The article concluded that Snaptron was a scalable product for multiple exam formats and offered a low-cost, fast, and strong alternative to traditional exam grading.

Blattler et al. (2023) introduced a “One-shot grading” automatic answer sheet checker at the IEEE International Conference on Industrial Engineering and Engineering Management (IEEM). The system uses machine vision and image processing to grade multiple-choice and true/false questions, and it can be adapted to different examination weights and formats. With ZipGrade as the standard of comparison, the reliability and accuracy of this design were confirmed and reported to be superior.

Goodenough (2019) used action research to examine students’ perspectives on the effective twinning of formative with summative assessment in a West Texas history class. Data were collected from various sources: surveys, six student interviews, one administrator interview, and observations of student conversations. Goodenough (2019) found the process unique and successful in many ways in achieving the school’s goals. The outlined benefits were an opportunity to improve grades from one test to the next, relearn information, and master the concepts. ZipGrade was used to give students instant results on formative and summative assessments and, additionally, for item analysis to guide focused reviews between the two assessments. Another action research study, conducted with 15 teachers in the Philippines by Cortez et al. (2023), revealed that ZipGrade is useful to teachers in checking students’ long tests and unit tests, aids in analyzing assessment results, and reduces the time spent correcting student responses.

Perrotta (2013) explored student and teacher perspectives using survey data from 683 teachers in 24 secondary schools across the United Kingdom to analyze the factors influencing how the benefits of digital technology are being experienced. The study pointed to the need to develop a more rounded picture of the relationship(s) between the social contexts that surrounded schools, teachers, and technology use.

Hendrith (2017) studied the professional development of primary school teachers. The selected teachers were trained through a technology integration workshop and later assisted with implementation through follow-up. An increase in teacher confidence and implementation along with a positive attitude were reported in the study. Similar results have been reported in terms of educators gaining confidence through a greater number of hours spent on technology training in another study conducted by Atkins and Vasu (2000).

Ningsih and Mulyono (2019) also investigated teachers’ use and perception of digital assessment resources in primary and secondary school classrooms in Indonesia by administering a self-reflection survey to 18 educators using Kahoot and ZipGrade for assessment. The findings revealed a positive attitude towards the applications for their practicality, fun learning environment, instant scoring, and direct feedback. However, the respondents also reported challenges related to school policies on mobile phones and inadequate or untimely technical support for teachers.

A case study of 24 senior preservice teachers by Özpınar (2020) examined their perception of Web 2.0 technology-based tools used for assessment, namely Plickers, Kahoot, Edmodo, and ZipGrade. The teachers had generally positive opinions about all the tools for assessing students’ knowledge and progress, while emphasizing the need for reliable Internet and the availability of technical devices. Of the four tools, ZipGrade was rated as the most time-saving (Özpınar, 2020).

Perceptions of both teachers and students were analyzed in an assessment of learning outcomes conducted through ZipGrade in a quasi-experimental Indonesian vocational school study by Suhendar et al. (2020). Respondents reported a favourable experience with the ZipGrade application, finding that it made the work easier and simpler. The application provided information on the results of the validity analysis, the level of difficulty of the questions, and the discriminating power of each item. Such results can further enhance teachers’ understanding of student needs and abilities to perform better in the subject (Suhendar et al., 2020).

Lookadoo et al. (2017) explored the role of educational video games in formative and summative assessments of 172 randomly assigned undergraduate Biology students in an experimental study conducted in the United States. The findings showed an increase in post-test knowledge after playing in all game conditions. It was important to note in this digital device study that formative assessment reflected both better learning and engagement.

Elmahdi et al. (2018) investigated the effectiveness of a classroom response system introducing Plickers for formative assessment. Adopting a descriptive mixed method design, the researchers collected data from 166 students at Bahrain Teachers College using a questionnaire. The findings emphasized the benefits of the digital tool such as improved student participation, saving on learning time, equal participation opportunity for all, and a fun and exciting learning environment. The study recommended the integration of Plickers in classroom teaching for formative assessment to help support learning.

In a more recent study from the University of Missouri, Columbia, Muslu and Siegel (2024) assert that assessment feedback is an essential way to promote student learning. Based on a typological data analysis, ZipGrade was rated high on the visibility dimension, that is, in monitoring the progress of both individual students and the entire class (Muslu & Siegel, 2024). The teacher had immediate access to the statistical information and individual student responses. The study concluded that a balanced approach to technology-based assessment was the most effective way to assess learning outcomes, with apps becoming complementary tools that lead to innovations in pedagogy.

The above-mentioned research introduced mobile technology features and educational applications. Some also report instruments developed to evaluate applications geared towards student learning, but there is no instrument to evaluate teacher resource applications. The five-point evaluation or rating on App Store or Google Play does not explain the meaning of the points/stars for each application. This significant observation was reported by Cherner et al. (2016) where they combined research related to designing instructional technologies and the best practices for supporting teachers. The information obtained was used to construct a comprehensive rubric for assessing teacher resource applications. Cherner et al. (2016) meticulously presented the need for a rubric, the process to create such a rubric, the presentation of said rubric, and a discussion on its merits.

This study focuses on ZipGrade in the context of formative assessment. The literature review also provides specific and comprehensive details about this app. The integration of digital and physical assessment methods offers a blend of benefits and challenges. According to Cherner et al. (2016), one key strength of this approach is its ability to combine digital and physical elements to enhance the assessment process. It provides instant feedback to students, which is facilitated by using phones as scanners. This saves both printing time and effort and allows for the reuse of materials, thereby significantly shortening the time required for assessment and evaluation.

The feedback dimension of the studies on ZipGrade emphasizes visibility, where students receive their individual scores promptly. This immediate feedback mechanism is beneficial for both students and teachers, as it allows teachers to access not only individual scores but also statistical information for item analysis, facilitating a deeper review of student performance (Cherner et al., 2016; Muslu & Siegel, 2024).

The rubric rating for ease of use, classified under Domain C (Design) as dimension C2, highlights the system's user-friendliness (Cherner et al., 2016). Teachers can navigate the system with minimal guidance, with clear, thoughtful icons and visual aids that symbolize complex functions. This design enables them “to understand the visual components on screen, identify interactive buttons, make selections, and execute various tasks” (p. 126), offering a significant advantage over manual scoring methods or the use of systems like Scantron.

However, this method is not without its limitations. The need to physically print answer sheets remains a constraint. The system's preference for multiple-choice questions may limit its applicability across various assessment types. Digital grading systems might make errors, and improper scanning can lead to test items not being scored. Additionally, challenges such as school infrastructure, policies regarding technology and mobile phone availability, teachers’ lack of knowledge about the application, and unreliable Internet access can influence its effectiveness (Ningsih & Mulyono, 2019; Özpınar, 2020).

The present research study aims to analyze the effectiveness and reliability of ZipGrade as a formative assessment tool in a face-to-face classroom administering multiple-choice question tests. The research questions addressed through the study are:

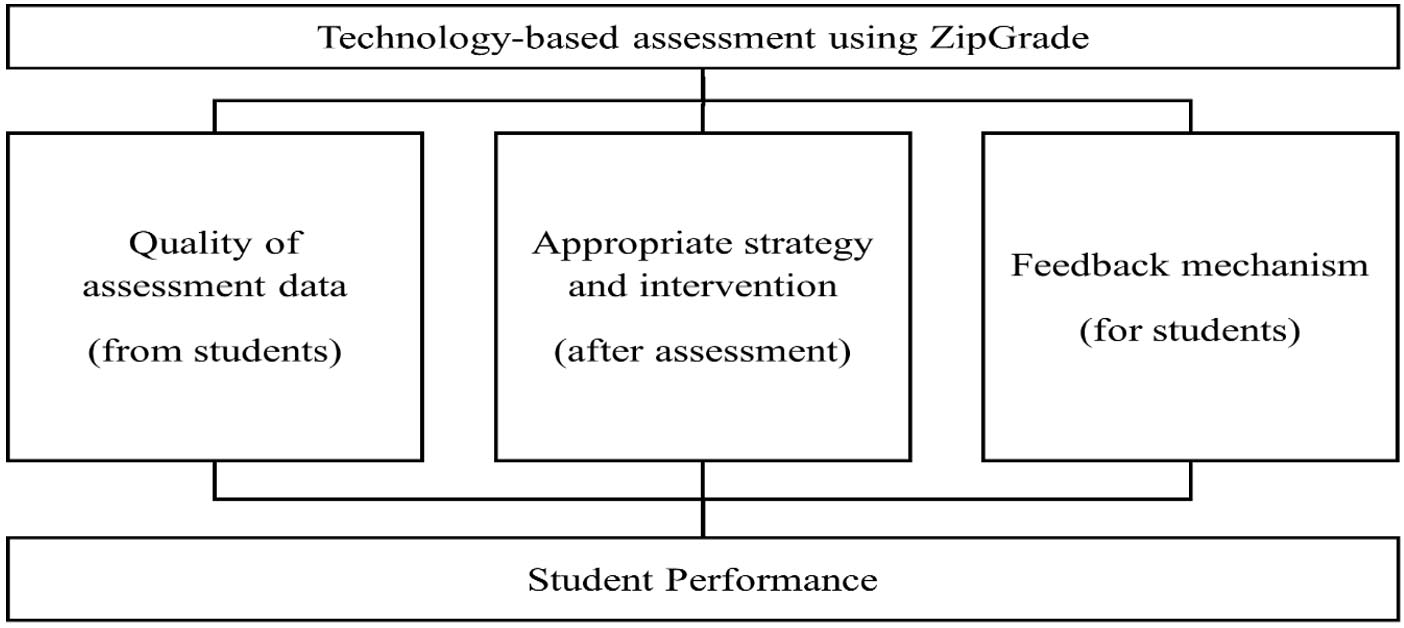

The Technological Pedagogical Content Knowledge (TPACK) framework explains how teachers can effectively integrate technology into their teaching practices. Originally developed by Mishra and Koehler (2006), TPACK addresses the dynamic interplay between three core domains: Content Knowledge (CK), Pedagogical Knowledge (PK), and Technological Knowledge (TK). This interaction allows teachers to deliver content effectively using technology while employing sound pedagogical methods. TPACK serves as a bridge between research and pedagogy, highlighting the complex relationships that drive technology integration in education (Arora & Arora, 2021; Palanas et al., 2019).

This study explores the use of ZipGrade as a technology-based assessment tool. The TPACK framework guides how educators not only use technological tools (TK) but also make informed pedagogical choices (PK) while delivering subject-specific content (CK). This tool helps automate the grading process and provides data-driven insights into students' learning progress. The intersection of these three domains in the TPACK model helps explain how teachers can effectively use ZipGrade to improve student learning outcomes, offering a transferable framework for other educators. Technology-based assessment tools like ZipGrade fit within the TK component of TPACK but require careful alignment with PK and CK to be effective. The TPACK framework highlights that simply using a tool is insufficient; educators must understand how to implement it to foster deeper learning.

ZipGrade serves as more than a grading tool. It enables educators to conduct assessments that provide immediate and accurate feedback, making the process more efficient. By leveraging the data provided by the tool, teachers can identify gaps in students' understanding and apply targeted teaching strategies (PK) that are informed by the assessment results. This process aligns with formative assessment practices, where the goal is to improve student performance through timely intervention.

Moreover, the feedback mechanism is a critical component of this framework. Research on formative assessment emphasizes the importance of feedback in guiding learning (Hattie & Timperley, 2007). In technology-based assessment, this feedback is delivered more quickly and with greater accuracy, allowing for immediate instructional adjustments. Therefore, ZipGrade not only supports efficient assessment but also enhances pedagogical practices by providing actionable insights into student learning.

The adoption of formative assessment theory complements the TPACK framework in this study (Black & Wiliam, 1998). Formative assessment emphasizes ongoing evaluation to monitor student learning and provide feedback that can improve teaching and learning in real time. This theoretical underpinning makes the observations from the use of ZipGrade transferable to other classrooms because it is grounded in well-established educational practices.

By incorporating both TPACK and formative assessment theory, this study creates a conceptual framework that links technology, pedagogy, and assessment. This framework underscores the importance of valid and accurate data from technology-based assessments and the role of feedback mechanisms in promoting effective teaching strategies and interventions (Figure 1).

Figure 1

Conceptual Framework of the Research

Data were collected in the 2023-2024 academic year. A set of formative assessment tests with multiple-choice questions was prepared for shortlisted courses based on a portion of the curriculum. These tests were administered in classrooms and marked using ZipGrade. Student scores were shared with them immediately. This process was repeated with further sets of formative assessment tests from different portions of the curriculum, three to five times for each group of students, to check the consistency of the results. At the end of the semester, students responded to a questionnaire about their perception of the ZipGrade tool, its utility, and its effectiveness. The content validity of the questionnaire was checked by three experts in educational assessment, and its reliability was found to be 0.87. The data were analyzed descriptively (mean and standard deviation) using SPSS.
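The descriptive analysis mentioned above (mean and standard deviation per questionnaire statement) can be sketched in a few lines. The study itself used SPSS; the Python below is only an illustration. The numeric coding of the scale, the intermediate scale labels, and the example responses are assumptions made for this sketch and are not taken from the study.

```python
from statistics import mean, stdev

# Assumed coding of the five-point agreement scale. The paper names only the
# end points ("Strongly Agree", "Strongly Disagree"); the middle labels and
# the 1-5 codes are hypothetical.
SCALE = {
    "Strongly Disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly Agree": 5,
}


def describe(responses):
    """Return (mean, sample standard deviation), each rounded to 2 decimals."""
    codes = [SCALE[r] for r in responses]
    return round(mean(codes), 2), round(stdev(codes), 2)


# Invented responses to one statement, for illustration only (not study data).
example = ["Strongly Agree", "Agree", "Strongly Agree", "Neutral", "Strongly Agree"]

m, sd = describe(example)
print(f"mean={m}, sd={sd}")
```

Here `stdev` is the sample standard deviation (denominator n - 1), which is the usual default in descriptive output; whether the reported values use that convention is not stated in the text.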

The study sample comprised 63 fourth-year students (18 males and 45 females) in the first semester of the academic year. Respondents were preservice teacher candidates from a teachers’ college. Besides the students, the two authors of the present study were the educators who administered the ZipGrade application in their respective sections.

The ZipGrade application is straightforward to use. Teachers print test forms from the ZipGrade website and create class lists in the specified format. They then fill in the correct answers for their tests in the application. Students complete the multiple-choice forms and submit them for marking. Using the ZipGrade application and the built-in tablet camera, the system automatically analyzes student answers and records the grades in a digital gradebook.
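The marking step described above amounts to comparing each student's marked options with an answer key. The following is a minimal sketch of that idea, not ZipGrade's actual implementation: the function names, the data layout, and the example key and responses are all hypothetical, and the optical scanning step is assumed to have already produced the marked options.

```python
def score_sheet(answer_key, responses):
    """Score one student's sheet against the key.

    Returns the number of correct items and, for each missed item, the
    option given and the correct option (the kind of immediate feedback
    described in the study).
    """
    correct = 0
    feedback = {}
    for item, key in answer_key.items():
        given = responses.get(item)  # None if the item is blank or unreadable
        if given == key:
            correct += 1
        else:
            feedback[item] = {"given": given, "correct": key}
    return correct, feedback


def item_difficulty(answer_key, all_responses):
    """Proportion of students answering each item correctly."""
    n = len(all_responses)
    return {
        item: sum(r.get(item) == key for r in all_responses.values()) / n
        for item, key in answer_key.items()
    }


# Hypothetical answer key and student sheets, for illustration only.
key = {1: "B", 2: "D", 3: "A", 4: "C"}
sheets = {
    "student_1": {1: "B", 2: "D", 3: "A", 4: "C"},
    "student_2": {1: "B", 2: "A", 3: "A"},  # item 4 left blank
    "student_3": {1: "C", 2: "D", 3: "A", 4: "C"},
}

for name, resp in sheets.items():
    score, missed = score_sheet(key, resp)
    print(name, f"{score}/{len(key)}", missed)

print(item_difficulty(key, sheets))
```

The per-item proportions give a simple class-level view of which topics may need reteaching, in the spirit of the item analysis the literature attributes to the tool.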

This scoring process highlights the system's efficiency and advantages over traditional scoring methods. In addition, the intuitive buttons and icons in the application are a significant improvement over long hours spent grading manually (Cherner et al., 2016).

A questionnaire was developed and used to collect student perceptions about using ZipGrade for marking. It was designed to assess user experiences and opinions of the ZipGrade grading tool, widely utilized for evaluating multiple-choice tests. Beginning with background information, it collected demographic details and previous exposure to ZipGrade, including program level, gender, familiarity, and usage history with the tool.

Before the survey questionnaire was distributed, the purpose of the research and the voluntary nature of participation were explained to the students. Students were assured that the information provided would be confidential and used for research purposes. A consent letter with a thank you note was printed on the back of the survey form, providing contact details for the two investigators in case of any questions or concerns.

The survey comprised nine statements that respondents rated for their level of agreement on a five-point scale, ranging from "Strongly Agree" to "Strongly Disagree." These statements explored various facets of the user experience, such as the enjoyment, excitement, and satisfaction derived from using ZipGrade, the preference for immediate scoring versus detailed feedback, intentions regarding future use for student motivation, and perceptions of the tool's ease of use and learning curve. An open-ended question for additional comments was also provided, offering participants the opportunity to share insights or suggestions beyond the structured queries.

As English is the second language of the target group, the research tool was translated into Arabic, and the rating statements were provided in both English and Arabic to ensure clarity and comprehension. Student scores on formative assessments of a portion of the courses taught by the researchers were used to examine the students’ performance. Since the application gave the instructors a quick distribution of marks for individual students and the whole class, it also provided them with an opportunity to clarify student doubts and revise topics. Qualitative observations were made throughout the process of conducting formative assessments and reteaching portions from the curriculum where student scores were low.

The survey in this study exclusively targeted participants from the "Year 4" program, capturing both genders but, coincidentally, with a higher female representation. A significant majority of the respondents (50) were introduced to ZipGrade for the first time as part of this study. Additionally, a large portion of the group had known about the tool for "less than a year," which aligns with the high number of first-time introductions. Regarding personal use of ZipGrade, most respondents (59) had not used ZipGrade themselves, with only four indicating personal usage (Table 1).

This distribution suggests that ZipGrade is a relatively new or previously underutilized application among this group of respondents. The skew towards non-use and new introduction could influence perceptions and experiences reported in other parts of the survey, highlighting potential areas for educational focus or resource allocation to enhance familiarity and engagement with ZipGrade as an educational tool.

Table 1

Demographic Details and Background Information

| Variable | Level | Count | Percentage |
|---|---|---|---|
| Gender | Female | 45 | 71.43% |
| | Male | 18 | 28.57% |
| First time introduced to ZipGrade | Yes | 50 | 86.21% |
| | No | 8 | 13.79% |
| Time known this tool | Less than a year | 50 | 78.13% |
| | More than 2 years | 11 | 17.19% |
| | More than a year | 2 | 3.13% |
| Used ZipGrade yourself | Yes | 4 | 6.35% |
| | No | 59 | 93.65% |
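The percentages in Table 1 appear to be computed from the counts of responses to each item rather than from the full sample in every case; for example, the "first time introduced" item has 58 responses. A minimal sketch of that arithmetic follows, using counts copied from the table. The function name is hypothetical, and the treatment of the denominator is an inference from the reported figures.

```python
def percentages(counts):
    """Convert category counts to percentages of the responses to that item."""
    total = sum(counts.values())
    return {level: round(100 * n / total, 2) for level, n in counts.items()}


# Counts copied from Table 1.
gender = {"Female": 45, "Male": 18}
first_time = {"Yes": 50, "No": 8}
used_before = {"Yes": 4, "No": 59}

print(percentages(gender))       # {'Female': 71.43, 'Male': 28.57}
print(percentages(first_time))   # {'Yes': 86.21, 'No': 13.79}
print(percentages(used_before))  # {'Yes': 6.35, 'No': 93.65}
```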

The descriptive statistics results generally indicate a positive perception of ZipGrade among respondents. The mean ratings for all aspects related to the use of ZipGrade for tests fall well above the midpoint of the scale, suggesting that participants found the tool enjoyable, exciting, and fun to use. Specifically, the enjoyment of taking tests with ZipGrade answer forms and the ease of learning the ZipGrade format both received the highest mean ratings of 4.57, accompanied by relatively low standard deviations (0.69 and 0.71, respectively), indicating a strong consensus among participants about these aspects (Table 2).

Similarly, satisfaction with how tests were administered using ZipGrade and the ease of use of the ZipGrade format also scored high (means of 4.51 and 4.56, respectively), further underscoring the tool’s positive reception. Despite the overall enthusiasm, the intention to use ZipGrade for formative assessments in the future received the lowest mean rating (4.30), though still clearly positive, with a slightly higher standard deviation (0.93), suggesting somewhat more variability in participants' intention to use the tool in future teaching practices.

Table 2

Descriptive Statistics of the Responses

| Statements | Mean | Standard Deviation |
|---|---|---|
| Enjoyment of taking tests using ZipGrade forms | 4.57 | 0.69 |
| Excitement to use ZipGrade answer forms | 4.52 | 0.78 |
| Fun in using ZipGrade answer forms | 4.35 | 0.77 |
| Satisfaction with the way tests were administered using ZipGrade | 4.51 | 0.82 |
| Preference for detailed feedback from teachers on multiple-choice questions instead of marks | 4.37 | 0.89 |
| Intention to use ZipGrade for formative assessments in the future | 4.30 | 0.93 |
| ZipGrade format is easy to use | 4.56 | 0.69 |
| ZipGrade format is quick to learn | 4.57 | 0.71 |

Respondents also expressed a preference for receiving detailed feedback from teachers on multiple-choice questions over mere marks (mean of 4.37). This indicates a desire for more formative feedback to support learning, which ZipGrade can facilitate by providing immediate test results.
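For readers who wish to reproduce statistics of the kind reported in Table 2, the sketch below computes the mean and sample standard deviation of one statement's ratings. It assumes a five-point scale with a midpoint of 3, and the ratings shown are hypothetical illustration data, not the study's responses; the function name `summarize` is likewise illustrative.

```python
import statistics

SCALE_MIDPOINT = 3  # assumption: ratings run from 1 to 5


def summarize(ratings):
    """Return (mean, sample standard deviation) for one statement."""
    return statistics.mean(ratings), statistics.stdev(ratings)


# Hypothetical ratings from ten respondents for a single statement.
ratings = [5, 5, 4, 5, 3, 4, 5, 5, 4, 5]
mean, sd = summarize(ratings)

print(f"mean = {mean:.2f}, SD = {sd:.2f}")
print("above midpoint:", mean > SCALE_MIDPOINT)
```

A smaller standard deviation indicates that ratings cluster more tightly around the mean, which is how the consensus among participants is read from Table 2.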

Responses to the open-ended question inviting additional comments were positive and frequently described the application as “interesting”, “easy”, and “useful”. Some respondents favored the app for its “immediate feedback”, and others found the feedback, in which the correct answer is shown beside an incorrect one, to be “constructive”. A couple of concerns were raised: one about giving immediate feedback to a class of over 30 young students, and another about the feedback on answer sheets with overwriting or changed responses. The app administrator noted that answer sheets with changed answers or with writing in the margins could not be scanned. In such cases, the students were given another blank response sheet to redo the assessment and record their answers clearly.

Overall, findings suggest that ZipGrade is well-received by student users who appreciate its user-friendly and engaging format. The slight variability in the intention to use ZipGrade for future assessments suggests that while respondents recognize its value, there may be factors influencing their ability or desire to adopt it more broadly in their teaching practices. This positive feedback, combined with a preference for detailed feedback, points towards a constructive reception of technology-enhanced learning tools that facilitate immediate feedback and engage students in the learning process.

The authors of this study were the faculty members who administered the application in their respective sections of year four classes. One is a frequent user of ZipGrade in classrooms and is familiar with it. The other is a first-time user who received a few hours of training in tailoring the multiple-choice questions to the ZipGrade format, preparing the answer key, and running a few trials before using the application in the classroom. After the initial one-time investment in setting up the app on a mobile phone, creating the answer keys, and printing the answer sheets, the authors found the swift outcome observed in the classroom fascinating and impressive.

An important insight gained was that students seemed more comfortable with such frequent formative assessments with immediate feedback than with traditional end-of-topic review quizzes. With the immediate feedback received and the item analysis provided by ZipGrade, the components on which students scored low were discussed right away. Although the gains in subsequent formative assessments were not analyzed quantitatively, students’ improved competence in tackling assessments with multiple-choice questions was evident from the boost in their confidence. This greater ease of understanding is consistent with the findings of Suhendar et al. (2020). In addition, with this format the formative test questions did not need to be printed separately for every section; the same question papers could be handed to another section along with separate blank answer sheets.

The findings provide valuable insights into the effectiveness and reliability of ZipGrade as a formative assessment tool in the classroom setting. Consistent with previous literature on digital tools for assessment, this study highlights the strengths of ZipGrade, particularly its ability to provide instant feedback, which saves time and effort in grading, and its ease of use, facilitated by intuitive design and visual aids. These findings resonate well with the advantages of ZipGrade discussed by several researchers (Cherner et al., 2016; Cortez et al., 2023; Goodenough, 2019; Ningsih & Mulyono, 2019; Özpınar, 2020; Palanas et al., 2019; Suhendar et al., 2020). The majority of participants reported a positive experience with ZipGrade, emphasizing its potential to enhance learning outcomes by providing immediate results and enabling a more engaging assessment process. Action research by Goodenough (2019) on student perspectives on both formative and summative assessments emphatically supports the scope for improvement in student scores and conceptual understanding in subsequent tests. Muslu and Siegel (2024) reinforce the potential to support the reflection and action dimensions while discussing the guiding impact of feedback through digital application affordances, which include ZipGrade.

This study also shed light on limitations associated with the tool. The requirement to physically print answer sheets and the tool's inclination towards multiple-choice questions may limit its broader applicability across different assessment types. Furthermore, issues such as potential inaccuracies in digital grading and challenges related to school infrastructure, policy on technology use, and varying levels of teacher familiarity with the application were noted. These challenges underscore the necessity for comprehensive support and training for educators, alongside infrastructural improvements, to fully leverage the benefits of ZipGrade in educational settings. The need for teacher training is supported by the studies of Suhendar et al. (2020) and Cortez et al. (2023). The need for basic infrastructure such as Internet access and technological devices to use Web 2.0 tools has also been emphasized by preservice teachers in the study by Özpınar (2020) and primary school teachers in the study by Ningsih and Mulyono (2019).

Survey responses also indicated a strong preference among students for receiving detailed feedback on their assessments, beyond the scores. This resonates with an Indonesian study in which university students cited insufficient feedback as one of the drawbacks of formative assessment (Taufiqullah et al., 2023). The preference suggests a critical opportunity for educators to use tools like ZipGrade not only for efficiency in grading but also as a mechanism for delivering constructive, formative feedback that can further student learning and understanding.

ZipGrade provides information on the results of validity analysis, the level of difficulty of the questions, and the distinguishing features of each item on the assessment (Suhendar et al., 2020). With all these provisions, educators have an opportunity to impart quality education. Students also have an opportunity for individual learning and for assessing their understanding of course content, assuming an independent role when using such instruments. This is precisely “the recommended guideline” (p. 109) for quality assurance of e-learning in higher education programs in Bahrain within the student assessment dimension (Abdul Razzak, 2022).
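To illustrate what item difficulty and item discrimination mean in practice, the sketch below applies the standard classical test theory definitions: difficulty as the proportion of students answering an item correctly, and discrimination as the difference in that proportion between the highest-scoring and lowest-scoring groups. It is an illustration under stated assumptions, not a description of how ZipGrade computes its reports; the function name `item_analysis`, the 27% group size, and the response data are all hypothetical.

```python
def item_analysis(responses, group_fraction=0.27):
    """Return a (difficulty, discrimination) pair for each item.

    responses: one list per student, with 1 = correct and 0 = incorrect.
    group_fraction: share of students in the upper and lower groups
    (0.27 is a common textbook convention, assumed here).
    """
    n_students = len(responses)
    n_items = len(responses[0])
    # Rank students by total score, highest first.
    ranked = sorted(responses, key=sum, reverse=True)
    k = max(1, round(n_students * group_fraction))
    upper, lower = ranked[:k], ranked[-k:]

    results = []
    for j in range(n_items):
        # Difficulty index: proportion of all students answering correctly.
        difficulty = sum(r[j] for r in responses) / n_students
        # Discrimination index: upper-group minus lower-group proportion.
        discrimination = (sum(r[j] for r in upper) - sum(r[j] for r in lower)) / k
        results.append((difficulty, discrimination))
    return results


# Hypothetical data: six students, four multiple-choice items.
responses = [
    [1, 1, 1, 1],
    [1, 1, 1, 0],
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 0, 0],
]
results = item_analysis(responses)
for number, (p, d) in enumerate(results, start=1):
    print(f"Item {number}: difficulty = {p:.2f}, discrimination = {d:.2f}")
```

An item with a low difficulty index was answered correctly by few students, and a higher discrimination index means the item separates stronger from weaker performers more sharply. Items that stand out on either index are natural candidates for immediate class discussion.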

ZipGrade as a formative assessment tool represents a significant step forward in integrating technology into educational assessment practices. This study confirms the utility of ZipGrade in providing a user-friendly, efficient, and engaging method for conducting formative assessments, aligning with the broader goals of e-learning initiatives in Bahrain and beyond. The positive reception of ZipGrade by both students and educators highlights its potential as a transformative tool for formative assessment, contributing to the creation of a more interactive and responsive learning environment.

To maximize its benefits, it is essential for educational institutions to address the limitations identified in this study, including the need for physical infrastructure, teacher training, and policies that support the use of technology in assessment. Palanas et al. (2019) recommend the formulation and implementation of a local policy on digital evaluation. Moreover, educators are encouraged to complement the use of ZipGrade with substantive feedback, leveraging the tool's capabilities to enhance student learning and performance comprehensively.

In conclusion, ZipGrade offers a promising avenue for enhancing the efficiency and effectiveness of formative assessments in classrooms. By addressing its current limitations and focusing on the broader educational context, educators can further harness the potential of this tool to support student learning and the achievement of intended course learning outcomes. As technology evolves, ongoing research and adaptation will be crucial in ensuring that digital assessment tools like ZipGrade remain relevant and effective in meeting the changing needs of educators and students alike.

Abdul Razzak, N. (2018). Bahrain. In A. S. Weber & S. Hamlaoui (Eds.), E-Learning in the Middle East and North Africa (MENA) region (pp. 27-53). Springer. https://doi.org/10.1007/978-3-319-68999-9_2

Abdul Razzak, N. (2022). E-Learning: From an option to an obligation. International Journal of Technology in Education and Science (IJTES), 6(1), 86-110. https://doi.org/10.46328/ijtes.314

Al-Hattami, A. (2020). E-Assessment of students’ performance during the e-teaching and learning. International Journal of Advanced Science and Technology, 29(8), 1537-1547.

Arora, B., & Arora, N. (2021). Web enhanced flipped learning: A case study. Canadian Journal of Learning and Technology, 47(1), 1-18. https://doi.org/10.21432/cjlt27905

Atkins, N. E., & Vasu, E. S. (2000). Measuring knowledge of technology usage and stages of concern about computing: A study of middle school teachers. Journal of Technology and Teacher Education, 8(4), 279-302. Charlottesville, VA: Society for Information Technology & Teacher Education. https://www.learntechlib.org/primary/p/8038/

Bhuvaneswari, S., & Elatharasan, G. (2019). New possibilities for assessment in Mathematics. International Journal of Research in Engineering, 9, 704-711. Special edition. https://indusedu.org/pdfs/IJREISS/IJREISS_2811_32310.pdf

Black, P., & Wiliam, D. (1998). Assessment and classroom learning. Assessment in Education: Principles, Policy & Practice, 5(1), 7-74. https://doi.org/10.1080/0969595980050102

Blattler, A., Sittiwanchai, T., Tareram, P., Chenvigyakit, W., & Silaars, C. (2023, December). One-shot grading: Design and development of an automatic answer sheet checker. In 2023 IEEE International Conference on Industrial Engineering and Engineering Management (IEEM) (pp. 0562-0566). https://doi.org/10.1109/IEEM58616.2023.10406437

Celik, B., & Kara, S. (2022). Students' perceptions of the general English proficiency test: A study on Tishk International University students in Erbil, Iraq. Amazonia Investiga, 11(59), 10-20. https://doi.org/10.34069/AI/2022.59.11.1

Cherner, T., Lee, C-Y., Fegely, A., & Santaniello, L. (2016). A detailed rubric for assessing the quality of teacher resource apps. Journal of Information Technology Education: Innovations in Practice, 15, 117-143. https://doi.org/10.28945/3527

Cortez, M. K. M., Singca, M. D., & Banaag, M. G. E. (2023). Use of ZipGrade: A revolutionary tool for grade 7 teachers in checking students’ long tests and unit tests. EPRA International Journal of Research and Development (IJRD), 8(7), 151-156. https://doi.org/10.36713/epra13848

Elmahdi, I., Al-Hattami, A., & Fawzi, H. (2018). Using technology for formative assessment to improve students’ learning. Turkish Online Journal of Educational Technology, 17(2), 182-188. https://files.eric.ed.gov/fulltext/EJ1176157.pdf

Ghanem, S. (2020). E-learning in higher education to achieve SDG 4: Benefits and challenges. In 2020 Second International Sustainability and Resilience Conference: Technology and Innovation in Building Designs (51154). https://doi.org/10.1109/IEEECONF51154.2020.9319981

Goodenough, B. (2019). Formative and summative test process: The students’ perspectives. Masters of Education in Teaching and Learning, 25. https://digitalcommons.acu.edu/metl/25

Guo, W. Y., & Yan, Z. (2019). Formative and summative assessment in Hong Kong primary schools: Students’ attitudes matter. Assessment in Education: Principles, Policy and Practice, 26(6), 675-699. https://doi.org/10.1080/0969594X.2019.1571993

Hattie, J., & Timperley, H. (2007). The power of feedback. Review of Educational Research, 77(1), 81-112. https://doi.org/10.3102/003465430298487

Henderson, M., Selwyn, N., Finger, G., & Aston, R. (2015). Students’ everyday engagement with digital technology in university: Exploring patterns of use and ‘usefulness.’ Journal of Higher Education Policy and Management, 37(3), 308-319. https://doi.org/10.1080/1360080X.2015.1034424

Hendrith, S. Q. (2017). Professional development of research-based technology strategies: The effectiveness of implementation in the elementary classroom. (Doctoral dissertation). Available from ProQuest Dissertations and Theses database. (10258462) https://www.proquest.com/openview/06230e344d9d6b3d0f3acaa2f65efcfb/1?pq-origsite=gscholar&cbl=18750

Lookadoo, K. L., Bostwick, E. N., Ralston, R., Elizondo, F. J., Wilson, S., Shaw, T. J., & Jensen, M. L. (2017). “I forgot I wasn’t saving the world”: The use of formative and summative assessment in instructional video games for undergraduate Biology. Journal of Science Education and Technology, 26, 597-612. https://doi.org/10.1007/s10956-017-9701-5

Louis, C. M. (2016). Grammar & writing: Pedagogy behind student achievement. St. Catherine University. https://sophia.stkate.edu/maed/131

Ministry of Education. (2020). Guide to digital tools 2019-2020. Kingdom of Bahrain. digitaltoolsguidance/moe/2020

Ministry of Education. (2022). Guide to educational digital tools (2nd ed.). Kingdom of Bahrain. digitaltoolsguidance/version2/moe/2022

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017-1054. https://doi.org/10.1111/j.1467-9620.2006.00684.x

Muslu, N. (2017). Exploring and conceptualizing teacher formative assessment practices and digital applications within a technology-enhanced high school classroom (Doctoral dissertation, University of Missouri-Columbia). https://doi.org/10.32469/10355/66733

Muslu, N., & Siegel, M. A. (2024). Feedback through digital application affordances and teacher practice. Journal of Science Education and Technology, 33, 729-745. https://doi.org/10.1007/s10956-024-10117-9

Ningsih, S. K., & Mulyono, H. (2019). Digital assessment resources in primary and secondary school classrooms: Teachers’ use and perceptions. International Journal of Interactive Mobile Technologies (iJIM), 13(8), 167-173. https://doi.org/10.3991/ijim.v13i08.10730

Özpınar, İ. (2020). Preservice teachers’ use of Web 2.0 tools and perspectives on their use in real classroom environments. Turkish Journal of Computer and Mathematics Education (TURCOMAT), 11(3), 814-841. https://doi.org/10.16949/turkbilmat.736600

Palanas, D. M., Alinsod, A. A., & Capunitan, P. M. (2019). Digital assessment: Empowering 21st century teachers in analyzing students’ performance in Calamba city. JPAIR Institutional Research, 13(1), 1-14. https://doi.org/10.7719/irj.v13i1.779

Perrotta, C. (2013). Do school-level factors influence the educational benefits of digital technology? A critical analysis of teachers’ perceptions. British Journal of Educational Technology, 44(2), 314-327. https://doi.org/10.1111/j.1467-8535.2012.01304.x

Prensky, M. (2001). Digital natives, digital immigrants. On the Horizon, 9(5), MCB University Press.

Prensky, M. (2009). H. sapiens digital: From digital immigrants and digital natives to digital wisdom. Innovate: Journal of Online Education, 5(3). https://www.learntechlib.org/p/104264/

Serrano, A. Z. (2023). Experiential mobile learning: QR code-driven strategy in teaching thermodynamics. Studies in Technology and Education, 2(1), 36-42. https://doi.org/10.55687/ste.v2i1.43

Şimşek, Ö., Bars, M., & Zengin, Y. (2017). The use of information and communication technologies in the assessment and evaluation process in Mathematics instruction. Uluslararası Eğitim Programları ve Öğretim Çalışmaları Dergisi [International Journal of Educational Programs], 7(13), 206-207. https://ijocis.com/index.php/ijocis/article/view/151

Suhendar, Surjono, H. D., Slamet, P. H., & Priyanto. (2020). The effectiveness of the Zipgrade-assisted learning outcomes assessment analysis in promoting Indonesian vocational teachers' competence. International Journal of Innovation, Creativity and Change, 11(5), 701-719. https://www.ijicc.net/images/vol11iss5/11543_Suhendar_2020_E_R.pdf

Taufiqullah, Nindya, M. A., & Rosdiana, I. (2023). Technology-enhanced formative assessment: Unraveling Indonesian EFL learners’ voices. English Review: Journal of English Education, 11(3), 981-990. https://doi.org/10.25134/erjee.v11i3.9076

Wagstaff, B., Lu, C., & Chen, X. A. (2019, March). Automatic exam grading by a mobile camera: Snap a picture to grade your tests. In Companion Proceedings of the 24th International Conference on Intelligent User Interfaces (IUI’19 Companion) (pp. 3-4). https://doi.org/10.1145/3308557.3308661

Bani Arora is a Lecturer at the Bahrain Teachers’ College of the University of Bahrain and holds a Fellowship of the Advance Higher Education Academy in the United Kingdom. Her research interests include teaching English as a second/foreign language (ESL/EFL) to young adults using flipped learning, scaffolding instruction, and educational technology. Email: barora@uob.edu.bh ORCID: https://orcid.org/0000-0003-1564-6712

Abdulghani Al-Hattami is the Head of Initial Teacher Education at Bahrain Teachers College, and specializes in educational psychology, assessment, and research. A Senior Fellow of Advance Higher Education, Abdulghani holds a Ph.D. degree from the University of Pittsburgh, USA. His expertise includes statistical analysis, psychometrics, and English language teaching. Email: aalhattami@uob.edu.bh ORCID: http://orcid.org/0000-0003-1705-2117