Areej Tayem, University of Ottawa, Canada

Isabelle Bourgeois, University of Ottawa, Canada

Despite the widespread adoption of data-based decision making (DBDM) policies in schools around the world, there is limited understanding of how teachers use DBDM in K-12 classrooms and the impact of DBDM training on teacher practices and student outcomes. This scoping review aims to provide an overview of the existing literature on the uses of DBDM by teachers globally and identify gaps in the field. The findings (a) highlight a geographical and temporal clustering, with a notable emphasis on studies conducted in the United States and the Netherlands and published in 2016–2017 and 2020–2022; (b) identify a gap in the literature, particularly in the context of online and secondary schools, where the predominant focus has been on elementary and in-person settings; and (c) suggest that although DBDM interventions have been found helpful in altering teacher practices and student outcomes, there is still a need for more sustainable support to enhance DBDM implementation. The study concludes with recommendations for future DBDM research, building on implications from previous interventions.

Keywords: data-based decision making, K-12 education, teacher practices, student outcomes

Malgré l'adoption généralisée des politiques de prise de décision fondée sur les données probantes (PDDP) dans les écoles à travers le monde, peu d’information est disponible au sujet de l’utilisation de la PDDP par les enseignants œuvrant aux paliers primaire et secondaire, ainsi que sur l'impact de la formation en PDDP sur le comportement des enseignants et les résultats scolaires. Cette recension exploratoire vise à fournir un aperçu des écrits actuels sur les usages de la PDDP par les enseignants à l'échelle mondiale et à identifier les lacunes dans le domaine. Les résultats mettent en évidence les points suivants : (a) les études réalisées jusqu’à présent peuvent être groupées de manière géographique et temporelle, et ont surtout été réalisées aux États-Unis et aux Pays-Bas; de plus la majorité des études ont été publiées en 2016-2017 et 2020-2022 ; (b) il existe des lacunes importantes dans les écrits actuels, notamment par rapport au contexte des écoles en ligne et secondaires - les études actuelles reflètent davantage un intérêt pour les écoles élémentaires et les contextes d’études en présentiel ; et (c) les études recensées suggèrent que, bien que les interventions relatives à la PDDP se soient révélées utiles pour modifier les pratiques des enseignants et les résultats scolaires, les enseignants ont besoin d’un soutien plus durable pour améliorer la mise en œuvre de la PDDP. Enfin, l'article fournit des recommandations pour la recherche sur la PDDP, en s'appuyant sur les conclusions des interventions précédentes.

Mots-clés : prise de décision fondée sur les données probantes, éducation primaire et secondaire, pratiques enseignantes, résultats des élèves

Educational technology developments over the past two decades have resulted in increased amounts of data available to decision-makers and innovative ways of utilizing them, particularly in the kindergarten through Grade 12 (K-12) context (Behrens et al., 2018; Datnow & Hubbard, 2015). Edtech tools, such as learning management systems, adaptive learning platforms, and digital assessments, generate vast amounts of data about student learning behaviours, engagement, and performance. These technologies afford educators real-time access to detailed information about student progress, which allows for more personalized instruction and timely interventions (Weller, 2020). In addition, they facilitate the collection of data that can be used not only for student assessment but also for pedagogical decision-making, helping teachers make data-driven improvements to their teaching practices.

In education, data-based decision making (DBDM) refers to the use of empirical evidence to inform educational policies, practices, and decisions (Schildkamp & Ehren, 2013). At its core, DBDM involves the systematic collection, examination, and utilization of various types of educational data (e.g., summative and formative assessments, behavioural data, attendance records, demographic information, in-class and homework assignments, and classroom observations), with the primary objective of enhancing student performance and tailoring educational strategies to meet students' individual needs (Marsh, 2012; Marsh et al., 2006). Through the analysis of such data, educators can pinpoint areas where students require additional support, adapt instructional strategies, and implement targeted interventions (Carlson et al., 2011; Faber et al., 2018; Heinrich & Good, 2018; Tsai et al., 2019).

The adoption of DBDM has gained global attention in recognition of its significance in ensuring accountability and driving effective decision-making (Cheng, 1999; Cheng & Curtis, 2004; Maier, 2010). On an international scale, numerous interventions and policies have been implemented to encourage teachers and school leaders to embrace DBDM in making well-informed, high-quality decisions. Some of these interventions have focused on specific schools or districts, such as the AZiLDR model in Arizona (Ylimaki & Brunderman, 2019), Instructional Coaches in Texas (Rangel et al., 2017), and The Learning Schools Model in New Zealand (Lai et al., 2014). Others have been larger in scope and involved nationwide efforts, such as the Focus Intervention, a 2-year training project in the Netherlands through which all primary school teachers in Dutch public schools were trained on using DBDM to improve their teaching methods (van Geel et al., 2016). The goal of these interventions, regardless of their scope, is to equip teachers with the knowledge and abilities necessary to implement and sustain DBDM. However, due to policies that caused high accountability pressure in some education systems around the world, such as the No Child Left Behind Act in the United States (Kempf, 2015), mandatory test-based school accountability policies in Germany (Maier, 2010), Ofsted Inspections and League Tables in the United Kingdom (Schildkamp et al., 2017), and the Education Quality and Accountability Office assessments in Ontario, Canada (Kempf, 2015), the focus of DBDM interventions has mainly been on the use of data from standardized assessments to demonstrate school accountability rather than to enhance the teaching and learning experience (Kempf, 2015).

This scoping review aims to comprehensively examine the landscape of DBDM in the K-12 context for instructional purposes by including studies that assess established interventions targeting the use of data by teachers at the classroom level, as well as how, and the extent to which, teachers use data in their daily practice to inform their instruction. A scoping review was chosen because it is well-suited to mapping a broad and diverse body of literature, offering a comprehensive overview of the topic across various methodologies and contexts. This approach helps identify research gaps and provides a global perspective on the impact of DBDM. However, scoping reviews can be challenging because some of the included studies may lack quality and/or methodological rigour. Additionally, although scoping reviews are effective for identifying trends and gaps in the literature, they do not offer in-depth analyses of individual studies, which can limit the researcher’s ability to draw detailed conclusions about specific interventions or outcomes. Despite these challenges, a scoping review is well suited to answering the following research questions: (1) How do teachers around the world engage in DBDM for instructional purposes? and (2) To what extent do DBDM interventions influence teachers’ instructional practices and student outcomes?

The review follows PRISMA-ScR guidelines (Tricco et al., 2018) to systematically map evidence, identify key concepts, and uncover knowledge gaps. This framework ensured a rigorous approach to searching, screening, and selecting articles on data use for decision-making in K-12 education.

With the assistance of a university librarian, a comprehensive search across four electronic databases was conducted (i.e., Education Source, ERIC, Web of Science, and Academic Search Complete). Keywords and controlled vocabulary related to the research question were used as illustrated in Table 1.

Table 1

Keywords and Controlled Vocabulary Used in the Search

| Criteria | Search words |
| --- | --- |
| Context 1 | Data use/ or data-based decision making/ or data-driven decision making/ or learning analytics |
| Context 2 | K-12/ or schools/ or elementary schools/ or middle schools/ or private schools/ or public schools/ or secondary schools/ elementary school students/ or middle school students/ or secondary school students/ secondary school teachers/ or public school teachers/ or elementary school teachers/ or high school teachers/ or junior high school teachers/ or middle school teachers/ (school* or kindergarten*). ti,ab |

Note. Key terms were searched in article titles and abstracts using the ti,ab field code to ensure relevance and precision in the results.

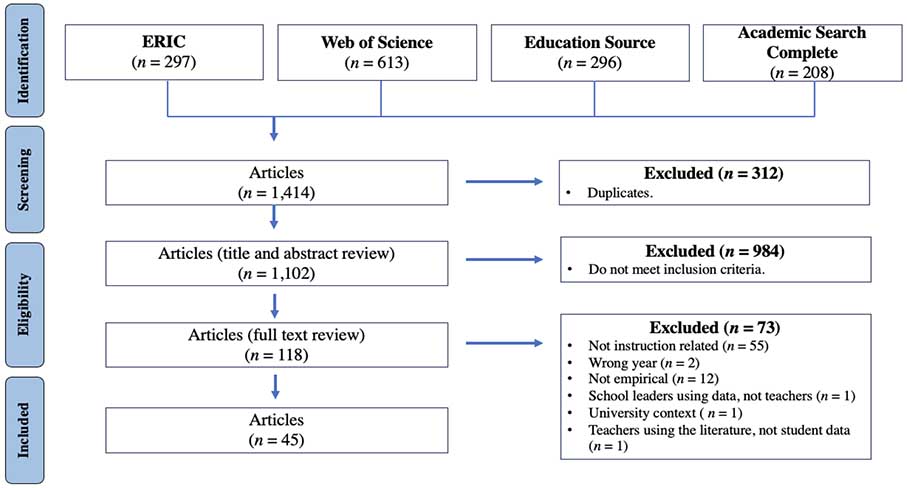

To be included in the review, studies had to be conducted in K-12 settings and published in English between 2013 and 2023. Studies also needed to explore how teachers use data to improve teaching practices and make pedagogical decisions at the classroom level. Studies examining data use by school leaders or districts, or for purposes other than teaching improvement, such as accountability, were excluded. The initial search identified 1,414 articles, of which 312 were duplicates and were removed. Interrater reliability was assessed through independent screening of 5% of the articles, which showed 92% agreement; discrepancies were resolved through follow-up discussions. Following the PRISMA-ScR flow diagram (Tricco et al., 2018), 984 articles were excluded because they did not meet the inclusion criteria. A further 73 articles were excluded during the screening process for the following reasons: 55 were not instruction-related, 12 were not empirical, two were published outside the 2013–2023 window, one focused on data use by school leaders rather than by teachers, one was set in a university context, and two involved teachers using literature rather than student data. Ultimately, 45 articles were selected for data extraction (Figure 1).
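The screening counts reported above can be checked with simple arithmetic. The following is a minimal sketch in Python; the variable names and the short labels for the exclusion reasons are illustrative choices made here, not terms used by the review's authors.

```python
# Counts as reported in the screening description above.
identified = 1414            # records returned by the database search
duplicates = 312             # duplicates removed
excluded_on_criteria = 984   # did not meet the inclusion criteria

# Reasons reported for the further 73 exclusions during screening
# (labels are shortened paraphrases, used only for this sketch).
screening_exclusions = {
    "not instruction-related": 55,
    "not empirical": 12,
    "outside publication window": 2,
    "school leaders' data use": 1,
    "university context": 1,
    "literature rather than student data": 2,
}

after_deduplication = identified - duplicates
after_criteria = after_deduplication - excluded_on_criteria
included = after_criteria - sum(screening_exclusions.values())

print(after_deduplication, after_criteria, included)  # 1102 118 45
```

Each stage subtracts from the previous one, so the final value reproduces the 45 articles retained for data extraction.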

Figure 1

PRISMA-ScR Diagram

Note. Adapted from Tricco et al. (2018).

For a systematic and flexible data extraction process, a template was developed to capture comprehensive information that included article identifiers and overview, context of the study, DBDM intervention details, and outcomes of the intervention (Table 2).

Table 2

Elements in the Data Extraction Template

| Variables | Detailed elements |
| --- | --- |
| Article identifiers | author, title, journal, year |
| Context of the study | country, subject matter, teaching environment (i.e., online/in-person), grade level |
| Aim and research design | aim of the study, number of teachers, number of students, research design |
| DBDM intervention details | intervention type, sources of data used by teachers, previous DBDM professional development, duration of the intervention, reported outcome on teacher practices, reported outcome on students’ academic performance, implications regarding training or challenges |

This review has several limitations. It includes English-language studies only, potentially missing relevant research published in other languages. Additionally, the review’s data, gathered in June 2023, may not cover the most recent studies, particularly those on online learning published after that date. Finally, the focus was primarily on the effects of DBDM interventions on teacher practices and student outcomes, possibly overlooking other variables like school culture and leadership support that might impact intervention effectiveness.

To provide succinct responses to the two research questions, the findings are organized into two main sections. First, an overview of the research on DBDM will provide key contextual elements that describe the body of studies included as part of the review, and second, a more in-depth analysis of the findings of the included studies will focus on the effects of DBDM interventions on teaching practices.

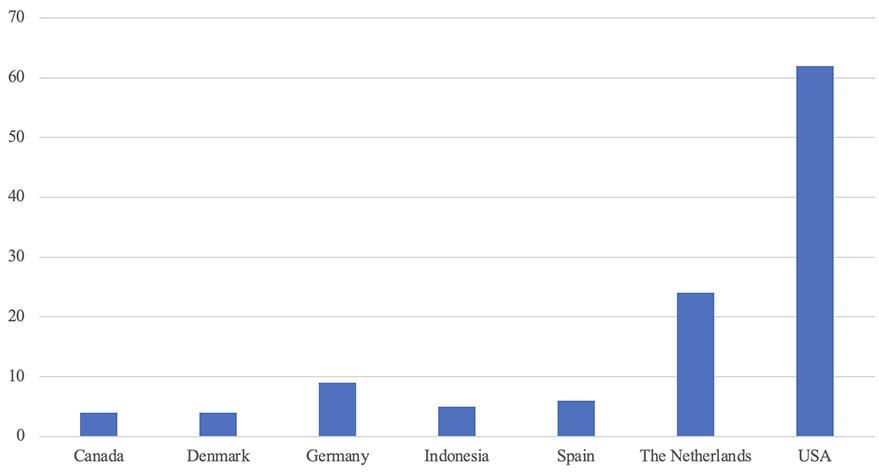

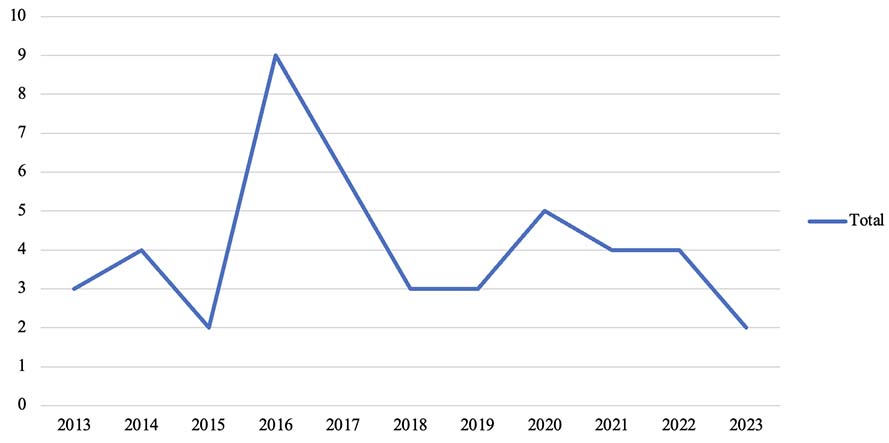

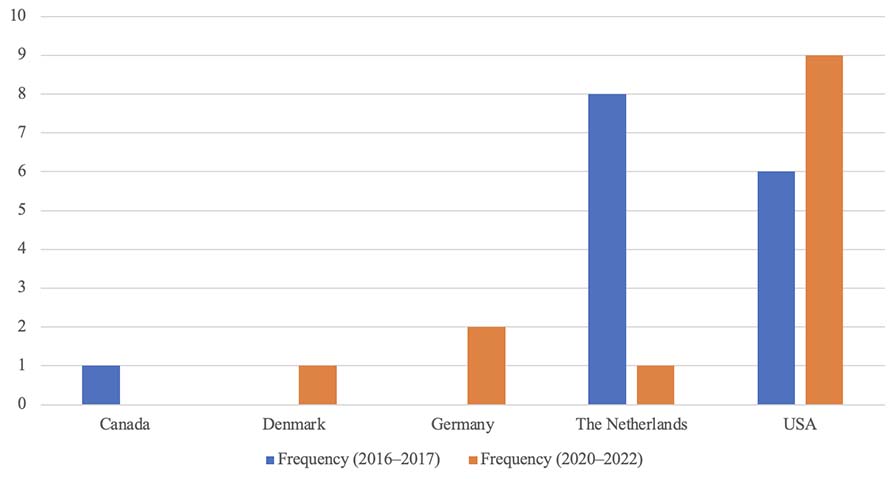

A critical examination of the included studies reveals a notable concentration of research in the United States (62%) and the Netherlands (25%), clustered around two key periods: 2016–2017 and 2020–2022. Countries such as Canada, Denmark, Germany, Indonesia, and Spain also contributed to research in this field, but together they account for almost 13% of the studies, reflecting a more limited engagement with DBDM in schools. Figure 2 shows the distribution of studies by country and Figure 3 displays their temporal distribution.

As can be seen in Figure 4, during the first period (2016–2017), the United States and the Netherlands were the leading countries by number of studies conducted. In subsequent years, however, research output declined markedly in the Netherlands and increased slightly in the United States, which retained its lead in this field. The number of research articles in each country could be an indicator of DBDM institutional support, educational priorities, and the integration of DBDM into teaching practices. For example, the higher research output in the United States may suggest a stronger emphasis on studying and implementing DBDM for instructional practices, supported by policies, funding, and professional development. In contrast, the decline in research in the Netherlands could indicate a reduced focus on or prioritization of DBDM, possibly pointing to less frequent use or investigation of these practices among teachers.

Figure 2

Geographical Distribution of Studies Reviewed

Figure 3

Temporal Concentration of Studies on Teachers’ Use of DBDM for Instructional Purposes Published Worldwide (2013–2023)

Figure 4

Number of Studies Addressing Teacher Utilization of Data to Improve Teaching Practices in 2016–2017 and 2020–2022

A number of factors related to the educational setting within which DBDM interventions took place were examined as part of the review; these include the specific grade levels targeted by DBDM initiatives, and the online or in-person contexts that influenced the design and implementation of these initiatives.

Most of the studies reviewed (71%; n = 32) were conducted in elementary schools that include Grades 1 to 8. In addition to these, approximately 18% of the studies reviewed (n = 8) were conducted in K-12 schools. Although categorized separately, K-12 schools overlap significantly with elementary schools. The focus on the elementary context in the reviewed studies emphasizes the potential role played by DBDM in primary schooling; however, this emphasis also raises questions about the lack of attention given to DBDM interventions conducted in Grades 9 through 12. As seen in Table 3, these studies account for only 11% of the studies reviewed (n = 5).

Table 3

Studies Investigating Teacher Data Utilization to Improve Teaching Practices in Each Grade Level

| Grade/School level | Frequency | Percentage | Cumulative percentage |
| --- | --- | --- | --- |
| Elementary | 32 | 71.11 | 71.11 |
| K-12 | 8 | 17.78 | 88.89 |
| Secondary/High school | 5 | 11.11 | 100.00 |
| Total | 45 | 100.00 | |
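The percentage and cumulative columns in Table 3 follow directly from the frequencies. As a minimal sketch in Python (the variable names are illustrative assumptions, not part of the review), they can be reproduced as follows:

```python
from itertools import accumulate

# Frequencies as reported in Table 3.
counts = {"Elementary": 32, "K-12": 8, "Secondary/High school": 5}

total = sum(counts.values())

# Share of all reviewed studies at each level, in percent.
percentages = {level: 100 * n / total for level, n in counts.items()}

# Running total of the percentages, in table order.
cumulative = list(accumulate(percentages.values()))

for (level, pct), cum in zip(percentages.items(), cumulative):
    print(f"{level}: {pct:.2f}% (cumulative {cum:.2f}%)")
```

Rounded to two decimals, the values match the table: 71.11, 17.78, and 11.11 percent, with the cumulative column reaching 88.89 and then 100.00.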

The studies included in this scoping review were conducted within traditional, in-person educational settings. While a handful of these studies (i.e., Admiraal et al., 2020; Campos et al., 2021; Peters et al., 2021; Regan et al., 2023; Truckenmiller et al., 2022) included learning analytics and computer-based assessment methods, these technologies were implemented within a conventional classroom environment. Furthermore, these studies are relatively recent, potentially indicating a surge in technology-assisted educational data use within traditional in-person classrooms. This focus on in-person contexts underscores a significant gap in understanding how teachers use DBDM in other modalities such as online or hybrid learning environments.

More than half of the studies reviewed (n = 25) focused on teacher engagement with DBDM for instructional purposes, highlighting four main themes. Most studies (n = 14) examined teachers’ data literacy skills, showing varying proficiency levels in using data to inform instruction and thus directly addressing how teachers engage with DBDM (e.g., Gelderblom et al., 2016; Ho, 2022; Hoover & Abrams, 2013; van den Bosch et al., 2017). Five studies focused on data accessibility and the types of data available, demonstrating that easy access to relevant data enhances instructional decision-making (e.g., Abdusyakur & Poortman, 2019; Admiraal et al., 2020; Farley-Ripple et al., 2019). Three studies explored teachers’ perceptions and self-efficacy regarding DBDM, showing that confidence and attitudes influence data use (e.g., De Simone, 2020; Reed, 2015). Lastly, five studies identified factors that facilitate or hinder DBDM, offering insights into the contextual barriers and supports that affect its implementation (e.g., Abdusyakur & Poortman, 2019; Copp, 2017; Schildkamp et al., 2017).

The main purposes of DBDM are to support the improvement of instructional practices as well as student outcomes. As shown in Table 4, 20 studies focused on evaluating these potential impacts in a variety of different interventions. The studies can be classified according to the length or duration of the DBDM intervention evaluated, the extent to which participants had received professional development (PD) related to data use prior to the evaluated interventions, and the sources of data used by teachers.

Table 4

DBDM Interventions Overview

| Study | Intervention | PD | Data source | Change in teacher practices | Change in student outcome |
| --- | --- | --- | --- | --- | --- |
| Duration: < 1 Year | | | | | |
| Andersen (2020) | Data-Informed Evaluation Culture: Aims to create a data-informed evaluation culture within participating schools in Denmark through comprehensive data training. | Yes | Student assessment | N | NT |
| Dunn et al. (2013) | Statewide PD Program: Aims to increase teacher use of DBDM in a Pacific Northwestern state. The intervention was evaluated using the Data-Driven Decision Making Efficacy and Anxiety (3D-MEA) inventory. | Yes | Different sources | N | NT |
| Filderman et al. (2019) | Guidelines for DBDM Implementation: Offers guidelines to support effective DBDM implementation for students with or at risk for reading disabilities in secondary grades. | No | Computer adaptive testing | P | NT |
| Rodríguez-Martínez et al. (2023) | Personalized Homework Intervention: Assists teachers in using learning analytics to personalize students’ homework based on formative assessment results. | No | Computer adaptive testing | NT | H |
| Schifter et al. (2014) | The Using Data Workshop: Offers a workshop to help teachers interpret and use data from project dashboards, with a focus on PD during summer institutes. | Yes | Learning analytics | P | NT |
| Duration: 1 Year | | | | | |
| Christman et al. (2016) | The Linking Intervention: Focuses on teacher learning about mathematics instruction and aims to elevate data utilization practices. | Yes | Student assessment | P | NT |
| Curry et al. (2016) | Data-Informed Instructional Model: Provides K-12 teachers with a model for data-informed instruction, which enhances teaching and learning at the classroom level. | NM | Different sources | P | H |
| Marsh et al. (2015) | Coaching and PLC Intervention: Combines coaching and PLC to support teachers in data utilization. | Yes | Student assessment | P | NT |
| Peters et al. (2021) | Teacher Training in Differentiated Instruction: Provides teacher training in differentiating instruction using learning progress assessment and reading sportsman materials. | Yes | Computer adaptive testing | NTR | M |
| Regan et al. (2023) | Technology-Based Graphic Organizer Intervention: Utilizes technology-based graphic organizers, online modules, long-range planning, and virtual PLC activities to support data utilization. | Yes | Computer adaptive testing | P | NT |
| van der Scheer et al. (2016) | DBDM Intervention for Grade 4 Math Teachers: Focuses on data-based decision making for Grade 4 math teachers. | Yes | Student assessment | P | H |
| van der Scheer & Visscher (2017) | DBDM Intervention for Grade 4 Math Teachers: Focuses on data-based decision making for Grade 4 math teachers. | Yes | Student assessment | P | NT |
| Duration: 2 Years | | | | | |
| Campos et al. (2021) | Learning Analytics Dashboard Support Intervention: Focuses on assisting teachers in utilizing data from a learning analytics dashboard designed to facilitate student collaboration and discussion in math. Its goal is to deepen conceptual understanding in math. | Yes | Learning analytics | P | NT |
| Ebbeler et al. (2016) | Teams Intervention: Forms teams of teachers and teacher leaders to create a community of practice focused on using data to enhance instruction. | Yes | Different sources | P | NT |
| Faber et al. (2018) | Differentiated Instruction Training: Provides teachers with training to give differentiated instruction to students. | Yes | Student assessment | NT | L |
| Keuning et al. (2016) | The Focus Intervention: A two-year training course for primary school teams aimed at acquiring knowledge and skills related to DBDM for instructional purposes. | Yes | Student assessment | NT | H |
| Staman et al. (2014) | The Focus Intervention: A two-year training course for primary school teams aimed at acquiring knowledge and skills related to DBDM for instructional purposes. | Yes | Student assessment | P | NT |
| Staman et al. (2017) | DBDM Training for Differentiated Instruction: Trains teachers in DBDM to provide differentiated instruction. | Yes | Student assessment | P | NT |
| Duration: 4 Years | | | | | |
| Datnow et al. (2021) | Teacher Collaborative Efforts Intervention: Seeks to promote students’ math achievement by fostering collaborative efforts among teachers to improve instruction, including utilizing relevant data. | NM | Student assessment | P | NT |
| Hebbecker et al. (2022) | DBDM Framework-Based Intervention: Assists teachers in decision making based on data (van Geel et al., 2016). | Yes | Student assessment | P | H |

Note. PD = Professional development; PLC = Professional learning communities; NM = Not mentioned. Change in teacher practices: P = Positive, N = Negative, NTR = Neutral, NT = Not tested. Change in student outcome: H = High, M = Moderate, L = Low, NT = Not tested.

The interventions included in the studies reviewed range from a condensed one-session PD workshop (e.g., Schifter et al., 2014) to a 4-year program (e.g., Hebbecker et al., 2022). To gain a clearer understanding of this dimension, interventions were categorized based on their duration (Table 4). Most interventions were conducted within one to two academic years (n = 13). A notable subset of interventions lasted less than one year (n = 5), while only two interventions, one in the Netherlands (Hebbecker et al., 2022) and one in the United States (Datnow et al., 2021), spanned four years. The findings also demonstrate that in 16 of the 20 interventions, teachers had some level of data literacy training prior to the DBDM professional development. For two of the remaining four interventions, the studies did not report whether teachers had prior data literacy training, so its absence cannot be assumed.

More than half of the 20 evaluative studies (n = 11) indicated that the main source of data used by teachers as part of the DBDM intervention was student assessment, and four additional studies used assessment data from computer adaptive testing. The remaining studies drew on learning analytics (n = 2) or on a combination of different sources (n = 3).

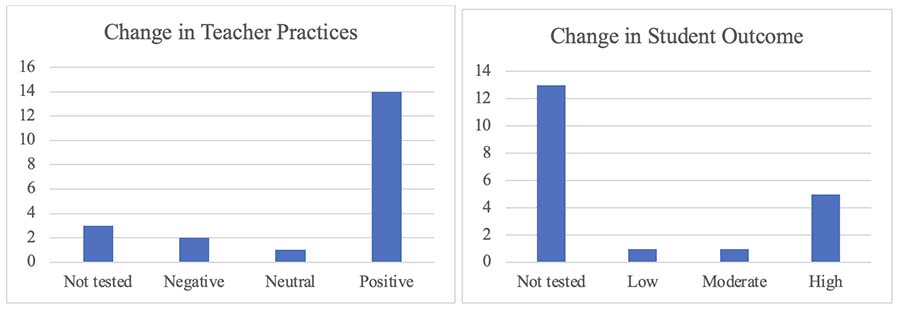

The impacts of DBDM interventions on teachers' instructional practices and on student outcomes were assessed in the 20 evaluative studies reviewed. DBDM interventions were assessed as having a “positive” impact on instructional practices if the study findings included one of the following elements: (a) increased emotional, analytical, and/or intentional data sensemaking and data literacy skills; (b) increased or enhanced discussions amongst colleagues on data use and the creation of professional learning communities; (c) instructional adjustments using data; (d) capacity-building for DBDM; (e) increased teacher awareness of data use for school development and instruction; or (f) changes in teacher efficacy related to implementing instructional strategies. The impact of the DBDM interventions on instructional practices was assessed as “neutral” if teachers maintained the same level of data use, and as “negative” if the intervention did not contribute to the development of new skills or positive attitudes related to DBDM. Some studies did not measure changes in teacher practices, as their focus was solely on student outcomes.

The impact of the DBDM interventions on student outcomes was also examined within the 20 evaluative studies. These impacts were considered “high” if the DBDM intervention resulted in: (a) increased student understanding of the learning materials; (b) increased student motivation and engagement; or (c) improved scores on standardized tests. DBDM impacts were considered “moderate” if after the intervention: (a) students recognized the importance of setting challenging learning goals; or (b) there was a small positive effect on student achievement in standardized tests. Lastly, the impact was considered “low” if no significant positive effects were found on student outcomes. In some studies, however, no measurement of student outcomes was included, as the focus was solely on changes in teacher practices.

Figure 5

The Impact of DBDM Interventions on Instructional Practices and Student Outcomes

As illustrated in Figure 5, more studies evaluated the impact of DBDM interventions on instructional practices (n = 17) than on student outcomes (n = 7). In both dimensions, the most favourable rating was also the most common: positive impacts on instructional practices were reported more often than neutral or negative ones, and high impacts on student outcomes more often than moderate or low ones. Moreover, most of the reviewed studies evaluated the impact of a DBDM intervention on only one of the two dimensions; studies that explored the effect of DBDM interventions on both teachers and students were less common (i.e., Curry et al., 2016; Hebbecker et al., 2022; Peters et al., 2021; van der Scheer et al., 2016).
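The counts reported here can be recovered from the outcome codes in Table 4. The sketch below, in Python, transcribes the two outcome columns of that table and tallies them; the list name `TABLE_4` and the other variable names are illustrative assumptions rather than anything defined by the review.

```python
from collections import Counter

# (study, teacher-practice code, student-outcome code), transcribed from Table 4.
# Codes: P/N/NTR = positive/negative/neutral; H/M/L = high/moderate/low; NT = not tested.
TABLE_4 = [
    ("Andersen (2020)", "N", "NT"),
    ("Dunn et al. (2013)", "N", "NT"),
    ("Filderman et al. (2019)", "P", "NT"),
    ("Rodríguez-Martínez et al. (2023)", "NT", "H"),
    ("Schifter et al. (2014)", "P", "NT"),
    ("Christman et al. (2016)", "P", "NT"),
    ("Curry et al. (2016)", "P", "H"),
    ("Marsh et al. (2015)", "P", "NT"),
    ("Peters et al. (2021)", "NTR", "M"),
    ("Regan et al. (2023)", "P", "NT"),
    ("van der Scheer et al. (2016)", "P", "H"),
    ("van der Scheer & Visscher (2017)", "P", "NT"),
    ("Campos et al. (2021)", "P", "NT"),
    ("Ebbeler et al. (2016)", "P", "NT"),
    ("Faber et al. (2018)", "NT", "L"),
    ("Keuning et al. (2016)", "NT", "H"),
    ("Staman et al. (2014)", "P", "NT"),
    ("Staman et al. (2017)", "P", "NT"),
    ("Datnow et al. (2021)", "P", "NT"),
    ("Hebbecker et al. (2022)", "P", "H"),
]

# Tally only the studies that actually tested each dimension.
teacher = Counter(t for _, t, _ in TABLE_4 if t != "NT")
student = Counter(s for _, _, s in TABLE_4 if s != "NT")

# Studies that reported on both teacher practices and student outcomes.
both = [name for name, t, s in TABLE_4 if t != "NT" and s != "NT"]

print(sum(teacher.values()), dict(teacher))
print(sum(student.values()), dict(student))
print(both)
```

Under this transcription, 17 studies tested teacher practices (14 positive, 2 negative, 1 neutral), 7 tested student outcomes (5 high, 1 moderate, 1 low), and 4 studies tested both dimensions.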

This scoping review offers a comprehensive examination of the landscape of DBDM use by teachers in the K-12 context, which addresses geographical and temporal concentrations, educational settings, and the impact of DBDM interventions. This discussion is based on the review of all 45 articles, providing an in-depth analysis of the current state of the field.

Temporal and geographical patterns in research distribution on DBDM shed light on global variations in how teachers engage with data for instructional purposes. Temporal trends reveal how research on teacher engagement aligns with shifts in policies, technologies, and educational reforms, while geographical patterns highlight disparities driven by contextual factors such as access to resources or institutional support (Christman et al., 2016; Curry et al., 2016; Farrell & Marsh, 2016a, 2016b; Michaud, 2016; Park & Datnow, 2017). By comparing regions and time periods, we can identify factors that contributed to DBDM adoption for instructional purposes by teachers at the classroom level. Additionally, gaps in research across certain areas or periods can guide future studies to explore underrepresented contexts, offering a more comprehensive understanding of DBDM’s global impact.

The observed concentration of studies in the United States and the Netherlands found in this review aligns with the existing literature, which highlights these countries’ significant efforts in implementing and studying DBDM. The initial surge in articles during 2016–2017 coincides with pivotal policy reforms in the United States (Park & Datnow, 2017) and the Netherlands (Schildkamp et al., 2017), which aimed to reshape the educational landscape, particularly concerning data use and DBDM. Subsequently, a resurgence of research interest appears in the literature during 2020–2022. This period saw an increased focus on technology and computer-based assessment incorporation, which placed DBDM at the forefront of educational change.

Signed in 2015, the Every Student Succeeds Act marked a shift in US education policy, moving away from high-stakes testing under No Child Left Behind. This Act introduced flexible accountability measures, reduced the emphasis on standardized tests, and encouraged data use for instructional improvement (Shirley, 2017). Research from this period focused on how educators utilized diverse data forms to enhance teaching (e.g., Curry et al., 2016; Park & Datnow, 2017). Similarly, declining student performance in international assessments prompted the adoption of DBDM policies in the Netherlands. Initiatives such as the Focus Intervention equipped educators with skills to monitor progress and tailor their instruction according to students’ needs, which resulted in improvements in teaching practices and student outcomes (Faber et al., 2018; Schildkamp et al., 2017).

Studies published during the second period (2020–2022) show that teachers started to incorporate technology such as learning analytics and computer-based assessments to collect and analyze data, which reshaped and eased the use of DBDM to support learning (Admiraal et al., 2020; Campos et al., 2021; Truckenmiller et al., 2022). Although the Netherlands saw a decline in research during this period, the DBDM frameworks developed for Dutch schools, such as the Data Teams framework (Schildkamp et al., 2016) and the DBDM process model (van Geel et al., 2016), created the foundation for subsequent studies. These frameworks have been successfully adopted in various international contexts, including Denmark (e.g., Andersen, 2020), the United States (e.g., Datnow et al., 2018; Michaud, 2016; Ylimaki & Brunderman, 2019), New Zealand (e.g., Lai et al., 2014; Lai & McNaughton, 2016), and Germany (e.g., Hebbecker et al., 2022). However, research on teacher use of DBDM at the classroom level in Canada is notably limited, with only one relevant study (Copp, 2017) addressing policy incentives and data use across Canadian schools.

The exploration of educational settings in which DBDM is implemented demonstrates a predominant focus on in-person teaching at the primary/elementary level in the reviewed studies (e.g., Staman et al., 2017; van der Scheer & Visscher, 2017); this raises questions about the extent to which DBDM practices can be adapted to secondary education settings and different learning modalities. Addressing these questions requires further study to understand the transferability and effectiveness of DBDM strategies at higher grade levels. Moreover, existing literature on online education emphasizes the potential of learning analytics and real-time data to inform personalized instruction (e.g., Behrens et al., 2018; Campos et al., 2021). However, none of the reviewed studies explored how educators can leverage data effectively in an online learning environment. Thus, there is a need for research that examines the practical integration of DBDM in these educational settings.

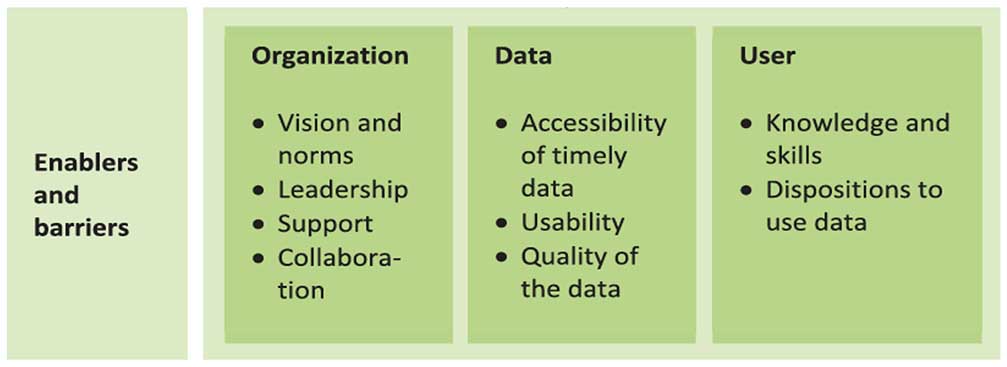

The findings underscore the importance of tailored interventions and ongoing professional development for effective DBDM implementation. This aligns with Schildkamp et al.’s (2017) DBDM Determinant Model, which highlights three key factors for successful DBDM interventions: organizational context, data characteristics, and user characteristics (Figure 6).

Figure 6

Determinant Model

Note. Schildkamp et al. (2017, p. 244).

Organizational Context: Effective DBDM requires strong leadership support, collaboration, and a clear vision, as Schildkamp et al. (2017) emphasize. Studies in this review address some of these aspects, such as coaching support (Andersen, 2020), collaboration through communities of practice (Keuning et al., 2016; Marsh et al., 2015), and leadership (Copp, 2017; Ylimaki & Brunderman, 2019). However, they often overlook the ‘vision and norms’ of institutions.

Data Characteristics: High-quality, timely, and usable data are crucial for DBDM. While many studies focus on assessment and standardized test data, there is a growing recognition of the need for diverse data sources. Researchers such as Curry et al. (2016), Dunn et al. (2013), and Ebbeler et al. (2016) advocate for a multifaceted data approach, emphasizing that diverse data sources enhance the impact on student outcomes. Even studies focusing on assessment data, such as Datnow et al. (2021) and Faber et al. (2018), highlight the importance of incorporating diverse sources of data.

User Characteristics: The predominant focus in the literature is on enhancing teachers’ data literacy and positive attitudes toward data use (e.g., Ebbeler et al., 2016; Staman et al., 2014; van der Scheer & Visscher, 2017). Effective training can improve these characteristics, with longitudinal, well-designed professional development showing positive effects on both teacher practices and student achievement (e.g., Andersen, 2020; Campos et al., 2021; Christman et al., 2016). However, Hebbecker et al. (2022) suggest that even short professional development sessions, combined with practical support and resources, can be sufficient for implementing DBDM effectively.

Although DBDM interventions have been found helpful in altering teacher practices and improving student outcomes, there remains a need for more sustainable and ongoing support to enhance DBDM implementation (Abdusyakur & Poortman, 2019; Admiraal et al., 2020; Staman et al., 2017). Short-term interventions or training programs, although initially effective, may not fully address the complexities of integrating DBDM into daily teaching practices (Andersen, 2020; van den Bosch et al., 2017). To ensure lasting change, interventions must prioritize building systemic capacity through continued professional learning opportunities, access to high-quality resources, and institutional support structures (Farley-Ripple et al., 2019; Rodríguez-Martínez et al., 2023). These efforts would enable teachers to embed data use more deeply into their instructional practices, thereby fostering sustained improvements in both teaching and learning outcomes.

This scoping review enhances understanding of DBDM in K-12 schools by examining how teachers use student data to guide their pedagogical and instructional practices. It highlights a shift from using DBDM for accountability to using it to improve instruction (Kempf, 2015; Schildkamp & Ehren, 2013), and identifies gaps such as the need for research at the secondary/high school level and in online-learning contexts. The review also explores the impact of DBDM interventions on teacher practices and student outcomes (Hebbecker et al., 2022; Peters et al., 2021; Rodríguez-Martínez et al., 2023). As educational practices evolve, further research is needed on secondary education, online learning, and under-represented geographical areas. By addressing these gaps and fostering discussion of the implications of DBDM, future studies can contribute to a broader, more global and nuanced understanding of DBDM trends. Such efforts are essential for ensuring that data use in education translates into improved instructional practices and better outcomes for students across diverse contexts.

Abdusyakur, I., & Poortman, C. L. (2019). Study on data use in Indonesian primary schools. Journal of Professional Capital and Community, 4(3), 198-215. https://doi.org/10.1108/JPCC-11-2018-0029

Admiraal, W., Vermeulen, J., & Bulterman-Bos, J. (2020). Teaching with learning analytics: How to connect computer-based assessment data with classroom instruction? Technology, Pedagogy and Education, 29(5), 577-591. https://doi.org/10.1080/1475939X.2020.1825992

Andersen, I. G. (2020). What went wrong? Examining teachers’ data use and instructional decision making through a bottom-up data intervention in Denmark. International Journal of Educational Research, 102, 101585. https://doi.org/10.1016/j.ijer.2020.101585

Behrens, J., Piety, P., DiCerbo, K., & Mislevy, R. (2018). Inferential foundations for learning analytics in the digital ocean. In D. Niemi, R. D. Pea, B. Saxberg, & R. E. Clark (Eds.), Learning analytics in education (pp. 1-48). Information Age Publishing Inc.

Campos, F. C., Ahn, J., DiGiacomo, D. K., Nguyen, H., & Hays, M. (2021). Making sense of sensemaking: Understanding how K-12 teachers and coaches react to visual analytics. Journal of Learning Analytics, 8(3), 60-80.

Carlson, D., Borman, G. D., & Robinson, M. (2011). A multistate district-level cluster randomized trial of the impact of data-driven reform on reading and mathematics achievement. Educational Evaluation and Policy Analysis, 33(3), 378-398. https://doi.org/10.3102/0162373711412765

Cheng, L. (1999). Changing assessment: Washback on teacher perspectives and actions. Teaching and Teacher Education, 15(3), 253-271. https://doi.org/10.1016/S0742-051X(98)00046-8

Cheng, L., & Curtis, A. (2004). Washback or backwash: A review of the impact of testing on teaching and learning. In L. Cheng, Y. Watanabe, & A. Curtis (Eds.), Washback in language testing: Research contexts and methods (pp. 3-17). Lawrence Erlbaum.

Christman, J. B., Ebby, C. B., & Edmunds, K. A. (2016). Data use practices for improved mathematics teaching and learning: The importance of productive dissonance and recurring feedback cycles. Teachers College Record, 118(11), 1-32. https://doi.org/10.1177/016146811611801101

Copp, D. T. (2017). Policy incentives in Canadian large-scale assessment: How policy levers influence teacher decisions about instructional change. Education Policy Analysis Archives, 25, 115. https://doi.org/10.14507/epaa.25.3299

Curry, K. A., Mwavita, M., Holter, A., & Harris, E. (2016). Getting assessment right at the classroom level: Using formative assessment for decision making. Educational Assessment, Evaluation and Accountability, 28, 89-104. https://doi.org/10.1007/s11092-015-9226-5

Datnow, A., & Hubbard, L. (2015). Teachers’ use of assessment data to inform instruction: Lessons from the past and prospects for the future. Teachers College Record, 117(4), 1-26. https://doi.org/10.1177/016146811511700408

Datnow, A., Choi, B., Park, V., & John, E. S. (2018). Teacher talk about student ability and achievement in the era of data-driven decision making. Teachers College Record, 120(4), 1-34. https://doi.org/10.1177/016146811812000408

Datnow, A., Lockton, M., & Weddle, H. (2021). Capacity building to bridge data use and instructional improvement through evidence on student thinking. Studies in Educational Evaluation, 69, 100869. https://doi.org/10.1016/j.stueduc.2020.100869

De Simone, J. J. (2020). The roles of collaborative professional development, self-efficacy, and positive affect in encouraging educator data use to aid student learning. Teacher Development, 24(4), 443-465. https://doi.org/10.1080/13664530.2020.1780302

Dunn, K. E., Airola, D. T., Lo, W. J., & Garrison, M. (2013). What teachers think about what they can do with data: Development and validation of the data driven decision-making efficacy and anxiety inventory. Contemporary Educational Psychology, 38(1), 87-98. https://doi.org/10.1016/j.cedpsych.2012.11.002

Ebbeler, J., Poortman, C. L., Schildkamp, K., & Pieters, J. M. (2016). Effects of a data use intervention on educators’ use of knowledge and skills. Studies in Educational Evaluation, 48, 19-31. https://doi.org/10.1016/j.stueduc.2015.11.002

Faber, J., Glas, C., & Visscher, A. J. (2018). Differentiated instruction in a data-based decision-making context. School Effectiveness and School Improvement, 29(1), 43-63. https://doi.org/10.1080/09243453.2017.1366342

Farley-Ripple, E. N., Jennings, A. S., & Buttram, J. (2019). Toward a framework for classifying teachers’ use of assessment data. AERA Open, 5(4), 2332858419883571. https://doi.org/10.1177/2332858419883571

Farrell, C. C., & Marsh, J. A. (2016a). Metrics matter: How properties and perceptions of data shape teachers’ instructional responses. Educational Administration Quarterly, 52(3), 423-462. https://doi.org/10.1177/0013161X16638429

Farrell, C. C., & Marsh, J. A. (2016b). Contributing conditions: A qualitative comparative analysis of teachers’ instructional responses to data. Teaching and Teacher Education, 60, 398-412. https://doi.org/10.1016/j.tate.2016.07.010

Filderman, M. J., Austin, C. R., & Toste, J. R. (2019). Data-based decision making for struggling readers in the secondary grades. Intervention in School and Clinic, 55(1), 3-12. https://doi.org/10.1177/1053451219832991

Gelderblom, G., Schildkamp, K., Pieters, J., & Ehren, M. (2016). Data-based decision making for instructional improvement in primary education. International Journal of Educational Research, 80, 1-14. https://doi.org/10.1016/j.ijer.2016.07.004

Hebbecker, K., Förster, N., Forthmann, B., & Souvignier, E. (2022). Data-based decision-making in schools: Examining the process and effects of teacher support. Journal of Educational Psychology, 114(7), 1695. https://doi.org/10.1037/edu0000530

Heinrich, C., & Good, A. (2018). Research-informed practice improvements: Exploring linkages between school district use of research evidence and educational outcomes over time. School Effectiveness and School Improvement, 29(3), 418-445. https://doi.org/10.1080/09243453.2018.1445116

Ho, J. E. (2022). What counts? The critical role of qualitative data in teachers’ decision making. Evaluation and Program Planning, 91, 102046. https://doi.org/10.1016/j.evalprogplan.2021.102046

Hoover, N. R., & Abrams, L. M. (2013). Teachers’ instructional use of summative student assessment data. Applied Measurement in Education, 26(3), 219-231. https://doi.org/10.1080/08957347.2013.793187

Kempf, A. (2015). The school as factory farm: All testing all the time. In The pedagogy of standardized testing (pp. 13-28). Palgrave Macmillan US. https://doi.org/10.1057/9781137486653_2

Keuning, T., van Geel, M., Visscher, A., Fox, J.-P., & Moolenaar, N. M. (2016). The transformation of schools’ social networks during a data-based decision making reform. Teachers College Record, 118(9), 1-33. https://doi.org/10.1177/016146811611800908

Lai, M. K., & McNaughton, S. (2016). The impact of data use professional development on student achievement. Teaching and Teacher Education, 60, 434-443. https://doi.org/10.1016/j.tate.2016.07.005

Lai, M. K., Wilson, A., McNaughton, S., & Hsiao, S. (2014). Improving achievement in secondary schools: Impact of a literacy project on reading comprehension and secondary school qualifications. Reading Research Quarterly, 49(3), 305-334. https://doi.org/10.1002/rrq.73

Maier, U. (2010). Accountability policies and teachers’ acceptance and usage of school performance feedback — A comparative study. School Effectiveness and School Improvement, 21(2), 145-165. https://doi.org/10.1080/09243450903354913

Marsh, J. A. (2012). Interventions promoting educators’ use of data: Research insights and gaps. Teachers College Record, 114(11), 1-48. https://doi.org/10.1177/016146811211401106

Marsh, J. A., Bertrand, M., & Huguet, A. (2015). Using data to alter instructional practice: The mediating role of coaches and professional learning communities. Teachers College Record, 117(4), 1-40. https://doi.org/10.1177/016146811511700411

Marsh, J. A., Pane, J. F., & Hamilton, L. S. (2006, November 7). Making sense of data-driven decision making in education [Occasional Paper]. RAND Corporation.

Michaud, R. (2016). The nature of teacher learning in collaborative data teams. Qualitative Report, 21(3), 529-545. https://doi.org/10.46743/2160-3715/2016.231

Park, V., & Datnow, A. (2017). Ability grouping and differentiated instruction in an era of data-driven decision making. American Journal of Education, 123(2), 281-306. https://doi.org/10.1086/689930

Peters, M. T., Förster, N., Hebbecker, K., Forthmann, B., & Souvignier, E. (2021). Effects of data-based decision-making on low-performing readers in general education classrooms: Cumulative evidence from six intervention studies. Journal of Learning Disabilities, 54(5), 334-348. https://doi.org/10.1177/00222194211011580

Rangel, V. S., Bell, E. R., & Monroy, C. (2017). A descriptive analysis of instructional coaches’ data use in science. School Effectiveness and School Improvement, 28(2), 217-241. https://doi.org/10.1080/09243453.2016.1255232

Reed, D. K. (2015). Middle level teachers’ perceptions of interim reading assessments: An exploratory study of data-based decision making. RMLE Online: Research in Middle Level Education, 38(6), 1-13. https://doi.org/10.1080/19404476.2015.11462119

Regan, K., Evmenova, A. S., Mergen, R. L., Verbiest, C., Hutchison, A., Murnan, R., Field, S., & Gafurov, B. (2023). The feasibility of using virtual professional development to support teachers in making data-based decisions to improve students’ writing. Learning Disabilities Research & Practice, 38(1), 40-56. https://doi.org/10.1111/ldrp.12301

Rodríguez-Martínez, J. A., González-Calero, J. A., del Olmo-Muñoz, J., Arnau, D., & Tirado-Olivares, S. (2023). Building personalised homework from a learning analytics based formative assessment: Effect on fifth-grade students’ understanding of fractions. British Journal of Educational Technology, 54(1), 76-97. https://doi.org/10.1111/bjet.13292

Schifter, C., Natarajan, U., Ketelhut, D. J., & Kirchgessner, A. (2014). Data-driven decision-making: Facilitating teacher use of student data to inform classroom instruction. Contemporary Issues in Technology and Teacher Education, 14(4), 419-432.

Schildkamp, K., & Ehren, M. (2013). From “intuition” to “data”-based decision making in Dutch secondary schools? In K. Schildkamp, M. K. Lai, & L. Earl (Eds.), Data-based decision making in education (pp. 49-67). Springer Netherlands. https://doi.org/10.1007/978-94-007-4816-3_4

Schildkamp, K., Poortman, C., & Handelzalts, A. (2016). Data teams for school improvement. School Effectiveness and School Improvement, 27(2), 228-254. https://doi.org/10.1080/09243453.2015.1056192

Schildkamp, K., Poortman, C., Luyten, H., & Ebbeler, J. (2017). Factors promoting and hindering data-based decision making in schools. School Effectiveness and School Improvement, 28(2), 242-258. https://doi.org/10.1080/09243453.2016.1256901

Shirley, D. (2017). The new imperatives of educational change: Achievement with integrity. Routledge. https://doi.org/10.4324/9781315682907

Staman, L., Timmermans, A. C., & Visscher, A. J. (2017). Effects of a data-based decision making intervention on student achievement. Studies in Educational Evaluation, 55, 58-67. https://doi.org/10.1016/j.stueduc.2017.07.002

Staman, L., Visscher, A. J., & Luyten, H. (2014). The effects of professional development on the attitudes, knowledge and skills for data-driven decision making. Studies in Educational Evaluation, 42, 79-90. https://doi.org/10.1016/j.stueduc.2013.11.002

Tricco, A. C., Lillie, E., Zarin, W., O’Brien, K. K., Colquhoun, H., Levac, D., Moher, D., Peters, M. D. J., Horsley, T., Weeks, L., Hempel, S., Akl, E. A., Chang, C., McGowan, J., Stewart, L., Hartling, L., Aldcroft, A., Wilson, M. G., Garritty, C., ... Straus, S. E. (2018). PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and explanation. Annals of Internal Medicine, 169(7), 467-485. https://doi.org/10.7326/M18-0850

Tsai, Y., Poquet, O., Gašević, D., Dawson, S., & Pardo, A. (2019). Complexity leadership in learning analytics: Drivers, challenges and opportunities. British Journal of Educational Technology, 50(6), 2839-2854. https://doi.org/10.1111/bjet.12846

Truckenmiller, A. J., Cho, E., & Troia, G. A. (2022). Expanding assessment to instructionally relevant writing components in middle school. Journal of School Psychology, 94, 28-48. https://doi.org/10.1016/j.jsp.2022.07.002

van den Bosch, R. M., Espin, C. A., Chung, S., & Saab, N. (2017). Data-based decision-making: Teachers’ comprehension of curriculum-based measurement progress-monitoring graphs. Learning Disabilities Research & Practice, 32(1), 46-60. https://doi.org/10.1111/ldrp.12122

van Geel, M., Keuning, T., Visscher, A. J., & Fox, J.-P. (2016). Assessing the effects of a school-wide data-based decision-making intervention on student achievement growth in primary schools. American Educational Research Journal, 53(2), 360-394. https://doi.org/10.3102/0002831216637346

van der Scheer, E. A., & Visscher, A. J. (2016). Effects of an intensive data-based decision making intervention on teacher efficacy. Teaching and Teacher Education, 60, 34-43. https://doi.org/10.1016/j.tate.2016.07.025

van der Scheer, E. A., Glas, C. A., & Visscher, A. J. (2017). Changes in teachers’ instructional skills during an intensive data-based decision making intervention. Teaching and Teacher Education, 65, 171-182. https://doi.org/10.1016/j.tate.2017.02.018

Ylimaki, B., & Brunderman, L. (2019). School development in culturally diverse U.S. schools: Balancing evidence-based policies and education values. Education Sciences, 9(84), 1-15. https://doi.org/10.3390/educsci9020084

Areej Tayem is a Ph.D. candidate and part-time Professor in the Faculty of Education at the University of Ottawa in Canada. Her research focuses on learning analytics and data-based decision making (DBDM) in K-12 education. Email: atayem@uottawa.ca

Isabelle Bourgeois is a full Professor in the Faculty of Education at the University of Ottawa in Canada. Her main research activities focus on program evaluation and evaluation capacity building in public and community organizations. Email: isabelle.bourgeois@uottawa.ca